
memory leak when doing requests against AWS #3010

Comments

Could you run with tracemalloc (https://docs.python.org/3/library/tracemalloc.html) enabled?
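A minimal sketch of what "running with tracemalloc" can look like: take a snapshot after a warm-up call, repeat the workload, take a second snapshot, and diff them by source line. `leaky_request` and `top_growth` are hypothetical names used only for illustration; in practice the workload would be one HTTP request.

```python
import tracemalloc

_retained = []  # hypothetical stand-in for objects a leaky client keeps alive


def leaky_request():
    """Hypothetical workload: each call retains a 10 kB buffer forever."""
    _retained.append(bytearray(10_000))


def top_growth(workload, iterations=300, limit=5):
    """Run `workload` repeatedly and return the allocation sites that grew most."""
    tracemalloc.start(25)  # record up to 25 frames per allocation
    try:
        workload()  # warm-up, so one-time allocations are not counted as growth
        before = tracemalloc.take_snapshot()
        for _ in range(iterations):
            workload()
        after = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    # Ignore allocations made by the tracemalloc module itself.
    ignore = (tracemalloc.Filter(False, tracemalloc.__file__),)
    before = before.filter_traces(ignore)
    after = after.filter_traces(ignore)
    # compare_to() sorts by absolute size difference, largest first.
    return after.compare_to(before, "lineno")[:limit]


if __name__ == "__main__":
    for stat in top_growth(leaky_request):
        print(stat)
```

Each printed line shows a file:line, the size and count growth, and the new totals; a line whose growth scales with `iterations` is a leak candidate.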

I've been trying to, but I ran into: https://bugs.python.org/issue33565 :( You wouldn't believe the pain I've had trying to diagnose several leaks in our application.

Python version? Have you tried older aiohttp releases?

Python 3.6.5; aiohttp 2.3.10 behaves the same.

FYI: it looks like the warnings fix does not (entirely) solve the leak.

Similarly, objgraph is not showing any issues.
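For readers unfamiliar with objgraph: its `show_growth()` reports which object types increased in number between calls. A rough stdlib-only analog is sketched below (the helper names are hypothetical, and this is not objgraph's own code). Note that `gc.get_objects()` only sees container objects tracked by the cyclic GC; memory allocated inside C extensions such as an SSL library is invisible to it, which is consistent with objgraph finding nothing for a native leak.

```python
import gc
from collections import Counter


def type_counts():
    """Count the objects tracked by the cyclic GC, grouped by type name."""
    return Counter(type(obj).__name__ for obj in gc.get_objects())


def type_growth(workload, iterations=300, limit=10):
    """Return the types whose live-object count grew most over `iterations` calls."""
    workload()  # warm-up
    gc.collect()
    before = type_counts()
    for _ in range(iterations):
        workload()
    gc.collect()
    after = type_counts()
    # Counter subtraction keeps only types whose count increased.
    return (after - before).most_common(limit)
```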

I've updated my script and I think I have a testcase against raw asyncio sockets, re-validating now.

@asvetlov I've updated my test script (per the gist link above) so it can run with memory_profiler or tracemalloc, and I've created tests for botocore, aiobotocore, aiohttp and asyncio (see the `-test` param). botocore no longer leaks with the warnings fix; however, aiohttp still leaks. I tried creating a raw asyncio HTTP client based on aiohttp's implementation but have been unable to reproduce the leak with it. If you run the script with tracemalloc, you'll see it does not report any leaks, and neither does objgraph, so it sounds like there's a native leak somewhere. Any idea how to get the leak to reproduce with the native asyncio client in my script, or, even better, what the leak is?
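The "native leak" reasoning can be made concrete: tracemalloc only sees allocations made through Python's allocators, while memory that a C library obtains via `malloc` directly still shows up in the process's resident set size (RSS). The sketch below (hypothetical helper names, Unix-only because it uses the `resource` module) reports both numbers for the same workload. If RSS keeps climbing while traced growth stays near zero, the leak is probably outside Python's allocator. Caveat: `ru_maxrss` is a *peak* value that never decreases, so it is a coarse signal; a current-RSS reading (for example from psutil) is more precise.

```python
import resource
import sys
import tracemalloc


def peak_rss_bytes():
    """Peak resident set size of this process so far (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is in bytes on macOS but in kilobytes on Linux.
    return peak if sys.platform == "darwin" else peak * 1024


def growth_report(workload, iterations=300):
    """Return (traced_growth, peak_rss_growth) in bytes over `iterations` calls."""
    tracemalloc.start()
    try:
        workload()  # warm-up
        traced_before, _ = tracemalloc.get_traced_memory()
        rss_before = peak_rss_bytes()
        for _ in range(iterations):
            workload()
        traced_after, _ = tracemalloc.get_traced_memory()
        rss_after = peak_rss_bytes()
    finally:
        tracemalloc.stop()
    return traced_after - traced_before, rss_after - rss_before
```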

No idea yet :(

btw, this may be unrelated, but in a separate project I noticed that simply using

I believe I may be running into this same issue: I'm seeing massive memory leaks when doing SSL requests. EDIT: For clarity, I want to mention that I'm not using

Well, I was able to fix my issue. It was as simple as this:

```python
import aiohttp

connector = aiohttp.TCPConnector(enable_cleanup_closed=True)
session = aiohttp.ClientSession(connector=connector)
```

The webserver I'm hitting must have a broken SSL implementation. Dunno if this would fix the issue that @thehesiod is having, however.

Some additional information that may be related to this issue:

The same processing without saving files to S3 results in a memory footprint of around 80 MB, and GC doesn't change it much. Because of this, I have two basic assumptions:

I will try to investigate this issue more deeply to find out exactly how the file contents are processed and what may be causing these issues.

Doesn't work in my case :( |

Updated my test script with some new options and ran it with python3.7 (to avoid the warnings leak) with: so this definitely seems to be an SSL-related leak.

FYI:

Another interesting note: I had to add a limiter to the insecure (plain HTTP) test because it's SOOO much faster than the HTTPS test, so HTTPS needs some serious optimization.

Do you use uvloop? If yes, which version?

@hellysmile Me? No, just the regular asyncio loop; you can see it in my gist linked above.

OK, it looks like I'm now able to reproduce the leak with my raw asyncio socket test. I logged a Python bug: https://bugs.python.org/issue34745
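The shape of a "raw asyncio socket test" is sketched below; it is not the gist's actual code. It is a hypothetical harness that starts a toy HTTP server on localhost, issues repeated GETs over plain `asyncio.open_connection` streams, and samples traced memory. Assumptions to note: it uses plain TCP because a TLS server needs a certificate, which is not shown; exercising the TLS path would mean passing an `ssl.SSLContext` as `ssl_context` *and* starting the server with its own `ssl=` context. Since the leak discussed here appears to be native, the tracemalloc samples alone may stay flat; pair them with an RSS measurement.

```python
import asyncio
import tracemalloc

RESPONSE = b"HTTP/1.1 200 OK\r\nContent-Length: 2\r\nConnection: close\r\n\r\nok"


async def handle(reader, writer):
    """Toy server: read one request head, send a fixed response, close."""
    await reader.readuntil(b"\r\n\r\n")
    writer.write(RESPONSE)
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def one_request(host, port, ssl_context=None):
    """One raw-socket GET; an ssl.SSLContext here would select the TLS path."""
    reader, writer = await asyncio.open_connection(host, port, ssl=ssl_context)
    writer.write(b"GET / HTTP/1.1\r\nHost: localhost\r\nConnection: close\r\n\r\n")
    await writer.drain()
    body = await reader.read()  # read until the server closes the connection
    writer.close()
    await writer.wait_closed()
    return body


async def measure(iterations=300, sample_every=50):
    """Issue `iterations` requests, sampling traced Python memory periodically."""
    server = await asyncio.start_server(handle, "127.0.0.1", 0)  # port 0: any free port
    port = server.sockets[0].getsockname()[1]
    samples = []
    tracemalloc.start()
    try:
        for i in range(iterations):
            body = await one_request("127.0.0.1", port)
            if not body.endswith(b"ok"):
                raise RuntimeError(f"unexpected response: {body!r}")
            if i % sample_every == 0:
                samples.append(tracemalloc.get_traced_memory()[0])
    finally:
        tracemalloc.stop()
        server.close()
        await server.wait_closed()
    return samples


if __name__ == "__main__":
    print(asyncio.run(measure()))
```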

@thehesiod What about trying uvloop?

@hellysmile I updated the gist with uvloop support; it still leaks with uvloop.

Not sure if this hits the broad side of the barn, but I was recently tracing a memory leak in an aiohttp app and came across this: aio-libs/multidict#307. For some reason, the latest aiohttp still depends on a buggy version. Upgrading multidict fixed the issue for me.

@whale2 The multidict memory leak is not related to this issue: it was introduced in multidict 4.5.0 (2018-11-19) and finally fixed in 4.5.2 (2018-11-28). @thehesiod used an older multidict version for his measurements.
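To rule the multidict leak in or out for a given environment, one can check the installed version against the range quoted above (leak introduced in 4.5.0, fixed in 4.5.2). This is a hypothetical helper, not part of aiohttp or multidict; its version parsing is deliberately simple and treats pre-releases such as `4.5.2a1` as the final release.

```python
import re
from importlib import metadata

# Range quoted above: the leak appeared in 4.5.0 and was fixed in 4.5.2.
LEAK_INTRODUCED = (4, 5, 0)
LEAK_FIXED = (4, 5, 2)


def release_tuple(version):
    """Turn '4.5.1' (or '4.5', '4.5.1.post1') into a comparable tuple like (4, 5, 1)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(part or 0) for part in match.groups())


def has_known_multidict_leak(version):
    """True if `version` falls in the leaky 4.5.0 <= v < 4.5.2 range."""
    return LEAK_INTRODUCED <= release_tuple(version) < LEAK_FIXED


def installed_multidict_version():
    """Installed multidict version string, or None if it is not installed."""
    try:
        return metadata.version("multidict")
    except metadata.PackageNotFoundError:
        return None
```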

Yeah, plus I was able to reproduce it with raw asyncio sockets, per my comment above.

Looks like a fix has been proposed in https://bugs.python.org/issue34745! I need to try it when I get a chance, or anyone else can if they get a chance first!

@thehesiod The graph in python/cpython#12386 is the result of a slightly simplified version of your gist; would that do?


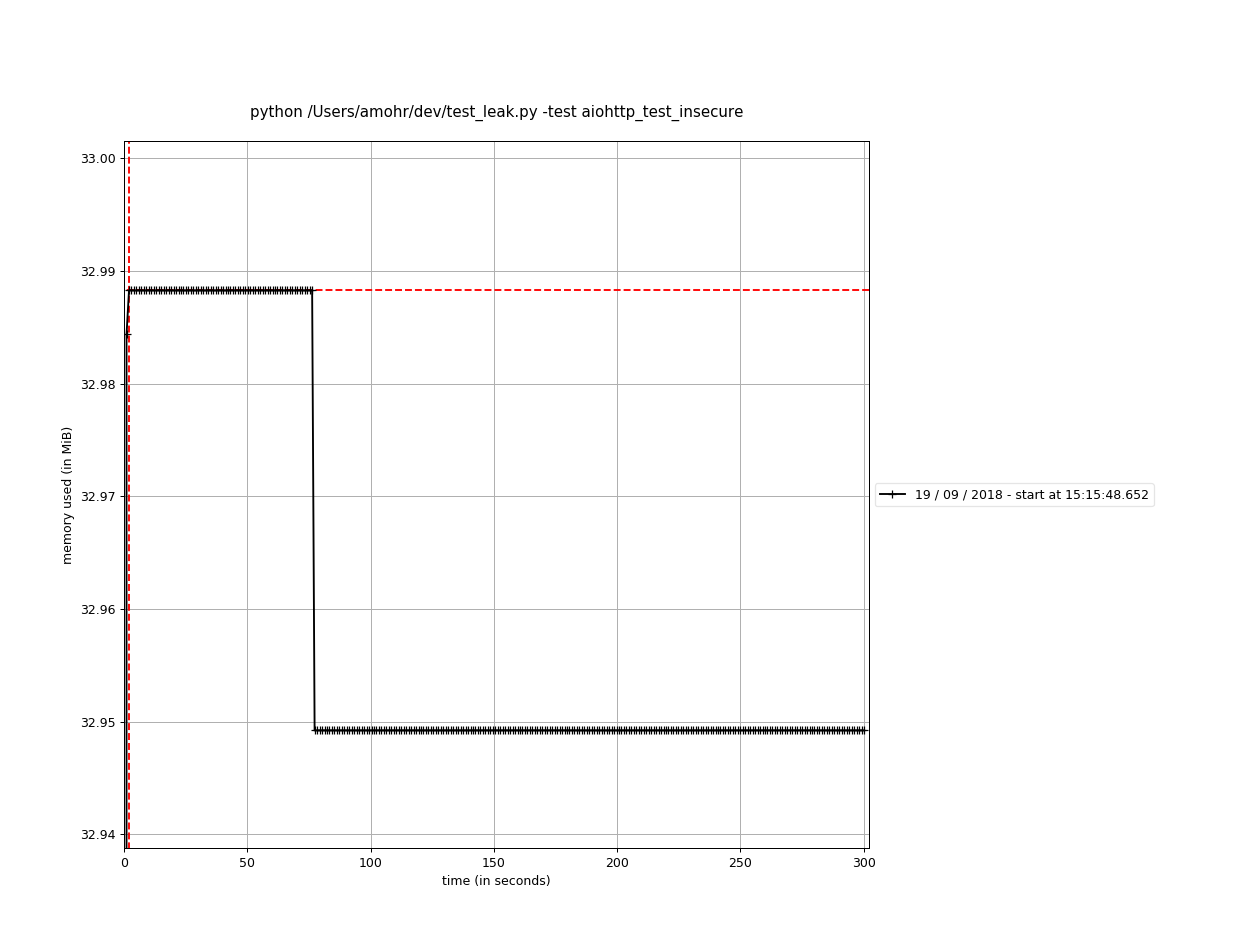

Thanks @fantix, I just ran my test with the aiohttp_test scenario on a build based on your PR (plus a ported warnings fix for 3.6) and got the attached graph. You rock! So happy; I'm going to port this to our prod instances tonight! :) Closing this issue! Thanks!

Glad it works for you!

@fantix Looking at the new graph again, what's a little worrying is that memory is still increasing, though much more slowly. Definitely lower priority than the original issue.

@thehesiod Yeah, I feel you. I think we should have more refcount-only tests, like gevent has, to guarantee that the core of asyncio or uvloop (maybe aiohttp too; I didn't check) does not produce circular references.
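One way to write such a "refcount-only" check is sketched below, in the spirit of the idea above rather than as gevent's actual test code. The helper name is hypothetical. With automatic cyclic collection paused, acyclic garbage is still freed immediately by reference counting, so anything `gc.collect()` finds afterwards was kept alive only by a reference cycle.

```python
import gc


def cyclic_garbage_count(func, *args, **kwargs):
    """Run func with the cyclic GC paused; return how many objects only gc could free."""
    gc.collect()  # clear out garbage created before the call
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        func(*args, **kwargs)
        # Reference counting has already freed acyclic garbage, so whatever
        # the collector finds now was part of (or kept alive by) a cycle.
        return gc.collect()
    finally:
        if was_enabled:
            gc.enable()
```

In a test suite, the assertion would be `cyclic_garbage_count(do_one_request) == 0`, where `do_one_request` is a hypothetical synchronous wrapper around the code under test.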

Long story short

While investigating a leak in botocore (boto/botocore#1464), I decided to check whether it also affected aiobotocore. I found that even the `get_object` case was leaking in aiobotocore. So I tried making the underlying GET request with aiohttp directly and found that it triggers a leak in aiohttp.

Expected behaviour

no leak

Actual behaviour

leaking ~800kB over 300 requests

Steps to reproduce

Update the script below with your credentials and run it like so:

```shell
PYTHONMALLOC=malloc python3 `which mprof` run --interval=1 /tmp/test_leak.py -test aiohttp_test
```

Script: https://gist.github.com/thehesiod/dc04230a5cdac70f25905d3a1cad71ce

you should get a memory usage graph like so:

Your environment
