-

Notifications

You must be signed in to change notification settings - Fork 31

5. Using Word2Vec Model and Examples

Either you use pretrained model downloaded from here or you train a model step by step, you can use code below to load model in python.

from gensim.models import KeyedVectors

word_vectors = KeyedVectors.load_word2vec_format('trmodel', binary=True)

After that point, you can use word_vectors to make semantic operations.

word_vectors.most_similar(positive=["kral","kadın"],negative=["erkek"])

This is a classic example for word2vec. The most similar word vector for king+woman-man is queen as expected. Second one is "of king(kralı)", third one is "king's(kralın)". If the model was trained with lemmatization tool for Turkish language, the results would be more clear.

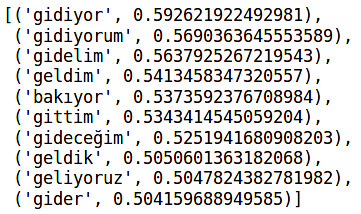

word_vectors.most_similar(positive=["geliyor","gitmek"],negative=["gelmek"])

Turkish is an aggluginative language. I have investigated this property. I analyzed most similar vector for +geliyor(he/she/it is coming)-gelmek(to come)+gitmek(to go). Most similar vector is gidiyor(he/she/it is going) as expected. Second one is "I am going". Third one is "lets go". So, we can see effects of tense and possesive suffixes in word2vec models.

word_vectors.doesnt_match(["elma","portakal","çilek","ev"])

ev

This finds the different words between [apple, orange, strawberry, house] as house.

Previous: 4. Training Word2Vec Model