New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

ARTEMIS-1248 Reduce garbage while Paging #1361

Conversation

|

@franz1981 if you were using a thread local on activeMQBuffer and unsafeByteBufWrapper I would agree with you on saving GC. however... Page won't last much longer on a regular scenario. The Page will be served through PageCursorProviderImpl::getPageCache(); So.. this will be read basically only once... or if the cache on the PageCursorProviderImpl is beyond the limit, then the whole Page object would go away. so, you won't ever read it twice if you get the opportunity of reusing the field you're trying to reuse. it will probably be from a different object at the time you get there. So, I would say either use a ThreadLocal, or keep it the way it is.. since the Objects you are caching will be GCed from the released Page when the cache is full anyways. |

|

@clebertsuconic yep agree on the read side: I've done it just for completeness, but the biggest gain (measured) is on the write path. I've changed the sequence of writing too to be only sequential in order to avoid cache misses. |

|

@franz1981 I see.. you could still use a ThreadLocal there.. right? also: Why don't you use a PooledBuffer on the write? |

|

@clebertsuconic There is no need to use a ThreadLocal or a pooled buffer there because:

In the old version the most of the garbage was already avoided due to the fileFactory ByteBuffer thread local pooling, but I've measured after multiple Page writes lots of minor GCs due to many short living ActiveMQBuffer instances. This change aims reduce that instances to be only 1 per Page: it is not optimal but is a big improvement. |

|

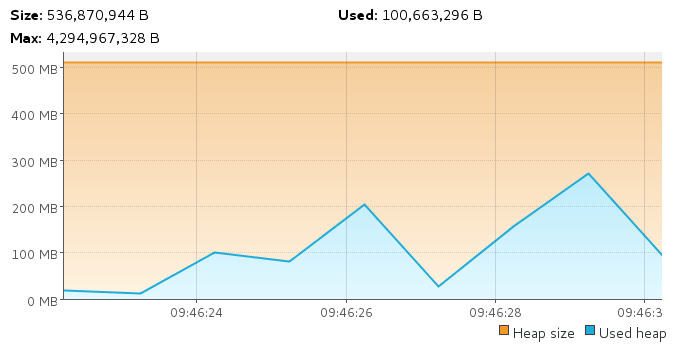

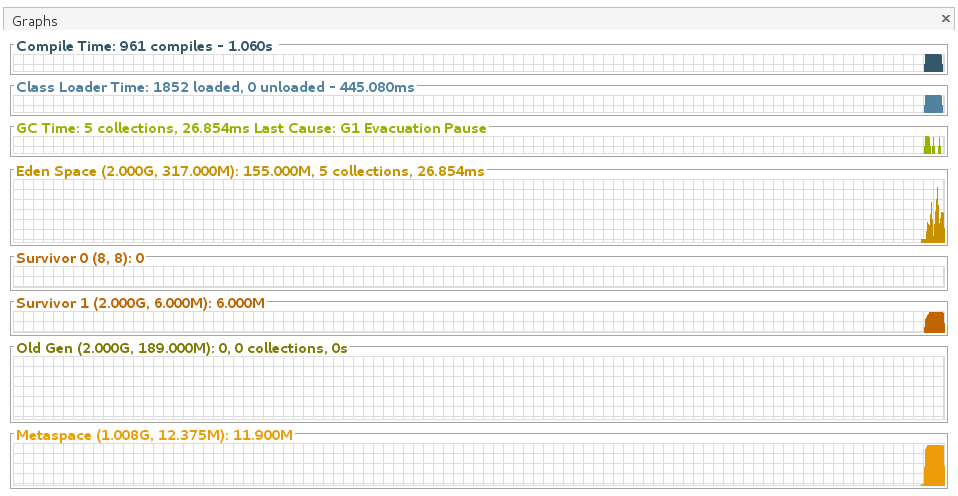

I agree this makes sense to me. @franz1981 is there a before and after vm gc / profiling graphs showing the drop in object creation and like wise drop in garabage collections. Will be the easiest proof that this change is beneficial. (We expect it to be btw) |

|

@michaelandrepearce @clebertsuconic

|

|

Looks really good. Test is quite short time wise, do you have an equiv for say a 30min to hour period. |

|

@michaelandrepearce I can run it for that extended period of time, but consider that this one is an isolated test with only page writes, hence:

This is necessary to show the isolated impact of this little change: with the whole broker you'll risk to run out of disk space if no Page reads is involved. |

|

I think it's worthwhile (agreed the short one shows the improvement in isolation) |

|

@franz1981 Page itself is short lived. it will create a new one every time we move to a different file. I was looking for a way to have the wrapper living beyond the existence of page, and to be ready for the next write on the next page. I was wondering if the write operation should live on Page itself or on StorageManager BTW. And also, I wasn't aware of the recent change at the FileFactory you recently made in May for the ThreadLocal Buffer.. I think we should use Pooled instead... it would be more appropriate I think.. but that's a different discussion. |

|

@clebertsuconic Having only one instance would be optimal, but considering that is a simple wrapper with no byte[] or off-heap memory allocated into it, just avoiding 100K it's a good compromise IMHO. About the changes on the NIO file factory, I've already tried (and measured) a solution with the Netty pools before pushing that version, but I found it to not be a good solution in this case for several reasons:

|

|

@michaelandrepearce Yes, agree...But in that case (probably) the thread pools executor services internal queues (+ capturing Runnables), the protocol handling + Netty will be the most important factories of garbage (from the GC point of view). Anyway I'll give try just to find out the current state of it and how it could impact in that case...but I don't know if I've enough disk to perform a test of 30 minutes :P |

|

@franz1981 did you run the whole testsuite on this? |

|

@clebertsuconic not yet! I'll run it now if is free 👍 |

13bdfa5

to

6ca9e4c

Compare

|

@franz1981 I merged this by accident... will leave it be now :) running the testsuite just to make sure it's ok |

No description provided.