
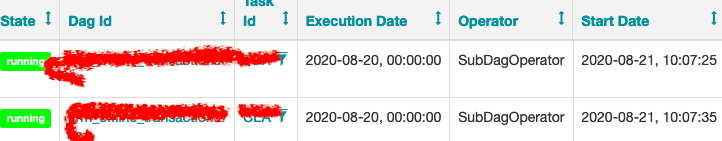

What happened: Tasks are not being scheduled in the correct pool.

What you expected to happen: If a task's pool is set to `subdag_pool`, the task should be queued in that pool.

How to reproduce it:

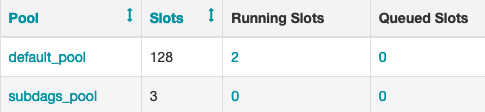

SubDAG tasks take all the running slots in the current pool, so I created a separate pool for the SubDAG tasks.

I then assigned this pool to the SubDAGs.
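A toy model in plain Python can show why sharing one pool starves the SubDAG tasks. This is not Airflow's scheduler: `ToyPool` and `runnable_children` are hypothetical names, and the sketch assumes each parent `SubDagOperator` in poke mode keeps one slot for as long as it waits on its child tasks.

```python
class ToyPool:
    """Hypothetical stand-in for a pool: just a counter of free slots."""

    def __init__(self, name: str, slots: int) -> None:
        self.name = name
        self.free = slots

    def try_acquire(self) -> bool:
        """Take one slot if available; return False when the pool is full."""
        if self.free > 0:
            self.free -= 1
            return True
        return False


def runnable_children(parent_pool: ToyPool, child_pool: ToyPool,
                      n_parents: int, children_per_parent: int) -> int:
    """Count child tasks that can start once every parent holds a slot.

    Each parent models a poke-mode SubDagOperator that keeps its slot
    while it waits for its children (assumption, not Airflow code).
    """
    for _ in range(n_parents):
        parent_pool.try_acquire()  # parent occupies a slot while waiting
    started = 0
    for _ in range(n_parents * children_per_parent):
        if child_pool.try_acquire():
            started += 1
    return started


# Shared pool: 4 waiting parents fill all 4 slots, so no child can start.
shared = ToyPool("default_pool", slots=4)
print(runnable_children(shared, shared, n_parents=4, children_per_parent=2))  # 0

# Separate pool for the children: they no longer compete with the parents.
default = ToyPool("default_pool", slots=4)
subdag = ToyPool("subdag_pool", slots=4)
print(runnable_children(default, subdag, n_parents=4, children_per_parent=2))  # 4
```

With a shared four-slot pool, four waiting parents leave no slot for any child; giving the children their own pool lets four of them start.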

In 1.10, all SubDAGs are scheduled on the same workers/pools as their parent DAGs, by design. This has changed in Airflow 2.0, where SubDAGs are processed differently and can run on different workers/pools.

@potiuk I can't check right now whether this is still happening, sorry. I was using 2.0 from the master branch. I solved the issue by setting the `SubDagOperator` (which is a sensor under the hood) to `mode='reschedule'`, so it doesn't occupy a whole slot while it waits.

Is there a reason `reschedule` mode isn't the default? I have enough sensors to fill and block the pool, which is actually the source of this issue.
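The effect of `mode='reschedule'` can be sketched the same way. `slot_time` is a hypothetical helper and the timing model is an assumption: pokes start every `poke_interval` units (each poke finishing before the next is due), the awaited condition becomes true at `wait`, and reschedule mode releases the slot between pokes, as the comment above describes.

```python
def slot_time(wait: int, poke_interval: int, poke_cost: int, mode: str) -> int:
    """Time units a single sensor keeps one pool slot (toy model, not Airflow code).

    Assumes pokes start at t = 0, poke_interval, 2 * poke_interval, ...,
    each poke takes poke_cost units (poke_cost <= poke_interval), and the
    awaited condition becomes true at t = wait.
    """
    if poke_interval <= 0:
        raise ValueError("poke_interval must be positive")
    # Pokes up to and including the first one at or after `wait` (ceiling division).
    n_pokes = -(-wait // poke_interval) + 1
    last_poke_start = (n_pokes - 1) * poke_interval
    if mode == "poke":
        # The slot is held continuously, including the sleeps between pokes.
        return last_poke_start + poke_cost
    if mode == "reschedule":
        # The slot is released between pokes, so only the pokes themselves count.
        return n_pokes * poke_cost
    raise ValueError(f"unknown mode: {mode!r}")


print(slot_time(60, 10, 1, "poke"))        # 61
print(slot_time(60, 10, 1, "reschedule"))  # 7
```

Under these assumptions, a one-hour wait with a 60-unit interval and 1-unit pokes gives `slot_time(3600, 60, 1, "poke") == 3601` but only 61 for the reschedule variant, which is why a pool full of poke-mode sensors can block everything else.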

Anyway, I'm using fewer sensors and building bigger DAGs now that we have TaskGroups.

Apache Airflow version: docker image `apache/airflow:master-python3.8`


The SubDAG tasks keep being scheduled in `default_pool` instead of the new `subdag_pool`.