New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

ARROW-14738: [Python][Doc] Make return types clickable #11726

Conversation

|

I suppose you tried it locally? |

yep, tried locally. With instead of but the Table in "Return Type" becomes clickable. |

|

I was thinking we could maybe also use numpydoc for our docstring rendering. They also support making the return type into a clickable link, but generalize this to parameter types as well (and it doesn't result in the duplicate "Returns" issue you mentioned above). |

I did a quick test and it seems to work. |

|

I think it should be fine to switch to numpydoc in general (for example, pandas, numpy, scikit-learn are all using it). There might be some pyarrow-specific things (since there are slight differences between both), but I assume those be solvable. |

No, I don't remember anymore why I did that :( |

|

Is this ready, or still draft? |

It was a draft as it started as an experiment, but locally all the parts of the API reference I could check seem to render correctly and thus the transition to numpydoc seems reasonable. There was a CI failure when building the docs that I think I have addressed in 8365150 so I think we can consider this one ready to go |

|

Can you add Looking at the doc build on CI, there are some warnings: (I can also try to take a look at this next week) Another set look like: we might need to add "bool" (and some other words) to the list of things that should not be linked (see |

|

I was able to resolve the I'll have to investigate the missing stub files |

|

Most warnings seems to have been fixed, I still see some errors in |

Seems it might be related to |

|

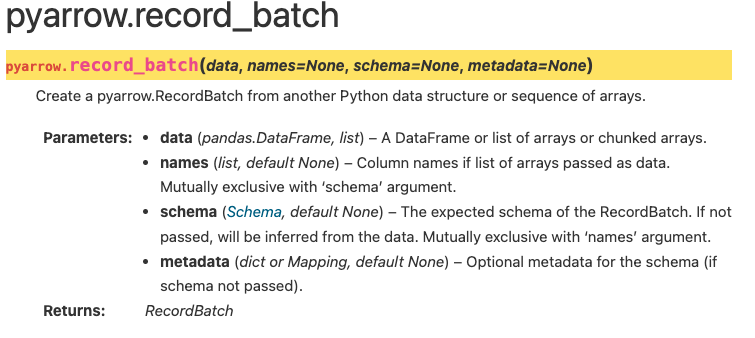

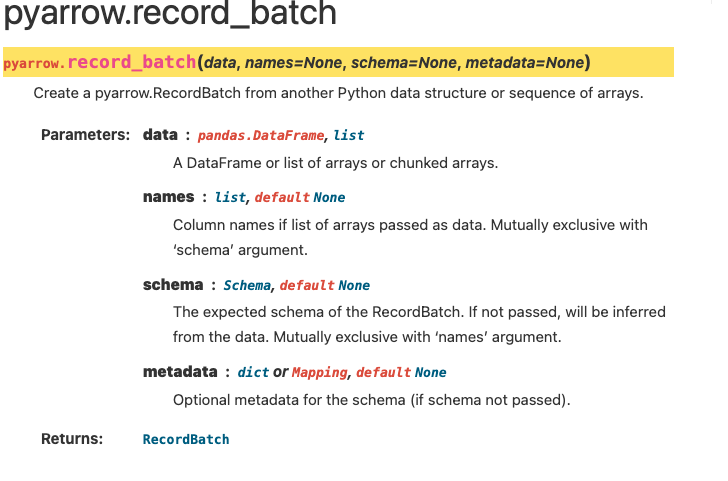

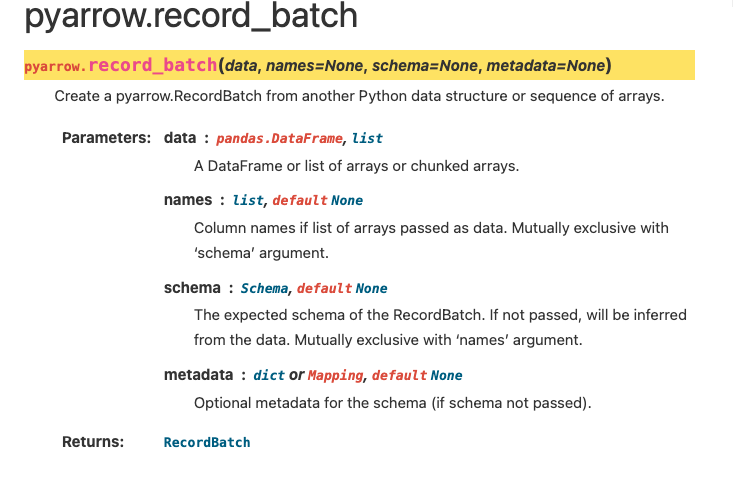

Took a look at this locally. For other's benefit, here's how this changes the RecordBatch API reference page: Looks pretty nice! Althrough I don't love the extra-heavy font-weight on the parameter names. Would be be willing to add this to the CSS at b, strong {

font-weight: bold;

}That would make the above page look like: |

docs/source/conf.py

Outdated

| 'IPython.sphinxext.ipython_directive', | ||

| 'IPython.sphinxext.ipython_console_highlighting', | ||

| 'breathe', | ||

| 'sphinx_tabs.tabs' | ||

| 'sphinx_tabs.tabs', | ||

| 'sphinx.ext.intersphinx' |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hmm, can we order this list alphabetically?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

👍 done

| @@ -1234,8 +1234,8 @@ cdef class Table(_PandasConvertible): | |||

| """ | |||

| A collection of top-level named, equal length Arrow arrays. | |||

|

|

|||

| Warning | |||

| ------- | |||

| Warnings | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There is a single warning here, is the plural intended?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, it's intended because numpydoc does only recognise "Warnings" as a valid header, not "Warning".

|

https://pandas.pydata.org/docs/dev/reference/api/pandas.DataFrame.mean.html

That's indeed odd (and I agree it would be good to add that small css snippet to make this look better). |

Actually it's there for the pandas docs too pandas.mov |

|

What's the video showing exactly? I see it changing back and forth between bold and very bold. But how is that triggered? |

|

@jorisvandenbossche @amol- Let's continue the font discussion in the theme repo. I don't want to hold up this PR on that. |

|

@wjones127 thanks for opening the issue! I tried this out locally, and I am seeing some other strange formatting artifacts (but related to what sphinx / numpydoc output). For example on the Table page (https://arrow.apache.org/docs/python/generated/pyarrow.Table.html in the online docs): For the first parameter it is done correctly, but for the second parameter the other words which are not auto-linked are formatted as code instead of normal text, for some reason. |

|

Given that further formatting discussions are probably expected to happen in the theme issue ( pydata/pydata-sphinx-theme#527 ) should we ship this to move documentation to numpydocs in preparation for 7.0.0? |

|

Personally, I find #11726 (comment) a somewhat annoying issue to ship as is (the strange formatting of the type list). I looked a bit into what numpydoc is doing here, and it seems that it is not very smart in how it tries to create links. Basically every word in the type section in the docstring gets transformed in a reference, and thus if nothing linkable is found by sphinx, it gets rendered as code. That's the reason you get "of" in "list of Array" rendered as code. Now, for a solution for this, I currently see two options: Option 1 is to use the numpydoc feature numpydoc_xref_ignore = {

"optional", "default", "None", "True", "False", "or", "of",

"iterator", "function", "object",

# TODO those could be removed if we rewrite the docstring a bit

"values", "coercible", "to", "arrays",

}Option 2 could be to use some custom CSS to let the code in the type explanation look like normal text. That would be something like: span.classifier code.xref span.pre {

color: rgba(var(--pst-color-text-base),1);

font-family: var(--pst-font-family-base);

font-size: 1rem;

}This might actually be the "easier" solution, but it's a bit more a hack (while the other uses an actual numpydoc option), and is a bit less robust (eg if the html structure generated by sphinx changes). |

|

@jorisvandenbossche

My suggestion would be to apply (1) and solve (2) using That solves the majority of cases, few instances of problems still remain like |

Personally I am not in favour of using type annotation syntax in the docstrings (if we want type annotations, we can actually use type annoations in the signature). My stance is that docstrings should be meant as human readable text, and I think only a minority of python users is familiar with typing syntax. If the "of" is the problem here, having that in the

Yes, I noticed that as well. I started looking into why, but didn't look further as just including in the ignore list also fixes it.

I don't think for example "function" should be identified/formatted as code? While it's indeed a generic python term, it's not an actual Python code keyword or builtin or .. (i.e. "if you type it in a console, you get an error"), so I think we should see that as prose text?

The reason that I included (now, there is a bug in numpydoc that listing those 3 values in the ignore list doesn't have any effect, because the "ignore" list has no priority over some default values they link (which you can supplement with

That's because there is a missing space in our code: arrow/python/pyarrow/array.pxi Lines 714 to 716 in ccffcea

numpydoc is sensitive to the delimiter actually being |

Well that's sort of the problem here; the numpydoc's having a hard time reading the human-readable text 😉 That being said, I think it's totally fine in the case of While |

But I didn't mean any random "free-form" text :). But yes, I agree with you that most cases that don't fall into this "type1 or type2" or "type1 of type2" cases, should probably be rewritten anyway, and moved into the description field. So fully agreed with:

Note that the test you linked also uses some basic items in an ignore list: https://github.com/numpy/numpydoc/blob/e9384ce346359cbec556454ae69c1af44d6a9017/numpydoc/tests/test_xref.py#L200 (so that's something we in any case want to copy) |

Well,

Note that |

|

@jorisvandenbossche do we want to include this in 7.0? Personally I'd like to :) |

|

@amol- I pushed a commit with some changes:

|

Fine for me, we can always iterate. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks @amol-, @jorisvandenbossche! Merging on green.

|

Benchmark runs are scheduled for baseline = fd580db and contender = e9e16c9. e9e16c9 is a master commit associated with this PR. Results will be available as each benchmark for each run completes. |

No description provided.