
KVMHAMonitor thread blocks indefinitely while NFS is not available #2890


After performing more tests: virtlockd does help prevent duplicate VMs. With Host HA disabled, the blocked host goes to a But if Host HA is enabled, the blocked host gets rebooted because of the bug above. When the host comes back up, its VMs get rescheduled on that same host, but it still should not have been rebooted just because NFS was blocked.


This morning I confirmed that the behavior on 4.9 is different from 4.11. On 4.9, when there is a long-lasting NFS hang (say 15 minutes), the agent stays Up, and when NFS operations resume everyone is happy. Note that we did disable the automatic reboot in the heartbeat script for that to work. This saved us from mass reboots and VM outages when we had a network maintenance that cut all KVM hosts off from NFS for 22 minutes.


Confirmed: we see similar behavior on 4.11.2rc3, and the agent went into Down state. Agent logs:

810986-e702-36ea-a87b-fd48064ecb12 2018-10-23 13:21:28,804 WARN [kvm.resource.KVMHAChecker] (Script-3:null) (logid:) Interrupting script.

Note that sometimes you will see the agent successfully go into Disconnected state, but the Host HA framework might still fire after the kvm.ha.degraded.max.period timer, and that is not expected. In any case, we want to avoid mass KVM host resets via IPMI for storage-related problems, because that is more damaging than waiting for primary storage to come back.


When you block NFS on a host, all the agentRequest-Handler and UgentTask threads eventually hang as well, one by one. The host seems to be marked as Down at about the time the last UgentTask handler thread hangs.
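The gradual hang described above is what happens to any fixed-size thread pool whose workers block on I/O that never returns. The toy model below is not CloudStack code; the class and method names are hypothetical, and a `CountDownLatch` stands in for a hung NFS mount. Once every worker is stuck, even a trivial task submitted afterwards just waits in the queue.

```java
import java.util.concurrent.*;

public class PoolExhaustionDemo {
    /**
     * Fills a fixed-size pool with tasks that block (standing in for calls stuck on a
     * hung NFS mount), then checks whether a cheap task submitted afterwards finishes
     * within waitMillis.
     */
    public static boolean lateTaskCompletes(int poolSize, long waitMillis) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        CountDownLatch nfsBack = new CountDownLatch(1); // released when "NFS comes back"
        try {
            for (int i = 0; i < poolSize; i++) {
                // Each worker blocks until the latch is released.
                pool.submit(() -> { nfsBack.await(); return null; });
            }
            // A cheap task, e.g. a status reply, now waits in the queue behind the blocked ones.
            Future<String> late = pool.submit(() -> "ok");
            try {
                late.get(waitMillis, TimeUnit.MILLISECONDS);
                return true;
            } catch (TimeoutException | ExecutionException e) {
                return false;
            }
        } finally {
            nfsBack.countDown(); // unblock the workers so the pool can shut down cleanly
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(lateTaskCompletes(2, 200)); // prints: false (every worker is blocked)
    }
}
```

Only the number of stuck workers matters here: once it equals the pool size, nothing else submitted to that pool runs, which is consistent with the host going Down around the time the last UgentTask thread hangs.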


hi @csquire @somejfn, thanks for this issue! I think it's correct that the host goes into 'Down' state after losing its grip on the storage, since this basically makes it non-operational. Going into 'Disconnected' state would only mean that the connection between the management server and the host is compromised. On the other hand, duplicated VMs are definitely something that needs to be addressed before marking the host as 'Down' when VM-HA is enabled. Just to be sure, can you please confirm you don't see these duplicated VMs on instances without VM-HA enabled? I'd like to narrow down this issue and make sure it's in the VM-HA logic.


Correct. Only VM-HA-enabled VMs would get restarted and create a duplicate when the host goes down. Still, I don't think it is good behavior to fire VM-HA (because of a host Up --> Down state change) in any scenario caused by a transient storage disconnection. If the host goes down after 5 minutes, VM-HA restarts the VM about one minute later, and then if the NFS issue gets resolved you have an almost 100% probability of root disk corruption, and you don't know where the 2 VMs are, since CloudStack only remembers the last copy it started.


I think the leanest way to fence the resource would be, before setting the host Down, to iterate over all its VMs and shut them down, and only then proceed to mark the host as 'Down'. Once we're there, there's no issue with VM-HA starting a new instance on a separate host. I guess this needs further investigation and a fix as described.
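The ordering proposed above (stop the VMs first, mark the host Down only afterwards) can be sketched as follows. `Vm`, `stopAll`, `decide` and `HostAction` are hypothetical names, not CloudStack APIs, and the key assumption is that `stop()` returns true only when the stop is actually confirmed. The fallback branch matters because, as noted in the thread, with hard NFS mounts even `virsh destroy` can block, so a stop may never be confirmed.

```java
import java.util.ArrayList;
import java.util.List;

public class FenceBeforeDownSketch {
    /** Hypothetical handle on a VM; stop() returns true only when the stop is confirmed. */
    public interface Vm {
        String name();
        boolean stop();
    }

    public enum HostAction { MARK_DOWN_ALLOW_VM_HA, KEEP_STATE_BLOCK_VM_HA }

    /** Tries to stop every VM and returns the names of those that could not be confirmed stopped. */
    public static List<String> stopAll(List<Vm> vms) {
        List<String> notStopped = new ArrayList<>();
        for (Vm vm : vms) {
            if (!vm.stop()) {
                notStopped.add(vm.name());
            }
        }
        return notStopped;
    }

    /** Only a fully fenced host may be marked Down; otherwise VM-HA could start a second copy. */
    public static HostAction decide(List<String> notStopped) {
        return notStopped.isEmpty() ? HostAction.MARK_DOWN_ALLOW_VM_HA : HostAction.KEEP_STATE_BLOCK_VM_HA;
    }

    /** Test helper: a fake VM whose stop() always succeeds or always fails. */
    public static Vm fakeVm(String name, boolean stopSucceeds) {
        return new Vm() {
            public String name() { return name; }
            public boolean stop() { return stopSucceeds; }
        };
    }

    public static void main(String[] args) {
        // "vm-b" simulates a VM whose destroy call hangs on a hard NFS mount and is never confirmed.
        List<String> notStopped = stopAll(List.of(fakeVm("vm-a", true), fakeVm("vm-b", false)));
        System.out.println(notStopped + " -> " + decide(notStopped));
        // prints: [vm-b] -> KEEP_STATE_BLOCK_VM_HA
    }
}
```

In this sketch a single unconfirmed stop is enough to keep the host out of Down, trading availability for the guarantee that no VM runs twice against the same root disk.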


With NFS not available, and since those are hard mounts, even a "virsh destroy" would not work. Libvirtd will block until the NFS mount issue is resolved. I think that ideally the cloudstack-agent would do every task in a non-blocking way and not be affected by primary storage hiccups. For instance, to avoid the thread pool blocking on libvirtd tasks, why not implement a configurable timeout on those tasks, with sensible defaults? I don't see a good reason for a call to libvirtd to take more than a few seconds (besides known long-running tasks such as live migration).

As for fencing, AFAIK the Host HA framework was created for the purpose of reliable fencing... but it will cause more harm than good if the end result is to reboot all KVM hosts via IPMI (compared to just waiting for NFS to come back).
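A configurable timeout on libvirtd calls could look roughly like the following. This is a minimal sketch, not CloudStack or libvirt-java code: `TimedCall`, `callWithTimeout` and the thread name are hypothetical, and `Thread.sleep` stands in for a libvirt call stuck on a hard NFS mount. It only bounds how long the *caller* waits; it cannot unblock a thread stuck in uninterruptible kernel I/O, which is the same limitation described above for `virsh destroy`.

```java
import java.time.Duration;
import java.util.Optional;
import java.util.concurrent.*;

public class TimedCall {
    // Daemon threads: a worker stuck in uninterruptible NFS I/O must not keep the JVM alive.
    private static final ExecutorService WORKERS = Executors.newCachedThreadPool(r -> {
        Thread t = new Thread(r, "timed-call"); // hypothetical thread name
        t.setDaemon(true);
        return t;
    });

    /**
     * Runs a potentially blocking call, waiting at most `timeout` for it.
     * Returns Optional.empty() on timeout (or if the call returned null).
     * cancel(true) only interrupts the worker: a thread blocked in kernel I/O on a
     * hard NFS mount may stay blocked, but the caller is free to carry on.
     */
    public static <T> Optional<T> callWithTimeout(Callable<T> call, Duration timeout) throws Exception {
        Future<T> future = WORKERS.submit(call);
        try {
            return Optional.ofNullable(future.get(timeout.toMillis(), TimeUnit.MILLISECONDS));
        } catch (TimeoutException e) {
            future.cancel(true);
            return Optional.empty();
        } catch (ExecutionException e) {
            // Surface the call's own failure rather than the wrapper exception.
            Throwable cause = e.getCause();
            throw (cause instanceof Exception) ? (Exception) cause : e;
        }
    }

    public static void main(String[] args) throws Exception {
        // Thread.sleep stands in for a libvirt call that hangs while NFS is unreachable.
        Optional<String> hung = callWithTimeout(() -> { Thread.sleep(5_000); return "pool"; }, Duration.ofMillis(100));
        Optional<String> quick = callWithTimeout(() -> "pool", Duration.ofSeconds(1));
        System.out.println(hung.isPresent() + " " + quick.orElse("none")); // prints: false pool
    }
}
```

Each timed-out call can leave its worker thread parked until NFS returns, so a real agent would also need to cap how many such threads may pile up; that trade-off is not handled in this sketch.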


@csquire I could not reproduce the issue. I guess it depends on how you're simulating the NFS/shared-storage failure and what your iptables rules are. In my case I simply shut down the NFS server instead of using iptables rules, and observed the following: I could see the The only change in behaviour I see is that the KVM host is not rebooted; only the agent gets shut down. The downside of this approach is that when the KVM agent is restarted and the NFS server is started again, the KVM hosts and their VMs are not responsive, and I had to reboot the host manually to start on a clean slate. Perhaps the previous behaviour of triggering a host reboot was better. Can you advise whether we should revert that behaviour - @DaanHoogland @PaulAngus @borisstoyanov ?


Based on the triaging exercise, I've moved this to 4.11.3.0, as further discussion is pending. I've taken the least-risk approach of reverting part of the change in behaviour and submitted #2984


If you just shut down the NFS server, the NFS client gets an immediate response (TCP reset), so this is not the same as blocking the NFS server IP with iptables DROP rules, which is what I do to test the network outage:

`iptables -I INPUT -s nfs_server_ip -j DROP ; iptables -I OUTPUT -d nfs_server_ip -j DROP`

It may take more than one attempt to see the thread pool block.


ISSUE TYPE

COMPONENT NAME

CLOUDSTACK VERSION

CONFIGURATION

OS / ENVIRONMENT

SUMMARY

Also see comment thread on PR #2722

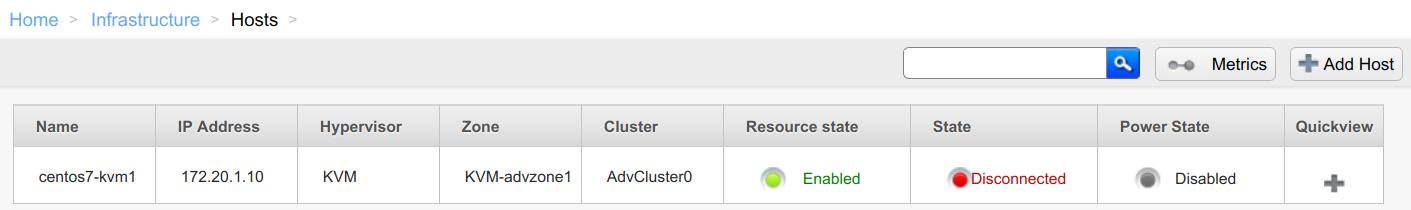

We installed an RC release which includes PR #2722 on a test system, expecting the host to get marked as Disconnected after using iptables to drop NFS requests, but instead the host gets marked as Down. My investigation shows that the line `storage = conn.storagePoolLookupByUUIDString(uuid);` blocks indefinitely. So kvmheartbeat.sh is never executed, a host investigation is started, the host with blocked NFS is marked as Down, and finally all VMs on that host are rescheduled, resulting in duplicate VMs. I pulled a thread dump and found that the KVMHAMonitor thread hangs here until NFS is unblocked.
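One way to keep a single hung lookup from stalling the whole monitor is to run each pool check under a deadline and report a timeout as "unresponsive" instead of waiting forever. The sketch below is hypothetical and is not how KVMHAMonitor is implemented: `PoolCheck`, `PoolStatus` and `checkAll` are illustrative names, and `Thread.sleep` simulates `storagePoolLookupByUUIDString` hanging on NFS. Whether an UNRESPONSIVE pool should later count as a failed heartbeat is exactly the policy question debated in this thread.

```java
import java.time.Duration;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.*;

public class PoolCheckSketch {
    public enum PoolStatus { ALIVE, FAILED, UNRESPONSIVE }

    /** Hypothetical per-pool check, e.g. a pool lookup followed by a heartbeat write. */
    public interface PoolCheck {
        boolean run() throws Exception;
    }

    // Daemon threads so that checks stuck in NFS I/O do not keep the JVM alive.
    private static final ExecutorService WORKERS = Executors.newCachedThreadPool(r -> {
        Thread t = new Thread(r, "pool-check");
        t.setDaemon(true);
        return t;
    });

    /** Runs all checks concurrently under one shared deadline; a hung pool cannot stall the others. */
    public static Map<String, PoolStatus> checkAll(Map<String, PoolCheck> pools, Duration timeout)
            throws InterruptedException {
        Map<String, Future<Boolean>> pending = new LinkedHashMap<>();
        for (Map.Entry<String, PoolCheck> p : pools.entrySet()) {
            Callable<Boolean> task = p.getValue()::run;
            pending.put(p.getKey(), WORKERS.submit(task));
        }
        long deadline = System.nanoTime() + timeout.toNanos();
        Map<String, PoolStatus> result = new LinkedHashMap<>();
        for (Map.Entry<String, Future<Boolean>> e : pending.entrySet()) {
            long left = Math.max(0L, deadline - System.nanoTime());
            try {
                boolean ok = e.getValue().get(left, TimeUnit.NANOSECONDS);
                result.put(e.getKey(), ok ? PoolStatus.ALIVE : PoolStatus.FAILED);
            } catch (TimeoutException te) {
                e.getValue().cancel(true);
                // "Unknown" is reported as such instead of being treated as a failed heartbeat.
                result.put(e.getKey(), PoolStatus.UNRESPONSIVE);
            } catch (ExecutionException ee) {
                result.put(e.getKey(), PoolStatus.FAILED); // the check itself threw
            }
        }
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        Map<String, PoolCheck> pools = new LinkedHashMap<>();
        pools.put("local-pool", () -> true);                                 // healthy pool
        pools.put("nfs-pool", () -> { Thread.sleep(10_000); return true; }); // simulated hung NFS lookup
        System.out.println(checkAll(pools, Duration.ofMillis(200)));
        // prints: {local-pool=ALIVE, nfs-pool=UNRESPONSIVE}
    }
}
```

Keeping UNRESPONSIVE separate from FAILED lets the caller decide whether a storage-only problem should ever lead to fencing, rather than having the monitor thread simply stop reporting.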

STEPS TO REPRODUCE

EXPECTED RESULTS

ACTUAL RESULTS
