New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

DRILL-8037: Add V2 JSON Format Plugin based on EVF #2364

Conversation

|

@paul-rogers I've cherry-picked commit from your rev2 branch. Didn't get all of them, because of big code conflicts and some of them are outdated. |

c8a31ec

to

6ccb92f

Compare

|

@vdiravka, thanks for doing this! It will take me a bit to remember where I left this. I'll do the review in small bits. |

|

@vdiravka, turns out there was one more commit that I had failed to push to |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks again for merging this code across. I'm sure it was not an easy task.

I went though this PR, comparing it to a diff of my original branch with its base. Looks like you got most of the changes. I only found a few areas, noted below, where there were differences.

Please also grab that last commit I just pushed and I'll do a final review.

I saw that you had made a few good cleanups in doing the merge, so that means you probably took a good look at the code. I'll assume that counts as a review of my changes, and I'm just reviewing the merge (so that I'm not approving my own changes!)

...java-exec/src/main/java/org/apache/drill/exec/physical/resultSet/impl/SingleVectorState.java

Outdated

Show resolved

Hide resolved

| .fsConf(fsConf) | ||

| .defaultName(PLUGIN_NAME) | ||

| .readerOperatorType(READER_OPERATOR_TYPE) | ||

| .writerOperatorType(WRITER_OPERATOR_TYPE) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Add the following:

// Temporary until V2 is the default.There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It is enabled by default. So what is temporary here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry, I saw this in the PR description:

The new "V2" JSON scan is controlled by a new option:

store.json.enable_v2_reader, which is false by default in this PR.

Which I thought meant that the V2 reader is not enabled by default, hence the suggested comment.

| @@ -692,6 +692,7 @@ drill.exec.options: { | |||

| # Property name and value should be separated by =. | |||

| # Properties should be separated by new line (\n). | |||

| store.hive.conf.properties: "", | |||

| store.json.enable_v2_reader: true, | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do we want to use the V2 reader by default? I think this should be false for now.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes, we want by default

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If so, then please change the PR description quoted above.

| } | ||

| finally { | ||

| String set = "alter session set `" | ||

| + ExecConstants.JSON_READER_SKIP_INVALID_RECORDS_FLAG + "` = false"; | ||

| + ExecConstants.JSON_READER_SKIP_INVALID_RECORDS_FLAG + "` = false"; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There are some changes here and above that need to be copied over.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What changes?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The code uses a method to do the alter session rather than building up a string. See this file.

| import org.junit.BeforeClass; | ||

| import org.junit.Test; | ||

|

|

||

| public class TestExtendedTypes extends BaseTestQuery { | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There is some cleanup in TestComplexTypeWriter.java to be copied across.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Spaces? Could you point me what cleanup do you mean?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Interesting. Seems OK now. Maybe I read the diff backwards...

| @@ -78,10 +78,10 @@ public void testMongoExtendedTypes() throws Exception { | |||

| List<QueryDataBatch> resultList = testSqlWithResults(String.format("select * from dfs.`%s`", originalFile)); | |||

| String actual = getResultString(resultList, ","); | |||

| String expected = "drill_timestamp_millies,bin,bin1\n2015-07-07 03:59:43.488,drill,drill\n"; | |||

| Assert.assertEquals(expected, actual); | |||

| assertEquals(expected, actual); | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This file has some extended type tests to be copied over.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do you mean to add test cases for every extended type from the file?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Seems fine now.

| private void resetV2Reader() { | ||

| client.resetSession(ExecConstants.ENABLE_V2_JSON_READER_KEY); | ||

| } | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Changes in TestJsonNanInf.java need to be copied over.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What changes?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi @paul-rogers I am going to enable V2 by default. All tests passed and fixed, except cases with enabled options for UNION and JSON Corrupted files, but these features are experimental, so we are fine to go without them now.

So here are my additional changes rebased onto latest master and including all your commits from rev2

|

This pull request fixes 2 alerts when merging 42863f4 into ecdb8db - view on LGTM.com fixed alerts:

|

|

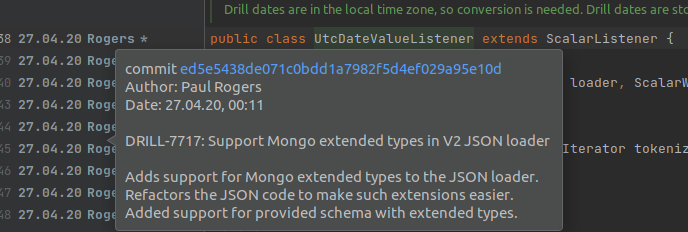

Sorry, I perhaps read the diffs backward before: the things I thought were missing seem actually fine. Just to double-check, there were some additional complex type handling and tests in this commit that would be useful to add. |

Hi Paul. I double checked and looks like the changes from commit is already incorporated. I made cheery-pick, resolved 3 merge conflicts and after that there are no new changes. The other question what do you think is that useful to have dfs plugin config to switch to V1 JSON reader? I think, since the goal to switch to V2 fully, the system/session option is enough |

|

@vdiravka, the commit in question was something I pushed about a week ago: it was some last changes that were still on my local machine that I'd not yet pushed. Sorry about that! They were not on GitHub when you grabbed the original commits. For example, the new file |

For passing session option in tests I have created the new methods I see, you've already commited this changes and file in |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@vdiravka, thanks for the explanations. Hard to remember stuff that happened over a year ago...

The changes look good. Thanks much for getting this merged!

LGTM. +1

|

I found one regression for LATTERAL and UNNEST operators with V2. SchemaChange starts to be happened, but it shouldn't. |

|

@vdiravka Will the fix included in this PR or a new separate PR? |

|

I'll try to prepare the fix today in this PR. If it will take longer, than it is fine to include in the separate PR |

|

@vdiravka reached out to me on this bug. His explanation:

Here is my analysis: You are tight that the OK, so we agree that a type change is bad. What you're describing is the same type, but a different vector. This is a bug in several ways, and not the way you might think. First, it seems logical that if column x is a non-null INT in one batch, and is a non-null INT in the next batch, that no schema change has occurred. But, Drill will surprise you. Most operators assume that the vector itself has not changed: only the data stored within the vector. This is a subtle point, so let me expand it. A vector is a permanent holder or a column. The vector holds one or more data buffers. Those buffers change from batch to batch. Think of it as a bucket brigade: the old-style way that people fought fires. A chain of people forms (the vectors). They hand buckets one to the next (the data buffers). Now, I was pretty surprised when I first discovered this. So was Boaz when he wrote hash agg. But, most of Drill's code assumes that, in setup, the code binds to a specific vector. In the next batch, that same binding is valid; only the data changes. This shows up in code gen and other places. In fact, a goodly part of EVF is concerned with this need for "vector continuity" even across readers. (Let that sink in: two readers that have nothing to do with each other must somehow still share common vectors. That alone should tell us the vector design in Drill is a bit strange and needs rethinking. But, that's another topic.) So, now let's review the bug. On the one hand, But, on the other hand, So, the first thing to check is if the types are at all different. (If the "major types" differ.) If so, then we have a legitimate schema change that Drill can't handle. Now, if you find that the types are the same, then we have a bug in EVF, specifically in the Let me ask another question. When this occurs, is it in the first batch of the second (or later) reader? If so, then that reader should have pulled the vector from the cache. The question is, why didn't it? If the error occurs within the first reader, then the bug is more subtle. How could the JSON reader accomplish that? The Yet another question is why these tests didn't fail 3 months ago when the tests first ran. And, why did the tests pass way back when I wrote this code? Did anything change elsewhere that could trigger this? I don't see how, but we should probably ask the question. Still another possibility is that there has always been a schema change, the prior code failed to point out the schema change (by returning a Fortunately, these are unit tests, so we should be able to sort out the issues without too much trouble. I'll also note that there were a few fixes to EVF V2 that I made in my PR, but those were around a subtle but in implicit columns. Suggestion: give the above a shot to see if you can see the problem. Otherwise, tomorrow I'll try to squeeze in time to grab this branch and do some debugging. After all, I wrote this stuff originally, so I should try to make it work. |

|

Oddly, |

|

This pull request fixes 2 alerts when merging 2fdec26 into 556b972 - view on LGTM.com fixed alerts:

|

|

Hi @paul-rogers I have rebased the branch to master branch. And in separate new commit removed the hack, which hid the schema change in the Obtaining vector from cache here leads to errors in this and other test cases: So as for me looks like we need to implement supporting schema change for hashAgg operator or obtaining |

|

This pull request fixes 2 alerts when merging 9eb445a into 556b972 - view on LGTM.com fixed alerts:

|

|

@vdiravka, good sleuthing! You did indeed find the hole in the system. Map (and repeated map) vectors are special: they are just holders for the actual data vectors. If they are reused, we get all the previous map members, which may or may not be a problem. I guess it would be a problem if reader 1 has a.b be an INT, while reader 2 wants a.b to be a VARCHAR. I guess a question is whether the HashAgg maintains a pointer to the map itself, or only the physical columns within it. It has no pointers to the map itself, we can special-case maps: they are considered the same schema if their contents are the same, whether or not the map vector itself is the same. Another choice would be to store the map vector in the cache, but strip all the physical columns out of it when the caller asks for it again. The caller then reassembles the physical columns, also from the cache, and hopefully creates the same map structure as the previous reader. Based on what you learned of the HashAgg, which of these might work? |

|

@vdiravka, which test do I run to see the failure? I tried that one test mentioned above, but it worked for me. Actually, I should probably create a separate unit test for this scenario. I'll do that to see if I can reproduce the issue. If so, then I'll see if I can find a fix, perhaps one of the ideas mentioned above. Then, we can validate the fix on that test case you have which is failing. |

950984e

to

4e2c483

Compare

|

This pull request fixes 2 alerts when merging 4e2c483 into 634ffa2 - view on LGTM.com fixed alerts:

|

* Snapshot: reworked JSON field parser creation * Updated JSON loader * Redo value listener with tokens * Extended long type works * More simple extended types * Added $date * Binary type * All extended type except arrays * Extended arrays partly working * More arrays work * Refactor element parser interfaces * Rename RowSetTests --> RowSetTest * More factory cleanup * Revised unknown field creation * In middle of factory/parser restructuring * Scalars, object, some variants work again * JSON loader tests pass * File cleanup * Old extended types test passes * Renamed JSON packages * Tested extended provided types

|

@paul-rogers Additionally DRILL-8196, DRILL-8197 and DRILL-8201 are opened and going to be resolved in separate PRs soon. |

|

This pull request fixes 3 alerts when merging 8fd294a into 5080424 - view on LGTM.com fixed alerts:

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@vdiravka, thanks again for merging the code.

LGTM +1

* Enable store.json.enable_v2_reader by default * Fix TestJsonReader doulbe quotes test cases. Update jackson 2.12.1 -> 2.13.0 * Disable V2 for experimental UNION datatype * Fix regressions * Fix json Schema Provision (it wasn't provided for JsonLoaderBuilder). The previous schema provision was a fake, the reader schema was infered from the json content. It fixes the scan and reader schema validation. And it starts to apply the provided schema to ANALYZE COMMANDS, fixed TestMetastoreWithEasyFormatPlugin#testAnalyzeOnJsonTable

|

Thanks for the review @paul-rogers |

|

This pull request fixes 3 alerts when merging 05b2933 into 7c37323 - view on LGTM.com fixed alerts:

|

DRILL-8037: Add V2 JSON Format Plugin based on EVF

Description

This adds new V2 beta JSON Format Plugin based on the "Extended Vector Framework".

This is follow up DRILL-6953 (was closed with the decision to merge it by small pieces).

So it is based on #1913 and rev2 work.

Documentation

"V1" - "legacy" reader

"V2" - new beta version JSON Format Plugin based on the result set loader.

The V2 version is a bit more robust, and supports the row set framework. However, V1 supports unions and reading corrupted JSON files.

The new "V2" JSON scan is controlled by a new option:

store.json.enable_v2_reader, which is true by default in this PR.Adds a "projection type" to the column writer so that the

JSON parser can receive a "hint" as to the expected type.

The hint is from the form of the projected column: a[0],

a.b or just a.

Therefore it supports schema provision. Example:

Testing

A lot of existing test cases are running for both readers. It is needed until V1 is still present in Drill code.