METRON-1569 Allow user to change field name conversion when indexing … #1022

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the contribution @nickwallen. I made a first pass and have a couple questions about the caching implementation in context of the existing Zookeeper Curator configuration management.

| private FieldNameConverter createInstance(String sensorType, WriterConfiguration config) { | ||

|

|

||

| // default to the 'DEDOT' field name converter to maintain backwards compatibility | ||

| FieldNameConverter result = new DeDotFieldNameConverter(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Might we want to call the enum when we create these objects?

FieldNameConverters.DEDOT.get();

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure, that makes sense.

| * after they are created. Once they expire, the {@link WriterConfiguration} is used to | ||

| * reload the {@link FieldNameConverter}. | ||

| * | ||

| * <p>The user can change the {@link FieldNameConverter} in use at runtime. A change |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I want to say that this is handled through the ConfigurationsUpdater classes already already. I believe the writer passes in an updated config on each call.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe I am misunderstanding you, but the ConfigurationsUpdater ensures that our configuration classes stay in-sync with Zk. That's not what this class does.

This creates the appropriate FieldNameConverter instance for each source type that is then used by the ElasticsearchWriter to do the field name conversion. Are you proposing a different way to do this?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

To help clarify, I could have implemented a different FieldNameConverterFactory; maybe one called SimpleFieldNameConverterFactory.

This 'simple' factory wouldn't even have to use a cache. Every time ElasticsearchWriter calls create(String sensorType, WriterConfiguration config), it could just instantiate a new instance of the right FieldNameConverter.

But of course, I didn't do that because this occurs for every field in every message that is written to Elasticsearch. The cache is there for performance.

I offer this only as an example to help clarify the purpose of the CachedFieldNameConverterFactory.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think I'm just not fully grokking why we need additional caching here rather than FieldNameConverters.DEDOT.get();. It seems like the enum handles caching by virtue of the constructors in the enum itself? NOOP(new NoopFieldNameConverter())

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So you're saying, hey why not just...

- Take out the caching and just have something like a

SimpleFieldNameConverterFactory. This would translate the (sourceType, WriterConfiguration) to the rightFieldNameConverter. - The caching would be handled by

FieldNameConvertersin the sense that they are effectively singletons.

I think that would work if all FieldNameConverter implementations are stateless. And they all are stateless today, but I tried not to make that a constraint. I actually tried to make this work whether they are stateless or not.

But alas there is a bug in what I did because I need each call to FieldNameConverters.get() to return a new instance. (If we want to stick with this approach.)

I'd be open to removing the cache, if its not needed and we think FieldNameConverters would always remain stateless.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The prior commit 'b18c3bf' was just me fixing my original caching implementation, so at least I had a version of that with the bug fixed.

The latest commit 'cee3994' removes the cache and relies on the FactoryNameConverters class to hand out shared instances. This is what we have discussed.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, agreed on the possible need for handling other writers. Is there a reason we wouldn't put that logic in the enum factory rather than creating another new class for it? It wouldn't even need to depend on the WriterConfiguration, actually.

EDIT - would need that create to be static

public enum FieldNameConverters implements FieldNameConverter {

NOOP(new NoopFieldNameConverter()),

DEDOT(new DeDotFieldNameConverter());

private FieldNameConverter converter;

FieldNameConverters(FieldNameConverter converter) {

this.converter = converter;

}

public FieldNameConverter create(String sensorType, Optional<String> converterName) {

// default to the 'DEDOT' field name converter to maintain backwards compatibility

return FieldNameConverters.valueOf(converterName.orElse("DEDOT"));

}

@Override

public String convert(String originalField) {

return converter.convert(originalField);

}

}

I'm not sure I follow about the testing - do you mean integration testing? We have an ElasticsearchWriterTest class that @justinleet wrote - https://github.com/apache/metron/blob/master/metron-platform/metron-elasticsearch/src/test/java/org/apache/metron/elasticsearch/writer/ElasticsearchWriterTest.java

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not sure I follow about the testing

If you look closely at those tests, all that class tests is the response using buildWriteReponse. It does not actually test the class using the write method. When you try to do that, it blows up because of dependency issues.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok, I see what you mean. Agreed, it would be good to have some tests around that write method at some point, but I think it's ok to keep that out of scope for this particular PR. We have a WriterBoltIntegrationTest, but it's only covering parsers right now. At a later time we could look at expanding it to cover multiple writers and topologies and provide hooks on those builders to enable one-time setup and teardown across a suite of tests.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I can add the create to the FieldNameConverters. That makes sense here. Its going to be slightly different than what you have above, but is what you were going for.

| */ | ||

| private FieldNameConverter createInstance(String sensorType, WriterConfiguration config) { | ||

|

|

||

| // default to the 'DEDOT' field name converter to maintain backwards compatibility |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks interesting, but one bit of functionality has changed as part of doing this PR. Currently, we specify the field converter at the writer implementation level, so:

- ES uses dedot

- HDFS uses noop

- solr uses noop

By doing this, we're actually not maintaining backwards compatibility, we're changing the behavior for the HDFS writer and Solr to dedot. What I'd suggest doing is adding a method to the BulkMessageWriter interface like so:

default FieldNameConverter defaultFieldNameConverter() {

return FieldNameConverters.NOOP.get();

}

and in ElasticsearchWriter specify DEDOT as the default. Also, here, you probably want to pass in the default field name converter if unspecified as a 3rd argument.

This would allow us to maintain backwards compatibility and enable users to override.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Remember that only the ElasticsearchWriter does field name conversion. HDFS and Solr do not do field name conversion and so are backwards compatible.

In the README, I think I specified that this field is only applicable for 'elasticsearch'.

In the future, we could certainly role this functionality out to the other Writers, but right now its not there. AFAIK

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OH! yes, you're right. Sorry for jumping to conclusions, I assumed you generalized this to the bulk message writer level, rather than keeping it at the ES writer level. My bad, I retract the objection.

…her reusing the same instance each time.

| * <p>The instances are created and managed by the {@link FieldNameConverters} class. | ||

| */ | ||

| public class SharedFieldNameConverterFactory implements FieldNameConverterFactory { | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks like it's moving in the right direction, but as @mmiklavc gave an example for, why would we not replace this class with a static create(String sensorType, WriterConfig config) method on the enum?

| * <p>Allows the field name converter to be specified using a short-hand | ||

| * name, rather than the entire fully-qualified class name. | ||

| */ | ||

| public enum FieldNameConverters { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Minor nit, can we have this implement FieldNameConverter so the enum can be used directly without calling get()?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok. Check 'er out.

|

Ok, I'm comfortable with the change now. +1 by inspection, pending @mmiklavc |

|

+1 by inspection. This is great @nickwallen, thanks for the contribution. |

The

ElasticsearchWriterhas a mechanism to transform the field names of a message before it is written to Elasticsearch. Right now this mechanism is hard-coded to replace all '.' dots with ':' colons.This mechanism was needed for Elasticsearch 2.x which did not allow dots in field names. Now that Metron supports Elasticsearch 5.x this is no longer a problem. A user should be able to configure the field name transformation when writing to Elasticsearch, as needed.

While it might have been simpler to just remove the de-dotting mechanism, this would break backwards compatibility. Taking this approach provides users with an upgrade path.

Changes

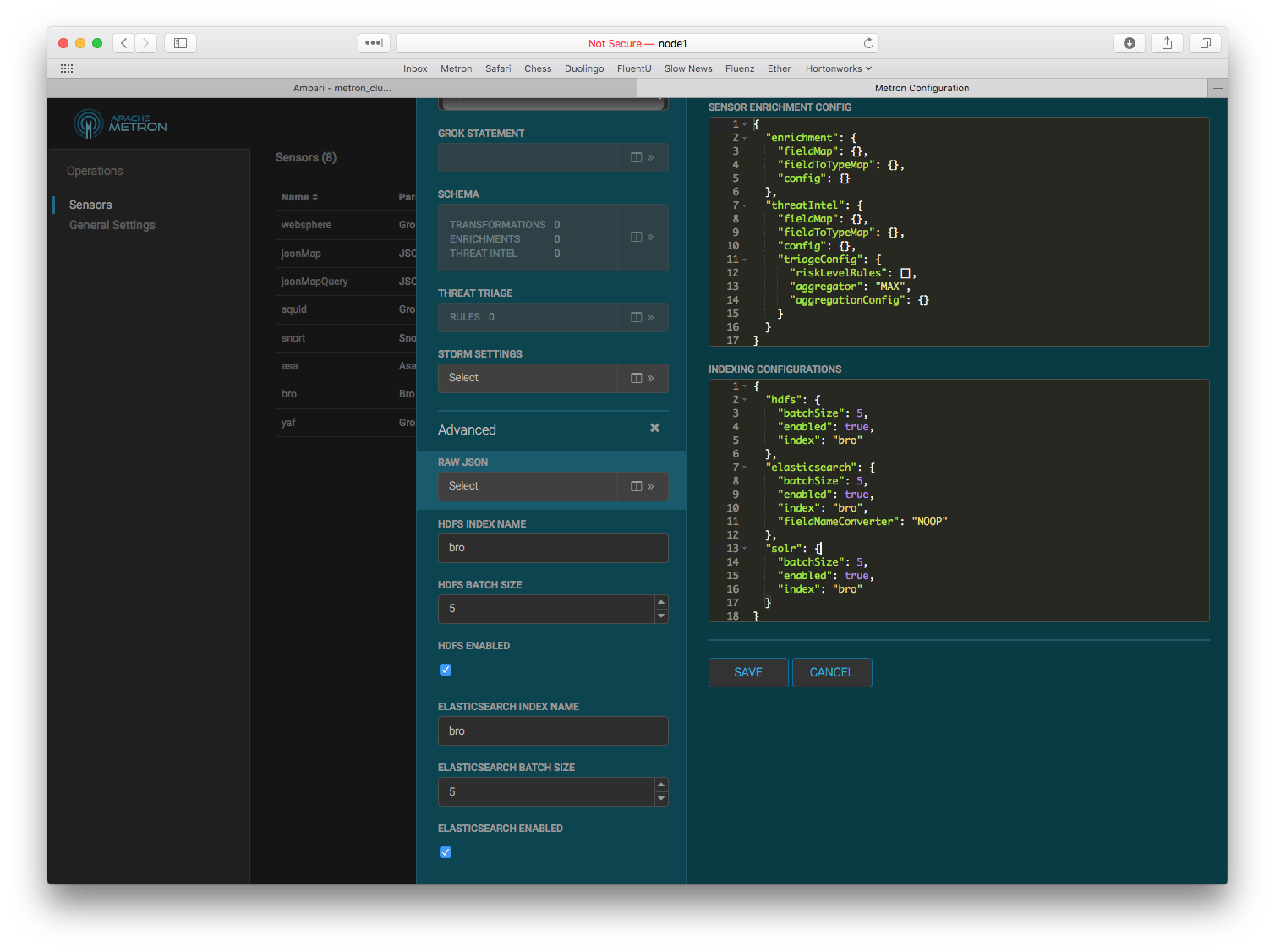

This change allows the user to configure the field name converter as part of the index writer configuration.

Acceptable values include the following.

DEDOT: Replaces all '.' with ':' which is the default, backwards compatible behavior.NOOP: No field name change.If no "fieldNameConverter" is defined, it defaults to using

DEDOTwhich maintains backwards compatibility.A cache of

FieldNameConverters is maintained since the index writer configuration can be changed at run-time and each sensor has its own index writer configuration.An example configuration looks-like the following.

Code Changes

Added the

fieldNameConverterparameter to the Index writer configuration.Moved the

FieldNameConverterimplementations to a dedicated package inmetron-common.Renamed

ElasticsearchFieldNameConvertertoDeDotFieldNameConverter.Implemented the

NoopFieldNameConverterwhich does not modify the field name.Created

FieldNameConvertersclass that allows a user to specify eitherDEDOTorNOOPto choose the appropriate implementation.Implemented a

CachedFieldNameConverterFactorythat encapsulates all the logic for choosing and instantiating the appropriateFieldNameConverter.Updated

ElasticsearchWriterto use theCachedFieldNameConverterFactory.Updated the README to document the new configuration parameter.

Manual Testing

Launch a development environment and login.

Validate the environment by ensuring alerts are visible in the Alerts UI and that the Ambari Service Check completes successfully. This ensures that the change is backwards compatible.

Login to the Storm UI and enable DEBUG logging for

org.apache.metron.commonandorg.apache.metron.elasticsearch.The Storm worker logs in

/var/log/storm/worker-artifacts/random_access_indexing*/worker.logshould contain the following log statements, if you have enabled DEBUG logging correctly. This shows that the defaultDEDOTconverter is in-use.Launch the REPL.

Change the field name converter to NOOP.

It can take up to 5 minutes for the topology to pick-up this change. The old

FieldNameConverterneeds to expire from the cache first.Go back to the Storm worker logs. When the change takes effect, we should see a log like the following indicating that the

NoopFieldNameConverterwas created.In the same logs, we will start to see tuples fail to be indexed. Elasticsearch complains because the templates have been created to expect

source:type, but that field no longer exists because theFieldNameConverterwas changed.Pull Request Checklist