-

Notifications

You must be signed in to change notification settings - Fork 3.5k

Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

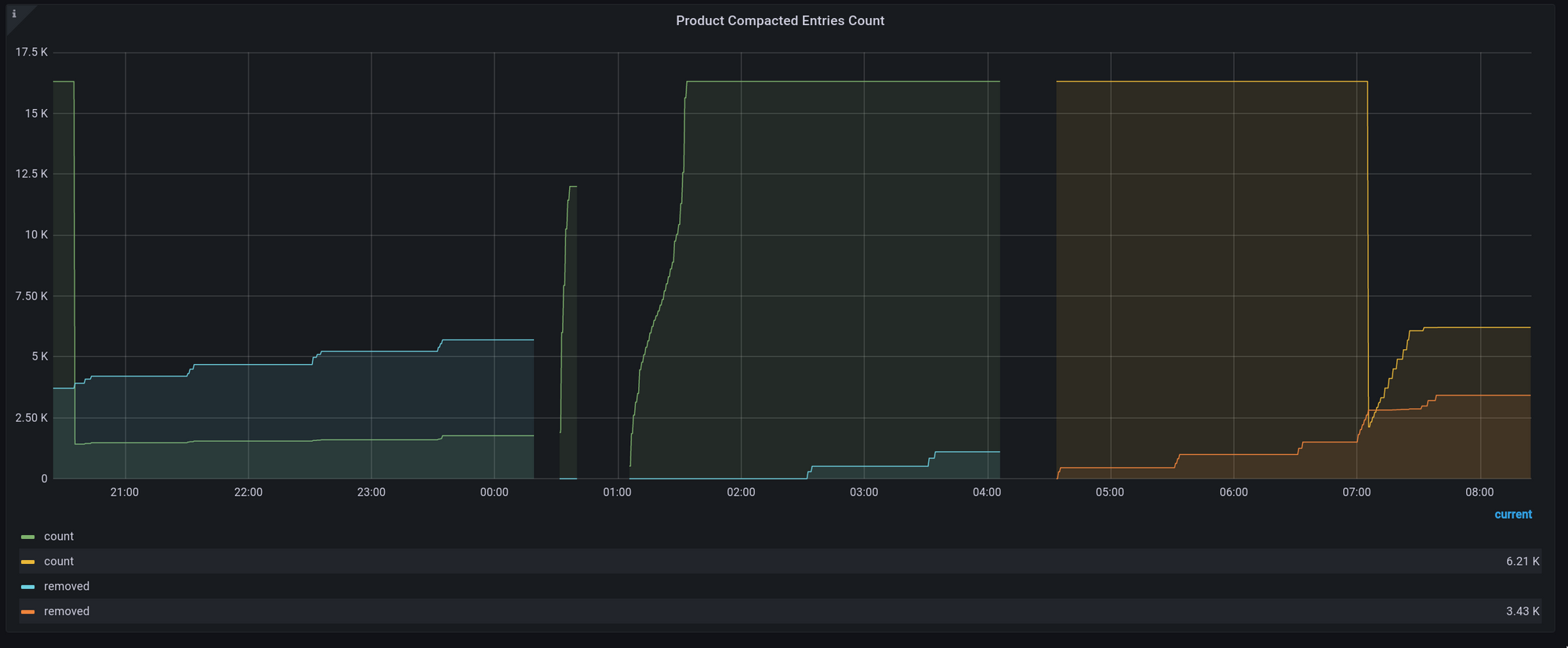

Fix compactor skips data from last compacted Ledger (#12429)

## Motivation

This PR fixes data loss during topic compaction. Only a few events were deleted, yet the number of events remaining after compaction dropped far more than expected.

Further investigation showed that only the first read operation reads data from the compacted ledger. From the second read onward, the broker reads data from the original topic instead:

```
2021-10-19T23:09:30,021+0800 [broker-topic-workers-OrderedScheduler-7-0] INFO org.apache.pulsar.compaction.CompactedTopicImpl - =====[public/default/persistent/c499d42c-75d7-48d1-9225-2e724c0e1d83] Read from compacted Ledger = cursor position: -1:-1, Horizon: 16:-1, isFirstRead: true
2021-10-19T23:09:30,049+0800 [broker-topic-workers-OrderedScheduler-7-0] INFO org.apache.pulsar.compaction.CompactedTopicImpl - =====[public/default/persistent/c499d42c-75d7-48d1-9225-2e724c0e1d83] Read from original Ledger = cursor position: 16:0, Horizon: 16:-1, isFirstRead: false
```

## Modifications

The compaction task builds the new snapshot from the last snapshot plus the incremental entries. Because the compactor reads data from before the compaction cursor's mark-delete position, the compaction cursor must force-seek its read position so that the compactor can read the complete last snapshot.

## Verifying this change

Added a new test that checks that compacted data is not lost.

(cherry picked from commit 1830f90)
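The failure mode can be made concrete with a toy Java model (Java 16+ for records). `CompactionModel`, `Pos`, `Msg`, `readsFromCompactedLedger`, and `compact` are hypothetical names, not Pulsar APIs. The model assumes that reads at or before the compaction horizon are served from the compacted ledger, which is consistent with the two log lines in the Motivation, and that compaction keeps the latest value per key, with a `null` value acting as a deletion marker. It does not reproduce the actual fix; it only illustrates why a compactor that misses the last snapshot produces a much smaller result.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model only: none of these types or methods are Pulsar classes.
public class CompactionModel {

    // A (ledgerId, entryId) position, ordered by ledger first, then entry.
    public record Pos(long ledgerId, long entryId) implements Comparable<Pos> {
        @Override
        public int compareTo(Pos o) {
            int c = Long.compare(ledgerId, o.ledgerId);
            return c != 0 ? c : Long.compare(entryId, o.entryId);
        }
    }

    // A keyed message; a null value is treated as a deletion marker (tombstone).
    public record Msg(String key, String value) {}

    // Assumed routing rule: positions at or before the horizon are served
    // from the compacted ledger, later positions from the original topic.
    public static boolean readsFromCompactedLedger(Pos readPosition, Pos horizon) {
        return readPosition.compareTo(horizon) <= 0;
    }

    // New snapshot = last snapshot + incremental entries, keeping the latest
    // value per key and dropping keys whose latest value is a tombstone.
    public static Map<String, String> compact(Map<String, String> lastSnapshot, List<Msg> incremental) {
        Map<String, String> next = new HashMap<>(lastSnapshot);
        for (Msg m : incremental) {
            if (m.value() == null) {
                next.remove(m.key());
            } else {
                next.put(m.key(), m.value());
            }
        }
        return next;
    }

    public static void main(String[] args) {
        Pos horizon = new Pos(16, -1);
        // Positions taken from the two log lines in the commit message.
        System.out.println(readsFromCompactedLedger(new Pos(-1, -1), horizon)); // true
        System.out.println(readsFromCompactedLedger(new Pos(16, 0), horizon));  // false

        Map<String, String> lastSnapshot = Map.of("a", "1", "b", "2", "c", "3");
        List<Msg> incremental = List.of(new Msg("b", null), new Msg("d", "4"));

        // Complete last snapshot: only key "b" is deleted, keys a, c, d remain.
        System.out.println(compact(lastSnapshot, incremental).size()); // 3
        // Last snapshot skipped: only the incremental key "d" survives.
        System.out.println(compact(Map.of(), incremental).size());     // 1
    }
}
```

With the complete snapshot, one deletion leaves three keys; when the snapshot is skipped, only the single incremental key survives, which resembles the symptom described in the Motivation (a few deletions, but far fewer compacted events).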


1 parent e8a629c, commit 4269f76

Showing 5 changed files with 61 additions and 7 deletions.
