-

Notifications

You must be signed in to change notification settings - Fork 88

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

DISPATCH-1739 Add GitHub Action w/ sharding and bubblewrap #809

Conversation

|

One issue I know of. For some reason, dispatch skips SASL tests, even though I believe I installed all that's needed I also couldn't find a way to use here the artifacts that the Proton pipeline is building. CC @gemmellr |

Or not use -j2? How much of a difference does it make? You commented later its mainly for excercising bwrap and is more useful on Travis.

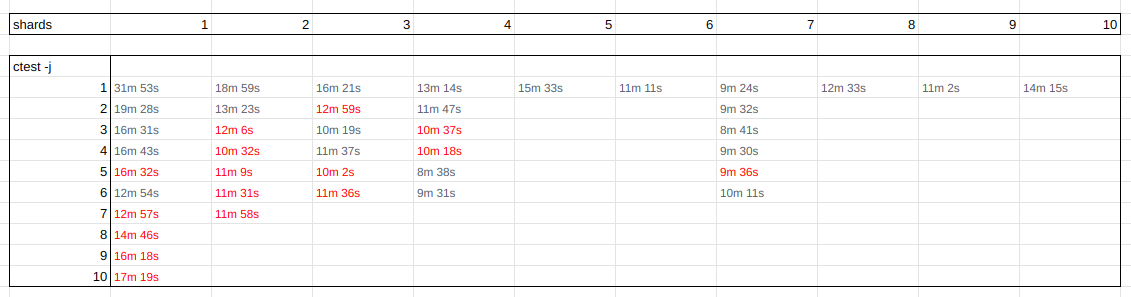

Presumably due to all the setup costs relative to the time spent on tests, or the fact 1 shard is clearly longer than others and maybe still ends up that way? Also, did you try less than 4? I see one of them has a test run around twice as long as most others, so unless all the long-running tests are perfectly spaced to fall in that shard, is it possible having less shards might actually distribute the test time more evenly such that it isnt much slower overall (if at all; it could even be faster if the tests group better), while giving less different output areas to look at (not personally a huge fan of the sharding, significantly complicates the build config and results, may point to some inefficient tests that it seems needed to begin with)

I think thats fine, possibly even preferable. Its not that lengthy a build, and its likely to eventually want different versions as e.g the Travis tests use. |

Probably. I'll leave -DUSE_BWRAP=ON and remove the -j2. On Travis it cuts the total time in half. Here, most time savings is by doing the shards.

Yes, there is about 1 min improvement in total time between 3 and 4. I did not try 2. There is the Python tox test, that is the most lengthy one. It is essentially running the other tests on py27 and then 36. I was thinking of giving it a shard of its own, but it seemed to me overly elaborate. |

26b6fea

to

637b79a

Compare

|

The test times look a fair bit longer in the latest version, presumably thats as much/more due to removing -j2? Did you also try doing that leaving it with 4 shards first, to see the difference each change made? Maybe using 4 (or more :o) would be better if not using the -j2. (I dislike the complexity sharding adds, but if having it then it should at least result in much faster runs to offset that. Right now its probbly barely halving the test time, so the overall job still looks well over half the time of a basic singular run) |

|

I am now thinking of these opportunities for speedup

Having a target goal for the test runtime would help. I am now thinking of aiming for <10 minutes for the ASan instrumented run. Using larger -j values is risky as it increases the probability of spurious failures in the tests, caused by timeouts. Long term, it would help to have more efficient tests. A lot of what's there now is something a QE team would traditionally write; orchestrated end-to-end system tests, except it is better integrated with the build process, and therefore somewhat easier to debug than a stand-alone test suite (possibly running on multiple machines or docker containers) would be. edit: I like splitting the compile and test phases into separate GHA jobs. I think the complexity from that is actually outweighed by the clear separation of the two phases and the knowledge of what precisely is needed to compile, and what files are needed to run the tests, and the ability to fetch the workspace, replicate environment (There is PPA repository with Python3.6 for Focal) and run what tests I want on my machine from sources compiled in Travis. |

Seems reasonable.

Yep, I had just noticed the main build was caching there, and was actually thinking of suggesting maybe the workflows should be combined. Alternatively, ensuring the build isnt duplicated would make sense if its that slow.

Hard to say. In part it depends how often folks are going to use it and actually look at it. Thus far I think most folks rely on their local testing and dont much care about the CI. Though maybe thats as it is slower. It might help if the main developers actually chipped in here.

Fair enough. I lean towards simpler CI thats easier to maintain and more like what people using the source do. I'll admit working on mostly Java stuff means I have things easier, generally having little need to consider replicating environments or using compiled-elsewhere bits. |

Bubblewrap (https://github.com/containers/bubblewrap) is an unprivileged sandbox. It allows running tests in parallel, without any port clashes with already running servers.

637b79a

to

27102c0

Compare

|

Caching npm is not helpful, but caching compiles with ccache is. The Build/Compile step in this PR takes under 2 minutes when the cache is ready. I've checked few combinations of shard count and thread count. I certainly don't want to go higher than -j2, due to spurious failures on timeouts that happen often with more threads. Keeping the shard count low is good for ergonomic, to have small number of PR jobs. So I am going with two shards and two threads, in the end. It runs for about 12 minutes (build + the slower of the shards), which should be OK for now. The equivalent job on Travis takes 30 minutes. Skipping Proton tests/examples won't help much, when ccache is already deployed. Skipping the Console build for ASan GH Action is helpful, it saves almost two minutes of the compile time. Partitioning the tests into shards in an intelligent manner (using knowledge of their past duration) would help a lot, but that adds complications. Maybe next time. |

This is a CI job triggered by pushes and pull requests sent to GitHub. Output artifacts include parts of the workspace before running tests; this is what the machines running test shards get. At the end, each shard outputs a XML CTest report. Note that `ctest -j2` results in test log lines being mixed up in stdout. Refer to the output XML report or use grep (grep for lines starting with the test number) to get understandable log. * Using python 3.6 as this is the version that works on all platforms tested. * Using two shards with -j2, which should balance speed and convenience. * Using ccache w/ 400MB size limit, to avoid purges during the build.

27102c0

to

c824790

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Glad to see you found newlines (between steps), much nicer :)

| uses: actions/upload-artifact@v2 | ||

| if: ${{ ! cancelled() }} | ||

| with: | ||

| name: Test_Results_${{matrix.os}}_${{matrix.buildType}}_${{matrix.shard}} |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If there are to be different runtimeCheck values these will clash. If there arent, maybe drop the related matrix element.

| uses: actions/upload-artifact@v2 | ||

| if: failure() | ||

| with: | ||

| name: cores_${{matrix.os}}_${{matrix.buildType}}_${{matrix.shard}} |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ditto.

|

I just included the runtimeCheck value to the test names, consistent with the workspace filename used, and pushed the change. |

|

GH Action on master is done, while Travis has not even started with rat check ;) Beating Travis at speed is not much of an accomplishment, but I'm happy with the result anyways. |

|

Give it time ;) Everyone will flock to GHA, the apache org might have not have a shared quota big enough to avoid huge projects from causing queues for the rest, and then Travis/other could become more responsive. Repeat cycle. It happens every time something becomes the shiny new CI Env hehe. The ASF Jenkins servers long used to be uselessly overloaded - now they too easily beat Travis / Appveyor most of the time as enough bigger jobs moved away from using Jenkins to using those. Hence why I like having CI jobs in all the envs. Invariably at any given point at least one of them is backlogged, down, or walking wounded, but others are in a better state. |

This is a CI job triggered by pushes and pull requests sent to GitHub.

Output artifacts include parts of the workspace before running tests; this is

what the machines running test shards get. At the end, each shard outputs

a XML CTest report.

Note that

ctest -j2results in test log lines being mixed up in stdout.Refer to the output XML report or use grep (grep for lines starting with

the test number) to get understandable logs.