SINGA-487 Add Sparsification Algorithms #566

Merged

This PR implements some sparsification schemes in which only the significant gradient elements are transferred. The dense array is converted into a sparse array using CUDA Thrust parallel algorithms, so the conversion overhead is relatively low.

It supports two modes, controlled by the flag topK:

Moreover, there is a flag corr that enables correction using the locally accumulated gradient. The flag is true by default, because locally accumulated gradient correction is commonly used in sparsification.

Some reference papers on sparsification:
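To illustrate the idea, here is a minimal NumPy sketch, not the code in this PR. It assumes the two modes are threshold-based selection (as in [1]) and top-K selection (as in [2]), and that correction means carrying the untransmitted part of the gradient over to the next iteration. The function name `sparsify` and its parameters `threshold`, `k`, `top_k` and `corr` are hypothetical and only mirror the flags described above.

```python
import numpy as np


def sparsify(grad, residual, threshold=0.1, k=1, top_k=False, corr=True):
    """Pick the significant gradient elements (illustrative sketch only).

    Returns (indices, values, new_residual). The names and defaults here are
    assumptions for illustration, not the API added by this PR.
    """
    grad = np.asarray(grad, dtype=np.float64)
    residual = np.asarray(residual, dtype=np.float64)

    # With correction, add what was left untransmitted in earlier steps.
    acc = (grad + residual if corr else grad).reshape(-1)

    if top_k:
        # Keep the k elements with the largest absolute value.
        k = max(1, min(k, acc.size))
        idx = np.argpartition(np.abs(acc), acc.size - k)[acc.size - k:]
    else:
        # Keep every element whose absolute value reaches the threshold.
        idx = np.flatnonzero(np.abs(acc) >= threshold)

    idx = np.sort(idx)
    values = acc[idx].copy()

    # Whatever was not selected stays local for the next iteration.
    if corr:
        new_residual = acc.copy()
        new_residual[idx] = 0.0
    else:
        new_residual = np.zeros_like(acc)

    return idx, values, new_residual.reshape(grad.shape)


if __name__ == "__main__":
    g = np.array([0.01, -0.2, 0.03, 0.5])
    r = np.zeros_like(g)
    idx, vals, r = sparsify(g, r, threshold=0.1)
    print(idx, vals, r)
```

Only `idx` and `values` would need to be communicated; with correction enabled, small gradient elements accumulate locally until they become large enough to be selected.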

[1] N. Strom. Scalable distributed DNN training using commodity GPU cloud computing. In Proceedings of InterSpeech 2015. International Speech Communication Association (ISCA), September 2015.

[2] A. F. Aji and K. Heafield. Sparse communication for distributed gradient descent. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing (EMNLP 2017), pages 440-445. Association for Computational Linguistics (ACL), September 2017.

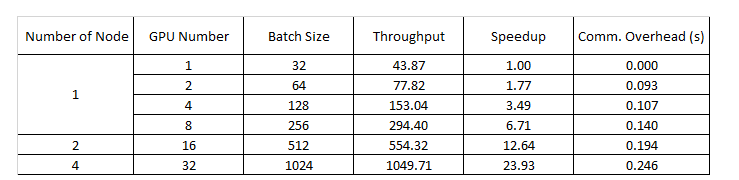

I have added an example file, sparsification_mnist.py, to test the accuracy. The following results are based on an AWS p2.8xlarge instance with 8 K80 GPUs.