[SPARK-28293][SQL] Implement Spark's own GetTableTypesOperation

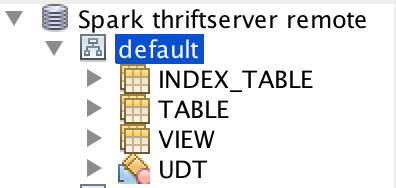

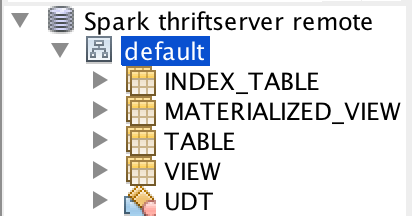

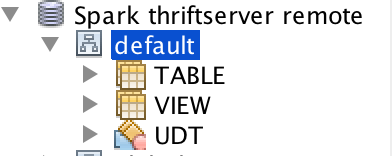

## What changes were proposed in this pull request?

The table types currently come from Hive, which causes some issues. For example, Spark does not support `index_table`, and different Hive versions support different table types (compare a build with Hive 1.2.1 against a build with Hive 2.3.5).

This PR implements Spark's own `GetTableTypesOperation`.

## How was this patch tested?

Unit tests and manual tests.

Closes #25073 from wangyum/SPARK-28293.

Authored-by: Yuming Wang <yumwang@ebay.com>
Signed-off-by: gatorsmile <gatorsmile@gmail.com>

1 parent

167fa04

commit 045191e

Showing 8 changed files with 154 additions and 13 deletions.

85 changes: 85 additions & 0 deletions

...r/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkGetTableTypesOperation.scala

| Original file line number | Diff line number | Diff line change |
|---|---|---|
| | | `@@ -0,0 +1,85 @@` |
| | | `/*` |
| | | ` * Licensed to the Apache Software Foundation (ASF) under one or more` |
| | | ` * contributor license agreements. See the NOTICE file distributed with` |
| | | ` * this work for additional information regarding copyright ownership.` |
| | | ` * The ASF licenses this file to You under the Apache License, Version 2.0` |
| | | ` * (the "License"); you may not use this file except in compliance with` |
| | | ` * the License. You may obtain a copy of the License at` |
| | | ` *` |
| | | ` *    http://www.apache.org/licenses/LICENSE-2.0` |
| | | ` *` |
| | | ` * Unless required by applicable law or agreed to in writing, software` |
| | | ` * distributed under the License is distributed on an "AS IS" BASIS,` |
| | | ` * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.` |
| | | ` * See the License for the specific language governing permissions and` |
| | | ` * limitations under the License.` |
| | | ` */` |
| | | |
| | | `package org.apache.spark.sql.hive.thriftserver` |
| | | |
| | | `import java.util.UUID` |
| | | |
| | | `import org.apache.hadoop.hive.ql.security.authorization.plugin.HiveOperationType` |
| | | `import org.apache.hive.service.cli._` |
| | | `import org.apache.hive.service.cli.operation.GetTableTypesOperation` |
| | | `import org.apache.hive.service.cli.session.HiveSession` |
| | | |
| | | `import org.apache.spark.internal.Logging` |
| | | `import org.apache.spark.sql.SQLContext` |
| | | `import org.apache.spark.sql.catalyst.catalog.CatalogTableType` |
| | | `import org.apache.spark.util.{Utils => SparkUtils}` |
| | | |
| | | `/**` |
| | | ` * Spark's own GetTableTypesOperation` |
| | | ` *` |
| | | ` * @param sqlContext SQLContext to use` |
| | | ` * @param parentSession a HiveSession from SessionManager` |
| | | ` */` |
| | | `private[hive] class SparkGetTableTypesOperation(` |
| | | `    sqlContext: SQLContext,` |
| | | `    parentSession: HiveSession)` |
| | | `  extends GetTableTypesOperation(parentSession) with SparkMetadataOperationUtils with Logging {` |
| | | |
| | | `  private var statementId: String = _` |
| | | |
| | | `  override def close(): Unit = {` |
| | | `    super.close()` |
| | | `    HiveThriftServer2.listener.onOperationClosed(statementId)` |
| | | `  }` |
| | | |
| | | `  override def runInternal(): Unit = {` |
| | | `    statementId = UUID.randomUUID().toString` |
| | | `    val logMsg = "Listing table types"` |
| | | `    logInfo(s"$logMsg with $statementId")` |
| | | `    setState(OperationState.RUNNING)` |
| | | `    // Always use the latest class loader provided by executionHive's state.` |
| | | `    val executionHiveClassLoader = sqlContext.sharedState.jarClassLoader` |
| | | `    Thread.currentThread().setContextClassLoader(executionHiveClassLoader)` |
| | | |
| | | `    if (isAuthV2Enabled) {` |
| | | `      authorizeMetaGets(HiveOperationType.GET_TABLETYPES, null)` |
| | | `    }` |
| | | |
| | | `    HiveThriftServer2.listener.onStatementStart(` |
| | | `      statementId,` |
| | | `      parentSession.getSessionHandle.getSessionId.toString,` |
| | | `      logMsg,` |
| | | `      statementId,` |
| | | `      parentSession.getUsername)` |
| | | |
| | | `    try {` |
| | | `      val tableTypes = CatalogTableType.tableTypes.map(tableTypeString).toSet` |
| | | `      tableTypes.foreach { tableType =>` |
| | | `        rowSet.addRow(Array[AnyRef](tableType))` |
| | | `      }` |
| | | `      setState(OperationState.FINISHED)` |
| | | `    } catch {` |
| | | `      case e: HiveSQLException =>` |
| | | `        setState(OperationState.ERROR)` |
| | | `        HiveThriftServer2.listener.onStatementError(` |
| | | `          statementId, e.getMessage, SparkUtils.exceptionString(e))` |
| | | `        throw e` |
| | | `    }` |
| | | `    HiveThriftServer2.listener.onStatementFinish(statementId)` |
| | | `  }` |
| | | `}` |

34 changes: 34 additions & 0 deletions

...r/src/main/scala/org/apache/spark/sql/hive/thriftserver/SparkMetadataOperationUtils.scala

| Original file line number | Diff line number | Diff line change |
|---|---|---|
| | | `@@ -0,0 +1,34 @@` |
| | | `/*` |
| | | ` * Licensed to the Apache Software Foundation (ASF) under one or more` |
| | | ` * contributor license agreements. See the NOTICE file distributed with` |
| | | ` * this work for additional information regarding copyright ownership.` |
| | | ` * The ASF licenses this file to You under the Apache License, Version 2.0` |
| | | ` * (the "License"); you may not use this file except in compliance with` |
| | | ` * the License. You may obtain a copy of the License at` |
| | | ` *` |
| | | ` *    http://www.apache.org/licenses/LICENSE-2.0` |
| | | ` *` |
| | | ` * Unless required by applicable law or agreed to in writing, software` |
| | | ` * distributed under the License is distributed on an "AS IS" BASIS,` |
| | | ` * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.` |
| | | ` * See the License for the specific language governing permissions and` |
| | | ` * limitations under the License.` |
| | | ` */` |
| | | |
| | | `package org.apache.spark.sql.hive.thriftserver` |
| | | |
| | | `import org.apache.spark.sql.catalyst.catalog.CatalogTableType` |
| | | `import org.apache.spark.sql.catalyst.catalog.CatalogTableType.{EXTERNAL, MANAGED, VIEW}` |
| | | |
| | | `/**` |
| | | ` * Utils for metadata operations.` |
| | | ` */` |
| | | `private[hive] trait SparkMetadataOperationUtils {` |
| | | |
| | | `  def tableTypeString(tableType: CatalogTableType): String = tableType match {` |
| | | `    case EXTERNAL \| MANAGED => "TABLE"` |
| | | `    case VIEW => "VIEW"` |
| | | `    case t =>` |
| | | `      throw new IllegalArgumentException(s"Unknown table type is found: $t")` |
| | | `  }` |
| | | `}` |
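The interplay between `tableTypeString` and the `map(tableTypeString).toSet` call in `runInternal` can be tried outside Spark with a small stand-alone sketch. The `TableType` class and the `TableTypeSketch` object below are hypothetical stand-ins introduced only for illustration; they are not Spark's `CatalogTableType`, and the sketch makes no claim about how that class is defined beyond the three names visible in the diff.

```scala
// Hypothetical stand-in for Spark's CatalogTableType (an assumption for this
// sketch); only the three names that appear in the diff are modelled.
final case class TableType(name: String)

object TableType {
  val EXTERNAL: TableType = TableType("EXTERNAL")
  val MANAGED: TableType = TableType("MANAGED")
  val VIEW: TableType = TableType("VIEW")

  // Mirrors the role of CatalogTableType.tableTypes in the diff.
  val tableTypes: Seq[TableType] = Seq(EXTERNAL, MANAGED, VIEW)
}

object TableTypeSketch {
  import TableType.{EXTERNAL, MANAGED, VIEW}

  // Same shape as tableTypeString in the diff: both EXTERNAL and MANAGED
  // are reported to clients as "TABLE"; anything unrecognised is rejected.
  def tableTypeString(tableType: TableType): String = tableType match {
    case EXTERNAL | MANAGED => "TABLE"
    case VIEW => "VIEW"
    case t =>
      throw new IllegalArgumentException(s"Unknown table type is found: $t")
  }

  // Three catalog types map to two strings, so converting to a Set removes
  // the duplicate "TABLE" before rows are emitted.
  def distinctTypeStrings: Set[String] =
    TableType.tableTypes.map(tableTypeString).toSet

  def main(args: Array[String]): Unit =
    distinctTypeStrings.toSeq.sorted.foreach(println)
}
```

Running `TableTypeSketch.main` prints each distinct type string once, which is why the operation in the diff adds one row per element of the set rather than one row per catalog table type.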
