[SPARK-30312][SQL] Preserve path permission and acl when truncate table

### What changes were proposed in this pull request?

This patch preserves the existing permissions and ACLs of paths when truncating a table or partition.

### Why are the changes needed?

When Spark SQL truncates a table, it deletes the paths of the table or its partitions and then re-creates them. Any permissions or ACLs that were set on those paths are lost in the process.

We should preserve the permissions and ACLs where possible.
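
The capture-then-reapply idea can be sketched with the Hadoop `FileSystem` API. This is a minimal illustration, not the code in this patch: the object name `TruncatePreservingSketch` and the helper `truncatePreserving` are hypothetical, and the sketch assumes `hadoop-common` is on the classpath (as it is in `spark-shell`). It restores only the permission bits. ACLs would follow the same pattern with `getAclStatus` and `setAcl`, but they are left out here because ACL support and entry requirements differ between file systems.

```scala
import scala.util.Try
import org.apache.hadoop.fs.{FileSystem, Path}
import org.apache.hadoop.fs.permission.FsPermission

// Hypothetical helper for illustration; not Spark's actual implementation.
object TruncatePreservingSketch {
  def truncatePreserving(fs: FileSystem, path: Path): Unit = {
    // Capture the permission bits before the path is removed.
    // None if the file system cannot report them.
    val permission: Option[FsPermission] =
      Try(fs.getFileStatus(path).getPermission).toOption

    // Truncate: delete the directory recursively, then re-create it empty.
    fs.delete(path, true)
    fs.mkdirs(path)

    // Re-apply the captured permission on a best-effort basis;
    // a failure here is ignored rather than failing the truncate.
    permission.foreach(p => Try(fs.setPermission(path, p)))
  }
}
```

Calling `TruncatePreservingSketch.truncatePreserving(fs, tablePath)` with a `FileSystem` handle and a table directory (both supplied by the caller) leaves an empty directory that carries the permission bits it had before the truncate.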

### Does this PR introduce any user-facing change?

Yes. When truncating a table or partition, Spark now keeps the permissions and ACLs of the affected paths.

### How was this patch tested?

Unit test.

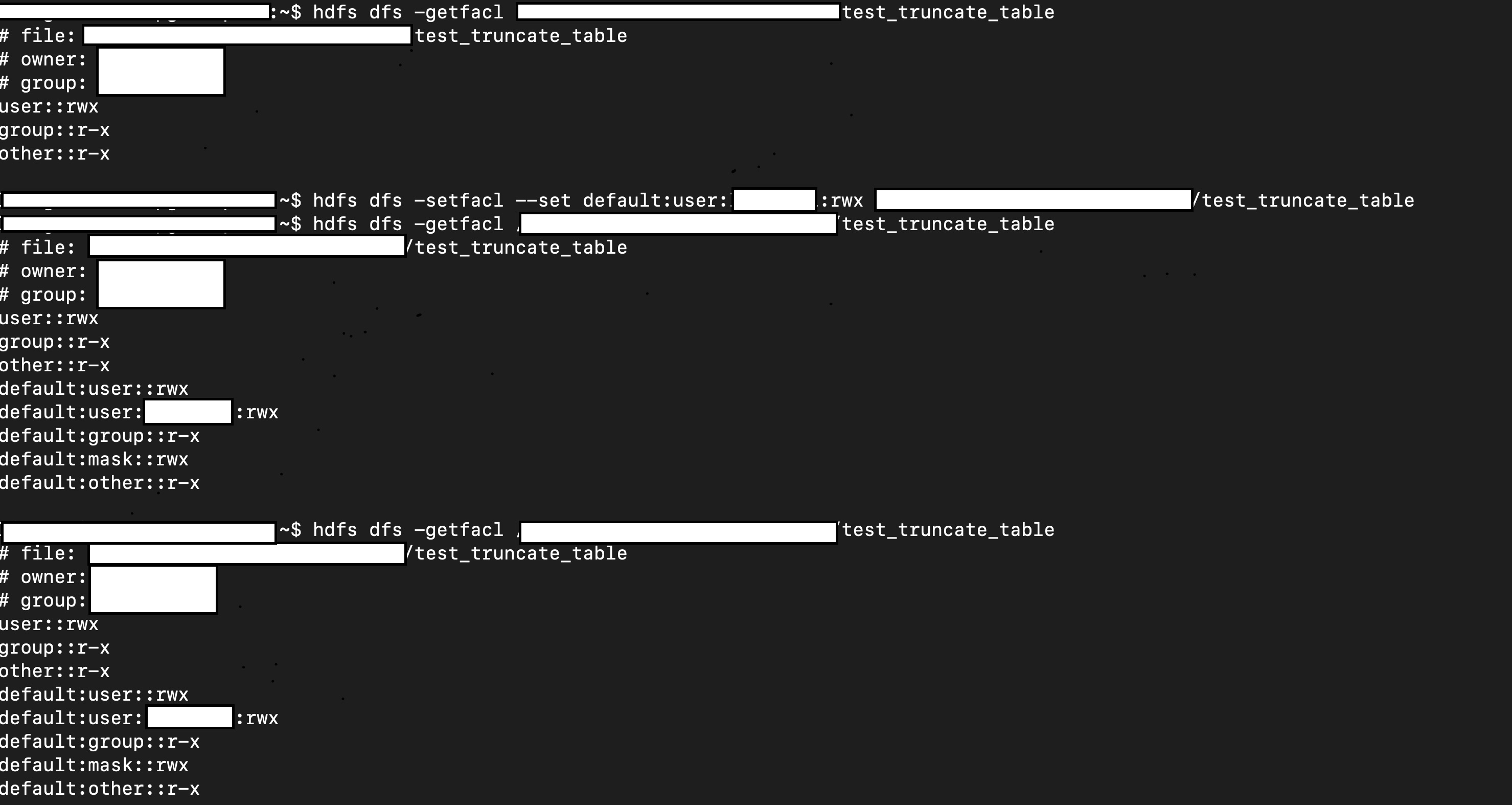

Manual test:

1. Create a table.

2. Manually change its permission/ACL.

3. Truncate the table.

4. Check the permission/ACL.

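```scala
// Run in spark-shell, where `spark`, `sql`, and the implicits for `toDF` are already in scope.
val df = Seq(1, 2, 3).toDF
df.write.mode("overwrite").saveAsTable("test.test_truncate_table")

val testTable = spark.table("test.test_truncate_table")
testTable.show()
// +-----+
// |value|
// +-----+
// |    1|
// |    2|
// |    3|
// +-----+

// Set an ACL on the table path and inspect it before truncating:
// hdfs dfs -setfacl ...
// hdfs dfs -getfacl ...
sql("truncate table test.test_truncate_table")
// Inspect the ACL again after truncating:
// hdfs dfs -getfacl ...

val testTable2 = spark.table("test.test_truncate_table")
testTable2.show()
// +-----+
// |value|
// +-----+
// +-----+
```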

Closes #26956 from viirya/truncate-table-permission.

Lead-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Co-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

1 parent 7fb17f5, commit b5bc3e1

Showing 3 changed files with 136 additions and 1 deletion.
