
[SPARK-25135][SQL] Insert datasource table may all null when select from view #22124


Test build #94861 has finished for PR 22124 at commit


| @@ -490,7 +490,8 @@ object DDLPreprocessingUtils { | |||

| case (expected, actual) => | |||

| if (expected.dataType.sameType(actual.dataType) && | |||

| expected.name == actual.name && | |||

| expected.metadata == actual.metadata) { | |||

| expected.metadata == actual.metadata && | |||

| expected.exprId.id == actual.exprId.id) { | |||


Why does this fix the problem?


This is not a correct change. Please ignore it.

| @@ -901,6 +901,12 @@ class Analyzer( | |||

| // If the projection list contains Stars, expand it. | |||

| case p: Project if containsStar(p.projectList) => | |||

| p.copy(projectList = buildExpandedProjectList(p.projectList, p.child)) | |||

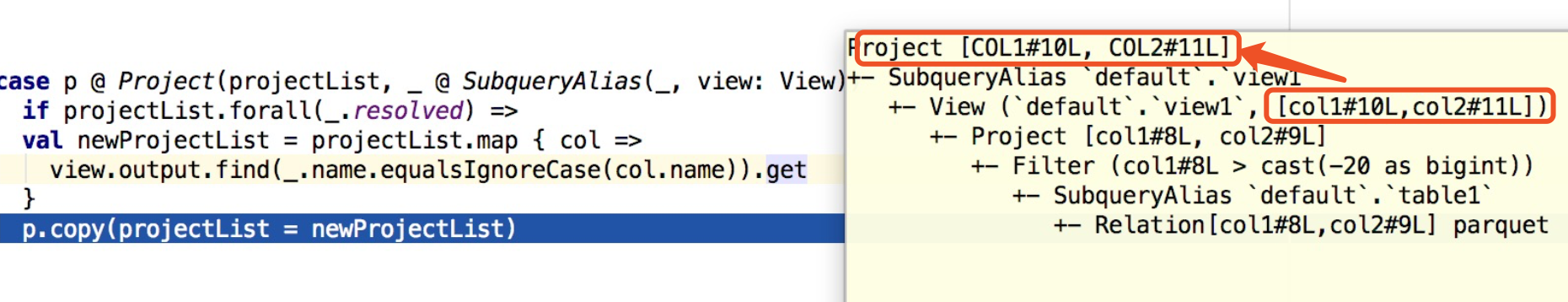

| case p @ Project(projectList, _ @ SubqueryAlias(_, view: View)) | |||


Can you explain how this bug happens and what the root cause is?

Thanks @cloud-fan, I updated the description.

Test build #94882 has finished for PR 22124 at commit

Why are the parquet file columns lower-cased? The root project has upper-cased names, doesn't it?

The root project should be consistent with the schema of the target table, but it is currently inconsistent. Before this PR: After this PR: Before SPARK-22834
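To illustrate why a lower-cased column name in the written file can surface as all-null values, here is a small self-contained sketch. It is a toy model, not Spark or Parquet code: `CaseSensitiveRead`, `FileColumns`, and `readColumn` are hypothetical names, and the assumption that a reader resolves columns by exact name and returns nulls on a miss is mine, not something stated in this thread.

```scala
object CaseSensitiveRead {
  // Hypothetical stand-in for a data file: physical column name -> values.
  type FileColumns = Map[String, Seq[Long]]

  /** Reads `column` for `rowCount` rows; a name miss yields nulls (None). */
  def readColumn(
      file: FileColumns,
      column: String,
      rowCount: Int,
      caseSensitive: Boolean): Seq[Option[Long]] = {
    val hit: Option[Seq[Long]] =
      if (caseSensitive) file.get(column)
      else file.collectFirst {
        case (name, values) if name.equalsIgnoreCase(column) => values
      }
    hit match {
      case Some(values) => values.map(v => Option(v))
      case None         => Seq.fill(rowCount)(None)
    }
  }

  def main(args: Array[String]): Unit = {
    // The file was written with the view's lower-cased name,
    // while the table schema declares COL1.
    val written: FileColumns = Map("col1" -> Seq(0L, 1L, 2L))
    println(readColumn(written, "COL1", 3, caseSensitive = true))
    println(readColumn(written, "COL1", 3, caseSensitive = false))
  }
}
```

Under that assumption, the exact-name lookup misses `COL1` and every row comes back empty, while the case-insensitive lookup finds the data.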

Test build #94921 has finished for PR 22124 at commit

Can you point out where in the codebase the inconsistency comes from?

Hi @wangyum, thanks for working on this.

| def apply(plan: LogicalPlan): LogicalPlan = { | ||

| plan match { | ||

| case c: Command => c | ||

| case _ => removeRedundantAliases(plan, AttributeSet.empty) |
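The shape of this guard can be sketched in isolation. The following is a toy model under stated assumptions, not the Spark rule: `SkipCommands`, `Plan`, `Project`, `Command`, and `rewriteNames` are hypothetical stand-ins, and `rewriteNames` only imitates a rewrite that may change output column names.

```scala
object SkipCommands {
  sealed trait Plan
  final case class Project(output: Seq[String]) extends Plan
  final case class Command(outputColumns: Seq[String], query: Plan) extends Plan

  // Hypothetical stand-in for the rewrite: it may rename output columns.
  def rewriteNames(plan: Plan): Plan = plan match {
    case Project(out)        => Project(out.map(_.toLowerCase))
    case Command(out, query) => Command(out.map(_.toLowerCase), rewriteNames(query))
  }

  // Same shape as the guarded `apply` in the diff:
  // a plan rooted at a command passes through untouched.
  def apply(plan: Plan): Plan = plan match {
    case c: Command => c
    case other      => rewriteNames(other)
  }

  def main(args: Array[String]): Unit = {
    println(apply(Command(Seq("COL1"), Project(Seq("col1")))))
    println(apply(Project(Seq("COL1"))))
  }
}
```

In this model the command keeps its `COL1` output name because the rewrite never runs on it. The trade-off is that the query underneath the command is skipped by the rewrite as well.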


I don't get it. For the query

*(1) Project [col1#8L AS COL1#14L, col2#9L AS COL2#15L]

+- *(1) Filter (isnotnull(col1#8L) && (col1#8L > -20))

+- *(1) FileScan parquet default.table1[col1#8L,col2#9L] Batched: true, Format: Parquet, Location: InMemoryFileIndex[file:/tmp/yumwang/spark/parquet], PartitionFilters: [], PushedFilters: [IsNotNull(col1), GreaterThan(col1,-20)], ReadSchema: struct<col1:bigint,col2:bigint>

Why is the alias treated as redundant? The name does change, doesn't it?


Yes, this is correct. Without this PR, RemoveRedundantAliases works like this:

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.RemoveRedundantAliases ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-ae504f50-9543-49fb-a InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-ae504f50-9543-49fb-acf

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 03:03:52 PDT 2018 Created Time: Mon Aug 20 03:03:52 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: hive Provider: hive

Table Properties: [transient_lastDdlTime=1534759432] Table Properties: [transient_lastDdlTime=1534759432]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-ae504f50-9543-49fb-acf0-8b2736665d26/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-ae504f50-9543-49fb-acf0-8b2736665d26/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Partition Provider: Catalog Partition Provider: Catalog

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

-- COL2: long (nullable = true) |-- COL2: long (nullable = true)

!), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@60582d55, [COL1#10L, COL2#11L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@60582d55, [col1#8L, col2#9L]

!+- Project [col1#8L AS col1#10L, col2#9L AS col2#11L] +- Project [col1#8L, col2#9L]

+- Filter (col1#8L > -20) +- Filter (col1#8L > -20)

+- Relation[col1#8L,col2#9L] parquet +- Relation[col1#8L,col2#9L] parquet
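The change in the log above can be reproduced with a small self-contained model. This is a simplified sketch, not Spark's implementation: `RedundantAliasDemo`, `Attr`, `Alias`, `isRedundant`, and `rewrite` are hypothetical names, and the assumption is that an alias counts as redundant when it keeps the child's exact name and that references are then remapped by expression id alone.

```scala
object RedundantAliasDemo {
  final case class Attr(name: String, id: Long) {
    override def toString: String = s"${name}#${id}"
  }
  final case class Alias(child: Attr, name: String, id: Long) {
    def toAttr: Attr = Attr(name, id)
  }

  // Simplified redundancy test: the alias keeps the child's exact name.
  def isRedundant(a: Alias): Boolean = a.name == a.child.name

  /** Drops redundant aliases, then remaps references by expression id only. */
  def rewrite(
      projectList: Seq[Alias],
      outputColumns: Seq[Attr]): (Seq[Attr], Seq[Attr]) = {
    val replaced: Map[Long, Attr] =
      projectList.filter(isRedundant).map(a => a.id -> a.child).toMap
    val newProject = projectList.map(a => replaced.getOrElse(a.id, a.toAttr))
    // An output column that shares the alias id takes over the child's name too.
    val newOutput = outputColumns.map(c => replaced.getOrElse(c.id, c))
    (newProject, newOutput)
  }

  def main(args: Array[String]): Unit = {
    // Ids and names taken from the plan shown in the log.
    val project = Seq(
      Alias(Attr("col1", 8), "col1", 10),
      Alias(Attr("col2", 9), "col2", 11))
    val output = Seq(Attr("COL1", 10), Attr("COL2", 11))
    val (newProject, newOutput) = rewrite(project, output)
    println(newProject.mkString("Project [", ", ", "]"))
    println(newOutput.mkString("[", ", ", "]"))
  }
}
```

In this model the alias `col1#8 AS col1#10` looks redundant because the names match, yet the command's output column `COL1#10` differs from it only by case. Remapping by id then replaces `COL1#10` with `col1#8`, which is the upper-case to lower-case change visible on the command line of the log.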


For example:

val path = "/tmp/spark/parquet"

val cnt = 30

spark.range(cnt).selectExpr("id as col1").write.mode("overwrite").parquet(path)

spark.sql(s"CREATE TABLE table1(col1 bigint) using parquet location '$path'")

spark.sql("create view view1 as select col1 from table1 where col1 > -20")

// The column name of table2 is inconsistent with the column name of view1.

spark.sql("create table table2 (COL1 BIGINT) using parquet")

// When querying the view, ensure that the column name of the query matches the column name of the target table.

spark.sql("insert overwrite table table2 select COL1 from view1")

The execution plan changes are tracked below:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

!'Project ['id AS col1#2] Project [id#0L AS col1#2L]

+- Range (0, 30, step=1, splits=Some(1)) +- Range (0, 30, step=1, splits=Some(1))

17:02:55.061 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.CleanupAliases ===

Project [id#0L AS col1#2L] Project [id#0L AS col1#2L]

+- Range (0, 30, step=1, splits=Some(1)) +- Range (0, 30, step=1, splits=Some(1))

17:02:59.174 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.execution.datasources.DataSourceAnalysis ===

!'CreateTable `table1`, ErrorIfExists CreateDataSourceTableCommand `table1`, false

17:02:59.909 WARN org.apache.hadoop.hive.metastore.ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

17:03:00.094 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations ===

'Project ['col1] 'Project ['col1]

+- 'Filter ('col1 > -20) +- 'Filter ('col1 > -20)

! +- 'UnresolvedRelation `table1` +- 'SubqueryAlias `default`.`table1`

! +- 'UnresolvedCatalogRelation `default`.`table1`, org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

17:03:00.254 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.execution.datasources.FindDataSourceTable ===

'Project ['col1] 'Project ['col1]

+- 'Filter ('col1 > -20) +- 'Filter ('col1 > -20)

! +- 'SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

! +- 'UnresolvedCatalogRelation `default`.`table1`, org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe +- Relation[col1#5L] parquet

17:03:00.267 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

'Project ['col1] 'Project ['col1]

!+- 'Filter ('col1 > -20) +- 'Filter (col1#5L > -20)

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.306 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.TypeCoercion$ImplicitTypeCasts ===

'Project ['col1] 'Project ['col1]

!+- 'Filter (col1#5L > -20) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.309 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

!'Project ['col1] Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.314 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.ResolveTimeZone ===

Project [col1#5L] Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.383 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.execution.datasources.DataSourceAnalysis ===

!'CreateTable `table2`, ErrorIfExists CreateDataSourceTableCommand `table2`, false

17:03:00.729 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations ===

'Project ['col1] 'Project ['col1]

+- 'Filter ('col1 > -20) +- 'Filter ('col1 > -20)

! +- 'UnresolvedRelation `table1` +- 'SubqueryAlias `default`.`table1`

! +- 'UnresolvedCatalogRelation `default`.`table1`, org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

17:03:00.730 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.execution.datasources.FindDataSourceTable ===

'Project ['col1] 'Project ['col1]

+- 'Filter ('col1 > -20) +- 'Filter ('col1 > -20)

! +- 'SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

! +- 'UnresolvedCatalogRelation `default`.`table1`, org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe +- Relation[col1#5L] parquet

17:03:00.731 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

'Project ['col1] 'Project ['col1]

!+- 'Filter ('col1 > -20) +- 'Filter (col1#5L > -20)

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.734 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.TypeCoercion$ImplicitTypeCasts ===

'Project ['col1] 'Project ['col1]

!+- 'Filter (col1#5L > -20) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.735 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

!'Project ['col1] Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.737 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.ResolveTimeZone ===

Project [col1#5L] Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.742 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations ===

'InsertIntoTable 'UnresolvedRelation `table2`, true, false 'InsertIntoTable 'UnresolvedRelation `table2`, true, false

+- 'Project ['COL1] +- 'Project ['COL1]

! +- 'UnresolvedRelation `view1` +- SubqueryAlias `default`.`view1`

! +- View (`default`.`view1`, [col1#6L])

! +- Project [col1#5L]

! +- Filter (col1#5L > cast(-20 as bigint))

! +- SubqueryAlias `default`.`table1`

! +- Relation[col1#5L] parquet

17:03:00.744 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

'InsertIntoTable 'UnresolvedRelation `table2`, true, false 'InsertIntoTable 'UnresolvedRelation `table2`, true, false

!+- 'Project ['COL1] +- Project [COL1#6L]

+- SubqueryAlias `default`.`view1` +- SubqueryAlias `default`.`view1`

+- View (`default`.`view1`, [col1#6L]) +- View (`default`.`view1`, [col1#6L])

+- Project [col1#5L] +- Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.768 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveRelations ===

!'InsertIntoTable 'UnresolvedRelation `table2`, true, false 'InsertIntoTable 'UnresolvedCatalogRelation `default`.`table2`, org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe, true, false

+- Project [COL1#6L] +- Project [COL1#6L]

+- SubqueryAlias `default`.`view1` +- SubqueryAlias `default`.`view1`

+- View (`default`.`view1`, [col1#6L]) +- View (`default`.`view1`, [col1#6L])

+- Project [col1#5L] +- Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:00.852 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.execution.datasources.FindDataSourceTable ===

!'InsertIntoTable 'UnresolvedCatalogRelation `default`.`table2`, org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe, true, false 'InsertIntoTable Relation[COL1#7L] parquet, true, false

+- Project [COL1#6L] +- Project [COL1#6L]

+- SubqueryAlias `default`.`view1` +- SubqueryAlias `default`.`view1`

+- View (`default`.`view1`, [col1#6L]) +- View (`default`.`view1`, [col1#6L])

+- Project [col1#5L] +- Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

DataSourceStrategy 1:COL1#8L

DataSourceStrategy 2:COL1#6L

17:03:00.896 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.execution.datasources.DataSourceAnalysis ===

!'InsertIntoTable Relation[COL1#7L] parquet, true, false InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

!+- Project [COL1#6L] Database: default

! +- SubqueryAlias `default`.`view1` Table: table2

! +- View (`default`.`view1`, [col1#6L]) Owner: yumwang

! +- Project [col1#5L] Created Time: Mon Aug 20 17:03:00 PDT 2018

! +- Filter (col1#5L > cast(-20 as bigint)) Last Access: Wed Dec 31 16:00:00 PST 1969

! +- SubqueryAlias `default`.`table1` Created By: Spark 2.4.0-SNAPSHOT

! +- Relation[col1#5L] parquet Type: MANAGED

! Provider: parquet

! Table Properties: [transient_lastDdlTime=1534809780]

! Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

! Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

! InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

! OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

! Storage Properties: [serialization.format=1]

! Schema: root

! |-- COL1: long (nullable = true)

! ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

! +- Project [COL1#6L]

! +- SubqueryAlias `default`.`view1`

! +- View (`default`.`view1`, [col1#6L])

! +- Project [col1#5L]

! +- Filter (col1#5L > cast(-20 as bigint))

! +- SubqueryAlias `default`.`table1`

! +- Relation[col1#5L] parquet

17:03:00.916 WARN org.apache.spark.sql.hive.HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.AliasViewChild ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

+- Project [COL1#6L] +- Project [COL1#6L]

+- SubqueryAlias `default`.`view1` +- SubqueryAlias `default`.`view1`

+- View (`default`.`view1`, [col1#6L]) +- View (`default`.`view1`, [col1#6L])

! +- Project [col1#5L] +- Project [cast(col1#5L as bigint) AS col1#6L]

! +- Filter (col1#5L > cast(-20 as bigint)) +- Project [col1#5L]

! +- SubqueryAlias `default`.`table1` +- Filter (col1#5L > cast(-20 as bigint))

! +- Relation[col1#5L] parquet +- SubqueryAlias `default`.`table1`

! +- Relation[col1#5L] parquet

yumwang123:COL1#6L

17:03:00.949 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.EliminateSubqueryAliases ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

+- Project [COL1#6L] +- Project [COL1#6L]

! +- SubqueryAlias `default`.`view1` +- View (`default`.`view1`, [col1#6L])

! +- View (`default`.`view1`, [col1#6L]) +- Project [cast(col1#5L as bigint) AS col1#6L]

! +- Project [cast(col1#5L as bigint) AS col1#6L] +- Project [col1#5L]

! +- Project [col1#5L] +- Filter (col1#5L > cast(-20 as bigint))

! +- Filter (col1#5L > cast(-20 as bigint)) +- Relation[col1#5L] parquet

! +- SubqueryAlias `default`.`table1`

! +- Relation[col1#5L] parquet

17:03:00.959 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.EliminateView ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

+- Project [COL1#6L] +- Project [COL1#6L]

! +- View (`default`.`view1`, [col1#6L]) +- Project [cast(col1#5L as bigint) AS col1#6L]

! +- Project [cast(col1#5L as bigint) AS col1#6L] +- Project [col1#5L]

! +- Project [col1#5L] +- Filter (col1#5L > cast(-20 as bigint))

! +- Filter (col1#5L > cast(-20 as bigint)) +- Relation[col1#5L] parquet

! +- Relation[col1#5L] parquet

17:03:00.975 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.ColumnPruning ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

!+- Project [COL1#6L] +- Project [cast(col1#5L as bigint) AS col1#6L]

! +- Project [cast(col1#5L as bigint) AS col1#6L] +- Filter (col1#5L > cast(-20 as bigint))

! +- Project [col1#5L] +- Relation[col1#5L] parquet

! +- Filter (col1#5L > cast(-20 as bigint))

! +- Relation[col1#5L] parquet

17:03:00.980 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.ConstantFolding ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

+- Project [cast(col1#5L as bigint) AS col1#6L] +- Project [cast(col1#5L as bigint) AS col1#6L]

! +- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > -20)

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:01.047 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.SimplifyCasts ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

!+- Project [cast(col1#5L as bigint) AS col1#6L] +- Project [col1#5L AS col1#6L]

+- Filter (col1#5L > -20) +- Filter (col1#5L > -20)

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:01.058 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.RemoveRedundantAliases ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

!), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L]

!+- Project [col1#5L AS col1#6L] +- Project [col1#5L]

+- Filter (col1#5L > -20) +- Filter (col1#5L > -20)

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

17:03:01.061 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.ColumnPruning ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L]

!+- Project [col1#5L] +- Filter (col1#5L > -20)

! +- Filter (col1#5L > -20) +- Relation[col1#5L] parquet

! +- Relation[col1#5L] parquet

17:03:01.116 WARN org.apache.spark.sql.internal.BaseSessionStateBuilder$$anon$2:

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.InferFiltersFromConstraints ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L]

!+- Filter (col1#5L > -20) +- Filter (isnotnull(col1#5L) && (col1#5L > -20))

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

queryExecution:== Parsed Logical Plan ==

'InsertIntoTable 'UnresolvedRelation `table2`, true, false

+- 'Project ['COL1]

+- 'UnresolvedRelation `view1`

== Analyzed Logical Plan ==

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default

Table: table2

Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED

Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1]

Schema: root

-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

+- Project [COL1#6L]

+- SubqueryAlias `default`.`view1`

+- View (`default`.`view1`, [col1#6L])

+- Project [cast(col1#5L as bigint) AS col1#6L]

+- Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet

== Optimized Logical Plan ==

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default

Table: table2

Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED

Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1]

Schema: root

-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L]

+- Filter (isnotnull(col1#5L) && (col1#5L > -20))

+- Relation[col1#5L] parquet

== Physical Plan ==

Execute InsertIntoHadoopFsRelationCommand InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default

Table: table2

Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED

Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1]

Schema: root

-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L]

+- *(1) Project [col1#5L]

+- *(1) Filter (isnotnull(col1#5L) && (col1#5L > -20))

+- *(1) FileScan parquet default.table1[col1#5L] Batched: true, Format: Parquet, Location: InMemoryFileIndex[file:/tmp/spark/parquet], PartitionFilters: [], PushedFilters: [IsNotNull(col1), GreaterThan(col1,-20)], ReadSchema: struct<col1:bigint>

The main 3 changes are:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

'InsertIntoTable 'UnresolvedRelation `table2`, true, false 'InsertIntoTable 'UnresolvedRelation `table2`, true, false

!+- 'Project ['COL1] +- Project [COL1#6L]

+- SubqueryAlias `default`.`view1` +- SubqueryAlias `default`.`view1`

+- View (`default`.`view1`, [col1#6L]) +- View (`default`.`view1`, [col1#6L])

+- Project [col1#5L] +- Project [col1#5L]

+- Filter (col1#5L > cast(-20 as bigint)) +- Filter (col1#5L > cast(-20 as bigint))

+- SubqueryAlias `default`.`table1` +- SubqueryAlias `default`.`table1`

+- Relation[col1#5L] parquet +- Relation[col1#5L] parquet

=== Applying Rule org.apache.spark.sql.catalyst.analysis.AliasViewChild ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L]

+- Project [COL1#6L] +- Project [COL1#6L]

+- SubqueryAlias `default`.`view1` +- SubqueryAlias `default`.`view1`

+- View (`default`.`view1`, [col1#6L]) +- View (`default`.`view1`, [col1#6L])

! +- Project [col1#5L] +- Project [cast(col1#5L as bigint) AS col1#6L]

! +- Filter (col1#5L > cast(-20 as bigint)) +- Project [col1#5L]

! +- SubqueryAlias `default`.`table1` +- Filter (col1#5L > cast(-20 as bigint))

! +- Relation[col1#5L] parquet +- SubqueryAlias `default`.`table1`

! +- Relation[col1#5L] parquet

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.RemoveRedundantAliases ===

InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable( InsertIntoHadoopFsRelationCommand file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2, false, Parquet, Map(serialization.format -> 1, path -> file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2), Overwrite, CatalogTable(

Database: default Database: default

Table: table2 Table: table2

Owner: yumwang Owner: yumwang

Created Time: Mon Aug 20 17:03:00 PDT 2018 Created Time: Mon Aug 20 17:03:00 PDT 2018

Last Access: Wed Dec 31 16:00:00 PST 1969 Last Access: Wed Dec 31 16:00:00 PST 1969

Created By: Spark 2.4.0-SNAPSHOT Created By: Spark 2.4.0-SNAPSHOT

Type: MANAGED Type: MANAGED

Provider: parquet Provider: parquet

Table Properties: [transient_lastDdlTime=1534809780] Table Properties: [transient_lastDdlTime=1534809780]

Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2 Location: file:/private/var/folders/tg/f5mz46090wg7swzgdc69f8q03965_0/T/warehouse-04d554d2-7ddb-4e13-b065-164afe065972/table2

Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe Serde Library: org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe

InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat InputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat OutputFormat: org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat

Storage Properties: [serialization.format=1] Storage Properties: [serialization.format=1]

Schema: root Schema: root

-- COL1: long (nullable = true) |-- COL1: long (nullable = true)

!), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [COL1#6L] ), org.apache.spark.sql.execution.datasources.InMemoryFileIndex@c613921e, [col1#5L]

!+- Project [col1#5L AS col1#6L] +- Project [col1#5L]

+- Filter (col1#5L > -20) +- Filter (col1#5L > -20)

+- Relation[col1#5L] parquet

We need COL1#6L, but after some optimization, the outputColumns changed to col1#5L.
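The effect described above can be modeled without Spark. The following is a minimal sketch, not Catalyst's implementation: `Attr`, `Alias`, `isRedundant`, and `removeRedundantAliases` are hypothetical stand-ins, and the redundancy test (alias name equals child name) is an assumption made for illustration. It shows how rewriting references by expression id alone can replace the `COL1` spelling with the underlying `col1`.

```scala
object AliasRemovalSketch {
  // Hypothetical stand-ins for Catalyst's attribute and alias expressions.
  final case class Attr(name: String, exprId: Long) {
    override def toString: String = s"${name}#${exprId}L"
  }
  final case class Alias(child: Attr, name: String, exprId: Long)

  // Assumption: an alias counts as redundant when it does not change the name.
  def isRedundant(alias: Alias): Boolean = alias.name == alias.child.name

  // Drop redundant aliases and repoint references to them at the child attribute.
  // References are matched by exprId only, so the reference's own spelling is lost.
  def removeRedundantAliases(output: Seq[Attr], aliases: Seq[Alias]): Seq[Attr] = {
    val replacements: Map[Long, Attr] =
      aliases.filter(isRedundant).map(a => a.exprId -> a.child).toMap
    output.map(attr => replacements.getOrElse(attr.exprId, attr))
  }

  def main(args: Array[String]): Unit = {
    val tableColumn = Attr("col1", 5L)               // Relation[col1#5L]
    val viewAlias   = Alias(tableColumn, "col1", 6L) // col1#5L AS col1#6L
    val analyzed    = Seq(Attr("COL1", 6L))          // Project [COL1#6L]

    val optimized = removeRedundantAliases(analyzed, Seq(viewAlias))
    println(s"analyzed output:  ${analyzed.mkString(", ")}")
    println(s"optimized output: ${optimized.mkString(", ")}")
  }
}
```

In this model the analyzed output keeps the name `COL1`, while the optimized output carries `col1`, which mirrors the change from `[COL1#6L]` to `[col1#5L]` in the trace.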

Test build #94948 has finished for PR 22124 at commit

Test build #94955 has finished for PR 22124 at commit

# Conflicts: # sql/core/src/test/scala/org/apache/spark/sql/SQLQuerySuite.scala


@wangyum please don't rush into code changes, it's more efficient to come up with a good solution before doing any coding work.

This is the key. We must find out which optimizer rule caused it and how.

Test build #95006 has finished for PR 22124 at commit

Closing this. I have created a new PR.

What changes were proposed in this pull request?

How to reproduce:

The root cause appears when inserting into a table from a query that contains a view. For example:

The execution plan is optimized to:

At this point `outputColumns` is `col1#5L`, so Spark uses `col1` as the column name in the Parquet file.

Before SPARK-22834, `allColumns` was `queryExecution.analyzed.output`, which is not optimized.

I have three ways to solve this issue:

1. Read the column name in the view with the actual column name. But it is difficult to handle all cases. Please see the third commit.
2. Do not remove redundant aliases if the plan is a `Command`, because the alias may be useful.
3. Change this line from `case a if resolver(a.name, name) => a.withName(name)` to `case a if resolver(a.name, name) => a`. But this change will cause some test failures:

This PR uses the second way to fix this issue.
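The second option can be illustrated with a small model. This is a sketch under stated assumptions, not the patch itself: `Plan`, `Query`, `Command`, and `removeRedundantAliases` below are hypothetical types and names that only imitate the idea of skipping alias removal when the plan is a command.

```scala
object KeepAliasForCommandSketch {
  // Hypothetical stand-ins; none of these are Spark classes.
  final case class Attr(name: String, exprId: Long)
  final case class Alias(child: Attr, name: String, exprId: Long)

  sealed trait Plan {
    def output: Seq[Attr]
    def aliases: Seq[Alias]
  }
  final case class Query(output: Seq[Attr], aliases: Seq[Alias]) extends Plan
  final case class Command(output: Seq[Attr], aliases: Seq[Alias]) extends Plan

  // Assumption: an alias counts as redundant when it does not change the name.
  private def isRedundant(a: Alias): Boolean = a.name == a.child.name

  def removeRedundantAliases(plan: Plan): Plan = plan match {
    // Option 2: leave commands untouched, since the alias may carry the
    // column name that the command is going to write.
    case command: Command => command
    case Query(output, aliases) =>
      val replacements = aliases.filter(isRedundant).map(a => a.exprId -> a.child).toMap
      Query(output.map(a => replacements.getOrElse(a.exprId, a)), aliases.filterNot(isRedundant))
  }

  def main(args: Array[String]): Unit = {
    val viewAlias = Alias(Attr("col1", 5L), "col1", 6L)
    val wanted    = Seq(Attr("COL1", 6L))

    val asQuery   = removeRedundantAliases(Query(wanted, Seq(viewAlias)))
    val asCommand = removeRedundantAliases(Command(wanted, Seq(viewAlias)))
    println(s"query output names:   ${asQuery.output.map(_.name).mkString(", ")}")
    println(s"command output names: ${asCommand.output.map(_.name).mkString(", ")}")
  }
}
```

In this model the plain query ends up with the name `col1`, while the command keeps `COL1`. The trade-off of such an approach is that commands miss the alias cleanup that other plans receive.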

How was this patch tested?

unit tests