Branch 2.4 #22860

Closed


## What changes were proposed in this pull request?

SPARK-10399 introduced a performance regression in the hash computation for UTF8String. The regression can be measured with the code attached to the JIRA. On my laptop (MacBook Pro, 2.5 GHz Intel Core i7, 16 GB 1600 MHz DDR3 RAM), that code runs in about 120 us per method, while the code from branch 2.3 takes about 45 us on the same machine. With this PR, the master branch also takes about 45 us.

## How was this patch tested?

Ran the perf test from the JIRA.

Closes #22338 from mgaido91/SPARK-25317.
Authored-by: Marco Gaido <marcogaido91@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 64c314e)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…lization Exception

## What changes were proposed in this pull request?

`mapValues` in Scala is currently not serializable. To avoid the serialization issue while running PageRank, we need to use `map` instead of `mapValues`.

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #22271 from shahidki31/master_latest.
Authored-by: Shahid <shahidki31@gmail.com>
Signed-off-by: Joseph K. Bradley <joseph@databricks.com>
(cherry picked from commit 3b6591b)
Signed-off-by: Joseph K. Bradley <joseph@databricks.com>
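A minimal sketch of the `map`-instead-of-`mapValues` pattern, assuming plain Java serialization as the test harness (Spark's default `JavaSerializer` builds on it). In the Scala versions Spark 2.4 builds against, `mapValues` returns a lazy view over the original map rather than a new map, which is what trips serialization; `map` builds a strict immutable `Map` instead. The object and method names here are hypothetical, and this is not the PageRank code itself.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

object MapValuesSerialization {
  // Round-trips a value through Java serialization, the mechanism that
  // Spark's default JavaSerializer builds on.
  def roundTrip[T](value: T): T = {
    val bytes = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(bytes)
    try out.writeObject(value) finally out.close()
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray))
    try in.readObject().asInstanceOf[T] finally in.close()
  }

  // Eager replacement for `ranks.mapValues(_ * factor)`: `map` builds a new,
  // strict immutable Map instead of a lazy view over the original one.
  def scaleValues(ranks: Map[Long, Double], factor: Double): Map[Long, Double] =
    ranks.map { case (id, rank) => (id, rank * factor) }

  def main(args: Array[String]): Unit = {
    val scaled = scaleValues(Map(1L -> 1.0, 2L -> 0.5), 0.85)
    println(roundTrip(scaled) == scaled) // true
  }
}
```

The round trip succeeds because the result of `map` is an ordinary immutable map, so nothing lazy (and no captured closure) has to travel with it.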

…ouping key in group aggregate pandas UDF

## What changes were proposed in this pull request?

This PR proposes to add another example with multiple grouping keys for group aggregate pandas UDFs, since users may still find this feature confusing.

## How was this patch tested?

Manually tested, and the documentation was built.

Closes #22329 from HyukjinKwon/SPARK-25328.
Authored-by: hyukjinkwon <gurwls223@apache.org>
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>
(cherry picked from commit 7ef6d1d)
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

Add a value length check in `_create_row` to forbid extra values for a custom Row in PySpark.

## How was this patch tested?

New unit tests in pyspark-sql.

Closes #22140 from xuanyuanking/SPARK-25072.
Lead-authored-by: liyuanjian <liyuanjian@baidu.com>
Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>
(cherry picked from commit c84bc40)
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

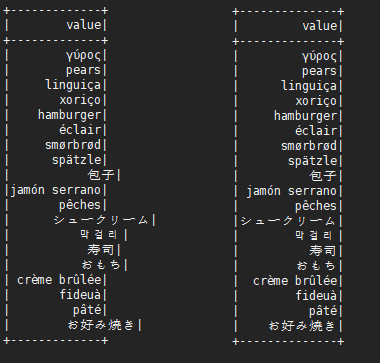

…alignment problem

This is not a perfect solution. It is designed to minimize complexity while solving the problem. It is effective for English, Chinese, Japanese, Korean characters and so on.

```scala
before:
+---+---------------------------+-------------+
|id |中国 |s2 |
+---+---------------------------+-------------+
|1 |ab |[a] |
|2 |null |[中国, abc] |
|3 |ab1 |[hello world]|
|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |
|5 |中国(你好)a |[“中(国), 312] |
|6 |中国山(东)服务区 |[“中(国)] |
|7 |中国山东服务区 |[中(国)] |
|8 | |[中国] |
+---+---------------------------+-------------+
after:
+---+-----------------------------------+----------------+
|id |中国 |s2 |
+---+-----------------------------------+----------------+
|1 |ab |[a] |
|2 |null |[中国, abc] |
|3 |ab1 |[hello world] |
|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |
|5 |中国(你好)a |[“中(国), 312]|
|6 |中国山(东)服务区 |[“中(国)] |
|7 |中国山东服务区 |[中(国)] |
|8 | |[中国] |
+---+-----------------------------------+----------------+
```

## What changes were proposed in this pull request?

When the data contains wide characters, such as Chinese or Japanese characters, the `show` method has an alignment problem. This PR tries to fix that problem.

## How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #22048 from xuejianbest/master.
Authored-by: xuejianbest <384329882@qq.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>
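The core idea behind width-aware alignment can be sketched as padding by display width, where a wide character occupies two terminal columns, instead of by `String.length`. This is a minimal sketch, not Spark's implementation. The `isWide` ranges are an assumption that roughly covers Hangul, kana, CJK ideographs and fullwidth forms; they are not the full Unicode East Asian Width table, and ambiguous-width characters such as `“` are counted as one column.

```scala
object WideCharPadding {
  // Rough approximation of East Asian "wide" code points. This range list is an
  // assumption for the sketch, not Spark's code or the full EastAsianWidth table.
  private def isWide(cp: Int): Boolean =
    (cp >= 0x1100 && cp <= 0x115F) || // Hangul Jamo
      (cp >= 0x2E80 && cp <= 0xA4CF) || // CJK radicals through Yi, incl. kana and ideographs
      (cp >= 0xAC00 && cp <= 0xD7A3) || // Hangul syllables
      (cp >= 0xF900 && cp <= 0xFAFF) || // CJK compatibility ideographs
      (cp >= 0xFF00 && cp <= 0xFF60) || // fullwidth forms
      (cp >= 0xFFE0 && cp <= 0xFFE6)

  // Number of terminal columns a string occupies: wide code points count twice.
  def displayWidth(s: String): Int =
    s.codePoints().toArray.map(cp => if (isWide(cp)) 2 else 1).sum

  // Pads by display width instead of String.length so that cells line up.
  def padRight(s: String, width: Int): String =
    s + " " * math.max(0, width - displayWidth(s))

  def main(args: Array[String]): Unit = {
    val cells = Seq("ab", "中国山东服务区", "か行 きゃ(kya)")
    val width = cells.map(displayWidth).max
    cells.foreach(c => println("|" + padRight(c, width) + "|"))
  }
}
```

Padding by `String.length` would treat `中国` as two columns wide, which is exactly the misalignment visible in the "before" table.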

## What changes were proposed in this pull request?

This is a follow-up PR of #22200. When casting to a decimal type, if `Cast.canNullSafeCastToDecimal()` holds, overflow cannot happen, so we don't need to check the result of `Decimal.changePrecision()`.

## How was this patch tested?

Existing tests.

Closes #22352 from ueshin/issues/SPARK-25208/reduce_code_size.
Authored-by: Takuya UESHIN <ueshin@databricks.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 1b1711e)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

How to reproduce the permission issue:

```sh
# build spark
./dev/make-distribution.sh --name SPARK-25330 --tgz -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn

# --tgz produces a gzipped tarball named spark-<version>-bin-<name>.tgz
tar -zxf spark-2.4.0-SNAPSHOT-bin-SPARK-25330.tgz && cd spark-2.4.0-SNAPSHOT-bin-SPARK-25330

export HADOOP_PROXY_USER=user_a
bin/spark-sql

export HADOOP_PROXY_USER=user_b
bin/spark-sql
```

```java
Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.security.AccessControlException: Permission denied: user=user_b, access=EXECUTE, inode="/tmp/hive-$%7Buser.name%7D/user_b/668748f2-f6c5-4325-a797-fd0a7ee7f4d4":user_b:hadoop:drwx------
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:259)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:205)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)
```

The issue was introduced by this commit: apache/hadoop@feb886f. This PR reverts the Hadoop 2.7 dependency to 2.7.3 to avoid this issue.

## How was this patch tested?

Unit tests and manual tests.

Closes #22327 from wangyum/SPARK-25330.
Authored-by: Yuming Wang <yumwang@ebay.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>
(cherry picked from commit b0ada7d)
Signed-off-by: Sean Owen <sean.owen@databricks.com>

…itions parameter

## What changes were proposed in this pull request?

This adds a test following #21638.

## How was this patch tested?

Existing tests and the new test.

Closes #22356 from srowen/SPARK-22357.2.
Authored-by: Sean Owen <sean.owen@databricks.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>
(cherry picked from commit 4e3365b)
Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR removes the method `updateBytesReadWithFileSize` from `FileScanRDD` because it computes input metrics from the file size, an approach that only applied to Hadoop 2.5 and earlier. Current Spark does not support those versions, so the method produces wrong input metric numbers.

This is a rework of #22232. Closes #22232

## How was this patch tested?

Added tests in `FileBasedDataSourceSuite`.

Closes #22324 from maropu/pr22232-2.
Lead-authored-by: dujunling <dujunling@huawei.com>
Co-authored-by: Takeshi Yamamuro <yamamuro@apache.org>
Signed-off-by: Sean Owen <sean.owen@databricks.com>
(cherry picked from commit ed249db)
Signed-off-by: Sean Owen <sean.owen@databricks.com>

…ases of sql/core and sql/hive

## What changes were proposed in this pull request?

In SharedSparkSession and TestHive, we need to disable the rule `ConvertToLocalRelation` for better test coverage.

## How was this patch tested?

Identified the failures after excluding the `ConvertToLocalRelation` rule.

Closes #22270 from dilipbiswal/SPARK-25267-final.
Authored-by: Dilip Biswal <dbiswal@us.ibm.com>
Signed-off-by: gatorsmile <gatorsmile@gmail.com>
(cherry picked from commit 6d7bc5a)
Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Before Apache Spark 2.3, table properties were ignored when writing data to a Hive table (created with `STORED AS PARQUET/ORC` syntax), because the compression configurations were not passed to the FileFormatWriter in hadoopConf. This was fixed in #20087. However, for CTAS with `USING PARQUET/ORC` syntax, table properties were also ignored when convertMetastore was enabled, so the test case for CTAS was not supported. Now that this has been fixed in #20522, the test case should be enabled too.

## How was this patch tested?

This only re-enables the test cases of the previous PR.

Closes #22302 from fjh100456/compressionCodec.
Authored-by: fjh100456 <fu.jinhua6@zte.com.cn>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>
(cherry picked from commit 473f2fb)
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Fix unused imports and outdated comments in the `kafka-0-10-sql` module. (Found while I was working on [SPARK-23539](#22282).)

## How was this patch tested?

Existing unit tests.

Closes #22342 from dongjinleekr/feature/fix-kafka-sql-trivials.
Authored-by: Lee Dongjin <dongjin@apache.org>
Signed-off-by: Sean Owen <sean.owen@databricks.com>
(cherry picked from commit 458f501)
Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Deprecate public APIs from ImageSchema.

## How was this patch tested?

N/A

Closes #22349 from WeichenXu123/image_api_deprecate.
Authored-by: WeichenXu <weichen.xu@databricks.com>
Signed-off-by: Xiangrui Meng <meng@databricks.com>
(cherry picked from commit 08c02e6)
Signed-off-by: Xiangrui Meng <meng@databricks.com>

…UDFSuite

## What changes were proposed in this pull request?

In Spark 2.0.0, SPARK-14335 added some [commented-out test coverage](https://github.com/apache/spark/pull/12117/files#diff-dd4b39a56fac28b1ced6184453a47358R177). This PR enables those tests because the feature has been supported since 2.0.0.

## How was this patch tested?

Pass the Jenkins with the re-enabled test coverage.

Closes #22363 from dongjoon-hyun/SPARK-25375.
Authored-by: Dongjoon Hyun <dongjoon@apache.org>
Signed-off-by: gatorsmile <gatorsmile@gmail.com>
(cherry picked from commit 26f74b7)
Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

When running TPC-DS benchmarks on the 2.4 release, npoggi and winglungngai saw a performance regression of more than 10% on the following queries: q67, q24a and q24b. After applying PR #22338, the performance regression still existed. When the changes in #19222 were reverted, npoggi and winglungngai found that the regression was resolved. Thus, this PR reverts the related changes to unblock the 2.4 release. In a future release, we can continue the investigation and find the root cause of the regression.

## How was this patch tested?

The existing test cases.

Closes #22361 from gatorsmile/revertMemoryBlock.
Authored-by: gatorsmile <gatorsmile@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 0b9ccd5)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Remove the `BisectingKMeansModel.setDistanceMeasure` method. Setting this param on `BisectingKMeansModel` is meaningless.

## How was this patch tested?

N/A

Closes #22360 from WeichenXu123/bkmeans_update.
Authored-by: WeichenXu <weichen.xu@databricks.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>
(cherry picked from commit 88a930d)
Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

How to reproduce:

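```scala
import org.apache.spark.sql.functions.{lit, spark_partition_id}
import spark.implicits._ // for the $"..." column syntax (pre-imported in spark-shell)

val df1 = spark.createDataFrame(Seq(
  (1, 1)
)).toDF("a", "b").withColumn("c", lit(null).cast("int"))

val df2 = df1.union(df1).withColumn("d", spark_partition_id).filter($"c".isNotNull)

df2.show
// Wrong result: rows whose `c` is null survive the isNotNull filter
// +---+---+----+---+
// |  a|  b|   c|  d|
// +---+---+----+---+
// |  1|  1|null|  0|
// |  1|  1|null|  1|
// +---+---+----+---+
```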

`filter($"c".isNotNull)` was transformed to `(null <=> c#10)` before #19201, but it has been transformed to `(c#10 = null)` since #20155. This PR reverts it to `(null <=> c#10)` to fix this issue.

## How was this patch tested?

unit tests

Closes #22368 from wangyum/SPARK-25368.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

(cherry picked from commit 77c9964)

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

… for ORC native data source table persisted in metastore

## What changes were proposed in this pull request?

Apache Spark doesn't create Hive tables with duplicated fields in either case-sensitive or case-insensitive mode. However, Spark can first write ORC files in case-sensitive mode and then create a table on that location. In this situation, field resolution should fail in case-insensitive mode; otherwise, we don't know which columns will be returned or filtered. Previously, SPARK-25132 fixed the same issue for Parquet.

Here is a simple example:

```
val data = spark.range(5).selectExpr("id as a", "id * 2 as A")
spark.conf.set("spark.sql.caseSensitive", true)
data.write.format("orc").mode("overwrite").save("/user/hive/warehouse/orc_data")

sql("CREATE TABLE orc_data_source (A LONG) USING orc LOCATION '/user/hive/warehouse/orc_data'")
spark.conf.set("spark.sql.caseSensitive", false)
sql("select A from orc_data_source").show
+---+
|  A|
+---+
|  3|
|  2|
|  4|
|  1|
|  0|
+---+
```

See #22148 for more details about the Parquet data source reader.

## How was this patch tested?

Unit tests added.

Closes #22262 from seancxmao/SPARK-25175.
Authored-by: seancxmao <seancxmao@gmail.com>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>
(cherry picked from commit a0aed47)
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>
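The resolution rule described above (fail in case-insensitive mode when a requested column matches more than one physical field) can be sketched in plain Scala. This is a minimal illustration, not Spark's ORC reader code; `OrcFieldResolution.resolve` and its error message are hypothetical.

```scala
object OrcFieldResolution {
  // Hypothetical helper: resolve a requested column against the physical field
  // names stored in a file. In case-insensitive mode an ambiguous match fails
  // instead of silently picking one of the candidates.
  def resolve(requested: String, physical: Seq[String], caseSensitive: Boolean): Option[String] = {
    val matches =
      if (caseSensitive) physical.filter(_ == requested)
      else physical.filter(_.equalsIgnoreCase(requested))
    if (!caseSensitive && matches.size > 1) {
      throw new RuntimeException(
        s"Found duplicate field(s) for '$requested': ${matches.mkString(", ")} in case-insensitive mode")
    }
    matches.headOption
  }

  def main(args: Array[String]): Unit = {
    val physical = Seq("a", "A") // the fields written in case-sensitive mode above
    println(resolve("A", physical, caseSensitive = true)) // Some(A)
    println(scala.util.Try(resolve("A", physical, caseSensitive = false)).isFailure) // true
  }
}
```

Failing loudly is the safer choice here: returning either `a` or `A` would make the query result depend on file field order, which is the nondeterminism shown in the example output.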

…ema in Parquet issue

## What changes were proposed in this pull request?

How to reproduce:

```scala
spark.sql("CREATE TABLE tbl(id long)")
spark.sql("INSERT OVERWRITE TABLE tbl VALUES 4")
spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")
spark.sql(s"INSERT OVERWRITE LOCAL DIRECTORY '/tmp/spark/parquet' " +
  "STORED AS PARQUET SELECT ID FROM view1")

scala> spark.read.parquet("/tmp/spark/parquet").schema
res10: org.apache.spark.sql.types.StructType = StructType(StructField(id,LongType,true))
```

The schema should be `StructType(StructField(ID,LongType,true))`, since the query is `SELECT ID FROM view1`.

This PR fixes this issue.

## How was this patch tested?

unit tests

Closes #22359 from wangyum/SPARK-25313-FOLLOW-UP.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit f8b4d5a)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…ctType to a DDL string

## What changes were proposed in this pull request?

Add the version number for the new APIs.

## How was this patch tested?

N/A

Closes #22377 from gatorsmile/followup24849.
Authored-by: gatorsmile <gatorsmile@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 6f65178)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…plan appears in the query

## What changes were proposed in this pull request?

In the Planner, we collect the placeholders that need to be substituted in the query execution plan, and once we plan them, we substitute each placeholder with the effective plan. In this second phase, we rely on the `==` comparison, i.e. the `equals` method. This means that if two placeholder plans, which are different instances, have the same attributes (so that they are equal according to the `equals` method), they are both substituted with their corresponding new physical plans. So, in such a situation, the first time we substitute both of them with the first of the two newly generated plans, and the second time we substitute nothing.

This is usually harmless for the execution of the query itself, as the two plans are identical. But since they are now the same instance, their local variables are shared, which is unexpected. This causes issues for the collected metrics: the same node is executed twice, so its metrics are wrongly accumulated twice.

The PR proposes to use the `eq` method when checking which placeholder needs to be substituted. Thus, in the previous situation, both of the distinct physical nodes that are created (one for each time the logical plan appears in the query plan) are actually used, and the metrics are collected properly for each of them.

## How was this patch tested?

Added a UT.

Closes #22284 from mgaido91/SPARK-25278.
Authored-by: Marco Gaido <marcogaido91@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 12e3e9f)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
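The difference between substituting by `==` and by `eq` can be shown with a small model. This is a simplified sketch, not the Spark planner: `Placeholder`, `PhysicalNode` and `substitute` are hypothetical stand-ins, with `Placeholder` a case class so that `==` compares structure while `eq` compares object identity.

```scala
object PlaceholderSubstitution {
  // Hypothetical stand-in for a logical-plan placeholder. As a case class,
  // `==` compares its fields while `eq` compares object identity.
  final case class Placeholder(attrs: Seq[String])
  // Hypothetical physical node; a plain class, so every instance is distinct.
  final class PhysicalNode(val id: Int)

  // Replaces each placeholder by the node planned for the first matching key;
  // `sameNode` decides what "matching" means.
  def substitute(
      plan: Seq[Placeholder],
      planned: Seq[(Placeholder, PhysicalNode)],
      sameNode: (Placeholder, Placeholder) => Boolean): Seq[PhysicalNode] =
    plan.map { p =>
      planned.collectFirst { case (key, node) if sameNode(key, p) => node }
        .getOrElse(sys.error(s"no physical plan for $p"))
    }

  def main(args: Array[String]): Unit = {
    val p1 = Placeholder(Seq("a", "b"))
    val p2 = Placeholder(Seq("a", "b")) // equal to p1, but a different instance
    val planned = Seq(p1 -> new PhysicalNode(1), p2 -> new PhysicalNode(2))

    println(substitute(Seq(p1, p2), planned, _ == _).map(_.id)) // List(1, 1): one shared node
    println(substitute(Seq(p1, p2), planned, _ eq _).map(_.id)) // List(1, 2): one node each
  }
}
```

With `==`, both occurrences resolve to node 1, so any metrics kept on that node would be counted twice; with `eq`, each occurrence keeps its own node.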

…lar with arrow udfs

## What changes were proposed in this pull request?

Clarify the docstring for scalar functions.

## How was this patch tested?

Adds a unit test showing usage similar to word count; there is already an existing unit test for arrays of floats as well.

Closes #20908 from holdenk/SPARK-23672-document-support-for-nested-return-types-in-scalar-with-arrow-udfs.
Authored-by: Holden Karau <holden@pigscanfly.ca>
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>
(cherry picked from commit da5685b)
Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

SPARK-21281 introduced a check that the inputs of `CreateStructLike` are non-empty. This means that `struct()`, which was previously considered valid, now throws an exception. This behavior change was introduced in 2.3.0. It may break users' applications on upgrade, and it causes `VectorAssembler` to fail when an empty `inputCols` is defined.

This PR removes the added check, making `struct()` valid again.

## How was this patch tested?

Added a UT.

Closes #22373 from mgaido91/SPARK-25371.
Authored-by: Marco Gaido <marcogaido91@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 0736e72)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…sed as null when nullValue is set.

## What changes were proposed in this pull request?

In this PR, I propose a new CSV option, `emptyValue`, and an update to the SQL Migration Guide that describes how to restore the previous behavior, in which empty strings were not written at all. Since Spark 2.4, empty strings are saved as `""` to distinguish them from saved `null`s.

Closes #22234
Closes #22367

## How was this patch tested?

It was tested by `CSVSuite` and the new tests added in PR #22234.

Closes #22389 from MaxGekk/csv-empty-value-master.
Lead-authored-by: Mario Molina <mmolimar@gmail.com>
Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>
Signed-off-by: hyukjinkwon <gurwls223@apache.org>
(cherry picked from commit c9cb393)
Signed-off-by: hyukjinkwon <gurwls223@apache.org>
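How a writer can keep `null` and the empty string apart can be sketched in a few lines. This is a hypothetical illustration, not Spark's CSV writer: `renderField` and `renderRow` are invented names, and the defaults assume a `nullValue` of the empty string and an `emptyValue` of a quoted `""`, matching the behavior described above.

```scala
object CsvFieldRendering {
  // Hypothetical sketch: nulls are written as `nullValue`, empty strings as
  // `emptyValue`, so the two remain distinguishable when the file is read back.
  // Defaults assume nullValue = "" and emptyValue = "\"\"" (a quoted empty field).
  def renderField(value: Option[String], nullValue: String = "", emptyValue: String = "\"\""): String =
    value match {
      case None     => nullValue
      case Some("") => emptyValue
      case Some(s)  => s
    }

  // Joins rendered fields with a comma; quoting of other values is omitted here.
  def renderRow(values: Seq[Option[String]]): String =
    values.map(renderField(_)).mkString(",")

  def main(args: Array[String]): Unit =
    println(renderRow(Seq(Some("a"), None, Some("")))) // a,,""
}
```

If `emptyValue` were also the empty string, the second and third fields would both be written as nothing, which is the ambiguity the option is meant to resolve.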

…t duplicate fields

## What changes were proposed in this pull request?

Like `INSERT OVERWRITE DIRECTORY USING` syntax, `INSERT OVERWRITE DIRECTORY STORED AS` should not generate files with duplicate fields because Spark cannot read those files back.

**INSERT OVERWRITE DIRECTORY USING**

```scala
scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' USING parquet SELECT 'id', 'id2' id")
... ERROR InsertIntoDataSourceDirCommand: Failed to write to directory ...
org.apache.spark.sql.AnalysisException: Found duplicate column(s) when inserting into file:/tmp/parquet: `id`;
```

**INSERT OVERWRITE DIRECTORY STORED AS**

```scala
scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' STORED AS parquet SELECT 'id', 'id2' id")
// It generates corrupted files
scala> spark.read.parquet("/tmp/parquet").show
18/09/09 22:09:57 WARN DataSource: Found duplicate column(s) in the data schema and the partition schema: `id`;
```
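The check that `INSERT OVERWRITE DIRECTORY USING` performs before writing can be approximated as a duplicate-name scan over the output columns. This is a minimal sketch, not Spark's `SchemaUtils` code; `DuplicateColumnCheck.duplicates` is a hypothetical helper, and it compares names case-insensitively unless case sensitivity is enabled.

```scala
import java.util.Locale

object DuplicateColumnCheck {
  // Hypothetical pre-write check: report column names that occur more than once,
  // comparing case-insensitively unless case sensitivity is enabled.
  def duplicates(columns: Seq[String], caseSensitive: Boolean): Seq[String] = {
    val names = if (caseSensitive) columns else columns.map(_.toLowerCase(Locale.ROOT))
    names.groupBy(identity).collect { case (name, hits) if hits.size > 1 => name }.toSeq.sorted
  }

  def main(args: Array[String]): Unit = {
    // `SELECT 'id', 'id2' id` yields two output columns that are both named `id`.
    val dups = duplicates(Seq("id", "id"), caseSensitive = false)
    if (dups.nonEmpty) println(s"Found duplicate column(s): ${dups.mkString(", ")}")
  }
}
```

Running such a check before any file is written is what turns the corrupted-output case into an up-front `AnalysisException`, as in the `USING` example.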

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22378 from dongjoon-hyun/SPARK-25389.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

(cherry picked from commit 77579aa)

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…f values

## What changes were proposed in this pull request?

Stop trimming values of properties loaded from a file.

## How was this patch tested?

Added a unit test demonstrating the issue hit in production.

Closes #22213 from gerashegalov/gera/SPARK-25221.
Authored-by: Gera Shegalov <gera@apache.org>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>
(cherry picked from commit bcb9a8c)
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>
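Why trimming is harmful can be seen with `java.util.Properties.load` alone: a value written as the escape `\t` loads as a tab character, and `String.trim` then discards it entirely. This is an illustrative sketch; the key `spark.example.delimiter` is hypothetical and is not the production case from the unit test.

```scala
import java.io.StringReader
import java.util.Properties

object PropertiesValueTrimming {
  // Loads a properties-file snippet and returns the raw value for `key`.
  def rawValue(text: String, key: String): String = {
    val props = new Properties()
    props.load(new StringReader(text))
    props.getProperty(key)
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical key; `\t` is a properties-file escape for a tab character.
    val text = "spark.example.delimiter=\\t\n"
    val raw = rawValue(text, "spark.example.delimiter")
    println(raw == "\t")      // true: Properties.load keeps the escaped tab
    println(raw.trim.isEmpty) // true: trimming would silently discard it
  }
}
```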

## What changes were proposed in this pull request?

We update the block info coming from executors at times such as caching an RDD. However, when RDDs are removed by unpersisting, we don't ask to update the block info, so it is not updated. We can fix this in a few ways:

1. Ask to update the block info when unpersisting. This is the simplest option, but it changes the driver-executor communication a bit.
2. Update the block info when processing the unpersist-RDD event. We send a `SparkListenerUnpersistRDD` event when unpersisting an RDD; when processing this event, we can update the block info of the RDD. This only changes event-processing code, so the risk seems to be lower.

Currently this patch takes option 2 for lower risk. If we agree that the first option carries no risk, we can change to it.

## How was this patch tested?

Unit tests.

Closes #22341 from viirya/SPARK-24889.
Authored-by: Liang-Chi Hsieh <viirya@gmail.com>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>
(cherry picked from commit 14f3ad2)
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Correct some comparisons between unrelated types to what they seem to have been trying to do.

## How was this patch tested?

Existing tests.

Closes #22384 from srowen/SPARK-25398.
Authored-by: Sean Owen <sean.owen@databricks.com>
Signed-off-by: Sean Owen <sean.owen@databricks.com>
(cherry picked from commit cfbdd6a)
Signed-off-by: Sean Owen <sean.owen@databricks.com>

This reverts commit e58dadb.

…batch execution jobs

## What changes were proposed in this pull request?

The leftover state from running a continuous processing streaming job should not affect later microbatch execution jobs. If a continuous processing job runs and the same thread is then reused for a microbatch execution job in the same environment, the microbatch job could produce wrong answers because it may attempt to load the wrong version of the state.

## How was this patch tested?

New and existing unit tests.

Closes #22386 from mukulmurthy/25399-streamthread.
Authored-by: Mukul Murthy <mukul.murthy@gmail.com>
Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>
(cherry picked from commit 9f5c5b4)
Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

`docker-image-tool.sh` uses `getopts`, in which a colon after an option letter signifies that the option takes an argument. Since `-n` does not take an argument, it should not have a colon.

## How was this patch tested?

Following the reproduction in [JIRA](https://issues.apache.org/jira/browse/SPARK-25803):

0. Created a custom Dockerfile to use for the spark-r container image. In each of the steps below, the path to this Dockerfile is passed with the `-R` option. (spark-r is used here simply as an example; the bug applies to all options.)
1. Built container images without `-n`. The [result](https://gist.github.com/sel/59f0911bb1a6a485c2487cf7ca770f9d) is that the `-R` option is honoured and the hello-world image is built for spark-r, as expected.
2. Built container images with `-n` to reproduce the issue. The [result](https://gist.github.com/sel/e5cabb9f3bdad5d087349e7fbed75141) is that the `-R` option is ignored and the default container image for spark-r is built.
3. Applied the patch, rebuilt the container images with `-n`, and did not reproduce the issue. The [result](https://gist.github.com/sel/6af14b95012ba8ff267a4fce6e3bd3bf) is that the `-R` option is honoured and the hello-world image is built for spark-r, as expected.

Closes #22798 from sel/fix-docker-image-tool-nocache.
Authored-by: Steve <sel@users.noreply.github.com>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>
(cherry picked from commit 9b98d91)
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>
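The effect of the stray colon can be reproduced with a simplified model of `getopts`: a letter followed by `:` in the option string consumes the next word as its argument, even when that word is another option such as `-R`. This Scala sketch is an assumption-laden approximation (one option per word, no bundling like `-nb`, no error reporting) and is not the shell builtin or the script's real option string.

```scala
object GetoptsSketch {
  // Simplified model of shell `getopts`: one option per word, no bundling such
  // as `-nb`, no error reporting. A letter followed by ':' in `optstring`
  // consumes the next word as its argument, whatever that word looks like.
  def parse(optstring: String, args: List[String]): Map[Char, String] = {
    def known(c: Char): Boolean = c != ':' && optstring.indexOf(c) >= 0
    def takesArg(c: Char): Boolean = {
      val i = optstring.indexOf(c)
      i + 1 < optstring.length && optstring.charAt(i + 1) == ':'
    }

    @annotation.tailrec
    def loop(rest: List[String], acc: Map[Char, String]): Map[Char, String] = rest match {
      case word :: tail if word.length == 2 && word.charAt(0) == '-' && known(word.charAt(1)) =>
        val c = word.charAt(1)
        if (!takesArg(c)) loop(tail, acc + (c -> ""))
        else tail match {
          case value :: more => loop(more, acc + (c -> value))
          case Nil           => acc // missing argument: stop parsing
        }
      case _ => acc // the first non-option word ends option parsing
    }

    loop(args, Map.empty)
  }

  def main(args: Array[String]): Unit = {
    val argv = List("-n", "-R", "Dockerfile")
    println(parse("n:R:", argv)) // Map(n -> -R): "-R" is swallowed as -n's argument
    println(parse("nR:", argv))  // -n is a flag and -R receives "Dockerfile"
  }
}
```

With `n:` the `-R` word is consumed as the value of `-n`, so the custom Dockerfile is never seen, which matches the behavior in step 2 above.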

…istoryProvider to read lastBlockBeingWritten data for logs

## What changes were proposed in this pull request?

`hsync` was added as part of SPARK-19531 to get the latest data in the history server UI, but it causes performance overhead and also leads to many history log events being dropped. `hsync` uses `FileChannel.force` to sync the data to disk, and this happens across the data pipeline; it is a costly operation that slows the application down and makes it drop events.

I think the latest data can be made available to the history server in a different way, with no impact on the application while it writes events. The API `DFSInputStream.getFileLength()` returns the file length including `lastBlockBeingWrittenLength` (unlike `FileStatus.getLen()`). This API can be used when the file status length and the previously cached length are equal, to verify whether any new data has been written; if the data length has changed, the history server can update the in-progress history log.

I also made this change configurable, with a default value of false, so it can be enabled for the history server if users want to see updated data in the UI.

## How was this patch tested?

Added a new test and verified manually: with the added conf `spark.history.fs.inProgressAbsoluteLengthCheck.enabled=true`, the history server reads the logs, including the data of the last block being written, and updates the Web UI with the latest data.

Closes #22752 from devaraj-kavali/SPARK-24787.
Authored-by: Devaraj K <devaraj@apache.org>
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>
(cherry picked from commit 46d2d2c)
Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>
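The decision rule described above can be sketched as a pure function, independent of HDFS. This is a hypothetical model, not the `FsHistoryProvider` code: `fileStatusLen` stands for what `FileStatus.getLen()` reports, and `absoluteLen` stands for the length `DFSInputStream.getFileLength()` would report. The latter is a by-name parameter, so the (comparatively costly) stream-based length is only computed when the cheap comparison is inconclusive.

```scala
object InProgressLogCheck {
  // Hypothetical decision helper for an in-progress event log.
  //   cachedLen     - length recorded the last time the log was read
  //   fileStatusLen - length from the file status, which may exclude the block
  //                   still being written
  //   absoluteLen   - length including the block being written; by-name, so it
  //                   is only evaluated when actually needed
  def shouldReload(
      cachedLen: Long,
      fileStatusLen: Long,
      absoluteLen: => Long,
      absoluteCheckEnabled: Boolean): Boolean =
    if (fileStatusLen > cachedLen) true
    else if (absoluteCheckEnabled && fileStatusLen == cachedLen) absoluteLen > cachedLen
    else false

  def main(args: Array[String]): Unit = {
    // File status still reports 100 bytes, but 150 bytes exist including the open block.
    println(shouldReload(100L, 100L, 150L, absoluteCheckEnabled = true))  // true
    println(shouldReload(100L, 100L, 150L, absoluteCheckEnabled = false)) // false
  }
}
```

Keeping the flag off by default preserves the old behavior: only the file-status length is consulted.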

…bout extra data source options

## What changes were proposed in this pull request?

Our current doc does not explain how we pass data source specific options to the underlying data source. According to [the review comment](#22622 (comment)), this PR aims to add more detailed information and examples. This is a backport of #22801. `orc.column.encoding.direct` is removed since it's not supported in ORC 1.5.2.

## How was this patch tested?

Manual.

Closes #22839 from dongjoon-hyun/SPARK-25656-2.4.
Authored-by: Dongjoon Hyun <dongjoon@apache.org>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

- Revert [SPARK-23935][SQL] Adding map_entries function: #21236
- Revert [SPARK-23937][SQL] Add map_filter SQL function: #21986
- Revert [SPARK-23940][SQL] Add transform_values SQL function: #22045
- Revert [SPARK-23939][SQL] Add transform_keys function: #22013
- Revert [SPARK-23938][SQL] Add map_zip_with function: #22017
- Revert the changes of map_entries in [SPARK-24331][SPARKR][SQL] Adding arrays_overlap, array_repeat, map_entries to SparkR: #21434

## How was this patch tested?

The existing tests.

Closes #22827 from gatorsmile/revertMap2.4.
Authored-by: gatorsmile <gatorsmile@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The following code in BisectingKMeansModel.load calls the wrong version of load.

```scala
case (SaveLoadV2_0.thisClassName, SaveLoadV2_0.thisFormatVersion) =>
  val model = SaveLoadV1_0.load(sc, path)
```

Closes #22790 from huaxingao/spark-25793.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit dc9b320)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…ssing LICENSE-binary

## What changes were proposed in this pull request?

We vote on the artifacts. All releases are in the form of the source materials needed to make changes to the software being released. (http://www.apache.org/legal/release-policy.html#artifacts)

Since Spark 2.4.0, the source artifact and the binary artifact each contain their own proper LICENSE files (LICENSE, LICENSE-binary). It's great to have them. Unfortunately, however, `dev/make-distribution.sh` inside the source artifact started to fail, because it expects `LICENSE-binary` while the source artifact has only the LICENSE file.

https://dist.apache.org/repos/dist/dev/spark/v2.4.0-rc4-bin/spark-2.4.0.tgz

`dev/make-distribution.sh` is used during the voting phase because we are voting on that source artifact instead of the GitHub repository. Individual contributors usually don't have the downstream repository, so they start by trying to build the voting source artifact to help verify it during the voting phase. (Personally, I did so before.)

This PR aims to make that script work in either case. It does not aim for the source artifact to reproduce the compiled artifacts.

## How was this patch tested?

Manual.

```
$ rm LICENSE-binary
$ dev/make-distribution.sh
```

Closes #22840 from dongjoon-hyun/SPARK-25840.
Authored-by: Dongjoon Hyun <dongjoon@apache.org>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>
(cherry picked from commit 79f3bab)
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

`datetime.sql.out` is a generated golden file, but it was slightly broken during a manual [reverting](dongjoon-hyun@5d74449#diff-79dd276be45ede6f34e24ad7005b0a7cR87). This doesn't cause test failures because the difference is only in comments and blank lines. We had better fix this minor issue before RC5.

## How was this patch tested?

Pass the Jenkins.

Closes #22837 from dongjoon-hyun/fix_datetime_sql_out.
Authored-by: Dongjoon Hyun <dongjoon@apache.org>
Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…orker

## What changes were proposed in this pull request?

There is a race condition when releasing a Python worker. If `ReaderIterator.handleEndOfDataSection` is not running in the task thread and the task is terminated early (such as by `take(N)`), the task completion listener may close the worker while `handleEndOfDataSection` can still put the worker into the worker pool for reuse.

zsxwing@0e07b48 is a patch to reproduce this issue.

I also found a user report of this issue on the mailing list: http://mail-archives.apache.org/mod_mbox/spark-user/201610.mbox/%3CCAAUq=H+YLUEpd23nwvq13Ms5hOStkhX3ao4f4zQV6sgO5zM-xAmail.gmail.com%3E

This PR fixes the issue by using `compareAndSet` to make sure we never return a closed worker to the worker pool.

## How was this patch tested?

Jenkins.

Closes #22816 from zsxwing/fix-socket-closed.
Authored-by: Shixiong Zhu <zsxwing@gmail.com>
Signed-off-by: Takuya UESHIN <ueshin@databricks.com>
(cherry picked from commit 86d469a)
Signed-off-by: Takuya UESHIN <ueshin@databricks.com>
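The `compareAndSet` idea can be sketched with a single `AtomicBoolean` shared by the two paths that race to release a worker: whichever path flips it first wins, and the other becomes a no-op. This is a simplified model, not `PythonRunner`; `Worker`, `TaskWorker` and the flag name are hypothetical.

```scala
import java.util.concurrent.atomic.AtomicBoolean
import scala.collection.mutable

object WorkerRelease {
  // Hypothetical stand-in; only the release protocol is modeled here.
  final class Worker { @volatile var closed: Boolean = false }

  final class TaskWorker(val worker: Worker, pool: mutable.Queue[Worker]) {
    // Flipped exactly once, by whichever path gets there first.
    private val releasedOrClosed = new AtomicBoolean(false)

    // Task-completion listener path (e.g. after take(N) ends the task early).
    def closeOnCompletion(): Unit =
      if (releasedOrClosed.compareAndSet(false, true)) worker.closed = true

    // End-of-data path: hand the worker back for reuse, unless it was closed.
    def releaseToPool(): Unit =
      if (releasedOrClosed.compareAndSet(false, true)) pool.synchronized { pool.enqueue(worker) }
  }

  def main(args: Array[String]): Unit = {
    val pool = mutable.Queue.empty[Worker]
    val task = new TaskWorker(new Worker, pool)
    task.closeOnCompletion() // the listener wins the race
    task.releaseToPool()     // no-op: a closed worker never reaches the pool
    println(pool.isEmpty)    // true
  }
}
```

A plain boolean check followed by a write would leave a window in which both paths see "not yet released"; `compareAndSet` makes the check and the update a single atomic step.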

## What changes were proposed in this pull request?

See the detailed information at https://issues.apache.org/jira/browse/SPARK-25841 on why these APIs should be deprecated and redesigned.

This patch also reverts 8acb51f, which applies to 2.4.

## How was this patch tested?

Only deprecation and doc changes.

Closes #22841 from rxin/SPARK-25842.
Authored-by: Reynold Xin <rxin@databricks.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 89d748b)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…by view canonicalization approach change

## What changes were proposed in this pull request?

Since Spark 2.2, view definitions are stored differently from prior versions. This may leave Spark unable to read views created by prior versions. See [SPARK-25797](https://issues.apache.org/jira/browse/SPARK-25797) for more details.

Basically, we have 2 options.

1) Make Spark 2.2+ able to get older view definitions back. Since the expanded text is buggy and unusable, we have to use the original text (this is possible with [SPARK-25459](https://issues.apache.org/jira/browse/SPARK-25459)). However, because older Spark versions don't save the context for the database, we cannot always get correct view definitions without the view's default database.
2) Recreate the views with `ALTER VIEW AS` or `CREATE OR REPLACE VIEW AS`.

This PR adds a migration doc to help users troubleshoot this issue with option 2 above.

## How was this patch tested?

N/A. Docs are generated and checked locally:

```
cd docs
SKIP_API=1 jekyll serve --watch
```

Closes #22846 from seancxmao/SPARK-25797.
Authored-by: seancxmao <seancxmao@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 6fd5ff3)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…ed SCALA_VERSION

- Fixes the Scala version propagation issue.
- Disables the tests under the k8s profile; we will now run them manually. Adds a test-specific profile, because otherwise the tests would not run if we just removed the module from the kubernetes profile (the quickest solution I can think of).

Tested manually by running the tests with different versions of Scala.

Closes #22838 from skonto/propagate-scala2.12.

Authored-by: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

(cherry picked from commit 7d44bc2)

Signed-off-by: Sean Owen <sean.owen@databricks.com>

…eral only

The main purpose of `schema_of_json` is to be used in combination with `from_json` (to make up for the lack of schema inference), which takes its schema only as a literal; however, `schema_of_json` currently also accepts non-literal expressions (e.g., a column) as its JSON input.

This was mistakenly allowed; we don't have to take usages other than the main purpose into account for now.

This PR is a follow-up that allows only literals as `schema_of_json`'s JSON input. We can allow non-literal expressions later when they are needed or there are use cases for them.

Unit tests were added.

Closes #22775 from HyukjinKwon/SPARK-25447-followup.

Lead-authored-by: hyukjinkwon <gurwls223@apache.org>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit 33e337c)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…hutdown

## What changes were proposed in this pull request?

The final line in the mvn helper script in build/ attempts to shut down the zinc server. Because the zinc server is set up with a 30-minute timeout, the server has already timed out by the time the mvn test run finishes. This means that when the mvn script tries to shut down zinc, it returns with an exit code of 1, which automatically fails the entire build (even if the build itself passes).

## How was this patch tested?

I set up a test build: https://amplab.cs.berkeley.edu/jenkins/job/sknapp-testing-spark-branch-2.4-test-maven-hadoop-2.7/

Closes #22854 from shaneknapp/fix-mvn-helper-script.

Authored-by: shane knapp <incomplete@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

(cherry picked from commit 6aa5063)

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Can one of the admins verify this patch?

@sarojchand close this

## What changes were proposed in this pull request?

Add Scala/Java/Python examples and docs for PrefixSpan in branch 2.4.

## How was this patch tested?

Manually tested.

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #22863 from huaxingao/mydocbranch.

## What changes were proposed in this pull request?

After backporting #22775 to 2.4, the 2.4 sbt Jenkins QA job is broken; see https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test/job/spark-branch-2.4-test-sbt-hadoop-2.7/147/console

This PR adds `if sys.version >= '3': basestring = str`, which only exists in master.

## How was this patch tested?

Existing tests.

Closes #22858 from cloud-fan/python.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>
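A minimal sketch of how such a shim is typically used: once `basestring` is aliased to `str` on Python 3, existing `isinstance(x, basestring)` checks keep working on both major versions. The helper `is_string_like` is hypothetical and only illustrates the pattern.

```python
import sys

# Compatibility shim: Python 3 has no `basestring`, so alias it to `str`.
if sys.version >= '3':
    basestring = str


def is_string_like(value):
    # Hypothetical helper: on Python 2 this matches str and unicode,
    # on Python 3 it matches str.
    return isinstance(value, basestring)
```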

## What changes were proposed in this pull request?

Extractors are made of 2 expressions: one defines the value to extract from (called `child`) and the other defines the way of extraction (called `extraction`). In these terms, extractors have 2 children, so they shouldn't be `UnaryExpression`s.

`ResolveReferences` was changed in commit 36b826f, which resulted in a regression with nested extractors. An extractor needs to define its children as both `child` and `extraction`, and both should be resolved in `ResolveReferences`.

This PR changes `UnresolvedExtractValue` to a `BinaryExpression`.

## How was this patch tested?

Added UT.

Closes #22817 from peter-toth/SPARK-25816.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

(cherry picked from commit ca2fca1)

Signed-off-by: gatorsmile <gatorsmile@gmail.com>
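Why the binary shape matters can be sketched with a toy expression tree in Python. This is not Catalyst code: `UnresolvedAttribute`, `AttributeReference`, `UnresolvedExtractValue` and `resolve` here are simplified hypothetical stand-ins. With `visit_extraction=False` the resolver behaves as if the extractor were unary (only `child` is visited), so a nested extractor sitting in `extraction`, roughly `a[b[c]]`, stays unresolved.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class UnresolvedAttribute:
    name: str


@dataclass
class AttributeReference:
    name: str


@dataclass
class UnresolvedExtractValue:
    child: object       # the value to extract from
    extraction: object  # the way of extraction (e.g. a key or an index)

    def children(self) -> List[object]:
        # Binary shape: both operands are children, so traversals visit both.
        return [self.child, self.extraction]


def resolve(expr, known: Set[str], visit_extraction: bool = True):
    """Hypothetical resolver; visit_extraction=False mimics a unary extractor."""
    if isinstance(expr, UnresolvedAttribute) and expr.name in known:
        return AttributeReference(expr.name)
    if isinstance(expr, UnresolvedExtractValue):
        child = resolve(expr.child, known, visit_extraction)
        extraction = (resolve(expr.extraction, known, visit_extraction)
                      if visit_extraction else expr.extraction)
        return UnresolvedExtractValue(child, extraction)
    return expr


def is_resolved(expr) -> bool:
    if isinstance(expr, UnresolvedAttribute):
        return False
    if isinstance(expr, UnresolvedExtractValue):
        return all(is_resolved(c) for c in expr.children())
    return True


# Nested extractor, roughly `a[b[c]]`: the inner extractor is the `extraction`.
nested = UnresolvedExtractValue(
    UnresolvedAttribute("a"),
    UnresolvedExtractValue(UnresolvedAttribute("b"), UnresolvedAttribute("c")),
)
```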

## What changes were proposed in this pull request?

Updated the doc string value for spark.sql.parquet.recordLevelFilter.enabled to indicate that spark.sql.parquet.enableVectorizedReader must be disabled.

The code in ParquetFileFormat uses spark.sql.parquet.recordLevelFilter.enabled only after falling back to parquet-mr (see the else branch of this if statement):

https://github.com/apache/spark/blob/d5573c578a1eea9ee04886d9df37c7178e67bb30/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala#L412

https://github.com/apache/spark/blob/d5573c578a1eea9ee04886d9df37c7178e67bb30/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala#L427-L430

Tests also bear this out.

## How was this patch tested?

This is just a doc string fix: I built Spark and ran a single test.

Closes #22865 from bersprockets/confdocfix.

Authored-by: Bruce Robbins <bersprockets@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit 4e990d9)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…ersion

## What changes were proposed in this pull request?

This PR documents the binary type in "Apache Arrow in Spark".

## How was this patch tested?

Manually built the documentation and checked it.

Closes #22871 from HyukjinKwon/SPARK-25179.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

(cherry picked from commit fbaf150)

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

… generation

## What changes were proposed in this pull request?

Code generation is incorrect if the `outputVars` parameter of the `consume` method in `CodegenSupport` contains a lazily evaluated stream of expressions. This PR fixes the issue by forcing the evaluation of `inputVars` before generating the code for UnsafeRow.

## How was this patch tested?

Tested with the sample program provided in https://issues.apache.org/jira/browse/SPARK-25767

Closes #22789 from peter-toth/SPARK-25767.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

(cherry picked from commit 7fe5cff)

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>
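The failure mode can be illustrated with a small Python sketch in which a generator stands in for a lazily evaluated Scala `Stream` (the analogy is approximate: a Scala `Stream` evaluates its head eagerly, a generator evaluates nothing until iterated). Everything here is hypothetical: `CodegenContext`, `gen_input_var` and this simplified `consume` only mimic the idea that generating an input variable also emits the code that computes it. If that emitted code is captured before the lazy inputs are evaluated, the row code refers to variables that are never declared; forcing the inputs first avoids this.

```python
from typing import Iterable, List


class CodegenContext:
    """Hypothetical stand-in for a codegen context that collects emitted code."""

    def __init__(self) -> None:
        self.lines: List[str] = []


def gen_input_var(ctx: CodegenContext, i: int) -> str:
    # Producing the variable has a side effect: it emits the code computing it.
    ctx.lines.append(f"int value{i} = compute({i});")
    return f"value{i}"


def consume(ctx: CodegenContext, input_vars: Iterable[str], force: bool) -> List[str]:
    if force:
        # Analogue of forcing a lazy Stream before generating the row code.
        input_vars = list(input_vars)
    # The code computing the inputs is captured here and placed before the row code.
    eval_code = list(ctx.lines)
    row_code = [f"row.write({v});" for v in input_vars]
    return eval_code + ["UnsafeRow row = new UnsafeRow();"] + row_code


lazy_ctx = CodegenContext()
broken = consume(lazy_ctx, (gen_input_var(lazy_ctx, i) for i in range(2)), force=False)
# `broken` lacks the `int valueN = ...` declarations: they were emitted too late.

forced_ctx = CodegenContext()
fixed = consume(forced_ctx, (gen_input_var(forced_ctx, i) for i in range(2)), force=True)
```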

## What changes were proposed in this pull request?

I saw CoarseGrainedSchedulerBackendSuite fail in my PR and finally reproduced the following error on a very busy machine:

```
sbt.ForkMain$ForkError: org.scalatest.exceptions.TestFailedDueToTimeoutException: The code passed to eventually never returned normally. Attempted 400 times over 10.009828643999999 seconds. Last failure message: ArrayBuffer("2", "0", "3") had length 3 instead of expected length 4.
```

The logs from this test show that executor 1 was not up when the test failed.

```
18/10/30 11:34:03.563 dispatcher-event-loop-12 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.17.0.2:43656) with ID 2
18/10/30 11:34:03.593 dispatcher-event-loop-3 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.17.0.2:43658) with ID 3
18/10/30 11:34:03.629 dispatcher-event-loop-6 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.17.0.2:43654) with ID 0
18/10/30 11:34:03.885 pool-1-thread-1-ScalaTest-running-CoarseGrainedSchedulerBackendSuite INFO CoarseGrainedSchedulerBackendSuite:
===== FINISHED o.a.s.scheduler.CoarseGrainedSchedulerBackendSuite: 'compute max number of concurrent tasks can be launched' =====
```

The following logs from executor 1 show that it was still initializing when the timeout happened (at 18/10/30 11:34:03.885).

```
18/10/30 11:34:03.463 netty-rpc-connection-0 INFO TransportClientFactory: Successfully created connection to 54b6b6217301/172.17.0.2:33741 after 37 ms (0 ms spent in bootstraps)
18/10/30 11:34:03.959 main INFO DiskBlockManager: Created local directory at /home/jenkins/workspace/core/target/tmp/spark-383518bc-53bd-4d9c-885b-d881f03875bf/executor-61c406e4-178f-40a6-ac2c-7314ee6fb142/blockmgr-03fb84a1-eedc-4055-8743-682eb3ac5c67
18/10/30 11:34:03.993 main INFO MemoryStore: MemoryStore started with capacity 546.3 MB
```

Hence, I think the current 10-second timeout is not enough on a slow Jenkins machine. This PR increases the timeout from 10 seconds to 60 seconds to make the test more stable.
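For context, ScalaTest's `eventually` re-runs a block until it stops failing or a timeout expires, which is why a slow executor start-up surfaces as a `TestFailedDueToTimeoutException`. The Python sketch below is a hypothetical analogue of that retry loop, not ScalaTest's implementation; the `timeout_s` default mirrors the new 60-second value, while the 25 ms polling interval is an assumption.

```python
import time


def eventually(check, timeout_s=60.0, interval_s=0.025):
    """Hypothetical analogue of ScalaTest's `eventually`: retry `check` until it
    stops raising AssertionError or `timeout_s` elapses, then fail."""
    deadline = time.monotonic() + timeout_s
    attempts = 0
    while True:
        attempts += 1
        try:
            return check()
        except AssertionError as e:
            if time.monotonic() >= deadline:
                raise AssertionError(
                    f"Attempted {attempts} times over {timeout_s} seconds. "
                    f"Last failure message: {e}") from e
            # Wait before polling again, e.g. for more executors to register.
            time.sleep(interval_s)
```

A longer timeout only costs time when the condition is slow to become true; a passing check still returns on the first successful attempt.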

## How was this patch tested?

Jenkins

Closes #22910 from zsxwing/fix-flaky-test.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

(cherry picked from commit 6be3cce)

Signed-off-by: gatorsmile <gatorsmile@gmail.com>


What changes were proposed in this pull request?

(Please fill in changes proposed in this fix)

How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

(If this patch involves UI changes, please attach a screenshot; otherwise, remove this)

Please review http://spark.apache.org/contributing.html before opening a pull request.