[SPARK-26114][CORE] ExternalSorter's readingIterator field leak #23083

…nd not trackable on freeing up the memory

…dont need to be fired at task completion

…till the end of the task

|

Test build #4433 has finished for PR 23083 at commit

|

|

Hi @davies, @advancedxy, @rxin, |

Thanks for investigating; this is a nice catch.

However, I am not sure about adding a new interface to TaskContext.

cc @cloud-fan, @jiangxb1987 & @srowen for more comments

```scala
/**
 * Removes a (Java friendly) listener that is no longer needed to be executed on task completion.
 */
def remoteTaskCompletionListener(listener: TaskCompletionListener): TaskContext
```

You mean removeTaskCompletionListener, don't you?

Yep, it seems that v was replaced with t on my keyboard :)

Thanks a lot!

```diff
@@ -99,6 +99,13 @@ private[spark] class TaskContextImpl(
     this
   }

+  override def remoteTaskCompletionListener(listener: TaskCompletionListener)
+      : this.type = synchronized {
+    onCompleteCallbacks -= listener
```

I'm not sure whether we should add removeTaskCompletionListener or not.

If we are going to add this method, then removal is an O(n) operation on an ArrayBuffer. Maybe we need to replace onCompleteCallbacks with a LinkedHashSet?
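To make the trade-off concrete, here is a minimal sketch of a listener registry backed by a `LinkedHashSet`. The names `ListenerRegistrySketch`, `Listener`, and `Registry` are hypothetical stand-ins for illustration only; this is not the code of `TaskContextImpl`.

```scala
import scala.collection.mutable

object ListenerRegistrySketch {
  // Hypothetical stand-in for Spark's TaskCompletionListener.
  trait Listener { def onComplete(): Unit }

  final class Registry {
    // LinkedHashSet keeps registration order and removes by hash lookup,
    // whereas removing from an ArrayBuffer scans the buffer.
    private val callbacks = mutable.LinkedHashSet.empty[Listener]

    def add(l: Listener): this.type = synchronized { callbacks += l; this }

    def remove(l: Listener): this.type = synchronized { callbacks -= l; this }

    // Immutable snapshot of the listeners in registration order.
    def snapshot: Seq[Listener] = synchronized { callbacks.toList }
  }
}
```

One behavioral difference to keep in mind: a set stores a listener only once, so registering the same instance twice would run it once, unlike with an `ArrayBuffer`.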

Should we do the same thing (i.e. changing ArrayBuffer to LinkedHashSet) for onFailureCallbacks too?

```scala
/** List of callback functions to execute when the task completes. */
@transient private val onCompleteCallbacks = new ArrayBuffer[TaskCompletionListener]

/** List of callback functions to execute when the task fails. */
@transient private val onFailureCallbacks = new ArrayBuffer[TaskFailureListener]
```

If we are going to add the new interface, I think so.

Replaced ArrayBuffer with LinkedHashSet. Thank you!

Another interesting question is why these collections are traversed in reverse order in invokeListeners, like the following:

```scala
private def invokeListeners[T](
    listeners: Seq[T],
    name: String,
    error: Option[Throwable])(
    callback: T => Unit): Unit = {
  val errorMsgs = new ArrayBuffer[String](2)
  // Process callbacks in the reverse order of registration
  listeners.reverse.foreach { listener =>
    ...
  }
}
```

I believe @hvanhovell could help us understand. @hvanhovell, could you please remind us why task completion and error listeners are traversed in reverse order (you seem to be the one who added the corresponding line)?
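For readers unfamiliar with the pattern, the following is a small, self-contained sketch of last-registered-first callback invocation. `ReverseCallbacksSketch` and `invokeInReverse` are hypothetical names, and collecting error messages while continuing with the remaining callbacks is an assumption about the elided part of the snippet above, not a copy of Spark's code. One plausible motivation, not confirmed in this thread, is that later-registered resources may depend on earlier ones, much like nested try/finally blocks unwind.

```scala
import scala.collection.mutable.ArrayBuffer
import scala.util.control.NonFatal

object ReverseCallbacksSketch {
  // Runs callbacks in reverse registration order. A failing callback does not
  // prevent the remaining ones from running; its message is collected instead.
  def invokeInReverse(callbacks: Seq[() => Unit]): Seq[String] = {
    val errorMsgs = new ArrayBuffer[String](2)
    callbacks.reverse.foreach { cb =>
      try cb()
      catch { case NonFatal(e) => errorMsgs += String.valueOf(e.getMessage) }
    }
    errorMsgs.toList
  }
}
```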

```scala
// note that holding sorter references till the end of the task also holds
// references to PartitionedAppendOnlyMap and PartitionedPairBuffer too and these
// ones may consume a significant part of the available memory
context.remoteTaskCompletionListener(taskListener)
```

Nice catch.

Like I said above, do we have another way to remove the reference to the sorter?

Great question! Honestly speaking, I don't have a good solution right now.

TaskCompletionListener stops the sorter in case of task failures, cancellations, etc., i.e. in case of abnormal termination. On the "happy path" the task completion listener is not needed.

```diff
@@ -72,7 +73,8 @@ final class ShuffleBlockFetcherIterator(
     maxBlocksInFlightPerAddress: Int,
     maxReqSizeShuffleToMem: Long,
     detectCorrupt: Boolean)
-  extends Iterator[(BlockId, InputStream)] with DownloadFileManager with Logging {
+  extends Iterator[(BlockId, InputStream)] with DownloadFileManager with TaskCompletionListener
```

I don't think it's a good idea for ShuffleBlockFetcherIterator to be a subclass of TaskCompletionListener.

What's wrong with the original solution?

Another field sounds reasonable.

Introduced the corresponding field.

```scala
/**
 * Task completion callback to be called in both success as well as failure cases to cleanup.
 * It may not be called at all in case the `cleanup` method has already been called before
 * task completion.
 */
private[this] val cleanupTaskCompletionListener = (_: TaskContext) => cleanup()
```

And another thing:

So do you mean CoGroupRDDs with multiple input sources will have similar problems? If so, can you create another Jira? |

Yep, but a little bit different ones

Will do it shortly. |

…g TaskCompletionListener interface - using private fields instead, using LinkedHashSets as a collection of task completition listeners to faster lookup-s during listener removals

|

Looking at the code, we are trying to fix 2 memory leaks: the task completion listener in For the task completion listener, I think it's an overkill to introduce a new API, do you know where exactly we leak the memory? and can we null it out when the |

If I understand correctly, the memory is leaked because external sorter is referenced in It's an overkill to introduce a new API. However, I think we can limited it into private[Spark] scope. |

```scala
map = null // So that the memory can be garbage-collected
buffer = null // So that the memory can be garbage-collected
readingIterator = null // So that the memory can be garbage-collected
```

Hi @szhem, I discussed this with wenchen offline. I think this is the key point. After nulling out readingIterator, ExternalSorter should release all the memory it occupied.

Yes, ExternalSorter is leaked in TaskCompletionListener, but on the happy path it would already have been stopped by CompletionIterator. A stopped sorter wouldn't occupy too much memory. The readingIterator is occupying memory because it may reference map/buffer.partitionedDestructiveSortedIterator, which itself references map/buffer. So only nulling out map or buffer is not enough.

Can you try with this modification only and see whether the OOM still occurs?
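The retention chain described above can be illustrated with a toy model. `SorterLeakSketch` and `MiniSorter` below are hypothetical and drastically simplified; they only mimic the shape of the problem (a field holding an iterator that itself captures the backing data) and are not `ExternalSorter`.

```scala
object SorterLeakSketch {
  final class MiniSorter(data: Array[Int]) {
    private var buffer: Array[Int] = data
    private var readingIterator: Iterator[Int] = null

    def iterator: Iterator[Int] = {
      // The iterator captures the backing array, so it keeps the data
      // reachable even after `buffer` itself is set to null.
      readingIterator = buffer.iterator
      readingIterator
    }

    def stop(releaseIterator: Boolean): Unit = {
      buffer = null
      if (releaseIterator) readingIterator = null
    }

    // True while the sorter can still reach unread data through its iterator field.
    def dataStillReachable: Boolean =
      readingIterator != null && readingIterator.hasNext
  }
}
```

In this model, calling `stop(releaseIterator = false)` leaves the data reachable through the sorter, while `stop(releaseIterator = true)` does not, which mirrors why nulling out only `map` and `buffer` is insufficient.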

@advancedxy I've tried removing all the modifications except for this one and got OutOfMemoryErrors once again. Here are the details:

- Now there are 4 ExternalSorters remaining: 2 of them are not closed ones ... and 2 of them are closed ones ... as expected
- There are 2 SpillableIterators (which consume a significant part of memory) of already closed ExternalSorters remaining
- These SpillableIterators are referenced by CompletionIterators ... which in turn seem to be referenced by the cur field ... of the standard Iterator's flatMap that is used in the compute method of CoalescedRDD

The standard Iterator's flatMap does not clean up its cur field before obtaining the next value for it, and obtaining that value will in turn consume quite a lot of memory too. In Spark's case that means the previous iterator, still holding its memory, stays alive while the next value is being fetched.

So I've restored the changes made to CompletionIterator that reassign the reference to its sub-iterator to an empty iterator, and that has helped (updated the PR correspondingly).

P.S. Cleaning up the standard flatMap iterator's cur field before calling nextCur will help too (here is the corresponding issue but I don't know whether it will be accepted or not)

```scala
def flatMap[B](f: A => GenTraversableOnce[B]): Iterator[B] = new AbstractIterator[B] {
  private var cur: Iterator[B] = empty
  private def nextCur() { cur = f(self.next()).toIterator }
  def hasNext: Boolean = {
    // Equivalent to cur.hasNext || self.hasNext && { nextCur(); hasNext }
    // but slightly shorter bytecode (better JVM inlining!)
    while (!cur.hasNext) {
      cur = empty
      if (!self.hasNext) return false
      nextCur()
    }
    true
  }
  def next(): B = (if (hasNext) cur else empty).next()
}
```

Nice. Case well explained.

But I think you need to add corresponding test cases for CompletionIterator and ExternalSorter.

I've added a test case for CompletionIterator.

Regarding ExternalSorter: taking into account that only the private API has been changed and there are no similar test cases which verify that the private map and buffer fields are set to null after the sorter stops, don't you think that the already existing tests will cover the situation with readingIterator too?

|

Hi @cloud-fan

I've updated the description and the title of this PR correspondingly. |

Add corresponding unit test please.

|

ok to test |

|

LGTM, thanks for your great work! |

|

Test build #99254 has finished for PR 23083 at commit

|

|

retest this please |

|

Test build #99267 has finished for PR 23083 at commit

|

|

retest this please |

|

Test build #99309 has finished for PR 23083 at commit

|

|

retest this please |

|

Test build #99313 has finished for PR 23083 at commit

|

|

retest this please |

|

Test build #99326 has finished for PR 23083 at commit

|

|

retest this please |

|

Test build #99351 has finished for PR 23083 at commit

|

|

retest this please |

|

Test build #99360 has finished for PR 23083 at commit

|

|

thanks, merging to master/2.4! |

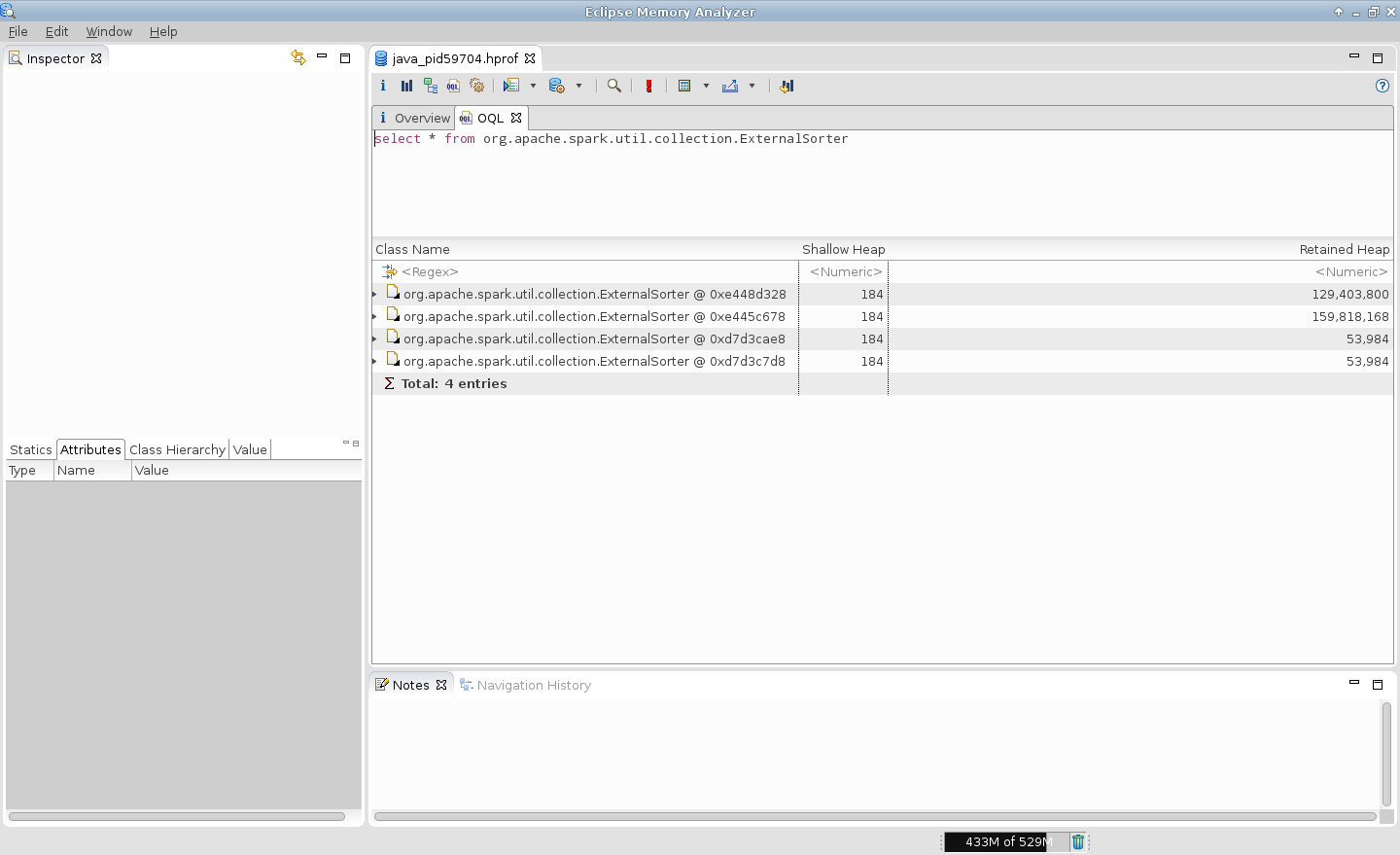

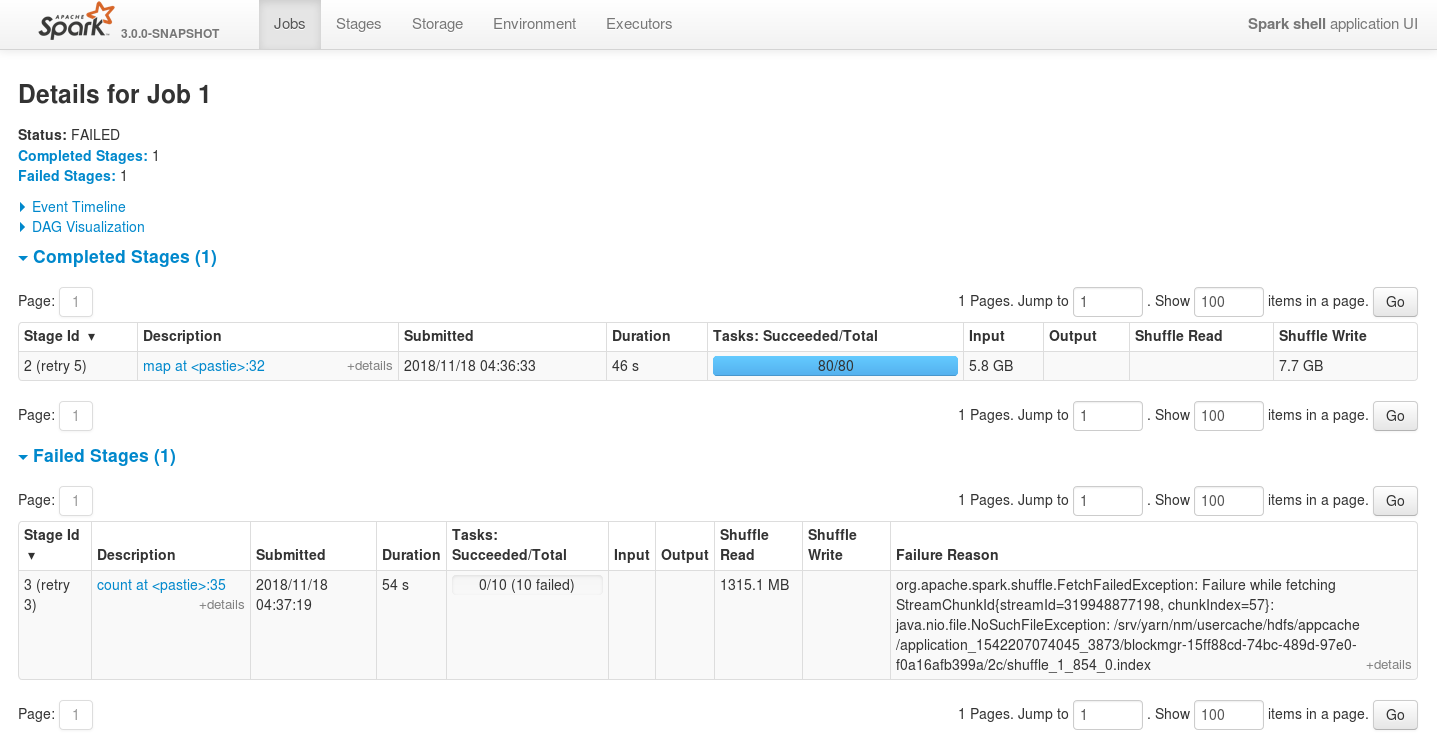

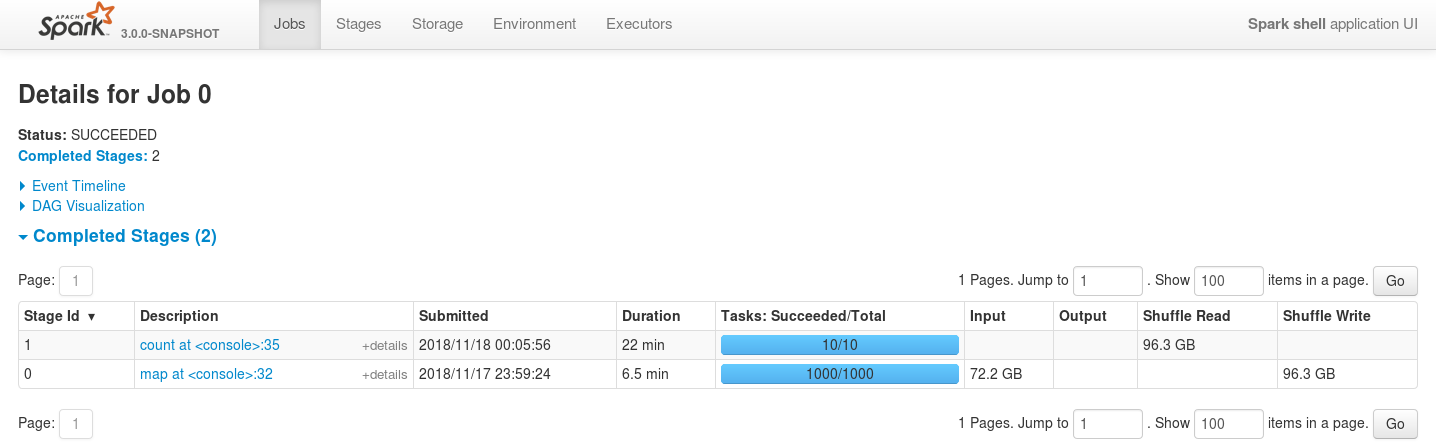

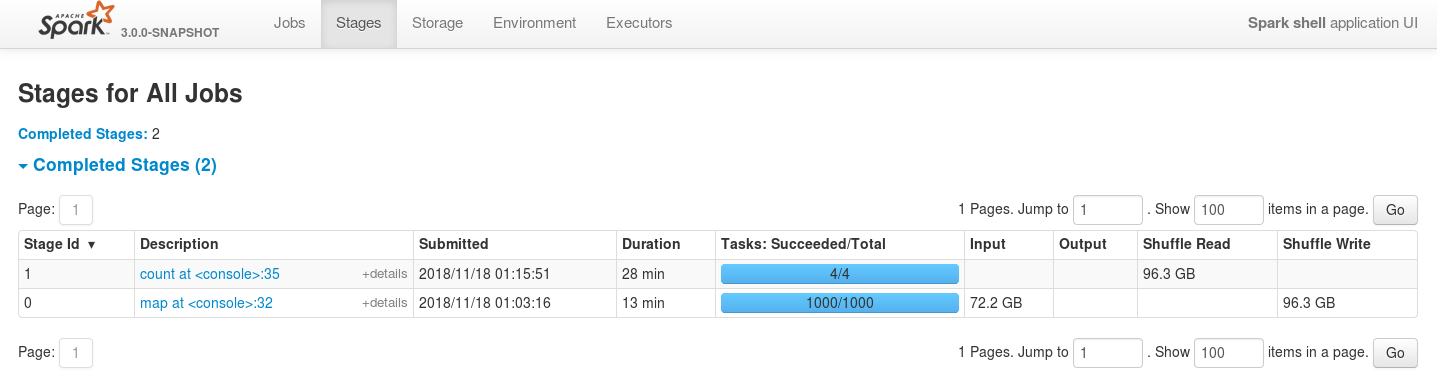

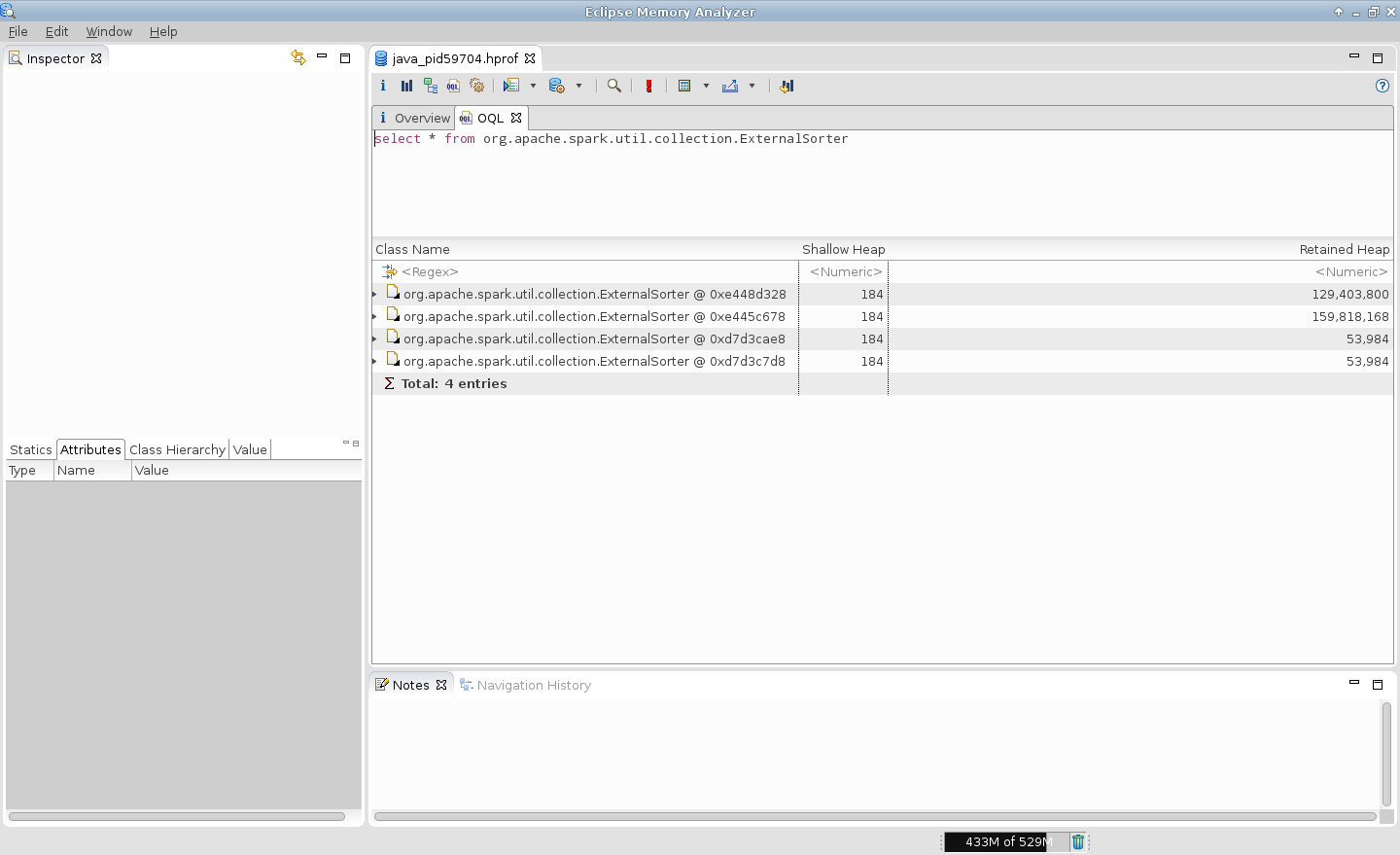

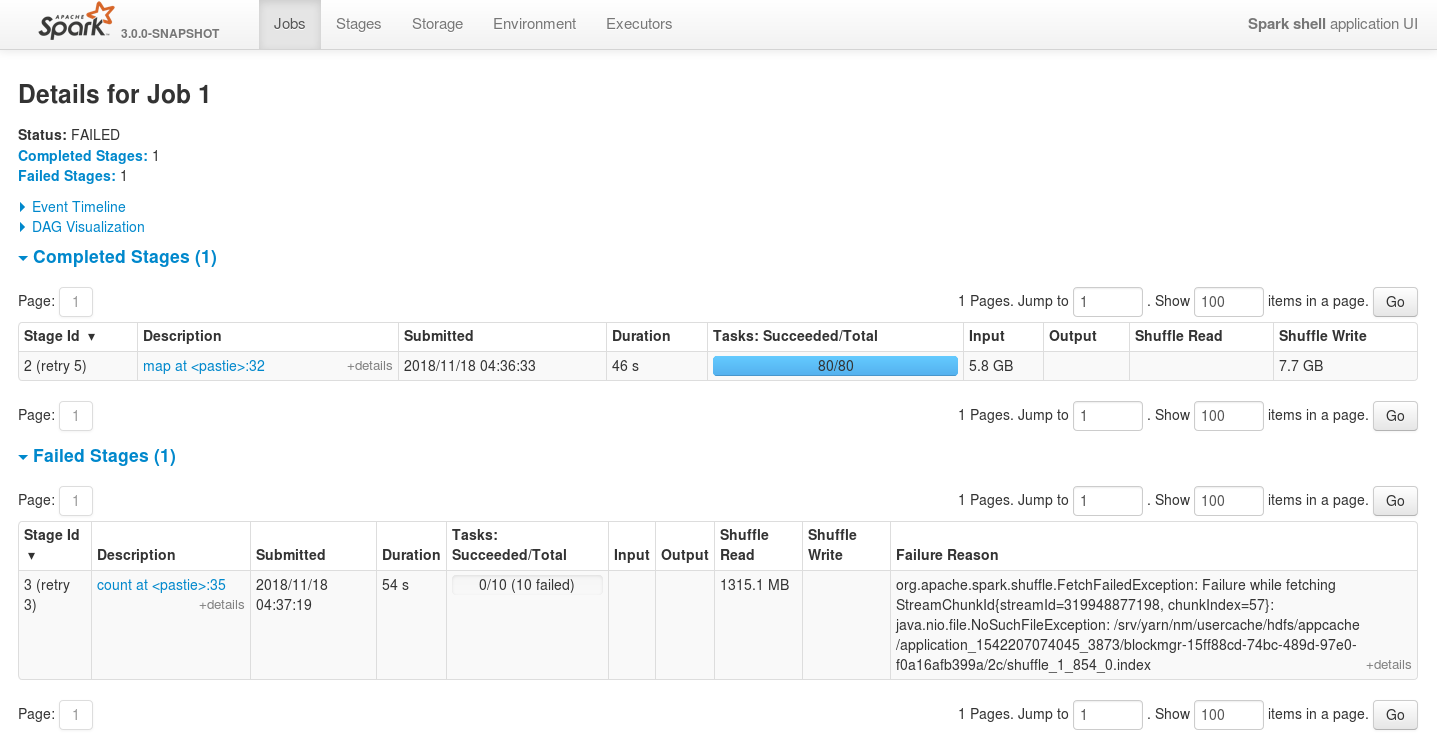

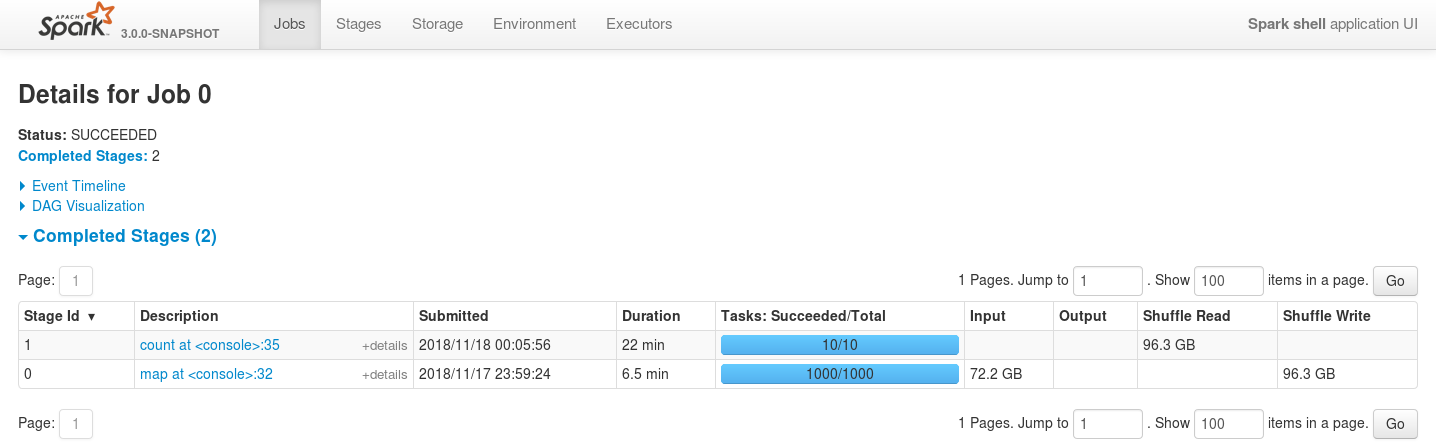

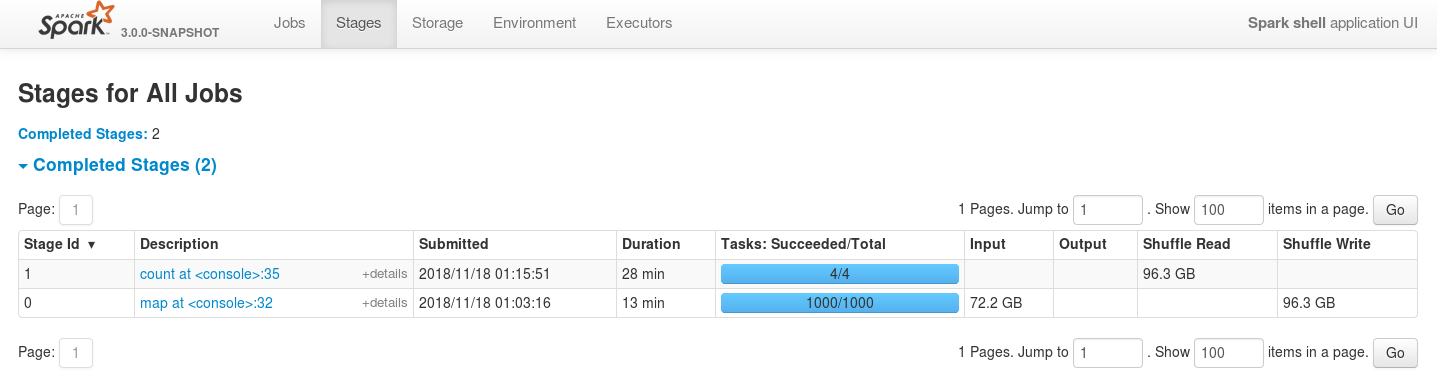

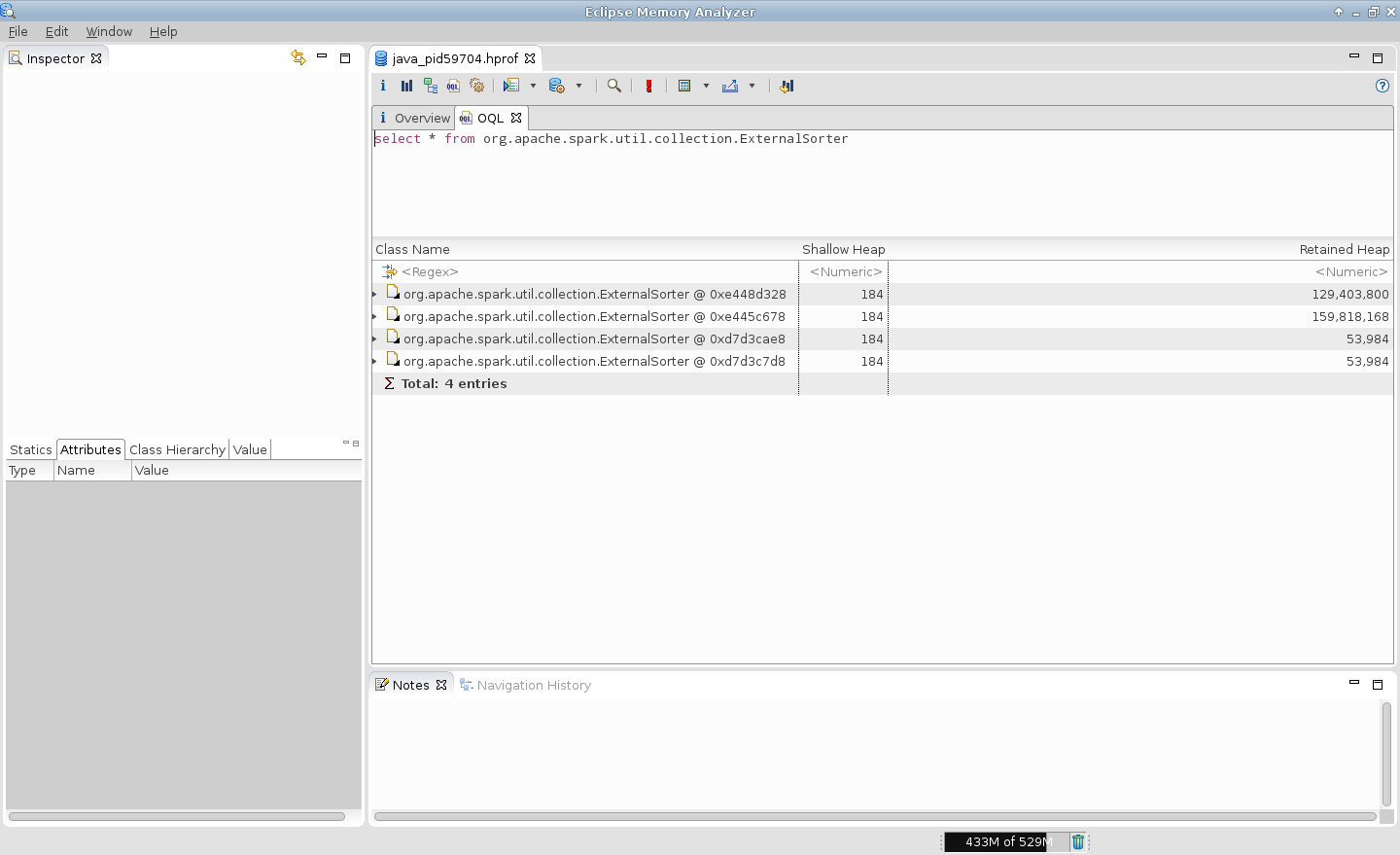

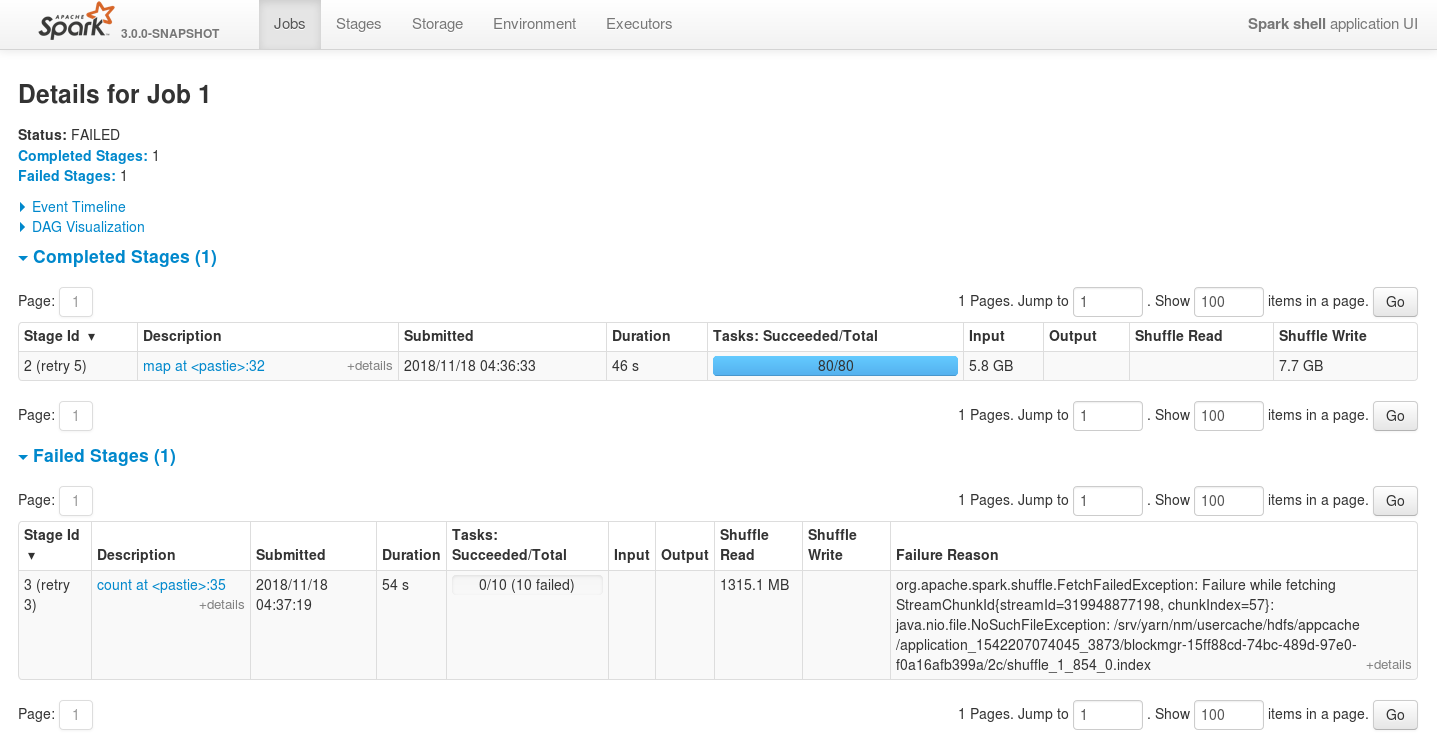

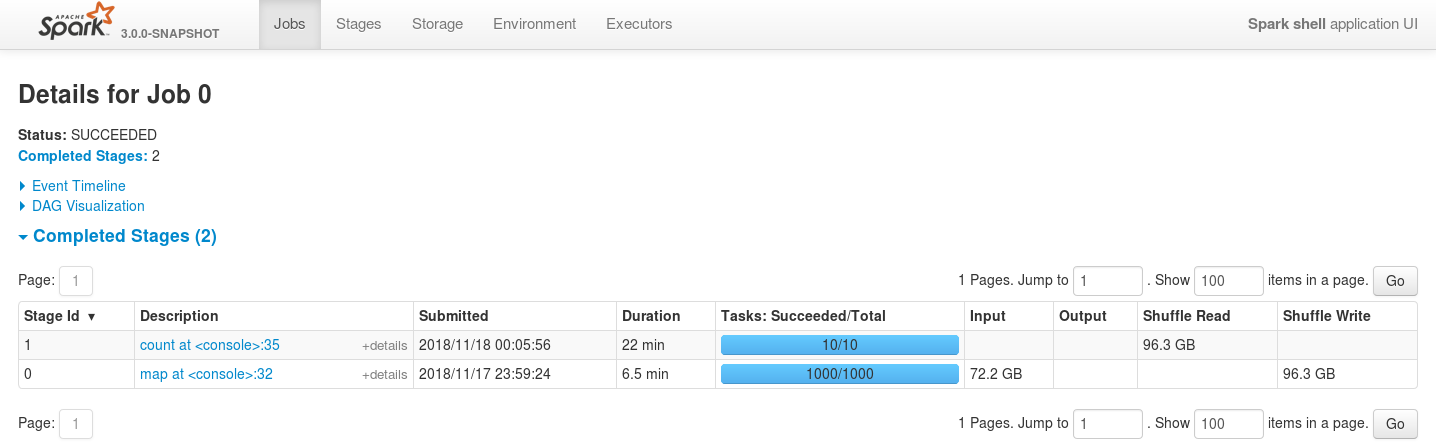

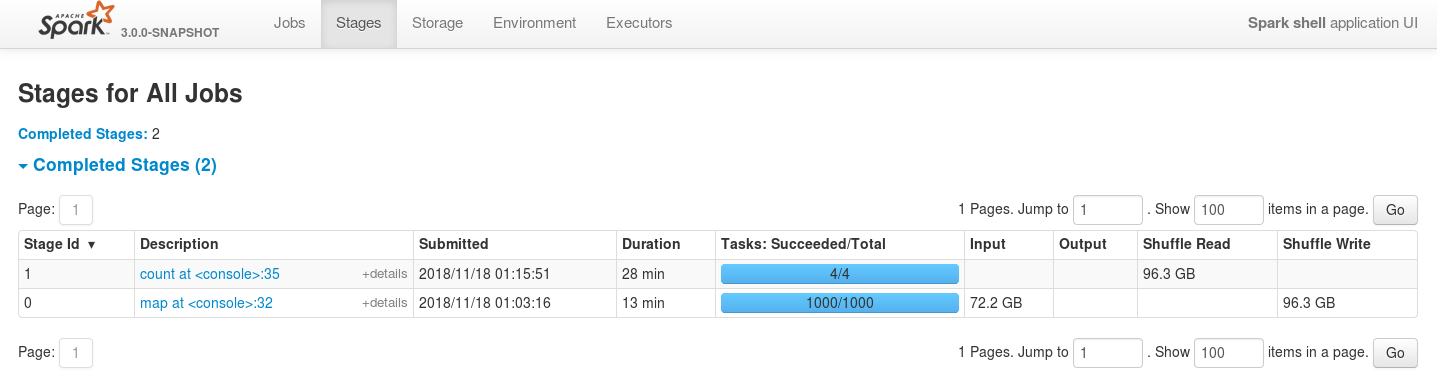

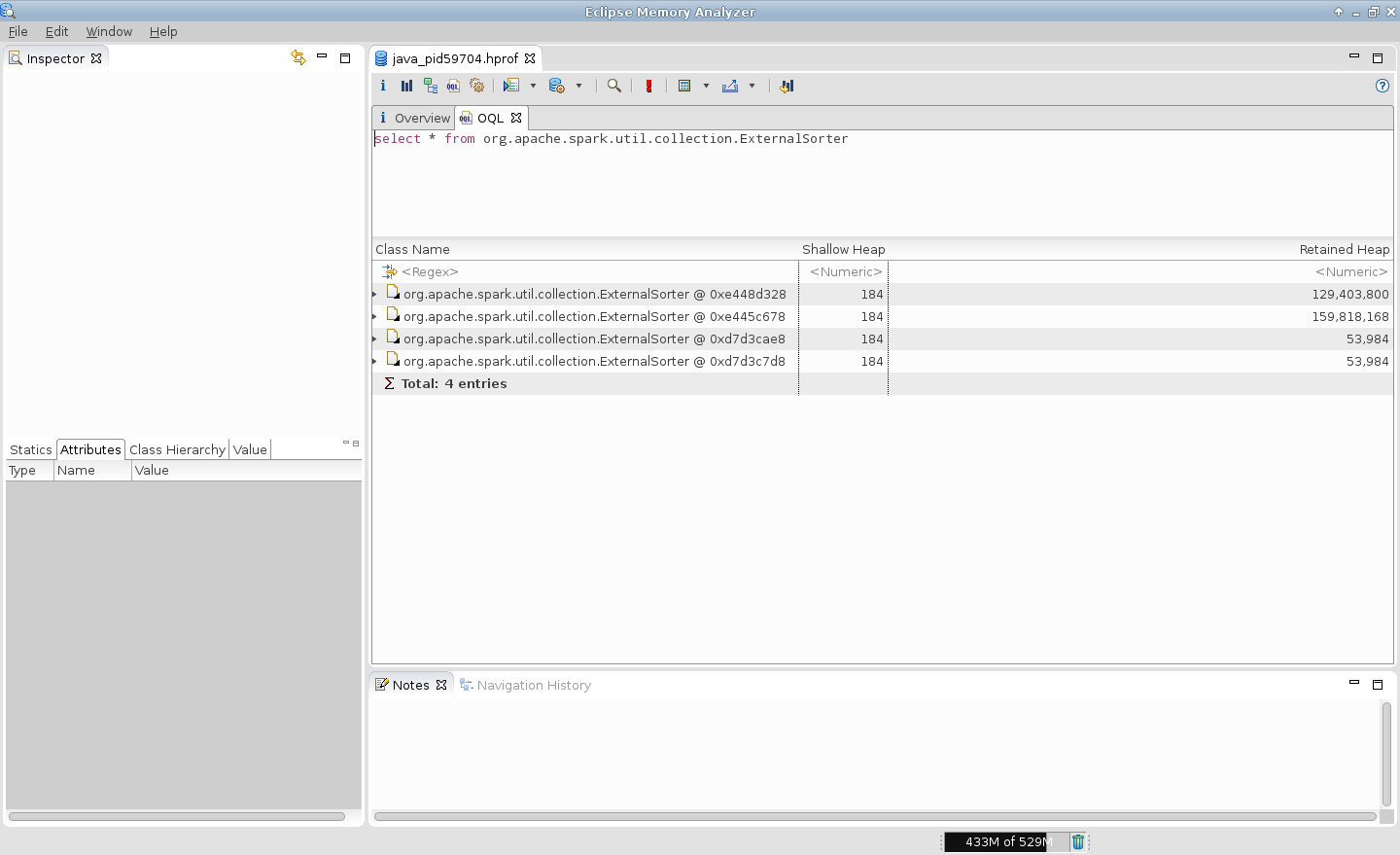

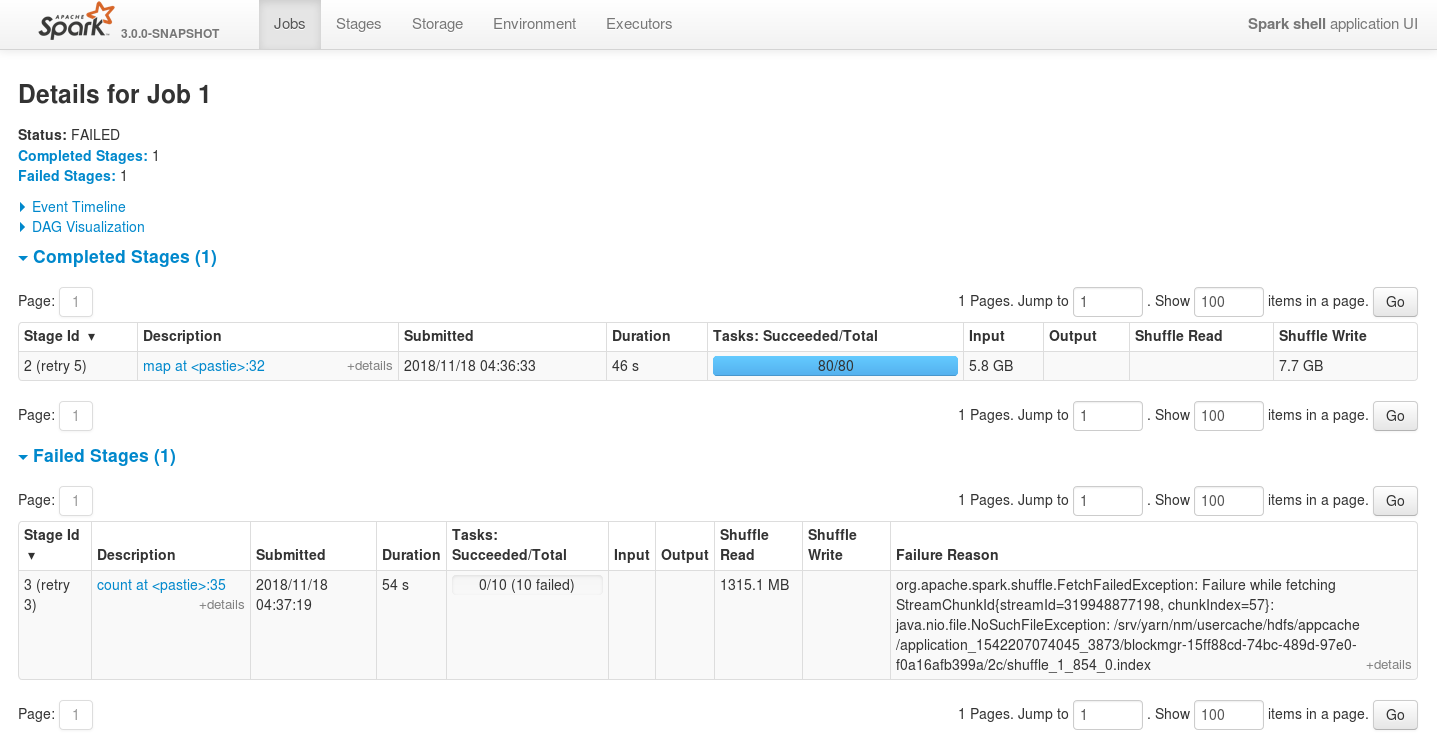

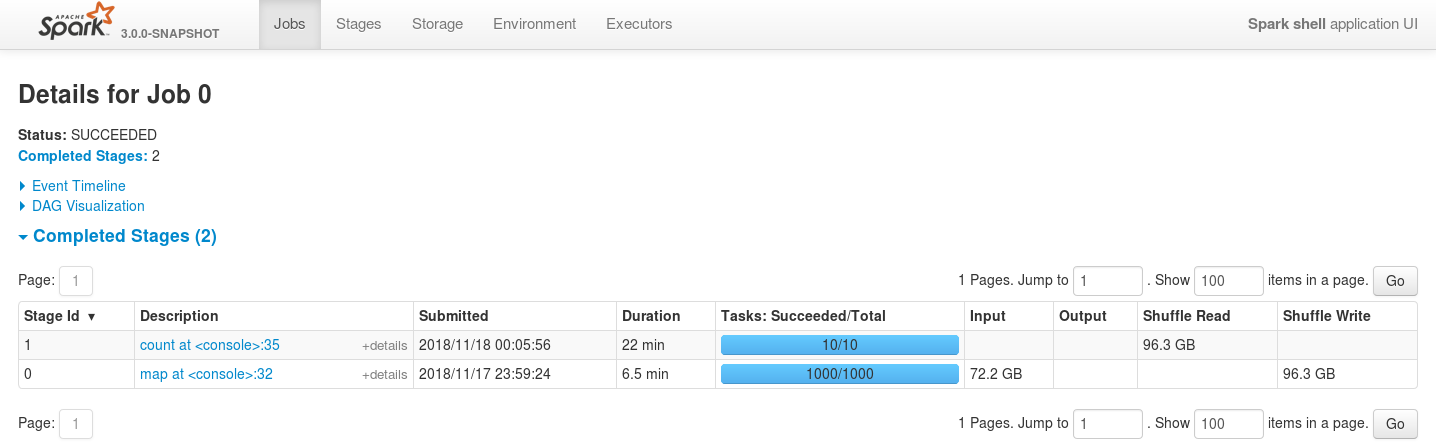

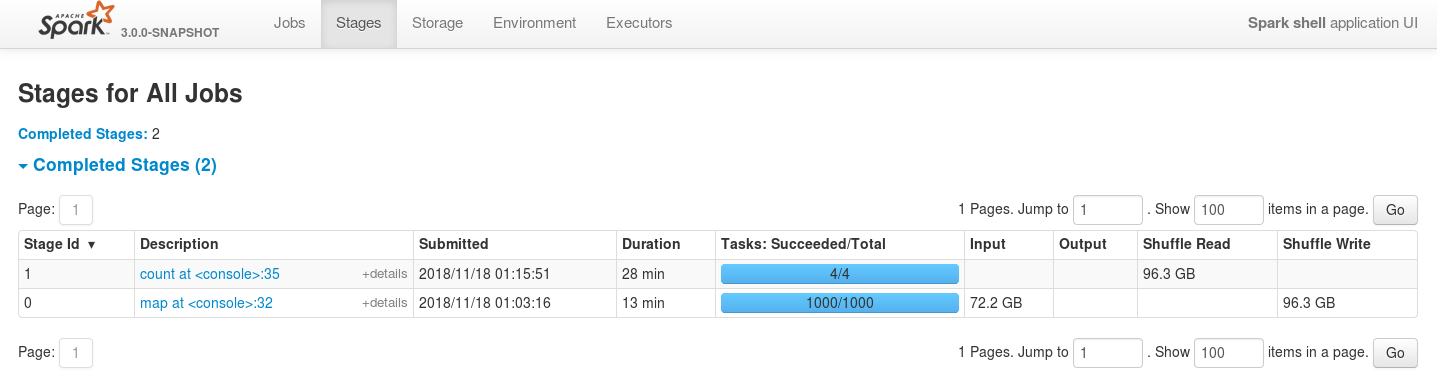

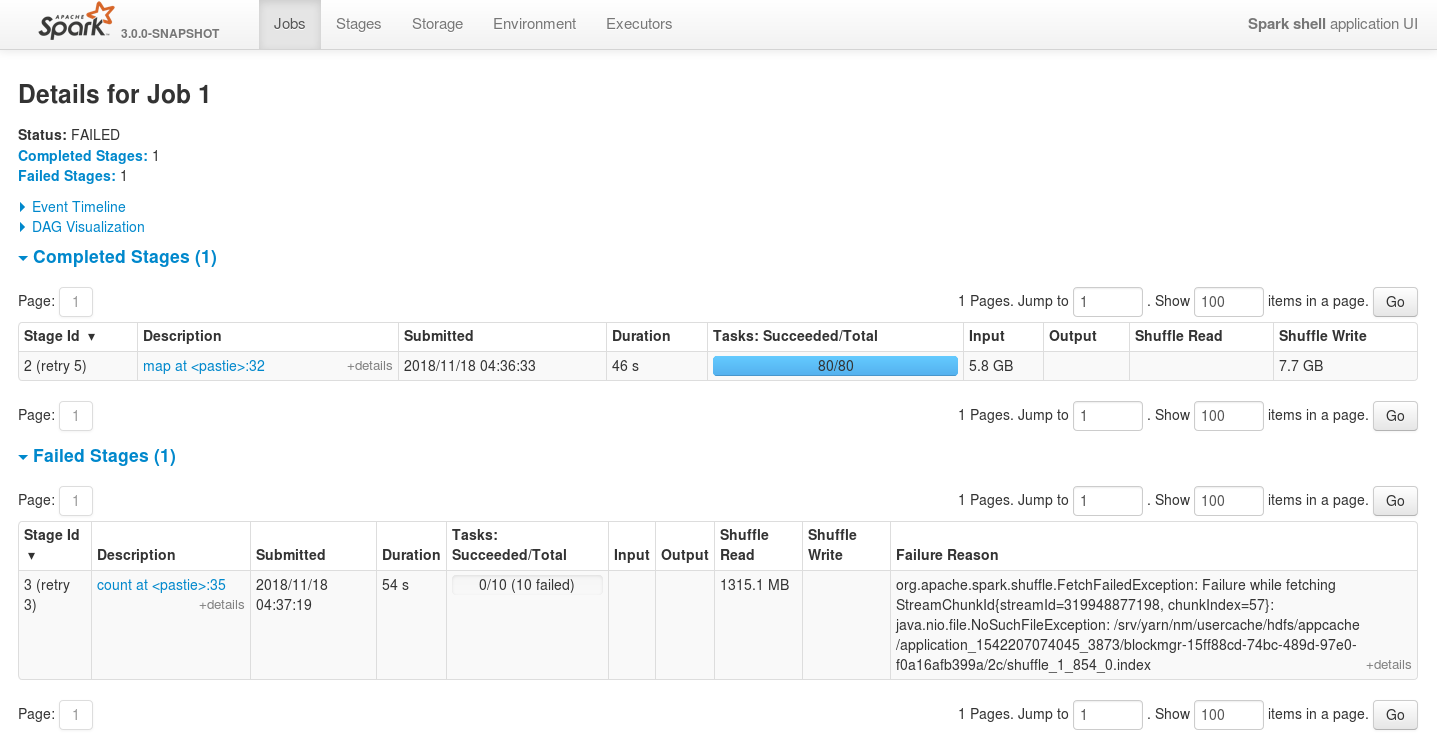

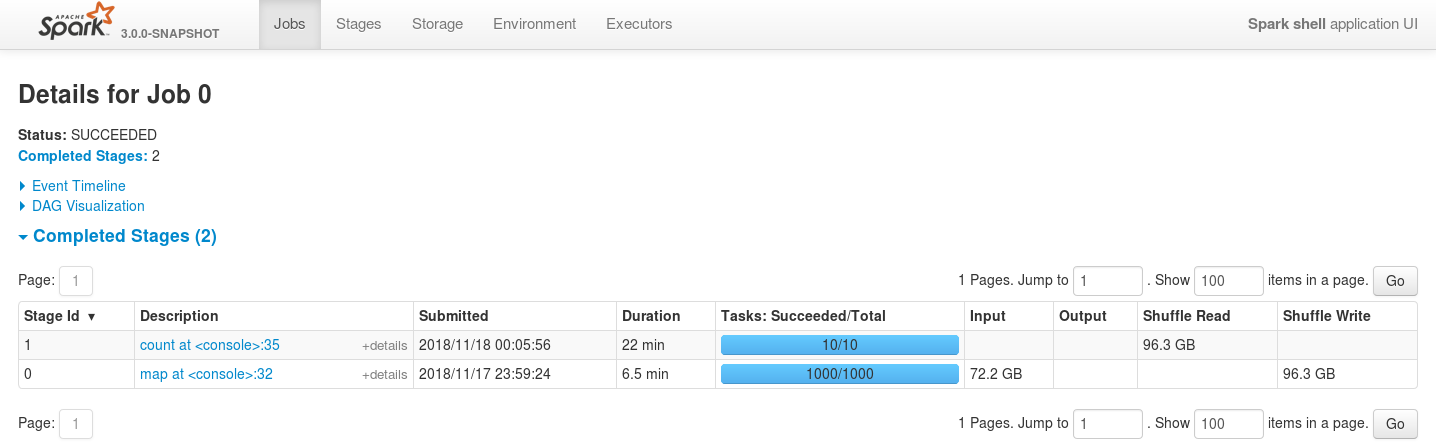

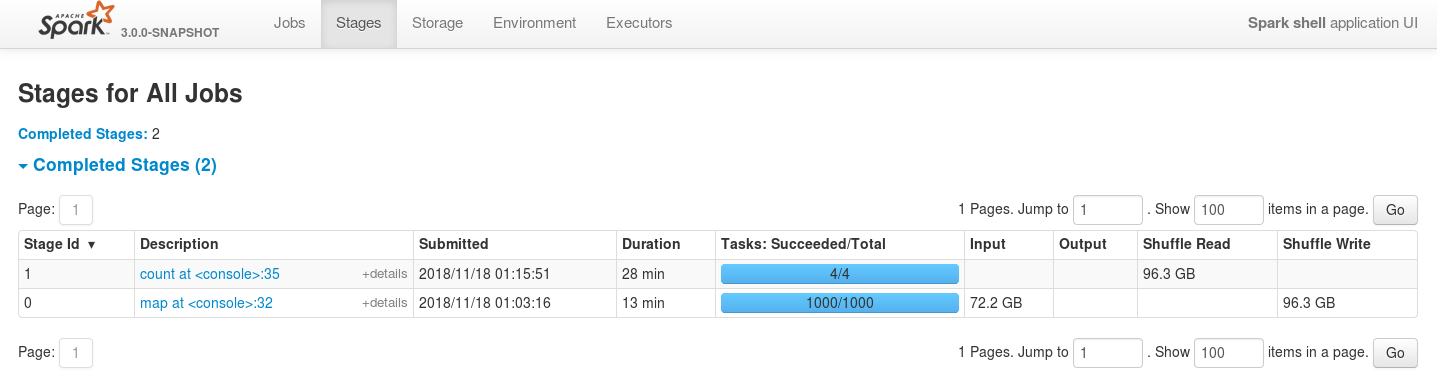

## What changes were proposed in this pull request?

This pull request fixes the [SPARK-26114](https://issues.apache.org/jira/browse/SPARK-26114) issue that occurs when trying to reduce the number of partitions by means of coalesce without shuffling after shuffle-based transformations.

The leak occurs because `ExternalSorter`'s `readingIterator` field is not cleaned up the way its `map` and `buffer` fields are.

Additionally there are changes to the `CompletionIterator` to prevent capturing its `sub`-iterator and holding it even after the completion iterator completes. This is necessary because in some cases, e.g. in the case of standard Scala's `flatMap` iterator (which is used in `CoalescedRDD`'s `compute` method), the next value of the main iterator is assigned to `flatMap`'s `cur` field only after it is available.

For DAGs where ShuffledRDD is a parent of CoalescedRDD it means that the data should be fetched from the map-side of the shuffle, but the process of fetching this data consumes quite a lot of memory in addition to the memory already consumed by the iterator held by `flatMap`'s `cur` field (until it is reassigned).

For the following data

```scala
import org.apache.hadoop.io._
import org.apache.hadoop.io.compress._
import org.apache.commons.lang._
import org.apache.spark._

// generate 100M records of sample data
sc.makeRDD(1 to 1000, 1000)
  .flatMap(item => (1 to 100000)
    .map(i => new Text(RandomStringUtils.randomAlphanumeric(3).toLowerCase) -> new Text(RandomStringUtils.randomAlphanumeric(1024))))
  .saveAsSequenceFile("/tmp/random-strings", Some(classOf[GzipCodec]))
```

and the following job

```scala
import org.apache.hadoop.io._
import org.apache.spark._
import org.apache.spark.storage._

val rdd = sc.sequenceFile("/tmp/random-strings", classOf[Text], classOf[Text])
rdd
  .map(item => item._1.toString -> item._2.toString)
  .repartitionAndSortWithinPartitions(new HashPartitioner(1000))
  .coalesce(10,false)
  .count
```

... executed like the following

```bash
spark-shell \
  --num-executors=5 \
  --executor-cores=2 \
  --master=yarn \
  --deploy-mode=client \
  --conf spark.executor.memoryOverhead=512 \
  --conf spark.executor.memory=1g \
  --conf spark.dynamicAllocation.enabled=false \
  --conf spark.executor.extraJavaOptions='-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp -Dio.netty.noUnsafe=true'
```

... executors are always failing with OutOfMemoryErrors.

The main issue is multiple leaks of ExternalSorter references. For example, in case of 2 tasks per executor, 2 simultaneous instances of ExternalSorter per executor are expected, but the heap dump generated on OutOfMemoryError shows that there are more.

P.S. This PR does not cover cases with CoGroupedRDDs which use ExternalAppendOnlyMap internally, which itself can lead to OutOfMemoryErrors in many places.

## How was this patch tested?

- Existing unit tests
- New unit tests
- Job executions on the live environment

Here is the screenshot before applying this patch

Here is the screenshot after applying this patch

And in case of reducing the number of executors even more the job is still stable

Closes #23083 from szhem/SPARK-26114-externalsorter-leak.

Authored-by: Sergey Zhemzhitsky <szhemzhitski@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>
(cherry picked from commit 438f8fd)
Signed-off-by: Wenchen Fan <wenchen@databricks.com>

```scala
if (!r && !completed) {
  completed = true
  // reassign to release resources of highly resource consuming iterators early
  iter = Iterator.empty.asInstanceOf[I]
```
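As a rough illustration of the idea in this hunk, here is a simplified, self-contained iterator wrapper that drops its delegate once it is exhausted. `CompletionIteratorSketch` and `ReleasingIterator` are hypothetical names; the real `CompletionIterator` has a different signature, so treat this as a sketch of the technique rather than the merged code.

```scala
object CompletionIteratorSketch {
  // `sub` is a plain constructor parameter used only to initialise `iter`,
  // so the wrapper is not expected to keep a second reference to it in a field.
  final class ReleasingIterator[A](sub: Iterator[A], onComplete: () => Unit)
      extends Iterator[A] {
    private[this] var completed = false
    private[this] var iter: Iterator[A] = sub

    def hasNext: Boolean = {
      val r = iter.hasNext
      if (!r && !completed) {
        completed = true
        // Swap in an empty iterator so the exhausted delegate (and whatever
        // it references) can be collected even if this wrapper stays alive.
        iter = Iterator.empty
        onComplete()
      }
      r
    }

    def next(): A = iter.next()
  }
}
```

The callback fires exactly once, and later calls to `hasNext` keep returning `false` against the empty replacement iterator.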

@ChenjunZou, you can find the details in this message

Thanks, szhem :)

Your UT explains it all.

At first I misunderstood sub as CompletionIterator(val sub).

Hidden, well done!

What changes were proposed in this pull request?

This pull request fixes the SPARK-26114 issue that occurs when trying to reduce the number of partitions by means of coalesce without shuffling after shuffle-based transformations.

The leak occurs because ExternalSorter's readingIterator field is not cleaned up the way its map and buffer fields are.

Additionally there are changes to the CompletionIterator to prevent capturing its sub-iterator and holding it even after the completion iterator completes. This is necessary because in some cases, e.g. in the case of standard Scala's flatMap iterator (which is used in CoalescedRDD's compute method), the next value of the main iterator is assigned to flatMap's cur field only after it is available.

For DAGs where ShuffledRDD is a parent of CoalescedRDD it means that the data should be fetched from the map-side of the shuffle, but the process of fetching this data consumes quite a lot of memory in addition to the memory already consumed by the iterator held by flatMap's cur field (until it is reassigned).

For the following data

and the following job

... executed like the following

... executors are always failing with OutOfMemoryErrors.

The main issue is multiple leaks of ExternalSorter references.

For example, in case of 2 tasks per executor it is expected to be 2 simultaneous instances of ExternalSorter per executor but heap dump generated on OutOfMemoryError shows that there are more ones.

P.S. This PR does not cover cases with CoGroupedRDDs which use ExternalAppendOnlyMap internally, which itself can lead to OutOfMemoryErrors in many places.

How was this patch tested?

Here is the screenshot before applying this patch

Here is the screenshot after applying this patch

And in case of reducing the number of executors even more the job is still stable