[SPARK-28930][SQL] Last Access Time value shall display 'UNKNOWN' in all clients #25720

Test build #110299 has finished for PR 25720.

Branch updated from 87e3f64 to 6051f0b.

Test build #110300 has finished for PR 25720.

Branch updated from 6051f0b to 51def4f.

Test build #110353 has finished for PR 25720.

Branch updated from 51def4f to 2568d50.

…currently the system cannot evaluate the last access time, and 'null' values will be shown in their capital form 'NULL' for the SQL client to make the display format similar to spark-shell.

What changes were proposed in this pull request?

If there is no comment, the Spark Scala shell shows "null" in lowercase, but everywhere else (Hive beeline, Spark beeline, Spark SQL) it is shown in uppercase as "NULL". This patch shows it in its capital form 'NULL' for the SQL client, to make the display format consistent with Hive beeline, Spark beeline and Spark SQL. It also corrects the Last Access time: the value shall display 'UNKNOWN', since the system currently does not support evaluating the last access time.

Issue 2 mentioned in JIRA: Spark SQL "desc formatted tablename" does not show the header # col_name,data_type,comment; it seems the header was removed intentionally as part of SPARK-20954.

Does this PR introduce any user-facing change?

No

How was this patch tested?

Locally, and corrected a UT.

Test build #110362 has finished for PR 25720.

retest this please

Test build #110418 has finished for PR 25720.

I'm not sure this is a problem. These are different formats for the same info. In the case of a Spark DataFrame, …

@srowen Let me know if you have any further input. Thanks for your suggestions, Sean.


Test build #110465 has finished for PR 25720.

@srowen @dongjoon-hyun I've addressed the review comments; let me know if you have any further input. Thanks.

Gentle ping @srowen @dongjoon-hyun

I still don't quite understand it: are you trying to fix a cosmetic issue or a bug? I don't see a test that now passes with this change but didn't before. But I am also not clear that "UNKNOWN" or "NULL" is the desired output, either.

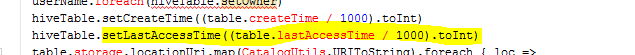

After the fix for SPARK-24812, the expectation is that 'Last Access' is displayed as 'UNKNOWN', but this is not happening right now: the value is shown as [Last Access,Thu Jan 01 08:00:00 CST 1970]. From my analysis, the current logic `if (-1 == lastAccessTime) "UNKNOWN" else new Date(lastAccessTime).toString` fails because the Last Access value comes in as 0. It is basically a cosmetic bug. Even in Hive (verified in 1.2), Last Access is shown as 'UNKNOWN', because there is currently no mechanism for evaluating the last access time.
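A minimal Scala sketch of the failure described above, assuming a standalone helper that copies the quoted condition (the object and method names are hypothetical, not Spark's actual code). Only -1 is recognized as "not evaluated", so a metastore value of 0 falls through to `new Date(0)`, which renders the Unix epoch in the JVM's default time zone (in a UTC+8 zone this matches the 1970 value in the report).

```scala
import java.util.Date

// Hypothetical standalone helper mirroring the condition quoted above:
// only -1 is treated as "not evaluated".
object LastAccessBefore {
  def lastAccessString(lastAccessTime: Long): String =
    if (-1 == lastAccessTime) "UNKNOWN" else new Date(lastAccessTime).toString

  def main(args: Array[String]): Unit = {
    // Spark's own default of -1 is recognized as unknown.
    println(lastAccessString(-1L))
    // A metastore value of 0 is not caught: it is formatted as the Unix epoch
    // in the JVM's default time zone instead of printing UNKNOWN.
    println(lastAccessString(0L))
  }
}
```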

OK, I see that we already intend to display "UNKNOWN" to match Hive in some cases. This does not change? Are you saying that Spark will use -1 and Hive will use 0 in the metastore for unknown values? Then why change Spark's value? You can just treat <= 0 as unknown?
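The suggestion to treat <= 0 as unknown can be sketched the same way. Again the names are hypothetical and this is an illustration of the condition, not necessarily the exact code that was merged: both Spark's -1 default and the 0 read back from the Hive metastore map to "UNKNOWN", while any positive timestamp is still formatted with `Date.toString`.

```scala
import java.util.Date

// Hypothetical helper sketching the suggested condition: any non-positive
// value (Spark's -1 default or the 0 read back from the Hive metastore)
// is treated as "not evaluated".
object LastAccessAfter {
  def lastAccessString(lastAccessTime: Long): String =
    if (lastAccessTime <= 0) "UNKNOWN" else new Date(lastAccessTime).toString

  def main(args: Array[String]): Unit = {
    println(lastAccessString(-1L)) // UNKNOWN
    println(lastAccessString(0L))  // UNKNOWN
    // A real (positive) timestamp is still formatted with Date.toString.
    println(lastAccessString(1568643840000L))
  }
}
```

Widening the check on the display side leaves Spark's own -1 sentinel untouched, which is the point of the suggestion: no stored value has to change.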

|

Yeah, that seems to be a better solution. I will update the condition then.

Thanks.

On Mon, 16 Sep 2019 at 7:44 PM, Sean Owen wrote:

> You can just treat <= 0 as unknown?


Updated as per the suggestion. Thanks for the input, Sean.

On Mon, 16 Sep 2019 at 7:57 PM, sujith chacko wrote:

> Yeah, that seems to be a better solution. Will update the condition then.

Given the review of #21775, do you have an opinion, @gatorsmile?

Test build #110647 has finished for PR 25720.

retest this please

Test build #110736 has finished for PR 25720.

retest this please

Test build #110767 has finished for PR 25720.

The failures seem unrelated to my changes; I will trigger the tests once again (:

retest this please

Test build #110776 has finished for PR 25720.

Merged to master.

What changes were proposed in this pull request?

Issue 1: No modifications are required, as these are different formats for the same information. In the case of a Spark DataFrame, null is correct.

Issue 2: As mentioned in JIRA, Spark SQL "desc formatted tablename" does not show the header # col_name,data_type,comment; it seems the header was removed intentionally as part of SPARK-20954.

Issue 3:

Corrected the Last Access time: the value shall display 'UNKNOWN', since the system currently does not support evaluating the last access time. Hive sets the last access time to 0 in the metastore, even though Spark's CatalogTable sets it to -1, so the validation logic for LastAccessTime, where Spark shows 'UNKNOWN' only if the value is -1 (meaning not evaluated), did not apply. Values <= 0 are now treated as unknown.

Does this PR introduce any user-facing change?

No

How was this patch tested?

Locally, and corrected a UT.

Attaching the test report below