[SPARK-33033][WEBUI] display time series view for task metrics in history server #29908

What changes were proposed in this pull request?

Why are the changes needed?

The event log contains every task's metrics data, which is useful for performance debugging. Currently, the Spark UI only displays final aggregated results, which hides much of this information. A time series view would make performance problems easier to debug. We would like to build an application statistics page in the history server, based on task metrics, to give more straightforward insight into Spark applications.

The application statistics page contains the following views:

Execution Throughput: number of completed tasks, stages, and jobs per minute (the associated stage IDs can be viewed in the tooltip).

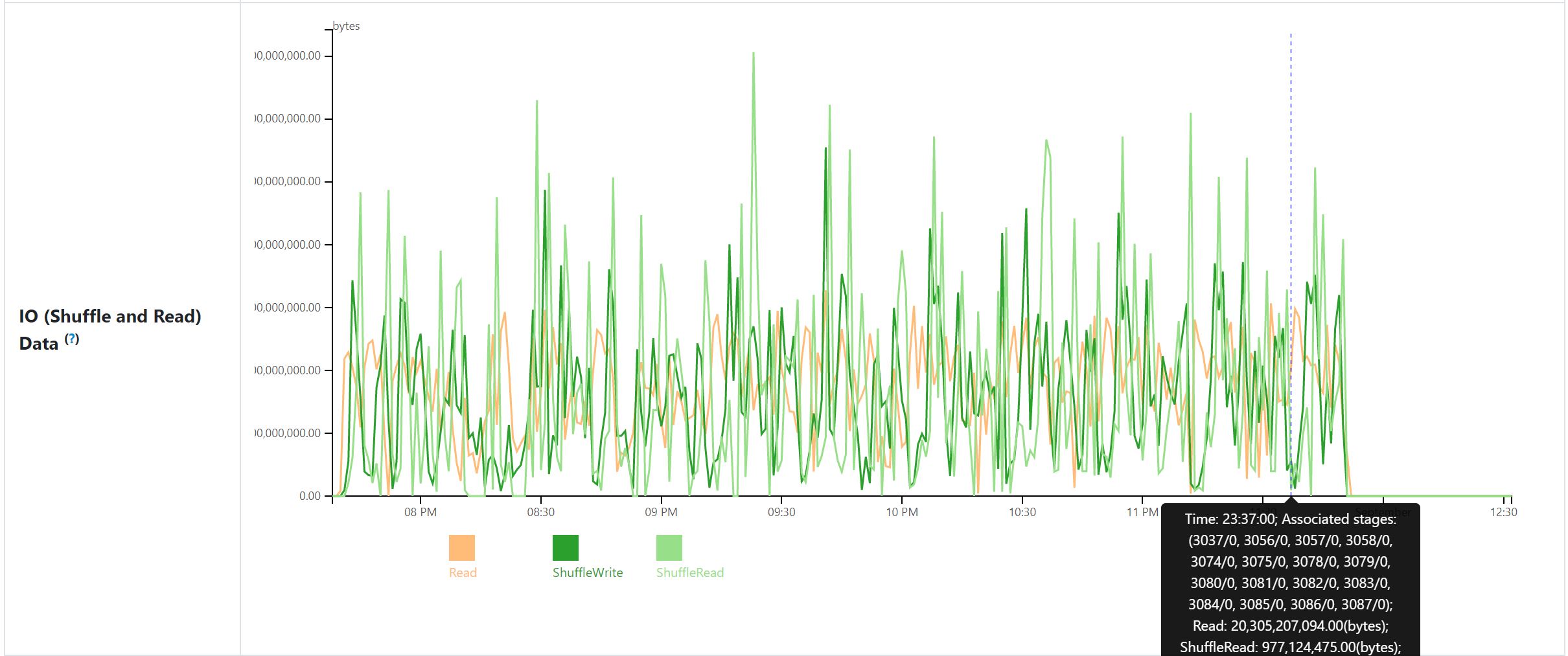
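A minimal sketch of the per-minute throughput aggregation for tasks, assuming each finished-task record has already been tagged with the end of its one-minute bucket. `FinishedTask`, `Throughput`, and `ThroughputView` are hypothetical names for illustration, not classes from this PR or from Spark. Stage and job counts would follow the same pattern over their completion events.

```scala
import scala.collection.immutable.SortedMap

// Hypothetical record (not a Spark class): one finished task, tagged with the
// end of its one-minute bucket in epoch milliseconds.
final case class FinishedTask(minute: Long, stageId: Int)

// Per-minute throughput: number of tasks completed in the bucket plus the
// distinct stage IDs they belong to (the kind of data a tooltip could show).
final case class Throughput(completedTasks: Int, stageIds: Set[Int])

object ThroughputView {
  // Groups tasks by bucket and returns the buckets in time order.
  def perMinute(tasks: Seq[FinishedTask]): SortedMap[Long, Throughput] =
    SortedMap.empty[Long, Throughput] ++ tasks.groupBy(_.minute).map {
      case (minute, ts) => (minute, Throughput(ts.size, ts.map(_.stageId).toSet))
    }
}
```

Returning a `SortedMap` keeps the buckets in chronological order, which is what a time series chart would iterate over.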

IO (Shuffle and Read) Data: total shuffle read bytes, shuffle write bytes, and read bytes per minute.

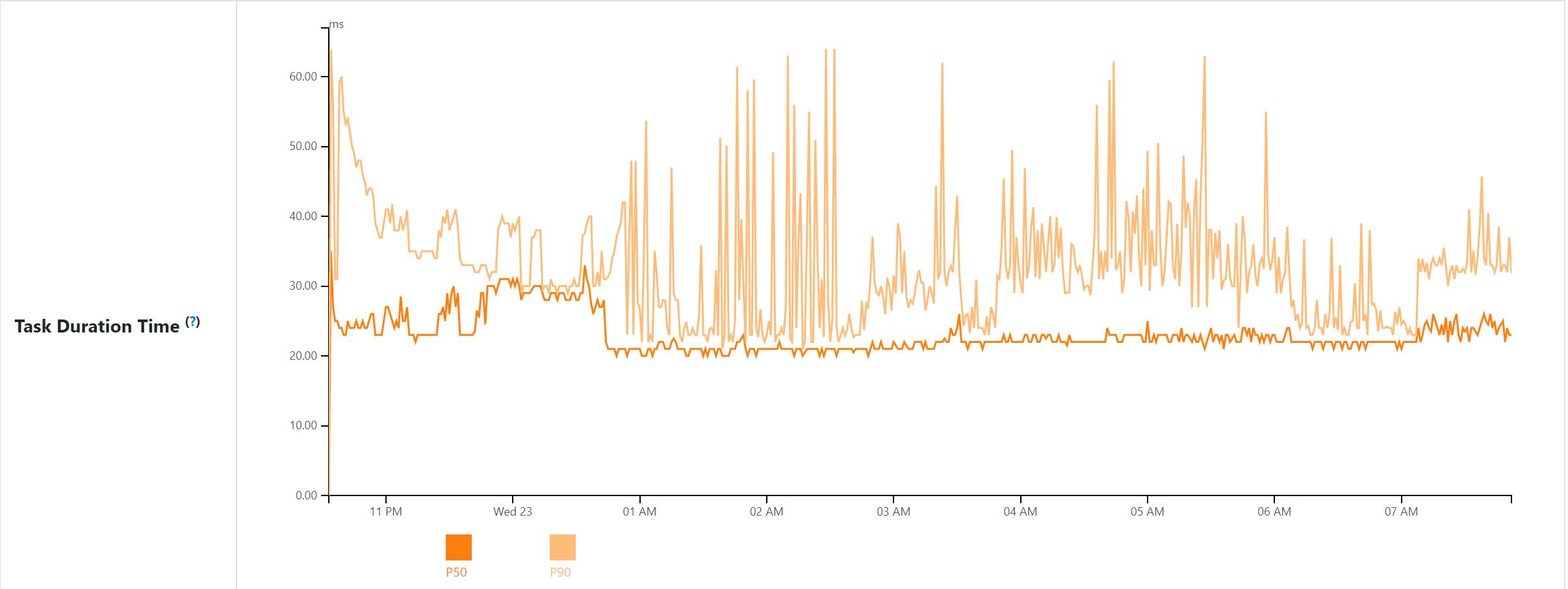
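The IO view sums three byte counters per minute. A sketch, assuming per-task IO records that already carry their minute bucket; `TaskIo`, `IoTotals`, and `IoView` are hypothetical names, not the PR's KVStore classes.

```scala
// Hypothetical per-task IO record (not a Spark class), tagged with the end of
// its one-minute bucket in epoch milliseconds.
final case class TaskIo(minute: Long, shuffleReadBytes: Long, shuffleWriteBytes: Long, inputBytes: Long)

// Running totals for one minute bucket.
final case class IoTotals(shuffleReadBytes: Long, shuffleWriteBytes: Long, inputBytes: Long) {
  // Adds one task's byte counts to these totals.
  def add(t: TaskIo): IoTotals =
    IoTotals(
      shuffleReadBytes + t.shuffleReadBytes,
      shuffleWriteBytes + t.shuffleWriteBytes,
      inputBytes + t.inputBytes)
}

object IoView {
  // Sums shuffle read, shuffle write, and input bytes per minute bucket.
  def perMinute(tasks: Seq[TaskIo]): Map[Long, IoTotals] =
    tasks.groupBy(_.minute).map { case (minute, ts) =>
      (minute, ts.foldLeft(IoTotals(0L, 0L, 0L))((acc, t) => acc.add(t)))
    }
}
```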

Task Duration Time: application-level 50th and 90th percentiles of task duration per minute.

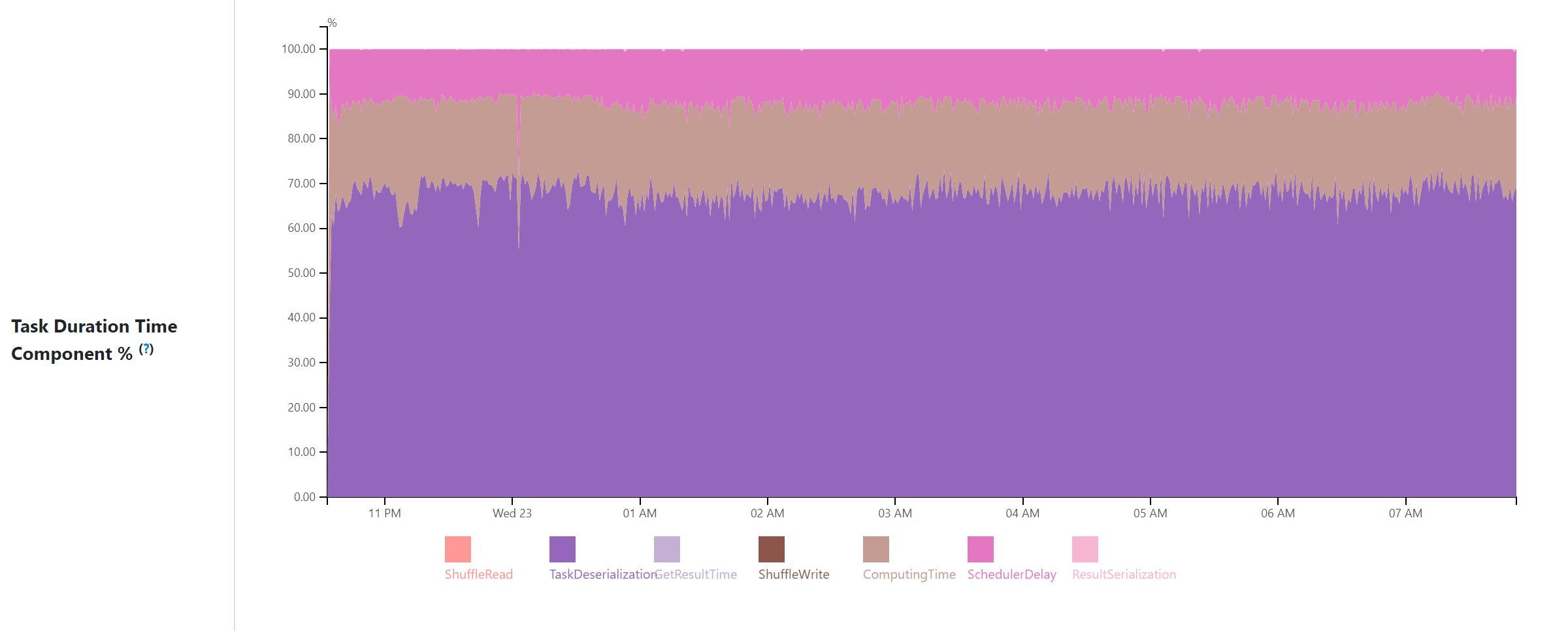
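The description does not say which percentile definition the page uses. The sketch below assumes the nearest-rank method (the smallest sample such that at least p% of samples are less than or equal to it); `DurationPercentiles` and its methods are hypothetical names.

```scala
object DurationPercentiles {
  // Nearest-rank percentile over an already sorted, non-empty sample.
  // p must be in (0, 100]. Computing p * n / 100.0 (rather than p / 100.0 * n)
  // keeps common cases such as 90 * 10 / 100.0 exact in floating point.
  def percentile(sorted: IndexedSeq[Long], p: Double): Long = {
    require(sorted.nonEmpty, "no samples")
    require(p > 0 && p <= 100, "p must be in (0, 100]")
    val rank = math.ceil(p * sorted.size / 100.0).toInt // 1-based rank
    sorted(rank - 1)
  }

  // durationsByMinute: minute bucket (epoch ms) -> task durations (ms).
  // Returns (p50, p90) for every non-empty bucket.
  def p50p90(durationsByMinute: Map[Long, Seq[Long]]): Map[Long, (Long, Long)] =
    durationsByMinute.collect { case (minute, ds) if ds.nonEmpty =>
      val sorted = ds.sorted.toIndexedSeq
      (minute, (percentile(sorted, 50), percentile(sorted, 90)))
    }
}
```

Sorting each bucket is simple but costs O(n log n) per minute; an implementation that must bound memory during replay might prefer an approximate quantile sketch instead.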

Task Duration Time Component %: each component's share of task duration per minute, for scheduler delay, computing time, shuffle read time, task deserialization time, result serialization time, shuffle write time, and getting result time.

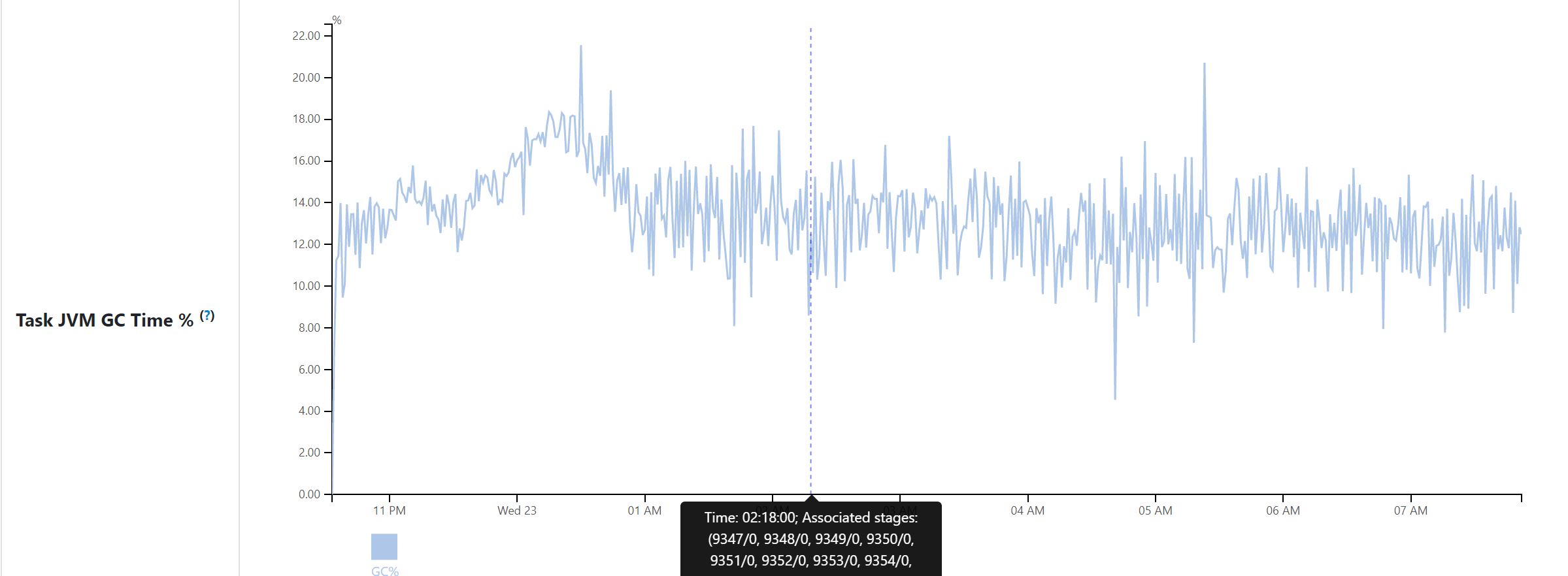

Task JVM GC Time %: task JVM GC time as a percentage of task duration per minute.
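Both percentage views divide a time component by task duration within a minute bucket. The description does not say whether this is a ratio of sums or an average of per-task ratios; the sketch assumes a ratio of sums, so longer tasks weigh more. Component names such as `"jvmGc"` are illustrative strings, not Spark's metric field names, and `TaskTiming` and `DurationShares` are hypothetical.

```scala
// Hypothetical per-task timing breakdown (ms), tagged with its minute bucket.
// componentsMs maps an illustrative component name to its time in ms.
final case class TaskTiming(minute: Long, durationMs: Long, componentsMs: Map[String, Long])

object DurationShares {
  // For each minute bucket, each component's share of total task duration:
  // sum(component) / sum(duration) * 100. Buckets with zero total duration
  // report 0.0 for every component instead of dividing by zero.
  def perMinute(tasks: Seq[TaskTiming]): Map[Long, Map[String, Double]] =
    tasks.groupBy(_.minute).map { case (minute, ts) =>
      val totalMs = ts.map(_.durationMs).sum
      val componentTotals: Map[String, Long] =
        ts.flatMap(_.componentsMs).groupBy(_._1).map {
          case (name, entries) => (name, entries.map(_._2).sum)
        }
      val shares =
        if (totalMs == 0L) componentTotals.map { case (name, _) => (name, 0.0) }
        else componentTotals.map { case (name, ms) => (name, ms * 100.0 / totalMs) }
      (minute, shares)
    }
}
```

The GC view is the same computation restricted to a single GC component.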

The application statistics page is only available in the Spark history server. The aggregated data is generated while parsing the event log file and is stored in the KVStore. Metrics data is aggregated into one data instance per minute, based on task finish time. For example, if task a's finish time falls in (t1 - 1 minute, t1], a's data is added to data instance t1. This follows the same approach as executor metrics.
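The bucketing rule above maps a finish time in (t1 - 1 minute, t1] to the minute boundary t1, i.e. it rounds up to the next whole minute. A minimal sketch, assuming epoch-millisecond timestamps and buckets aligned to the epoch; `MinuteBuckets`, `bucketEnd`, and `groupByMinute` are hypothetical helpers, not code from this PR.

```scala
// Hypothetical helpers (not the PR's code) for one-minute bucketing.
object MinuteBuckets {
  val BucketMs: Long = 60L * 1000L

  // Returns the end of the bucket containing finishTimeMs, so any time in
  // (t1 - 1 minute, t1] maps to t1. floorDiv keeps this correct for negatives.
  def bucketEnd(finishTimeMs: Long): Long =
    java.lang.Math.floorDiv(finishTimeMs - 1, BucketMs) * BucketMs + BucketMs

  // Groups items into one collection per minute bucket, keyed by bucket end.
  def groupByMinute[T](items: Seq[T])(finishTimeMs: T => Long): Map[Long, Seq[T]] =
    items.groupBy(item => bucketEnd(finishTimeMs(item)))
}
```

For example, a task finishing at exactly 60000 ms lands in bucket 60000, while one finishing at 60001 ms lands in bucket 120000.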

In my tests, the KVStore size and replay time did not increase much. Here is my local test result. The impact on replay time may differ slightly between applications, but it should not be too large.

Does this PR introduce any user-facing change?

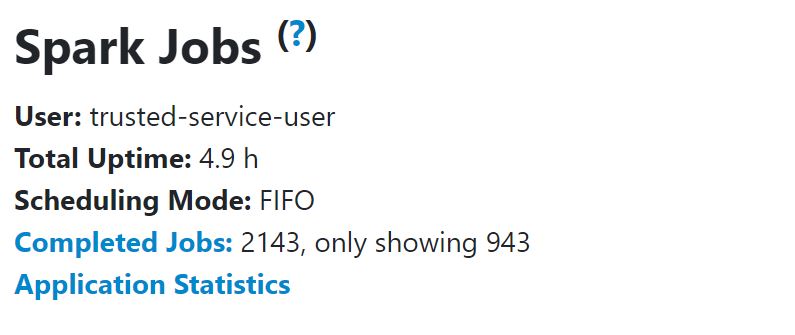

User-facing change compared to master: an application statistics page is added under the Jobs tab, with a link to the new page on the Jobs page.

Entry point:

Application statistics page:

How was this patch tested?