New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

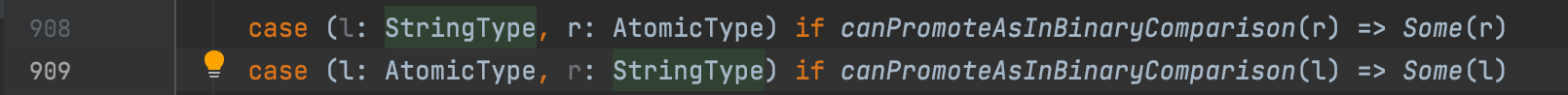

[SPARK-30471][SQL]Fix issue when comparing String and IntegerType #34862

Conversation

|

Can one of the admins verify this patch? |

|

@Kwafoor Please enable |

| buildCast[UTF8String](_, s => if (s.toInt(result)) result.value else null) | ||

| buildCast[UTF8String](_, s => { | ||

| if (s.toInt(result)) { | ||

| result.value} |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Brace is in the wrong place.

Do we have any reference for what the behavior should be in this case? I'm not sure if null or an exception is correct

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This case is some users use HiveSQL in SparkSQL, when cast the value of string exceed Int.MaxValue to integer,SparkSQL return null.It will make big trouble, because user didn't get any exception, but the result is wrong.I think SparkSQL should thrown exception at least.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm still not clear what you are saying. What does Hive do, for example?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

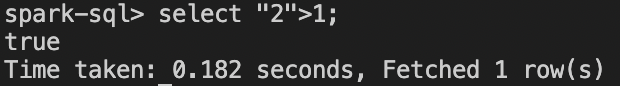

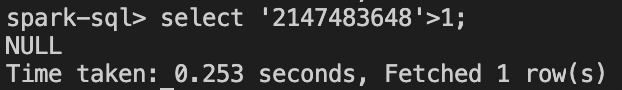

When user comparing String and IntegerType in sparkSQL with spark.sql.ansi.enabled=false, and the default value of spark.sql.ansi.enabled is false, user didn't get any remind but get a wrong result.

User runs a big and complex sql, it is very hard to find where the result is wrong,So they is hard to fix the wrong sql.

Actually I think may be there is some problem without any check to cast String to int when user comparing String and AtomicType.

And with spark.sql.ansi.enabled=true, SparkSQL disallowed compare String and DecimalType, user from hive to SparkSQL unwilling to change their code.

So I think It should remind User where your sql is not support in SparkSQL even without ansi mode, Because this case is exception, and user should to be reminded.

spark.sql.ansi.enabled about this case is right, but user always didn't open ansi, because they want use hiveSQL in sparkSQL without SQL change. |

the problem here is that it will break existing users app when they migrate to higher versions of spark. e.g.) it worked before but now it fails. So it's better to cover it with the ANSI configuration which users can explicitly enable. cc @cloud-fan, @gengliangwang and @MaxGekk FYI |

|

@Kwafoor, Before we go further, I think it should be best to double check how ANSI defines the behaviour on the overflow of strings <> other types. |

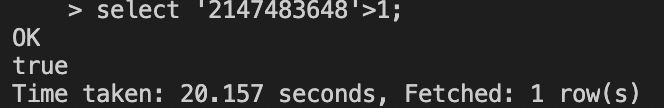

| @@ -652,7 +652,13 @@ abstract class CastBase extends UnaryExpression with TimeZoneAwareExpression wit | |||

| buildCast[UTF8String](_, UTF8StringUtils.toIntExact) | |||

| case StringType => | |||

| val result = new IntWrapper() | |||

| buildCast[UTF8String](_, s => if (s.toInt(result)) result.value else null) | |||

| buildCast[UTF8String](_, s => { | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How about other types like short, etc?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I find this case in user change their HiveSQL to SparkSQL, user always filter column with comparing String and IntegerType.With ANSI this case is right, SparkSQL will thrown Exception, but user unwilling to change their code, so ANSI is often false.And in this case, it is actually return wrong result, I think SparkSQL should remind user where your sql is wrong.If user runs a big and complex query, without thrown exception it is hard to find where the sql is wrong.

|

@Kwafoor the default behavior exists for many years. It is hard to change it now. |

With spark.sql.ansi.enabled enable it is right, but ANSI is too strict with cast, user often didn't enable. |

I agree with you, but I think SparkSQL should remind user your sql is wrong rather return null. |

|

If the value is expected to be large, is it not better to explicitly cast to a larger integer type? |

I am actually trying to make the ANSI mode more lenient. Can you provide some scenarios that you think are too strict? |

The problem has been there for many years, and many operations/functions are affected (math operations, cast, etc.). I don't think it's justified to change string and integer comparison only (and introduce inconsistency to the system). ANSI mode is the way to go. If ANSI mode is too strict, we can relax it a bit to make it fit the real-world use cases better. |

Explicitly cast string to a larger integer type is right, but user may implicit cast and he didn't find any exception( it is possible for a big query, and I have encountered). |

I agree with ansi, but in current place user didn't get any remind is a problem. |

We can turn on ANSI by default someday, which fixes all the problems. I don't agree to randomly pick string integer comparison and make it ANSI. How about string bigint/float/double/decimal comparisons? How about string +-*/ numbers? How about cast string to int/bigint/float/double/decimal/date/timestamp? |

I have test ANSI some simple case, and I will think it later.If I have some scenarios, I would like to share with you. |

ANSI is a solution to fix all this problems.But I still think SparkSQL should remind user where you wrong.User from other platform accept ANSI need time to change their sql but they don't want change.So a remind to tell where your sql wrong rather null result is need. |

This doesn't answer the question of why you only fix string integer comparison here.

This works for me. We should discourage relying on implicit cast. |

I think string integer comparison is common and easier to encounter,I haven't meet the other case.I have just tried, and also encounter problem(long, short, etc). May be I make a confusion title.I found the issue in comparing String and IntegerType, and the reason is string2int cast overflow. |

What changes were proposed in this pull request?

For comparing StringType and IntegerType, the value of string exceed Int.MaxValue, then should thrown exception.

Other wise, the result is null.

For example:

SQL "select cast('2147483648' as int);",which return is null.

Why are the changes needed?

User needs to know when an exception occurs.

Does this PR introduce any user-facing change?

No.

How was this patch tested?

Added Unit test.