New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[SPARK-39176][PYSPARK] Fixed a problem with pyspark serializing pre-1970 datetime in windows #36566

Conversation

…970 datetime in windows [SPARK-39176][PYSPARK] Fixed a problem with pyspark serializing pre-1970 datetime in windows

[SPARK-39176][PYSPARK] Pre - 1970 time serialization test

[SPARK-39176][PYSPARK] Pre - 1970 time serialization test

|

@HyukjinKwon I closed the RP in the 3.0 branch(#36537) and raised a new RP in the master branch. |

[SPARK-39176][PYSPARK] Pre - 1970 time serialization test

…970 datetime in windows [SPARK-39176][PYSPARK] Fixed a problem with pyspark serializing pre-1970 datetime in windows

…970 datetime in windows [SPARK-39176][PYSPARK] Fixed a problem with pyspark serializing pre-1970 datetime in windows

python/pyspark/sql/types.py

Outdated

| seconds = ( | ||

| calendar.timegm(dt.utctimetuple()) if dt.tzinfo else time.mktime(dt.timetuple()) | ||

| ) | ||

| if platform.system().lower() == 'windows': |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should we also check if the value is negative?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Don't need, this method is still available when the negative.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How about performance? This is hot path that's executed every value, and the performance here is pretty critical.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Are there professional performance testers?

|

@AnywalkerGiser BTW, it would be easier to follow and review if you have any link about describing the OS difference in that Python library. |

|

@HyukjinKwon Do you mean the platform library, added to the code comments? |

|

I am surprised that the same Python library returns a different value depending on OS. I know some libraries are dependent on C library implementation but didn't expect such drastic difference. Code comment or documentation anything is fine. I would like to see if this is an official difference in Python. |

|

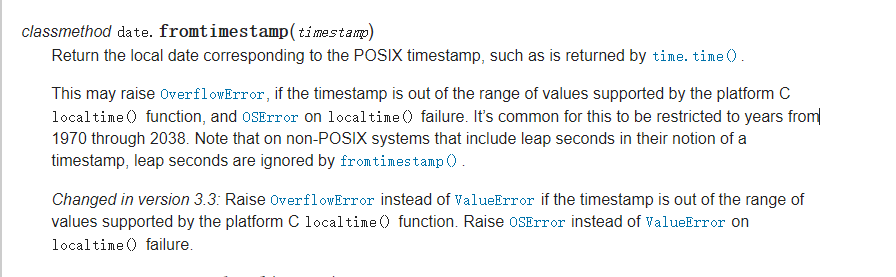

@HyukjinKwon Python3 datetime interface documentation |

|

So is C localtime function has a different behaviour in OS? that returns negative values? |

|

The localtime function does not differ in OS, the fromtimestamp function does, and datetime many functions have problems resolving dates before 1970 in windows. |

|

@HyukjinKwon Can this solution be merged into master? |

|

Can one of the admins verify this patch? |

|

I would like to understand the problem first, and see if this behaviour difference is intentional or official. This is core code path so would have to expect more reviews and time. |

…970 datetime in windows [SPARK-39176][PYSPARK] Fixed a problem with pyspark serializing pre-1970 datetime in windows

[SPARK-39176][PYSPARK] Pre - 1970 time serialization test

|

Understood, looking forward to your reply later. |

|

@AnywalkerGiser mind pointing out any documentation that states this negative value? |

|

Here are some blogs on related issues: |

|

I think the Spark project can look for a solution if Python doesn't fix this bug. |

|

Does that happen in all Windows with all Python versions? |

|

is this a bug in Python? |

|

I thought it was a bug in python, but the documentation said it would report an error if it was out of time range. I tested python 3.6, 3.7, and 3.8 on windows. |

…970 datetime in windows [SPARK-39176][PYSPARK] Fixed a problem with pyspark serializing pre-1970 datetime in windows

| seconds = ( | ||

| calendar.timegm(dt.utctimetuple()) if dt.tzinfo else time.mktime(dt.timetuple()) | ||

| ) | ||

| if platform.system().lower() == "windows": |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm just super surprised this is Windows specific?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, and I want you to check it out for yourself.

|

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

|

This problem hasn't been solved yet? |

|

@HyukjinKwon This problem still exists in the new version. Can it be merged? |

What changes were proposed in this pull request?

Fix problems with pyspark in Windows:

Why are the changes needed?

Pyspark has problems serializing pre-1970 times in Windows.

An exception occurs when executing the following code under Windows:

and

After updating the code, the above code was run successfully!

Does this PR introduce any user-facing change?

No

How was this patch tested?

New and existing test suites