New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[SPARK-39541][YARN]Diagnostics of yarn UI did not display the exception of driver when driver exit before regiserAM #36952

Conversation

|

Can one of the admins verify this patch? |

|

@tgravescs Can you review this patch,thanks. |

| if (registered) { | ||

| amClient.unregisterApplicationMaster(status, diagnostics, uiHistoryAddress) | ||

| def unregister(status: FinalApplicationStatus, | ||

| conf: YarnConfiguration, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

indentation should be 4 spaces

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

done

| val inShutdown = ShutdownHookManager.inShutdown() | ||

| if (registered || !isClusterMode) { | ||

| exitCode = code | ||

| finalStatus = status | ||

| } else { | ||

| finalStatus = FinalApplicationStatus.FAILED | ||

| exitCode = ApplicationMaster.EXIT_SC_NOT_INITED | ||

| if(msg == null) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

should have space after if

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

my failed.Fixed it.

| val inShutdown = ShutdownHookManager.inShutdown() | ||

| if (registered || !isClusterMode) { | ||

| exitCode = code | ||

| finalStatus = status | ||

| } else { | ||

| finalStatus = FinalApplicationStatus.FAILED | ||

| exitCode = ApplicationMaster.EXIT_SC_NOT_INITED | ||

| if(msg == null) { | ||

| errorMsg = "User did not initialize spark context!" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

how do you know user didn't initialize spark context vs something bad happening and it failing to initialize? this could be more confusing. maybe "Failed to initialize Spark context"

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are right.I has changed it.

| conf: YarnConfiguration, | ||

| diagnostics: String = ""): Unit = synchronized { | ||

| if (!registered) { | ||

| amClient = AMRMClient.createAMRMClient() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this is very odd to register during unregister, what if it was the register or start that failed?

if it timed out and you have a very high timeout I assume you would wait that again.

I'm definitely a bit hesitant about this change.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should a timeout control be added?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the question for me is, is this really needed vs possibly introducing other issues. while it may be convenient, user can just go look in the log to find the error. You tested a bunch of scenarios which is great, but I'm sure there are others. The main one I mention here is what if registration failed or RM is not responsive? Can you test out that scenario and see what happens?

|

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

What changes were proposed in this pull request?

If commit a job in yarn cluster mode and driver exited before registerAM,Diagnostics of yarn UI will show the actual reason instead of "EXCODE 13"

Why are the changes needed?

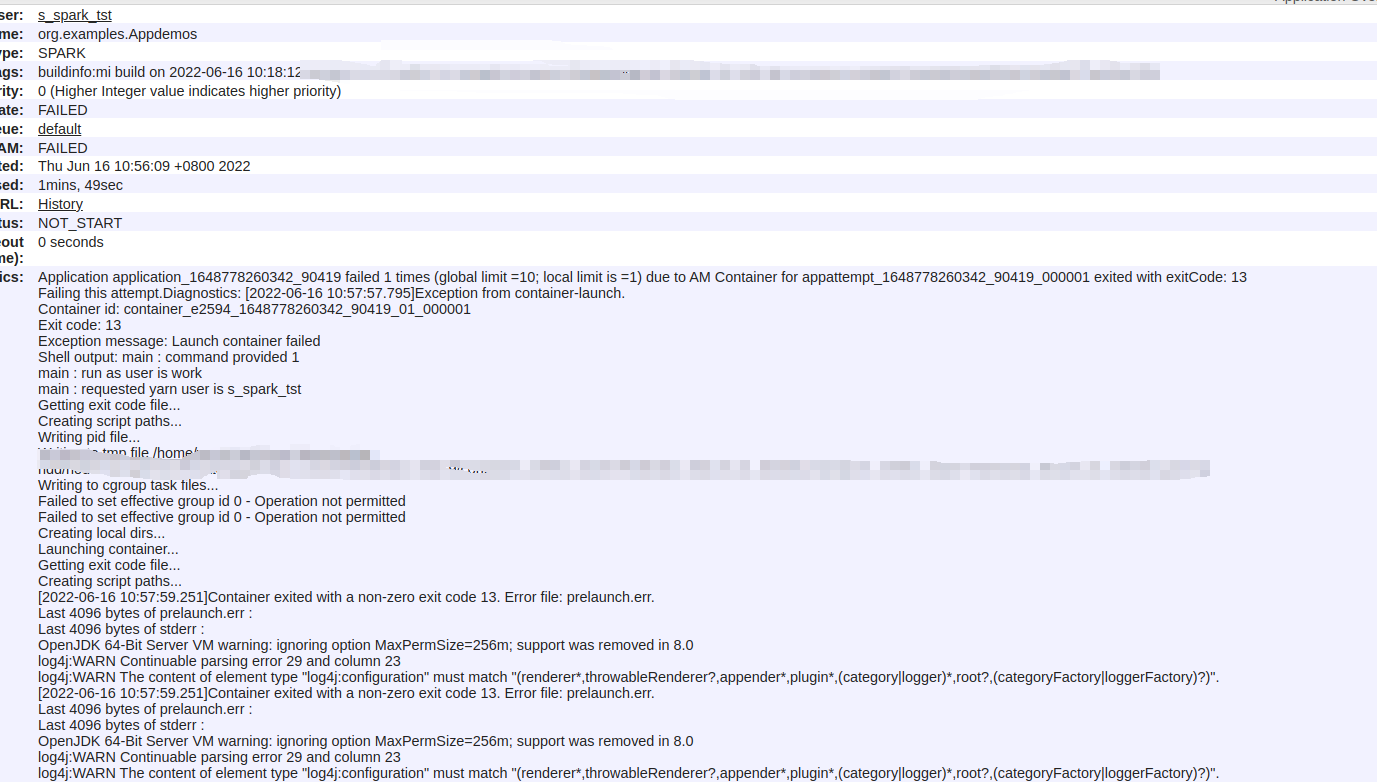

If commit a job in yarn cluster mode and driver exited before registerAM,Diagnostics of yarn UI did not show the exception that was throwed by driver .Yarn UI only show :

Application application_xxx failed 1 times (global limit =10; local limit is =1) due to AM Container for appattempt_xxx_000001 exited with exitCode: 13

We must find the real reason by spark log :

The issue is caused by when dirver did not registerAM before throwing exception,it would not call unregisterAM,then yarn ui could not show the real reason.For example,user did not initialize sc or the class of user could not be found.

By this changes ,the diagnostics will show the real reason when this happen,like:

Does this PR introduce any user-facing change?

No

How was this patch tested?

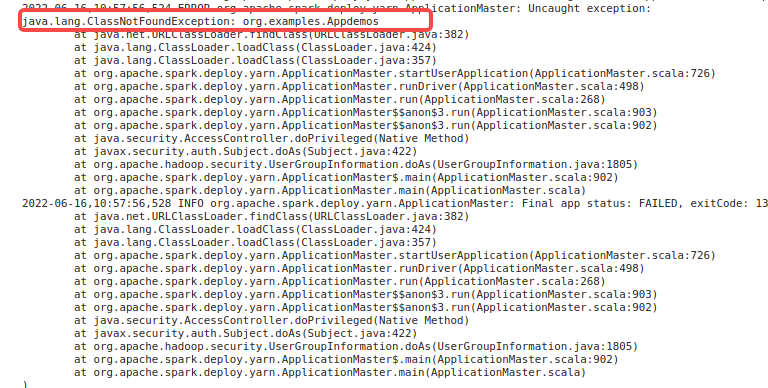

User jars packaging problem, throwing ClassNotFoundException

the diagnosis show the exception throwed by driver.

Exception during operation

the diagnosis show the exception throwed by driver.

44c94d2455f7.png)

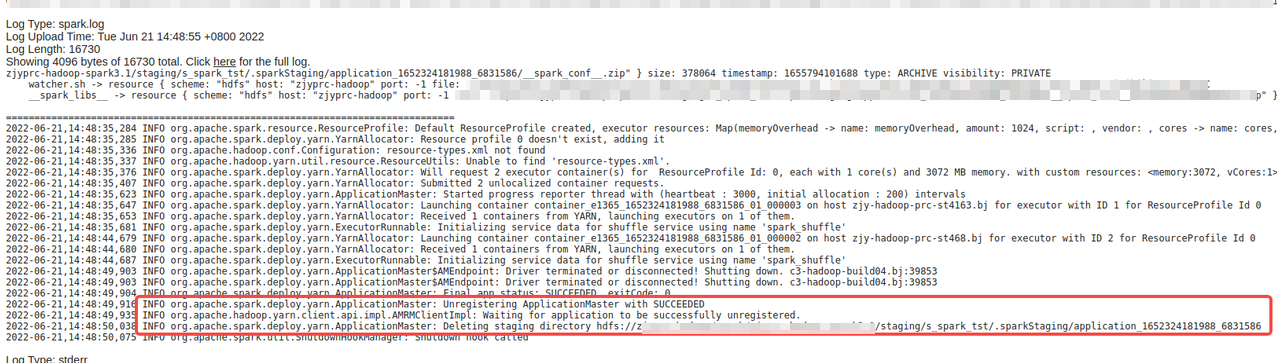

3. Properly run jobs

the diagnosis show the exception throwed by driver.

Test for this problem:[SPARK-21541][YARN]: Spark Logs show incorrect job status for a job that does not create SparkContext #18741

only th last time will unregisterAM and delete staging the spark staging directory.

spark log of the last time:

others:

And for normal jobs,they make no difference.

7.Interrupt exit in client mode

Test for this problem:#18788

yarn UI show SUCCEEDED,spark log shows that it unregisterAM and delete staging the spark staging directory.

And for normal jobs,they make no difference.