New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[SPARK-40006][PYTHON][DOCS] Make pyspark.sql.group examples self-contained #37437

Conversation

|

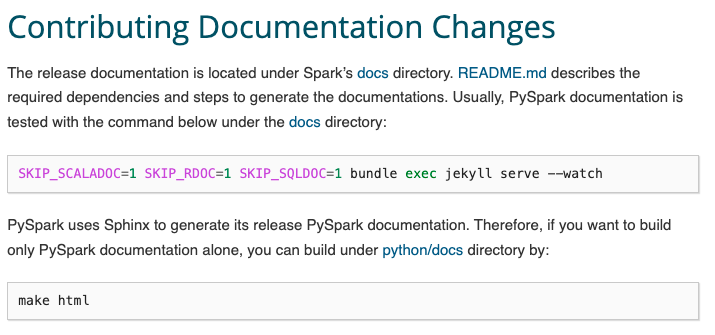

Good morning Hyukjin, I saw your email from the mailing list and I can try to help. Does self-contained simply mean that we need to initialise the dataframe in each shell example? Just to make sure for building the docs, I need to run these two commands right? |

|

Hey @Transurgeon, thanks for taking a look.

Yes. Plus, we should add some description for each example. Basiclaly I would like to follow what the pandas do.

Something meaningful. The operation has to do something. For example,

Yes. As long as the format is consistent, you might not need to build and validate it by yourself though. |

|

cc @zero323 @ueshin @viirya @zhengruifeng @xinrong-meng WDYT? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks good to me.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It looks good to have them serve as examples and tests at the same time.

|

Thanks all. Merged to master. |

|

Late LGTM + 1 |

| >>> df5.groupBy("sales.year").pivot("sales.course").sum("sales.earnings").collect() | ||

| [Row(year=2012, Java=20000, dotNET=15000), Row(year=2013, Java=30000, dotNET=48000)] | ||

| >>> from pyspark.sql import Row | ||

| >>> spark = SparkSession.builder.master("local[4]").appName("sql.group tests").getOrCreate() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry to post review, just curious why not use the spark directly in here? I think this example is a little bit different with others.

For all PRs in this series, I think sc (Spark context) and spark (SparkSession) can define in the bottom, and use it directly in every doctest (just like pyspark shell, sc and spark already available), right?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Oh yeah, we should remove this. It was my mistake.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

…d duplicated Spark session initialization ### What changes were proposed in this pull request? This PR is a followup of #37437 which missed to remove unused `sc` and duplicated Spark session initialization. ### Why are the changes needed? To make the consistent example, and remove unused variables. ### Does this PR introduce _any_ user-facing change? No. It's a documentation change. However, the previous PR is not released yet. ### How was this patch tested? Ci in this PR should test it out. Closes #37457 from HyukjinKwon/SPARK-40006-followup. Authored-by: Hyukjin Kwon <gurwls223@apache.org> Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

What changes were proposed in this pull request?

This PR proposes to improve the examples in

pyspark.sql.groupby making each example self-contained with a brief explanation and a bit more realistic example.Why are the changes needed?

To make the documentation more readable and able to copy and paste directly in PySpark shell.

Does this PR introduce any user-facing change?

Yes, it changes the documentation

How was this patch tested?

Manually ran each doctest.