New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[SPARK-33605][BUILD] Add gcs-connector to hadoop-cloud module

#37745

Conversation

gcs-connector to hadoop-cloud module

|

cc @sunchao , @steveloughran , @srowen |

|

Seems OK, but what does it buy us? GCS storage support? Only downside is increasing the sea of JARs in the project, I guess. |

|

|

The failure is irrelevant to this PR. It seems that the base image publishing is broken again. cc @Yikun |

|

@dongjoon-hyun Thanks to ping me, this due to github action ghcr By default, write permission already included to your GITHUB_TOKEN, but if you set it manually (see also [1], [2]), it will failed, current CI will first build infra and push to your ghcr so write permission is required (see also [3]). [1] https://github.blog/changelog/2021-04-20-github-actions-control-permissions-for-github_token/ |

|

@dongjoon-hyun I just saw your recreate the spark repo, so might default permisson has some changes on Github Action? You could first set permission for your dongjoon-hyun/spark repo: https://github.blog/changelog/2021-04-20-github-actions-control-permissions-for-github_token/#setting-the-default-permissions-for-the-organization-or-repository and we might need a separate pr to set spark permission for new created repo: https://github.blog/changelog/2021-04-20-github-actions-control-permissions-for-github_token/#setting-permissions-in-the-workflow |

|

It's weird. IIRC, I didn't change anything from my previous repo either when your PR applied this change. |

|

Could you check this link about the permisson and the belong repo? https://github.com/users/dongjoon-hyun/packages/container/package/apache-spark-ci-image/settings or ghcr.io/dongjoon-hyun/apache-spark-ci-image The potential issue might be the old repo is removed, but the related images is not be deleted, then when create the new repo, the write permisson of this old image are not configured to new repo. If it's still not work, you might need to delete the |

|

https://github.com/users/dongjoon-hyun/packages/container/apache-spark-ci-image/settings You can also remove it in page ^, pls let me know if still not working... |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The change makes sense to me, especially since we are already bundling jars from other cloud vendors.

| <classifier>shaded</classifier> | ||

| <exclusions> | ||

| <exclusion> | ||

| <groupId>*</groupId> |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

curious why do we exclude everything from the shaded jar

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for review, @sunchao . According to the shaded pattern,

We have all we needed for Hadoop3 and Hadoop2.

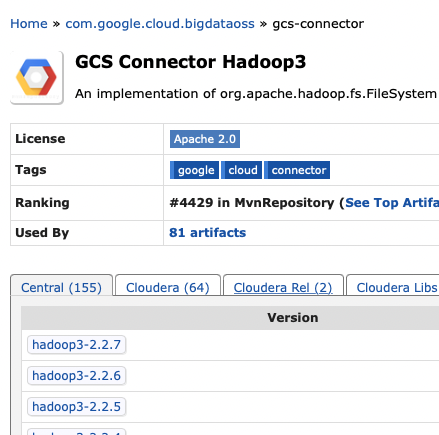

- For Hadoop3, https://mvnrepository.com/artifact/com.google.cloud.bigdataoss/gcs-connector/hadoop3-2.2.7

- For Hadoop2, https://mvnrepository.com/artifact/com.google.cloud.bigdataoss/gcs-connector/hadoop2-2.2.7

I intentionally exclude everything. We will add Spark's version if there is additional missing transitive dependency (if exists).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

issue is that there's a history of the shaded connector still declaring a dependence on things which are now shaded, so breaking convergence.

|

Thank you so much, @Yikun . Now, it seems to work on my three PRs. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

looks fine.

note that the gcs connector (at leasts the builds off their master) are java 11 only; not sure where that stands w.r.t older releases

actually, it might be nice to have the option of excluding the gcs connector. why so? well, i will end up commenting this code out on our internal builds as it will come from hadoop instead

| <classifier>shaded</classifier> | ||

| <exclusions> | ||

| <exclusion> | ||

| <groupId>*</groupId> |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

issue is that there's a history of the shaded connector still declaring a dependence on things which are now shaded, so breaking convergence.

|

Thank you for review, @steveloughran .

I didn't realize this because I've been using Java 11+. If then, I had better close this PR and the JIRA officially. Thank you, @srowen , @sunchao and @steveloughran ! |

|

Ur, wait. @steveloughran . It's Java 8, isn't it? |

|

This PR passed CI here. |

|

3.0.0 is java 11 that is hadoop-3.3.1, which simplifies my life a lot too...the streams even support IOStatistics. I've been testing my manifest committer through it and have to bump the test shell up to java11 for all to work |

|

anyway, the version you are looking at is probably safe; it switched in feb 2022 (pr 726) |

|

Thank you for your review, comments, and help, @srowen , @Yikun , @sunchao , @steveloughran . It seems that there is no other concerns, I'll merge this. |

What changes were proposed in this pull request?

This PR aims to add

gcs-connectorshaded jar tohadoop-cloudmodule.Why are the changes needed?

To support Google Cloud Storage more easily.

Does this PR introduce any user-facing change?

Only one shaded jar file is added when the distribution is built with

-Phadoop-cloud.How was this patch tested?

BUILD

RUN