
[SPARK-42430][SQL][DOC] Add documentation for TimestampNTZ type #40005

Closed


gengliangwang commented on Feb 14, 2023

`@@ -185,6 +191,7 @@ from pyspark.sql.types import *`

|**BinaryType**|bytearray|BinaryType()|

|**BooleanType**|bool|BooleanType()|

|**TimestampType**|datetime.datetime|TimestampType()|

|**TimestampNTZType**|datetime.datetime|TimestampNTZType()|


cc @HyukjinKwon on this one.
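The Python mapping above gives both `TimestampType` and `TimestampNTZType` the same Python value type, `datetime.datetime`, so the difference is in semantics rather than in the Python class. The stdlib-only sketch below is an analogy, not PySpark code. It assumes an NTZ value behaves like a naive `datetime` (a wall-clock reading that is shown unchanged whatever the session time zone), while an LTZ (`TimestampType`) value behaves like an absolute instant that is rendered in the session time zone. The helpers `render_ntz` and `render_ltz` and the fixed UTC-8 zone are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Analogy only (not PySpark): a TIMESTAMP_NTZ value is like a naive datetime,
# i.e. a wall-clock reading with no time zone attached.
ntz_value = datetime(2023, 2, 14, 9, 30)

# A TIMESTAMP_LTZ (TimestampType) value is like an absolute instant;
# here it is modelled as an aware datetime in UTC.
ltz_value = datetime(2023, 2, 14, 9, 30, tzinfo=timezone.utc)


def render_ntz(value: datetime, session_tz: timezone) -> str:
    """NTZ values are displayed as stored; session_tz is deliberately ignored."""
    return value.isoformat(sep=" ")


def render_ltz(value: datetime, session_tz: timezone) -> str:
    """LTZ values are converted to the session time zone before display."""
    return value.astimezone(session_tz).replace(tzinfo=None).isoformat(sep=" ")


pst = timezone(timedelta(hours=-8))  # hypothetical session time zone (UTC-8)
print(render_ntz(ntz_value, pst))  # 2023-02-14 09:30:00
print(render_ltz(ltz_value, pst))  # 2023-02-14 01:30:00
```

Because the session time zone is an explicit argument in this model, the NTZ value prints identically for any zone, while the LTZ value shifts by the zone offset.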

HyukjinKwon approved these changes on Feb 14, 2023

Merging to master/3.4

gengliangwang added a commit that referenced this pull request on Feb 14, 2023

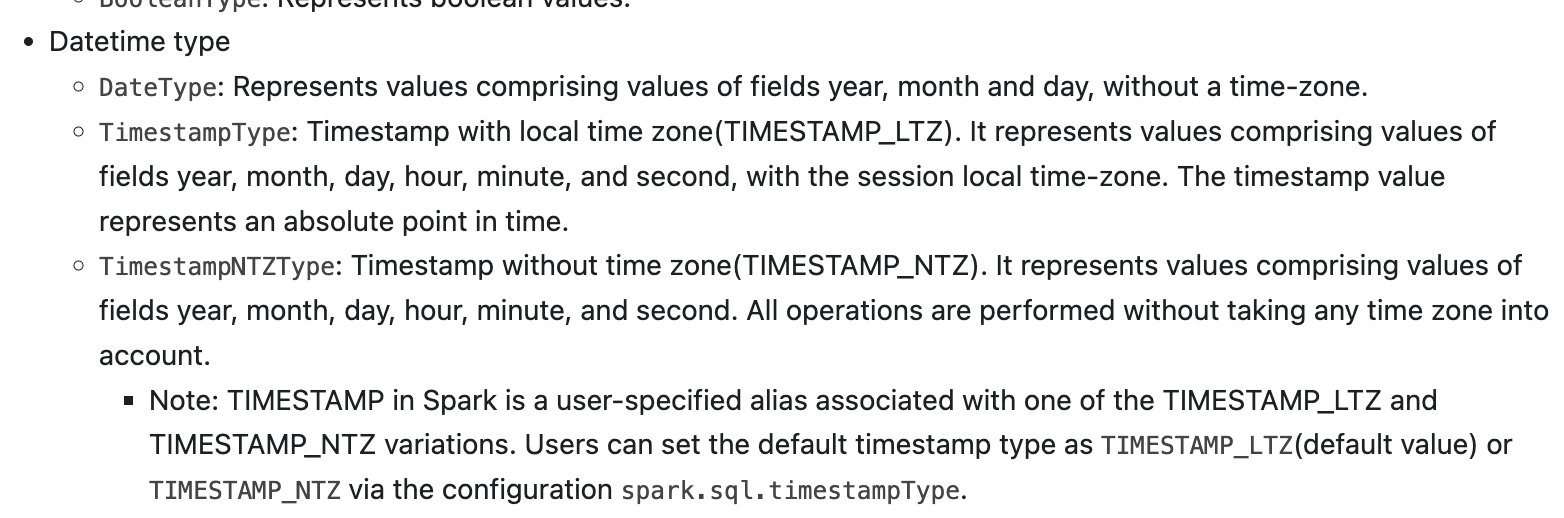

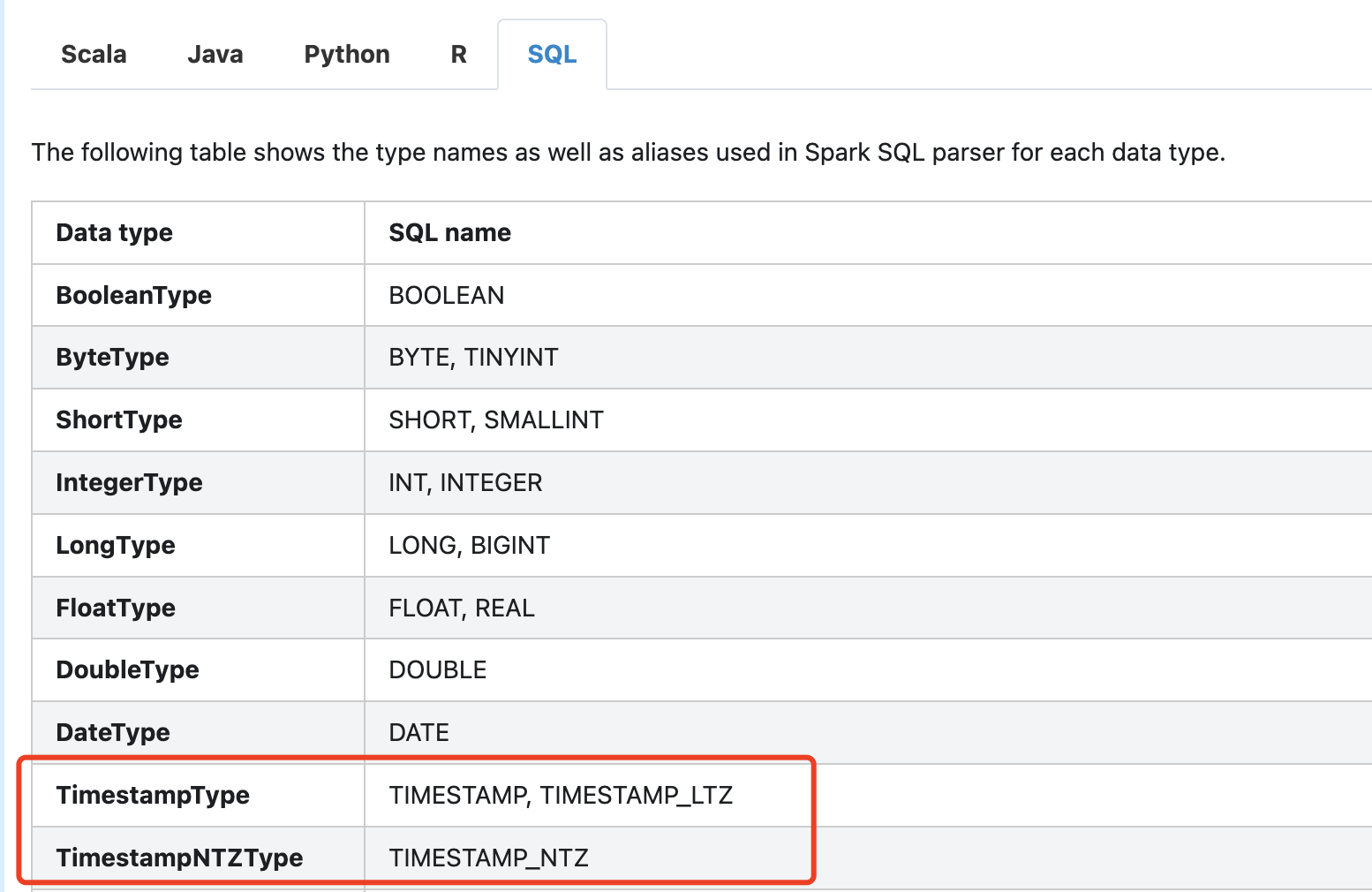

### What changes were proposed in this pull request?

Add documentation for TimestampNTZ type

### Why are the changes needed?

Add documentation for the new data type TimestampNTZ.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Build docs and preview:

![image](https://user-images.githubusercontent.com/1097932/218656254-096df429-851d-4046-8a6f-f368819c405b.png)

![image](https://user-images.githubusercontent.com/1097932/218656277-e8cfe747-2c45-476d-b70f-83c654e0b0f2.png)

Closes #40005 from gengliangwang/ntzDoc.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Gengliang Wang <gengliang@apache.org>
(cherry picked from commit 46a2341)
Signed-off-by: Gengliang Wang <gengliang@apache.org>

cloud-fan reviewed on Feb 15, 2023

`@@ -154,6 +159,7 @@ please use factory methods provided in`

|**BinaryType**|byte[]|DataTypes.BinaryType|

|**BooleanType**|boolean or Boolean|DataTypes.BooleanType|

|**TimestampType**|java.sql.Timestamp|DataTypes.TimestampType|

|**TimestampNTZType**|java.time.LocalDateTime| TimestampNTZType|


nit: it seems all the others are prefixed with `DataTypes.`

HyukjinKwon pushed a commit that referenced this pull request on Feb 15, 2023

### What changes were proposed in this pull request?

With the configuration `spark.sql.timestampType`, TIMESTAMP in Spark is a user-specified alias associated with one of the TIMESTAMP_LTZ and TIMESTAMP_NTZ variations. This is quite complicated for Spark users.

There is another option, `spark.sql.sources.timestampNTZTypeInference.enabled`, for schema inference. I wanted to introduce it in #40005, but having two flags seems too much. After some thought, I decided to merge `spark.sql.sources.timestampNTZTypeInference.enabled` into `spark.sql.timestampType` and let `spark.sql.timestampType` control the schema inference behavior.

We can have follow-ups to add a data source option "inferTimestampNTZType" for CSV/JSON/partition columns, as the JDBC data source did.

### Why are the changes needed?

Make the new feature simpler.

### Does this PR introduce _any_ user-facing change?

No, the feature is not released yet.

### How was this patch tested?

Existing unit tests. I also tried

```
git grep spark.sql.sources.timestampNTZTypeInference.enabled
git grep INFER_TIMESTAMP_NTZ_IN_DATA_SOURCES
```

to make sure the flag INFER_TIMESTAMP_NTZ_IN_DATA_SOURCES is removed.

Closes #40022 from gengliangwang/unifyInference.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
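The single-flag rule described in this commit message can be sketched as a tiny Python model. Everything in it is hypothetical: the dict stands in for the session configuration, and the function names are illustrative, not Spark APIs. Only the config names and the idea that `spark.sql.timestampType` (TIMESTAMP_LTZ or TIMESTAMP_NTZ) now drives both the TIMESTAMP keyword and schema inference come from the commit; the TIMESTAMP_LTZ default is an assumption, and the real CSV/JSON readers apply parsing rules this model ignores.

```python
# Hypothetical model, not Spark source code: one setting decides both what the
# bare TIMESTAMP keyword means and which type schema inference picks.
DEFAULT_TIMESTAMP_TYPE = "TIMESTAMP_LTZ"  # assumed default
ALLOWED_TIMESTAMP_TYPES = {"TIMESTAMP_LTZ", "TIMESTAMP_NTZ"}


def effective_timestamp_type(conf: dict) -> str:
    """Return the timestamp variant selected by spark.sql.timestampType."""
    value = conf.get("spark.sql.timestampType", DEFAULT_TIMESTAMP_TYPE)
    if value not in ALLOWED_TIMESTAMP_TYPES:
        raise ValueError(f"unsupported spark.sql.timestampType: {value}")
    return value


def type_of_timestamp_keyword(conf: dict) -> str:
    """What the bare TIMESTAMP keyword in SQL resolves to."""
    return effective_timestamp_type(conf)


def inferred_type_for_timestamp_column(conf: dict) -> str:
    """What schema inference picks for a timestamp-like column.

    Previously a second flag (spark.sql.sources.timestampNTZTypeInference.enabled)
    decided this; in the model it follows the same setting as the keyword.
    """
    return effective_timestamp_type(conf)


session_conf = {"spark.sql.timestampType": "TIMESTAMP_NTZ"}
print(type_of_timestamp_keyword(session_conf))           # TIMESTAMP_NTZ
print(inferred_type_for_timestamp_column(session_conf))  # TIMESTAMP_NTZ
print(type_of_timestamp_keyword({}))                     # TIMESTAMP_LTZ
```

Routing both questions through one helper is the point of the sketch: with a single source of truth, the keyword and the inferred column type cannot disagree.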

HyukjinKwon pushed a commit that referenced this pull request on Feb 15, 2023

With the configuration `spark.sql.timestampType`, TIMESTAMP in Spark is a user-specified alias associated with one of the TIMESTAMP_LTZ and TIMESTAMP_NTZ variations. This is quite complicated for Spark users. There is another option, `spark.sql.sources.timestampNTZTypeInference.enabled`, for schema inference. I wanted to introduce it in #40005, but having two flags seems too much. After some thought, I decided to merge `spark.sql.sources.timestampNTZTypeInference.enabled` into `spark.sql.timestampType` and let `spark.sql.timestampType` control the schema inference behavior. We can have follow-ups to add a data source option "inferTimestampNTZType" for CSV/JSON/partition columns, as the JDBC data source did.

Make the new feature simpler.

No, the feature is not released yet.

Existing unit tests. I also tried

```
git grep spark.sql.sources.timestampNTZTypeInference.enabled
git grep INFER_TIMESTAMP_NTZ_IN_DATA_SOURCES
```

to make sure the flag INFER_TIMESTAMP_NTZ_IN_DATA_SOURCES is removed.

Closes #40022 from gengliangwang/unifyInference.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
(cherry picked from commit 46226c2)
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

MaxGekk pushed a commit that referenced this pull request on Feb 18, 2023

…ANSI interval types

### What changes were proposed in this pull request?

As #40005 (review) pointed out, the Java doc for data types recommends using the factory methods provided in org.apache.spark.sql.types.DataTypes. Since the ANSI interval types were also missing the `DataTypes` prefix, this PR also revises their doc.

### Why are the changes needed?

Unify the data type doc.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Local preview

![image](https://user-images.githubusercontent.com/1097932/219821685-321c2fd1-6248-4930-9c61-eec68f0dcb50.png)

Closes #40074 from gengliangwang/reviseNTZDoc.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Max Gekk <max.gekk@gmail.com>

MaxGekk pushed a commit that referenced this pull request on Feb 18, 2023

…ANSI interval types

### What changes were proposed in this pull request?

As #40005 (review) pointed out, the Java doc for data types recommends using the factory methods provided in org.apache.spark.sql.types.DataTypes. Since the ANSI interval types were also missing the `DataTypes` prefix, this PR also revises their doc.

### Why are the changes needed?

Unify the data type doc.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Local preview

![image](https://user-images.githubusercontent.com/1097932/219821685-321c2fd1-6248-4930-9c61-eec68f0dcb50.png)

Closes #40074 from gengliangwang/reviseNTZDoc.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Max Gekk <max.gekk@gmail.com>
(cherry picked from commit 8cfd5bf)
Signed-off-by: Max Gekk <max.gekk@gmail.com>

a0x8o added a commit to a0x8o/spark that referenced this pull request on Feb 18, 2023

…ANSI interval types

### What changes were proposed in this pull request?

As apache/spark#40005 (review) pointed out, the Java doc for data types recommends using the factory methods provided in org.apache.spark.sql.types.DataTypes. Since the ANSI interval types were also missing the `DataTypes` prefix, this PR also revises their doc.

### Why are the changes needed?

Unify the data type doc.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Local preview

![image](https://user-images.githubusercontent.com/1097932/219821685-321c2fd1-6248-4930-9c61-eec68f0dcb50.png)

Closes #40074 from gengliangwang/reviseNTZDoc.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Max Gekk <max.gekk@gmail.com>

snmvaughan pushed a commit to snmvaughan/spark that referenced this pull request on Jun 20, 2023

### What changes were proposed in this pull request?

Add documentation for TimestampNTZ type

### Why are the changes needed?

Add documentation for the new data type TimestampNTZ.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Build docs and preview:

![image](https://user-images.githubusercontent.com/1097932/218656254-096df429-851d-4046-8a6f-f368819c405b.png)

![image](https://user-images.githubusercontent.com/1097932/218656277-e8cfe747-2c45-476d-b70f-83c654e0b0f2.png)

Closes apache#40005 from gengliangwang/ntzDoc.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Gengliang Wang <gengliang@apache.org>
(cherry picked from commit 46a2341)
Signed-off-by: Gengliang Wang <gengliang@apache.org>

snmvaughan pushed a commit to snmvaughan/spark that referenced this pull request on Jun 20, 2023

With the configuration `spark.sql.timestampType`, TIMESTAMP in Spark is a user-specified alias associated with one of the TIMESTAMP_LTZ and TIMESTAMP_NTZ variations. This is quite complicated for Spark users. There is another option, `spark.sql.sources.timestampNTZTypeInference.enabled`, for schema inference. I wanted to introduce it in apache#40005, but having two flags seems too much. After some thought, I decided to merge `spark.sql.sources.timestampNTZTypeInference.enabled` into `spark.sql.timestampType` and let `spark.sql.timestampType` control the schema inference behavior. We can have follow-ups to add a data source option "inferTimestampNTZType" for CSV/JSON/partition columns, as the JDBC data source did.

Make the new feature simpler.

No, the feature is not released yet.

Existing unit tests. I also tried

```
git grep spark.sql.sources.timestampNTZTypeInference.enabled
git grep INFER_TIMESTAMP_NTZ_IN_DATA_SOURCES
```

to make sure the flag INFER_TIMESTAMP_NTZ_IN_DATA_SOURCES is removed.

Closes apache#40022 from gengliangwang/unifyInference.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
(cherry picked from commit 46226c2)
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

snmvaughan pushed a commit to snmvaughan/spark that referenced this pull request on Jun 20, 2023

…ANSI interval types

### What changes were proposed in this pull request?

As apache#40005 (review) pointed out, the Java doc for data types recommends using the factory methods provided in org.apache.spark.sql.types.DataTypes. Since the ANSI interval types were also missing the `DataTypes` prefix, this PR also revises their doc.

### Why are the changes needed?

Unify the data type doc.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Local preview

![image](https://user-images.githubusercontent.com/1097932/219821685-321c2fd1-6248-4930-9c61-eec68f0dcb50.png)

Closes apache#40074 from gengliangwang/reviseNTZDoc.

Authored-by: Gengliang Wang <gengliang@apache.org>
Signed-off-by: Max Gekk <max.gekk@gmail.com>
(cherry picked from commit 8cfd5bf)
Signed-off-by: Max Gekk <max.gekk@gmail.com>


What changes were proposed in this pull request?

Add documentation for TimestampNTZ type

Why are the changes needed?

Add documentation for the new data type TimestampNTZ.

Does this PR introduce any user-facing change?

No

How was this patch tested?

Build docs and preview: