Background Check

GitHub App

A GitHub App built with Probot that performs a "background check" to identify users who have been toxic in the past and shares their toxic activity in a private repository for maintainers.

How to Use

- Go to the GitHub App page.

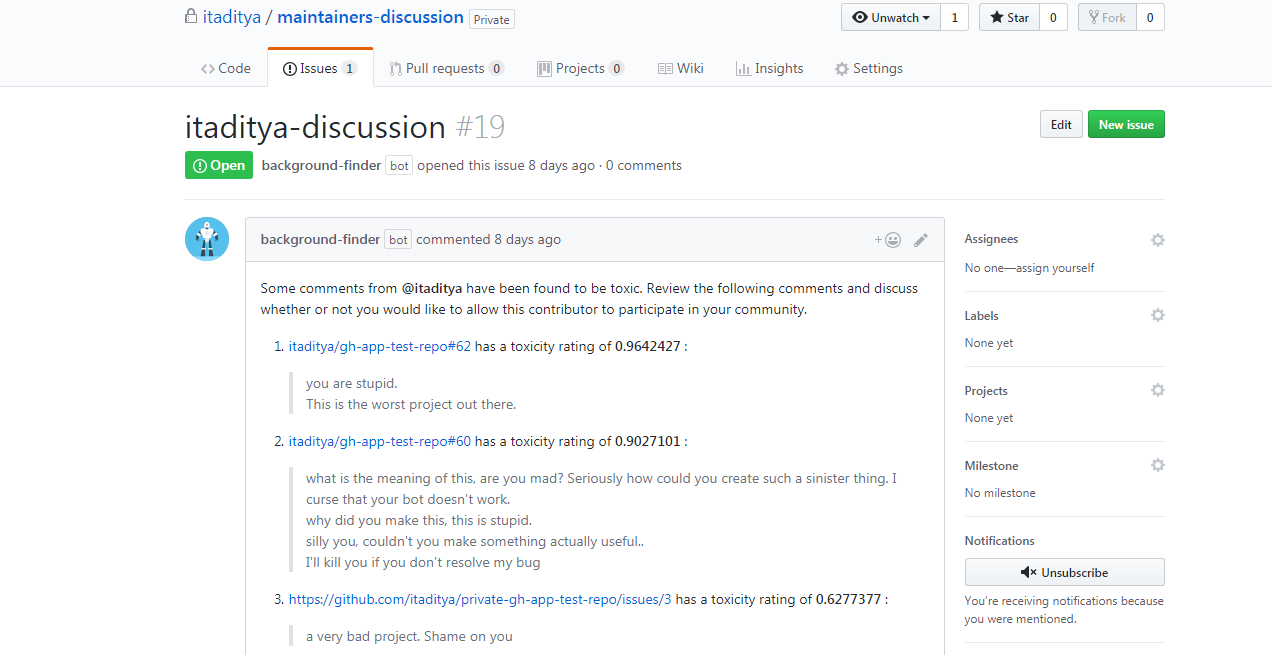

- Install the GitHub App on your repositories and on a private repository named maintainers-discussion.

FAQ

1. How does the bot check a user's background?

The bot listens for comments on repositories where it is installed. When a new user comments, the bot fetches that user's public comments and runs a sentiment analyser on them. If 5 or more comments stand out as toxic, the bot concludes that the user has a hostile background and opens an issue about the user in the private maintainers-discussion repository. The maintainers can then review the toxic comments and discuss whether they want to allow this user to participate in their community.
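The decision rule described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the app's actual code (the app itself is built with Probot, a Node.js framework). Only the "5 or more toxic comments" rule comes from this page; `Comment`, `find_toxic_comments`, `has_hostile_background`, the per-comment cutoff of 0.5, and the keyword-based `keyword_score` stand-in are all hypothetical. Fetching comments from the GitHub API and calling a real sentiment model are left out.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Rule stated in the FAQ: 5 or more toxic comments flag the user.
TOXIC_COMMENT_THRESHOLD = 5
# Assumed per-comment score at which a comment "stands out" as toxic;
# the cutoff the real app uses is not documented.
TOXICITY_SCORE_CUTOFF = 0.5


@dataclass
class Comment:
    url: str   # link back to the public comment
    body: str  # comment text


def find_toxic_comments(
    comments: Iterable[Comment],
    score: Callable[[str], float],
    cutoff: float = TOXICITY_SCORE_CUTOFF,
) -> List[Comment]:
    """Return the comments whose toxicity score is at or above the cutoff."""
    return [c for c in comments if score(c.body) >= cutoff]


def has_hostile_background(toxic: List[Comment]) -> bool:
    """Apply the FAQ's rule: 5 or more toxic comments means a hostile background."""
    return len(toxic) >= TOXIC_COMMENT_THRESHOLD


def keyword_score(text: str) -> float:
    """Hypothetical stand-in scorer for illustration only.

    A real deployment would call a sentiment or toxicity model here.
    """
    rude_words = {"idiot", "stupid", "useless"}
    words = {w.strip(".,!?").lower() for w in text.split()}
    return 1.0 if words & rude_words else 0.0


if __name__ == "__main__":
    history = [Comment(f"https://example.com/c/{i}", "This is stupid.") for i in range(5)]
    history.append(Comment("https://example.com/c/5", "Thanks for the fix!"))
    toxic = find_toxic_comments(history, keyword_score)
    print(len(toxic), has_hostile_background(toxic))  # prints: 5 True
```

Passing the scorer in as a function keeps the threshold logic independent of whichever sentiment analyser is plugged in.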

2. What happens if the sentiment analysis is incorrect?

In the case of a false positive, where the sentiment analysis flags comments as toxic when they are not, the issue in maintainers-discussion is still created. Because the bot posts the flagged comments in the issue description, the maintainers can verify the toxicity themselves and close the issue if they find the analysis incorrect.
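The sketch below shows one way the issue could quote each flagged comment with a link to its source, which is what lets maintainers check for false positives. The title and body layout, the `Comment` dataclass, and the `build_issue` function are hypothetical; the page does not document the app's actual issue format.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Comment:
    url: str   # link back to the public comment
    body: str  # comment text


def build_issue(username: str, toxic: List[Comment]) -> Tuple[str, str]:
    """Build a hypothetical (title, body) pair for the maintainers-discussion issue.

    Every flagged comment is quoted next to a link to its source, so
    maintainers can judge each one and close the issue if it was misflagged.
    """
    title = f"Background check for @{username}: {len(toxic)} flagged comment(s)"
    lines = [
        f"The sentiment analyser flagged these public comments by @{username}.",
        "Please review them and close this issue if they are false positives.",
        "",
    ]
    for i, comment in enumerate(toxic, start=1):
        lines.append(f"**Comment {i}** ([source]({comment.url}))")
        lines.append("")
        # Prefix every line with "> " so multi-line comments stay inside the quote.
        body_lines = comment.body.splitlines() or [""]
        lines.append("\n".join("> " + line for line in body_lines))
        lines.append("")
    return title, "\n".join(lines)


if __name__ == "__main__":
    title, body = build_issue("octocat", [Comment("https://example.com/1", "line one\nline two")])
    print(title)
    print(body)
```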
