
Problem with lobste.rs (and possibly other search engines): timeout #1738

Comments


No need to activate lobste.rs in the prefs, just use


I tested from different nodes in DE and never got a response in under 6 sec. Set the timeout to 10 sec and got the error fixed. I will stop here; maybe there's someone who wants to pick up the baton.
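
For anyone following along, a per-engine timeout override in settings.yml would look roughly like the sketch below. The `timeout` key and the 10.0 value mirror the test described above; the rest of the lobste.rs entry is abbreviated here and is an assumption, so keep whatever your own settings.yml already has for the other keys.

```yaml
engines:
  # Sketch only: keep the existing keys of your lobste.rs entry
  # (search_url, xpath selectors, ...) and change just the timeout.
  - name: lobste.rs
    engine: xpath
    timeout: 10.0  # seconds; value taken from the test above
```

searx has to be restarted for a changed settings.yml to take effect.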


@return42 why don't you push your commit into the master branch of searx?


because it is only a partial bugfix, not the solution .. read again ..


I could not reproduce the timeout problem you are describing. Whenever I changed the timeout and restarted searx, the UI displayed the correct value and the search was either done or not depending on the setting. I have submitted a PR with an XPath fix which closes the issue. @x-0n if the timeout problem still persists, feel free to reopen this issue.

Issue:

When running a search, lobste.rs fails for me. In the results page, I get notified that

in /var/log/uwsgi/uwsgi.log it looks like this:

In my settings.yml, I have the following settings:

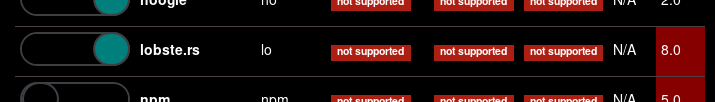

But checking prefs from web UI looks like this:

Expected result

Steps to reproduce

Version
