
feature: Allow for New relic integration #3463

Comments


@kkkarthik6 thanks for sharing the use case. Are you using the …

Update: New Relic's Python Agent turns out to be the incorrect integration point with BentoML. See the conversation later in this thread, #3463 (comment).


Hi @ssheng, thanks for the reply. We have the following setup, and we also tried mounting FastAPI as our ASGI middleware. It always fails to boot with the warning above. We start the app using this command: …

Error log: …


I'll share an experiment I ran:

```bash
# run-newrelic-instrumented-bento.sh

pip install newrelic

NEW_RELIC_LICENSE_KEY="MY KEY" \
NEW_RELIC_APP_NAME="bento-tensorflow-poc" \
NEW_RELIC_APPLICATION_LOGGING_ENABLED=true \
NEW_RELIC_APPLICATION_LOGGING_LOCAL_DECORATING_ENABLED=true \
NEW_RELIC_APPLICATION_LOGGING_FORWARDING_ENABLED=true \
newrelic-admin run-program uvicorn poc_api.service:svc.asgi_app --host 0.0.0.0 --port 5000 --workers 1
```

Requirements: I used the …

`src/poc_model/service.py`:

import numpy as np

from numpy.typing import NDArray

from typing import List

from PIL.Image import Image as PILImage

from textwrap import dedent

import bentoml

from bentoml.io import Image, JSON

from bentoml.io import NumpyNdarray

from bentoml.exceptions import InternalServerError

from pydantic import BaseModel, Field

# endpoint that inputs and outputs a string

from bentoml.io import Text

from loguru import logger as LOGGER

mnist_classifier: bentoml.Model = bentoml.tensorflow.get("tensorflow_mnist:latest")

mnist_classifier_runner: bentoml.Runner = mnist_classifier.to_runner()

svc = bentoml.Service(

name="tensorflow_mnist_service",

runners=[mnist_classifier_runner],

)

from rich import inspect

print(inspect(svc, methods=True))

class ClassifyDigitResponse(BaseModel):

confidences: List[float] = Field(..., description=dedent("""\

Confidence scores for each digit class.

The `0`th element is the confidence score for digit `0`,

the `1`st element is the confidence score for digit `1`,

the `2`st element is the confidence score for digit `2`,

and so on.

"""))

"""

Request:

curl -X 'POST' \

'http://0.0.0.0:5000/classify-digit' \

-H 'accept: application/json' \

-H 'Content-Type: image/jp2' \

--data-binary '@7.png'

Response:

{

"confidences": [

-3.8217179775238037,

1.1907492876052856,

-1.0903446674346924,

-0.28582924604415894,

-3.335996627807617,

-5.478107929229736,

-13.418190956115723,

14.586523056030273,

-1.2501418590545654,

1.6832548379898071

]

}

Response headers:

content-length: 213

content-type: application/json

date: Tue,31 Jan 2023 07:55:31 GMT

server: uvicorn

x-bentoml-request-id: 1925001177826861353

"""

@svc.api(

route="/classify-digit",

input=Image(),

output=JSON(pydantic_model=ClassifyDigitResponse),

)

async def classify_digit(f: PILImage) -> "np.ndarray":

assert isinstance(f, PILImage)

arr = np.array(f) / 255.0

assert arr.shape == (28, 28)

# We are using greyscale image and our PyTorch model expect one extra channel dimension

arr = np.expand_dims(arr, (0, 3)).astype("float32") # reshape to [1, 28, 28, 1]

confidences_arr: NDArray = await mnist_classifier_runner.async_run(arr) # [1, 10]

confidences = list(confidences_arr[0])

return ClassifyDigitResponse(confidences=confidences)

"""

Request:

curl -X 'POST' \

'http://0.0.0.0:5000/newrelic-log' \

-H 'accept: text/plain' \

-H 'Content-Type: text/plain' \

-d 'None'

Response: "None"

Response Headers:

content-length: 4

content-type: text/plain; charset=utf-8

date: Tue,31 Jan 2023 07:50:05 GMT

server: uvicorn

x-bentoml-request-id: 283141936243368829

"""

@svc.api(input=Text(), output=Text(), route="/newrelic-log")

def health(text: str):

LOGGER.info(f"This is an INFO log. {text}")

return text

"""

Request:

curl -X 'POST' \

'http://0.0.0.0:5000/newrelic-error' \

-H 'accept: text/plain' \

-H 'Content-Type: text/plain' \

-d 'None'

Response: error

Response headers:

content-length: 2

content-type: application/json

date: Tue,31 Jan 2023 07:48:40 GMT

server: uvicorn

Note: the bento trace id wasn't returned here ^^^. That'd be bad if I were trying to debug this error.

Also, the NewRelic trace ID header isn't here in both endpoints.

"""

@svc.api(input=Text(), output=Text(), route="/newrelic-error")

def error(text: str):

LOGGER.error(f"This is an ERROR log. {text}")

exc = InternalServerError(message="Fail!")

LOGGER.exception(exc)

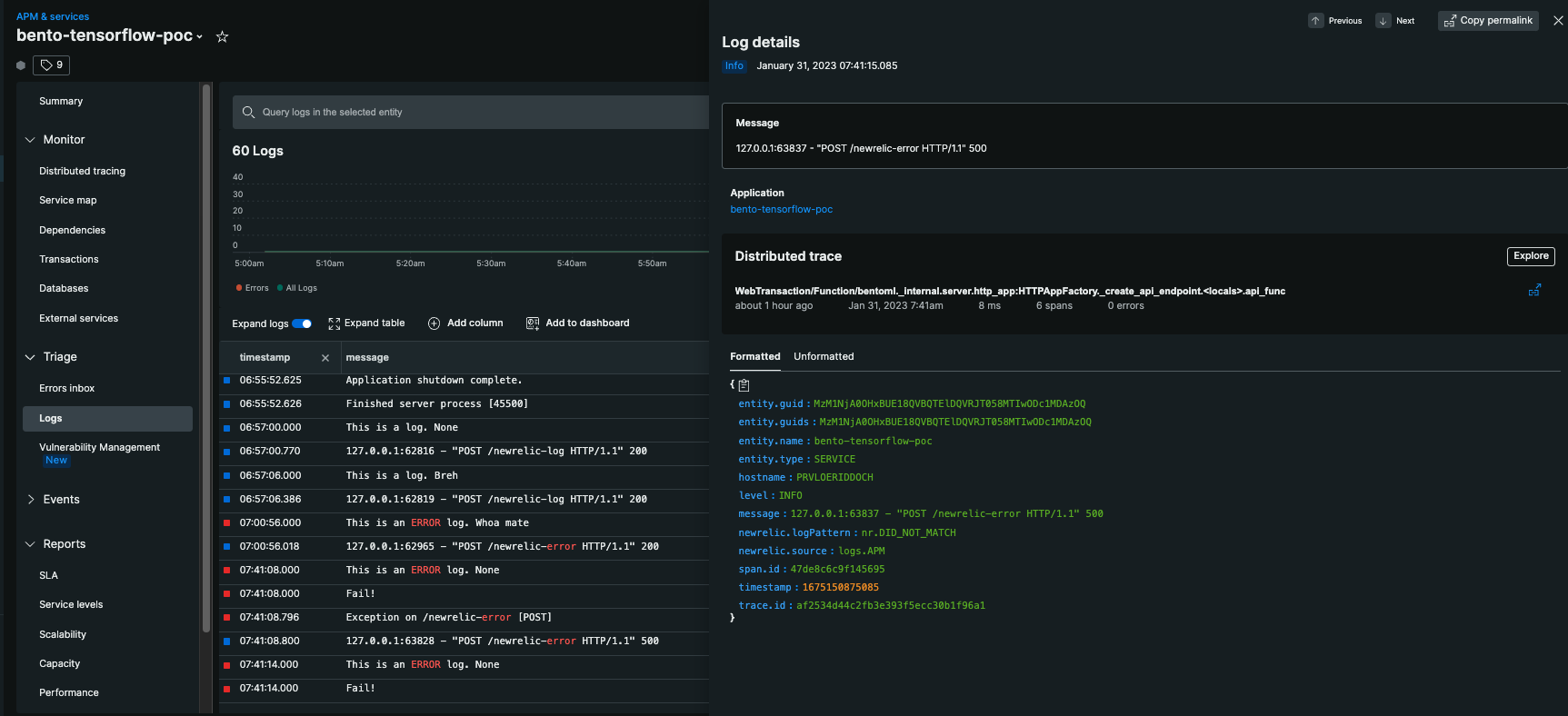

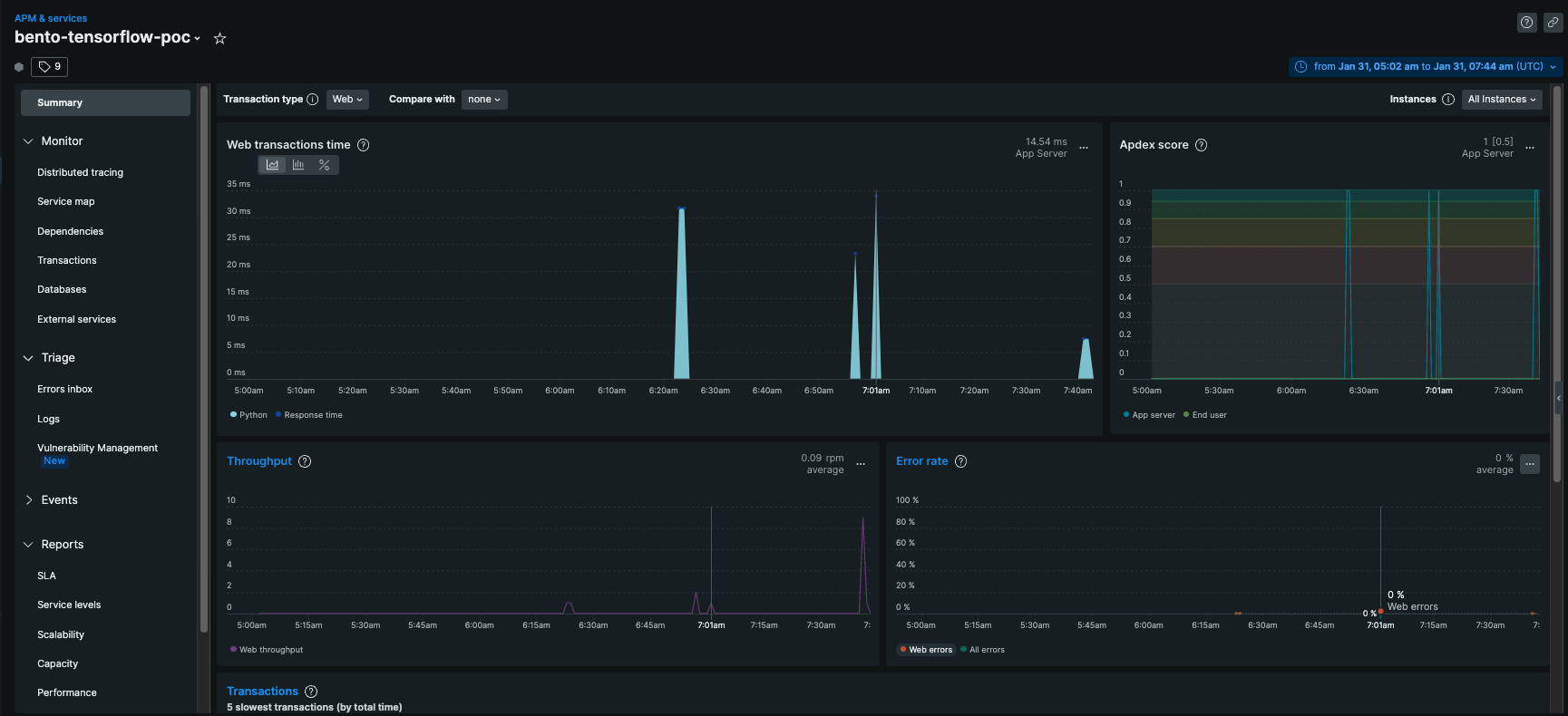

raise excHere are some of the logs I got. You can see that the instrumentation affected Here's the logs page in NewRelicHere's the tracing page:Summary pageI've done this same setup with FastAPI before. Unlike FastAPI, with Bento I'm not seeing

Can you think of a way I could properly configure NewRelic to have these features? These may be deal breakers for us. At the end of the day, we need to be able to: …

And it's a major plus if: …
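On the missing IDs noted in the `/newrelic-error` docstring above, one possible stopgap is a pure-ASGI middleware that guarantees every HTTP response, including error responses, carries a request ID header. This is a minimal sketch, not New Relic's trace ID and not BentoML's `x-bentoml-request-id`: it echoes an incoming `x-request-id` header or generates a UUID4. The class name `RequestIdMiddleware` and the `x-request-id` header name are hypothetical choices, and it assumes that middleware registered with BentoML's `svc.add_asgi_middleware(...)` wraps the API server app so that error responses pass through it.

```python
import uuid
from typing import Any, Awaitable, Callable, MutableMapping

Scope = MutableMapping[str, Any]
Message = MutableMapping[str, Any]
Receive = Callable[[], Awaitable[Message]]
Send = Callable[[Message], Awaitable[None]]
ASGIApp = Callable[[Scope, Receive, Send], Awaitable[None]]


class RequestIdMiddleware:
    """Hypothetical ASGI middleware: make sure every HTTP response has a request ID header."""

    def __init__(self, app: ASGIApp, header_name: str = "x-request-id") -> None:
        self.app = app
        # ASGI header names are lowercase byte strings.
        self.header_name = header_name.lower().encode("latin-1")

    async def __call__(self, scope: Scope, receive: Receive, send: Send) -> None:
        if scope["type"] != "http":
            # Pass lifespan and websocket traffic through untouched.
            await self.app(scope, receive, send)
            return

        # Reuse the caller's ID if it sent one, otherwise mint a fresh UUID4.
        request_id = None
        for name, value in scope.get("headers", []):
            if name.lower() == self.header_name:
                request_id = value
                break
        if request_id is None:
            request_id = uuid.uuid4().hex.encode("latin-1")

        async def send_with_id(message: Message) -> None:
            if message["type"] == "http.response.start":
                headers = list(message.get("headers", []))
                # Only add the header if the inner app did not already set it.
                if not any(name.lower() == self.header_name for name, _ in headers):
                    headers.append((self.header_name, request_id))
                message = {**message, "headers": headers}
            await send(message)

        await self.app(scope, receive, send_with_id)
```

Registering it would presumably be a one-liner in `service.py`, `svc.add_asgi_middleware(RequestIdMiddleware)`. Wrapping `send` rather than the endpoint means the header is added even when the endpoint raises, as `/newrelic-error` does, provided the server still emits an `http.response.start` message for the error.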


There's an ongoing NewRelic support thread here, though as of right now, there isn't much there.

Moving some of the conversation we had with @phitoduck here, in the hope that it is helpful to @kkkarthik6.

Thanks for the insightful post, @phitoduck. It seems like you are integrating with New Relic through the Python Agent. I haven't read much into the implementation, but it seems like the instrumentation is automatically injected into the ASGI application if the application was started with …

Treating BentoML as a simple ASGI application will not work out of the box. BentoML's runner architecture spawns multiple processes to optimize system resource utilization: instead of a single uvicorn process, BentoML consists of multiple ASGI processes. I will need to look into New Relic further to find a better integration point.

Since BentoML is fully compliant with the OpenTelemetry and Prometheus standards, I'm hopeful that New Relic has proper support for those standards. New Relic supports the OpenTelemetry Protocol (OTLP) for exporting trace data, and in BentoML you can customize the OTLP exporter in the tracing configuration to point at New Relic's endpoint. New Relic also supports exporting Prometheus metrics through their Prometheus OpenMetrics integration for Docker.
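A minimal sketch of the OTLP route, in Python to match the service code. It assumes BentoML's 1.0-era configuration layout (a top-level `tracing` section with `exporter_type`, `sample_rate`, and an `otlp` block holding `protocol` and `endpoint`); those key names should be checked against the configuration reference for the installed BentoML version. It also assumes New Relic's OTLP/HTTP endpoints on port 4318, and that the license key reaches New Relic as an `api-key` header through the standard OpenTelemetry variable `OTEL_EXPORTER_OTLP_HEADERS`, which the OpenTelemetry Python OTLP exporters read when no headers are passed in code. The helpers `newrelic_tracing_setup` and `write_config` are hypothetical.

```python
import json
from pathlib import Path
from typing import Dict, Tuple

# Assumed New Relic OTLP/HTTP endpoints (port 4318); verify for your account's region.
NEW_RELIC_OTLP_HOSTS = {
    "US": "https://otlp.nr-data.net:4318",
    "EU": "https://otlp.eu01.nr-data.net:4318",
}


def newrelic_tracing_setup(
    license_key: str, region: str = "US", sample_rate: float = 1.0
) -> Tuple[dict, Dict[str, str]]:
    """Hypothetical helper: build a BentoML tracing config plus the env vars the exporter needs."""
    host = NEW_RELIC_OTLP_HOSTS[region]
    config = {
        "tracing": {
            "exporter_type": "otlp",
            "sample_rate": sample_rate,
            "otlp": {
                # Assumed BentoML 1.0 key names; check your version's configuration reference.
                "protocol": "http",
                # OpenTelemetry's HTTP exporter uses an explicit endpoint as-is, so the
                # /v1/traces path is included (assumes BentoML passes this value through).
                "endpoint": f"{host}/v1/traces",
            },
        }
    }
    env = {
        # Standard OpenTelemetry variable: comma-separated key=value headers for OTLP requests.
        "OTEL_EXPORTER_OTLP_HEADERS": f"api-key={license_key}",
        # BENTOML_CONFIG tells BentoML which configuration file to load.
        "BENTOML_CONFIG": "bentoml_configuration.yaml",
    }
    return config, env


def write_config(config: dict, path: str) -> None:
    # JSON is also valid YAML, so a .yaml file can be written without a YAML library.
    Path(path).write_text(json.dumps(config, indent=2))
```

In use, `config, env = newrelic_tracing_setup(license_key)` followed by `write_config(config, env["BENTOML_CONFIG"])` produces the file, and `bentoml serve` is then started with both variables exported. Because environment variables and the config file are inherited by the processes BentoML spawns, this approach does not depend on wrapping a single uvicorn process, though that is worth confirming in New Relic's trace view.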


I opened a ticket with the NewRelic support team. Here's an email I just got from them:


Feature request

We are using BentoML for our model serving and have found it to be a great library. However, we are facing issues when integrating with New Relic.

Motivation

We have been using BentoML for our work. It is a great repository; thank you for building it. However, when we try to integrate BentoML with New Relic monitoring, we get the following error. We tried mounting a FastAPI app and a custom middleware, but these efforts have not been fruitful. Please help us integrate New Relic with BentoML.

Debug mode output from BentoML:

Complete error message:

Other

No response
