Round up for the batch size to improve par_iter performance #9814

The default `usize` division rounds down, which leads to undersized batches when `max_size` isn't exactly divisible by the batch count.
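The effect of the rounding direction can be sketched in plain Rust. This is not Bevy's implementation: `batch_size_floor`, `batch_size_ceil` and `batch_lengths` are hypothetical helpers, and splitting 59 items into 6 batches (one per thread) is an assumed configuration chosen for illustration.

```rust
/// Round-down batch size (the behaviour before the change). Hypothetical helper.
/// Assumes `batch_count > 0`; clamps to 1 so a zero batch size is never produced.
fn batch_size_floor(max_size: usize, batch_count: usize) -> usize {
    (max_size / batch_count).max(1)
}

/// Round-up batch size (the behaviour after the change). Hypothetical helper.
/// `(a + b - 1) / b` is the usual integer ceiling-division idiom.
fn batch_size_ceil(max_size: usize, batch_count: usize) -> usize {
    ((max_size + batch_count - 1) / batch_count).max(1)
}

/// Lengths of the consecutive batches that cover `max_size` items.
fn batch_lengths(max_size: usize, batch_size: usize) -> Vec<usize> {
    (0..max_size)
        .step_by(batch_size)
        .map(|start| batch_size.min(max_size - start))
        .collect()
}

fn main() {
    // Hypothetical configuration: 59 items, 6 batches (one per thread).
    let (max_size, batch_count) = (59, 6);
    let down = batch_lengths(max_size, batch_size_floor(max_size, batch_count));
    let up = batch_lengths(max_size, batch_size_ceil(max_size, batch_count));
    println!("round down: {} batches {:?}", down.len(), down);
    println!("round up:   {} batches {:?}", up.len(), up);
}
```

Under these assumptions, rounding down yields seven batches (`[9, 9, 9, 9, 9, 9, 5]`), so one thread has to pick up the leftover batch after finishing its first; rounding up yields exactly one batch per thread (`[10, 10, 10, 10, 10, 9]`).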

Great PR description and clear demonstration of the effect. Thanks!

Actually, can you please update the docs to describe this behaviour?

Thanks! I added a small clarification to the docs to be explicit about the rounding. Is there anywhere else the rough maths is mentioned? Those are the docs the Bevy 0.10 release notes point to, and they're the only place I could find it mentioned.

I've been trying to construct a degenerate case where this might cause a perf regression, but I can't think of one at the moment.
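Under idealized assumptions (every item costs the same, each batch runs on a free thread, and there are no batch-size limits or scheduling overhead), a short argument suggests rounding up can never be slower than rounding down. With $n \ge 1$ items and $t$ threads, the round-up batch size gives at most one batch per thread, and no split of $n$ equal-cost items over $t$ threads can finish faster than $\lceil n/t \rceil$ items on the busiest thread:

$$
\begin{aligned}
b_{\uparrow} &= \lceil n/t \rceil \;\Rightarrow\; n/b_{\uparrow} \le t \;\Rightarrow\; \lceil n/b_{\uparrow} \rceil \le t \;\Rightarrow\; T_{\uparrow} = b_{\uparrow} = \lceil n/t \rceil \\
T &\ge \lceil n/t \rceil \quad \text{for any split, in particular } T_{\downarrow} \ge \lceil n/t \rceil = T_{\uparrow}
\end{aligned}
$$

Workloads with uneven per-item cost, or extra batching configuration, fall outside this model.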

…ne#9814)

# Objective

The default division for a `usize` rounds down, which means the batch sizes were too small when the `max_size` isn't exactly divisible by the batch count.

## Solution

Changing the division to round up fixes this, which can dramatically improve performance when using `par_iter`.

I created a small example to prove this out and measured some results. I don't know if it's worth committing this permanently, so I left it out of the PR for now.

```rust
use std::{thread, time::Duration};

use bevy::{
    prelude::*,
    window::{PresentMode, WindowPlugin},
};

fn main() {
    App::new()
        .add_plugins((DefaultPlugins.set(WindowPlugin {
            primary_window: Some(Window {
                // Disable vsync so frame time reflects the work done per frame.
                present_mode: PresentMode::AutoNoVsync,
                ..default()
            }),
            ..default()
        }),))
        .add_systems(Startup, spawn)
        .add_systems(Update, update_counts)
        .run();
}

#[derive(Component, Default, Debug, Clone, Reflect)]
pub struct Count(u32);

fn spawn(mut commands: Commands) {
    // Worst case
    let tasks = bevy::tasks::available_parallelism() * 5 - 1;
    // Best case
    // let tasks = bevy::tasks::available_parallelism() * 5 + 1;
    for _ in 0..tasks {
        commands.spawn(Count(0));
    }
}

// Each entity simulates 10ms of work, so the frame time shows how evenly
// the batches are spread across threads.
fn update_counts(mut count_query: Query<&mut Count>) {
    count_query.par_iter_mut().for_each(|mut count| {
        count.0 += 1;
        thread::sleep(Duration::from_millis(10));
    });
}
```

## Results

I ran this four times, with and without the change, with best-case (should favour the old maths) and worst-case (should favour the new maths) task numbers.

### Worst case

Before the change, the batches were 9 on each thread, plus the remainder of 5 ran on one of the threads in addition. With the change, it's 10 on each thread apart from one, which has 9. The results show a decrease from ~140ms to ~100ms, which matches what you would expect from the maths (`10 * 10ms` vs `(9 + 5) * 10ms`).

### Best case

Before the change, the batches were 10 on each thread, plus the remainder of 1 ran on one of the threads in addition. With the change, it's 11 on each thread apart from one, which has 5. The results slightly favour the new change but are basically identical, as the total time is determined by the worst-case thread, which is `11 * 10ms` for both tests.
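As a rough cross-check of the arithmetic in the results, the sketch below estimates frame time as the busiest thread's item count multiplied by the 10ms sleep. It is an idealized model, not Bevy's scheduler: `batch_lengths` and `estimated_frame_time` are hypothetical helpers, batches are assumed to go to the least-loaded worker, and the 6-worker configuration with 59 and 61 items is an assumption that happens to reproduce the worst-case batch layout described above.

```rust
use std::time::Duration;

/// Split `items` into consecutive batches of at most `batch_size` items.
/// Assumes `batch_size > 0` (`step_by(0)` panics).
fn batch_lengths(items: usize, batch_size: usize) -> Vec<usize> {
    (0..items)
        .step_by(batch_size)
        .map(|start| batch_size.min(items - start))
        .collect()
}

/// Idealized estimate: hand each batch, in order, to the least-loaded worker
/// (ties go to the lowest index) and return the busiest worker's total time.
/// Assumes `workers > 0` and that every item costs exactly `per_item`.
fn estimated_frame_time(batches: &[usize], workers: usize, per_item: Duration) -> Duration {
    let mut load = vec![0usize; workers];
    for &len in batches {
        let idx = (0..workers).min_by_key(|&i| load[i]).unwrap();
        load[idx] += len;
    }
    let busiest = load.into_iter().max().unwrap_or(0);
    per_item * busiest as u32
}

fn main() {
    let per_item = Duration::from_millis(10); // the sleep in `update_counts`
    let workers = 6; // hypothetical worker-thread count

    for (label, items) in [("worst case", 59), ("best case", 61)] {
        let before = batch_lengths(items, items / workers); // round down
        let after = batch_lengths(items, (items + workers - 1) / workers); // round up
        println!(
            "{label}: before {:?}, after {:?}",
            estimated_frame_time(&before, workers, per_item),
            estimated_frame_time(&after, workers, per_item),
        );
    }
}
```

Under these assumptions the model gives 140ms before and 100ms after for the worst case, and 110ms for both best-case variants, in line with the `11 * 10ms` reasoning.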

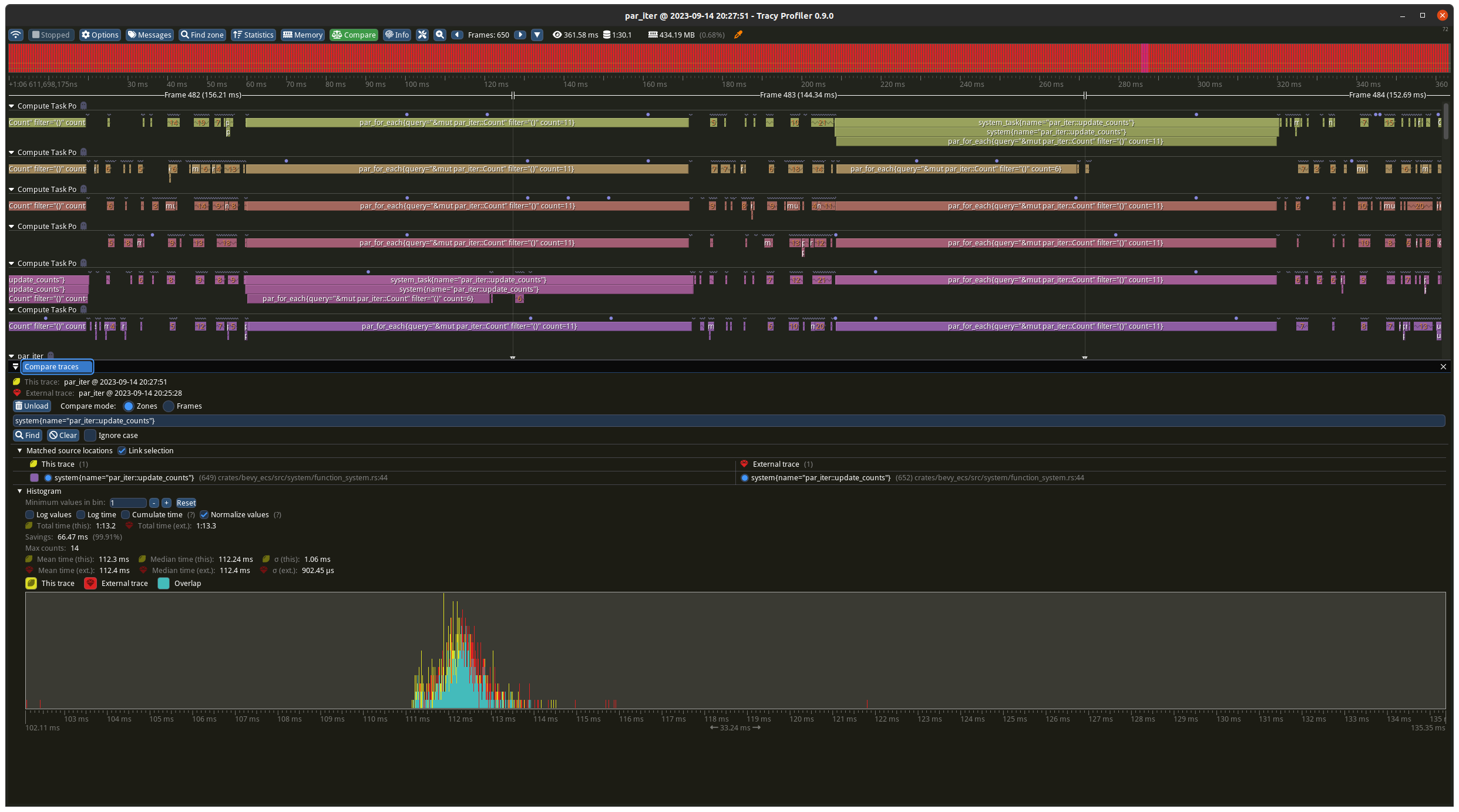

I ran this four times, with and without the change, with best case (should favour the old maths) and worst case (should favour the new maths) task numbers.

Worst case

Before the change the batches were 9 on each thread, plus the 5 remainder ran on one of the threads in addition. With the change its 10 on each thread apart from one which has 9. The results show a decrease from ~140ms to ~100ms which matches what you would expect from the maths (

10 * 10msvs(9 + 4) * 10ms).Best case

Before the change the batches were 10 on each thread, plus the 1 remainder ran on one of the threads in addition. With the change its 11 on each thread apart from one which has 5. The results slightly favour the new change but are basically identical as the total time is determined by the worse case which is

11 * 10msfor both tests.