
Feature request - Ambiophonics effect #54

Comments

|

The basic algorithm looks fairly simple and easy to implement. The only part that's a bit complicated is the delay block. I assume that subsample delay is desirable; otherwise the delay could only be adjusted in 1 sample increments (~22.7µs at 44.1kHz). Something you could do in the meantime is create a "true stereo" (4 channel) impulse of an existing implementation and apply it with the |
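To make the subsample-delay point concrete, here is a minimal sketch of a fractional delay using linear interpolation. It is only an illustration, not how dsp does or would implement it; the function name `fractional_delay` and the 70 µs example value are assumptions made for the example.

```python
def fractional_delay(signal, delay_samples):
    """Delay `signal` by a non-negative, possibly fractional, number of samples.

    Linear interpolation between the two nearest input samples is the simplest
    subsample delay; it slightly attenuates high frequencies, so a real
    implementation might prefer a better interpolator.
    """
    if delay_samples < 0:
        raise ValueError("delay must be non-negative")
    whole = int(delay_samples)      # integer part of the delay
    frac = delay_samples - whole    # fractional part, 0 <= frac < 1
    out = []
    for n in range(len(signal)):
        i = n - whole
        newer = signal[i] if 0 <= i < len(signal) else 0.0
        older = signal[i - 1] if 0 <= i - 1 < len(signal) else 0.0
        # y[n] = (1 - frac) * x[n - whole] + frac * x[n - whole - 1]
        out.append((1.0 - frac) * newer + frac * older)
    return out


SAMPLE_RATE = 44100
step_us = 1e6 / SAMPLE_RATE              # size of a one-sample step, about 22.68 µs
delay_in_samples = 70e-6 * SAMPLE_RATE   # an assumed 70 µs delay is about 3.09 samples
```

With whole-sample delays only, the adjustment grid is `step_us` wide; the interpolated version accepts any value in between.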

|

Hi, thanks for the response.

Yeah, those steps are probably too large to account for all possible configurations; steps of 5-10 µs or less would be ideal.

From what I understand, using convolution wouldn't work with this algorithm. There is a discussion on Hydrogen Audio from someone that tried to do this. Convolution can be used for applying "concert hall ambience" through some additional surround speakers, but that's not part of RACE. |

|

I suspect that he didn't do it correctly. Based on the block diagram that you posted, convolution will work just fine provided that 1) The impulse is long enough, and 2) The impulse is four channels, not just two. This is what allows the required "ping-pong" between channels. A two channel impulse would only work correctly for monophonic source material. The correct procedure for creating an FIR filter for this purpose is to send an impulse through the left input only and record both left and right outputs, then send an impulse through the right input and record both left and right outputs again. These two stereo outputs should then be merged to produce a four channel filter with the following channel mapping: LL, LR, RL, RR. (LL is left input / left output; LR is left input / right output; RL is right input / left output; RR is right input / right output). |
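The capture procedure above can be sketched in a few lines. Note that `race` below is a deliberately simplified stand-in (each output is its input minus an attenuated, delayed copy of the opposite output) and is an assumption for illustration, not the full RACE algorithm from the paper; the gain, the whole-sample delay, and all function names are hypothetical.

```python
def race(left, right, gain=0.7, delay=3):
    """Toy recursive crosstalk canceller (illustrative assumption only).

    `delay` is in whole samples and must be at least 1.
    """
    out_l, out_r = [], []
    for n in range(len(left)):
        prev_r = out_r[n - delay] if n >= delay else 0.0
        prev_l = out_l[n - delay] if n >= delay else 0.0
        out_l.append(left[n] - gain * prev_r)   # cancel what leaked from the right
        out_r.append(right[n] - gain * prev_l)  # cancel what leaked from the left
    return out_l, out_r


def capture_true_stereo_ir(process, length):
    """Record LL, LR, RL, RR by sending an impulse through each input in turn."""
    impulse = [1.0] + [0.0] * (length - 1)
    silence = [0.0] * length
    ll, lr = process(impulse, silence)   # left input -> left / right outputs
    rl, rr = process(silence, impulse)   # right input -> left / right outputs
    return ll, lr, rl, rr


def convolve(x, h):
    """Direct-form FIR convolution, truncated to len(x) samples."""
    return [sum(h[k] * x[n - k] for k in range(min(n + 1, len(h))))
            for n in range(len(x))]


def apply_true_stereo(left, right, ir):
    """Apply a four-channel impulse: each output sums contributions from both inputs."""
    ll, lr, rl, rr = ir
    out_l = [a + b for a, b in zip(convolve(left, ll), convolve(right, rl))]
    out_r = [a + b for a, b in zip(convolve(left, lr), convolve(right, rr))]
    return out_l, out_r
```

For this toy processor, `apply_true_stereo(left, right, capture_true_stereo_ir(race, n))` should match `race(left, right)` for signals up to `n` samples, because the processor is linear and time-invariant. Dropping LR and RL (a two channel impulse) loses the cross terms, which is why it would only hold up for monophonic material.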

|

Okay, I'll try that out and see how it works. |

|

Hey, I finally got around to recording a true stereo IR, and indeed it does work, thanks! For others that want to try it, I roughly followed the HeSuVi IR recording guide except I used a pure impulse tone, rather than a 7.1 channel test file, and recorded L -> L/R and R -> L/R of ambio.one VST as separate two channel recordings, which are then exported to a 4 channel IR wav (after matching the two stereo IRs exactly at a sample level). I'm currently using a 90 ms IR, which appears to be long enough to correctly reproduce the algorithm; my initial test of a 1-second IR seems to be too CPU-intensive when run on my phone with JamesDSP, but there may be a sweet spot in between those two, if there is an actual need for a longer IR. |
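For anyone who would rather script the merge step, here is a rough sketch that interleaves two sample-aligned stereo recordings into one 4 channel WAV in LL, LR, RL, RR order using only Python's standard library. This is not the tool used above; the function name, the 16-bit PCM format, and the 44.1 kHz default are assumptions, and whether a given convolver accepts a plain 4 channel PCM file should be checked.

```python
import struct
import wave


def write_four_channel_ir(dest, ll, lr, rl, rr, sample_rate=44100):
    """Write four equal-length float responses (-1.0..1.0) as a 4 channel WAV.

    `dest` is a filename or a writable, seekable binary file object.
    Channel order in the file is LL, LR, RL, RR.
    """
    if not (len(ll) == len(lr) == len(rl) == len(rr)):
        raise ValueError("all four responses must have the same length")
    frames = bytearray()
    for frame in zip(ll, lr, rl, rr):
        for sample in frame:
            clipped = max(-1.0, min(1.0, sample))             # guard against overflow
            frames += struct.pack("<h", int(round(clipped * 32767)))
    with wave.open(dest, "wb") as wav:
        wav.setnchannels(4)
        wav.setsampwidth(2)          # 16-bit PCM
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))


# A 90 ms response at 44.1 kHz is 3969 samples per channel.
ir_length = round(0.090 * 44100)
```

The length check stands in for the "matching exactly at a sample level" step: if the two recordings are not aligned and equal in length, the cross terms will not line up.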

|

Thanks for the update. Always nice to hear that I was right :P I may still try to implement this algorithm at some point since subsample delay could be useful for other things too. No promises on when that might happen though. |

|

No problem. |

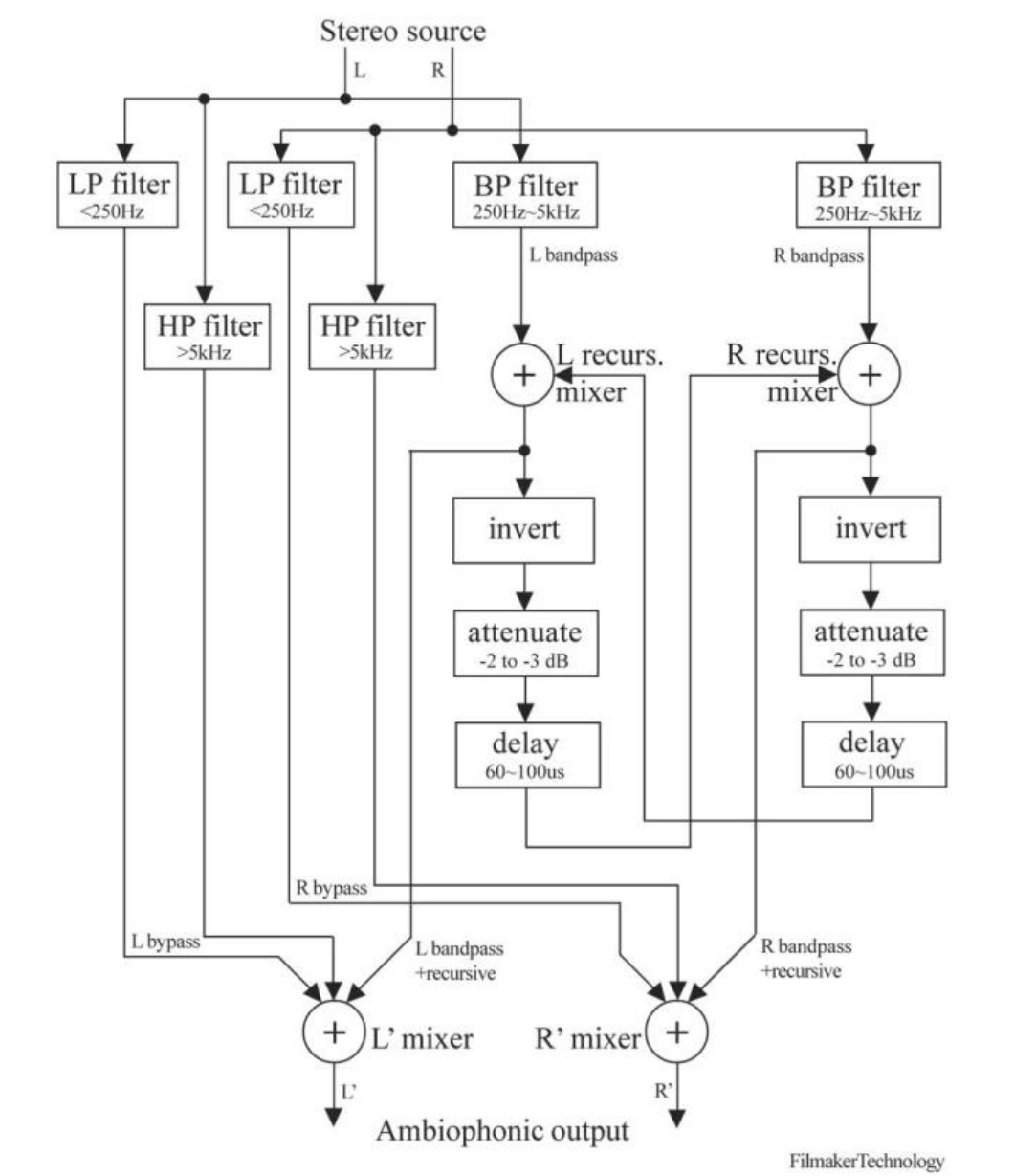

Ambiophonics is a method of sound reproduction that involves crosstalk cancellation between 2 or 4 speakers (Panambiophonics), to produce more realistic imaging including a wider soundstage, and a stronger central image (no phantom image). Unlike other methods, this is intended to be used with existing stereo and multichannel material. This effect can be achieved in a passive way by positioning the two speakers with a separation of less than 30 degrees, and separating them by an acoustic barrier. Obviously, this is rather impractical for most, so this effect can also be achieved through DSP combined with the appropriate level of speaker separation (< 30 degrees).

Consequently, Recursive Ambiophonic Crosstalk Elimination (RACE) was invented. Here's a block diagram that can likely explain it better than I can:

And an extract from this paper Glasgal, 2007:

The same article also includes equations for implementing the recursive portion. I suspect it would be fairly straightforward for someone with an in-depth knowledge of C and audio DSP, but I could be wrong.

In any case, it would be nice to see a FOSS implementation of Ambiophonics, since, to my knowledge, there is no such implementation at this time. There's not even anything directly supported by Linux (there was a Java transcoder that has since disappeared and never worked that well). You can use Windows VST plugins such as: Filmaker Ambiophonics DSP (paid) or Ambio-One (free as in beer, but abandoned) but that's a little inconvenient. There's also a hardware implementation with a MiniDSP 2x4 which I'm currently using, but it starts getting expensive if you want to do 4 channel panambiophonics (requiring two miniDSP 2x4). That aspect should be doable with two instances of dsp and correct routing of the source material; it can work with stereo material as well by sending the same signal to two RACE processors.

Key features:

Recommended features:

Nice to have:

Further reading:

Thank you for reading this, and I hope I don't come across as too demanding here given it's an unpaid project in your free time. I just really enjoy using this effect in my system. I would have a crack at it myself, but I suspect it's a bit beyond my knowledge level at this point.
