[bug] conan upload -r fails to resolve python_requires from other remotes

#6034

Comments

I understand what you mean, and this could be considered a bug. Do you see the same behavior with older Conan versions? Maybe @memsharded has some insight into this.

This is mostly by design. Because of the evil inversion of control, it is really difficult to control that, and the upload doesn't have the logic to install from other remotes. The new python_requires approach we are working on, with delayed loading of python_requires as attributes, should probably remove this issue. Let's add tests to #5804.

I didn't try with older Conan versions. I've worked around the problem on the Jenkins side by manipulating the order of remotes and then invoking the

Thanks for the info about the workaround. That should be the best solution right now. Could you explain why the python_requires package is deleted from the cache before doing the upload? I guess it matters in your current CI flow, and I would like to understand it.

|

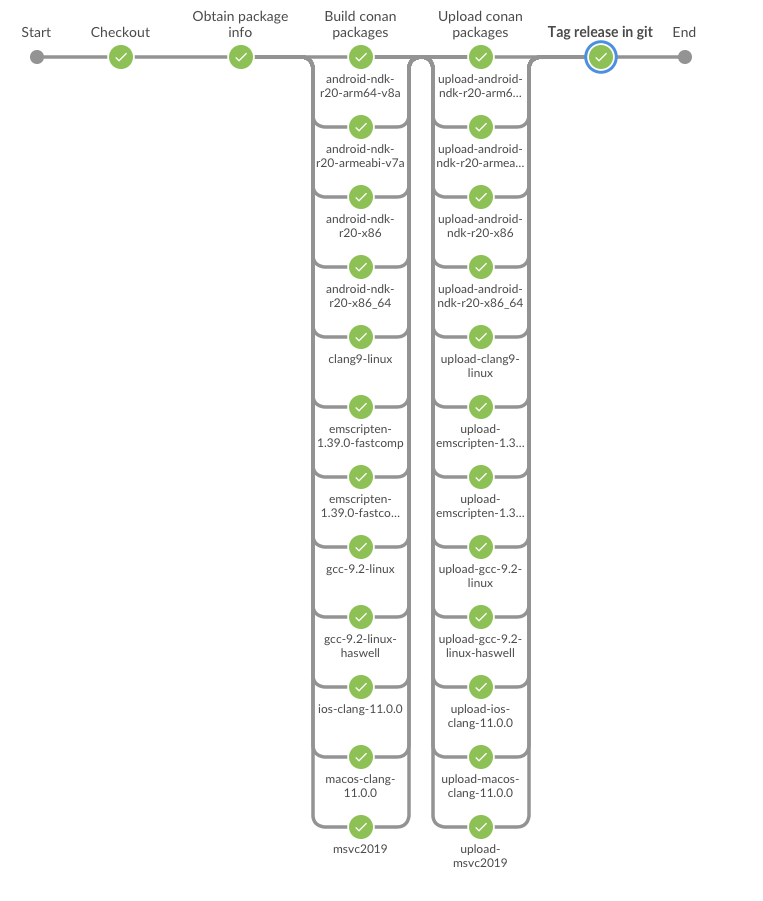

It's not deleted - it never existed in the first place :) The reason for this is that I have a separate package build stage in CI which builds conan packages in parallel on different CI nodes for different platforms. However, I do not want any of the builders to upload their packages to the Artifactory server until all builders have successfully built their package. Without that, I would end up with broken packages on my Artifactory server. So, each builder, after it finishes building packages. first calls a dry run of upload to make sure that When all builders finish, it's safe to upload the built packages. For that matter Jenkins spawns new N parallel tasks, where each unstashes the stashed conan cache build in previous stage and simply uploads everything to the artifactory. Thus, when building packages, our CI makes sure to upload all built packages, including the packages built by dependency. However, the Here is the screenshot of our jenkins process (this job is triggered on every repository after merging PR to |
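For illustration, the gating described above (nobody uploads until every builder has built and dry-run-checked its packages) could be sketched roughly as follows. This is only a sketch under assumptions: `BuilderResult`, `plan_uploads`, the `*` pattern and the remote name are hypothetical, and it assumes the "dry run" corresponds to the Conan 1.x `--skip-upload` flag, which runs the upload checks without transferring anything. It only builds command lists and does not run Conan.

```python
from dataclasses import dataclass


@dataclass
class BuilderResult:
    """Outcome reported by one CI node (hypothetical structure)."""
    node: str
    build_ok: bool
    dry_run_ok: bool


def dry_run_upload_command(pattern, remote):
    # Assumption: the dry run maps to --skip-upload (checks only, no transfer).
    return ["conan", "upload", pattern, "--all", "--skip-upload",
            "-r", remote, "--confirm"]


def upload_command(pattern, remote):
    # The real upload, issued only after every builder has passed.
    return ["conan", "upload", pattern, "--all", "-r", remote, "--confirm"]


def plan_uploads(results, pattern, remote):
    """Return (node, command) pairs, or an empty list if any builder failed."""
    if not results:
        return []
    if not all(r.build_ok and r.dry_run_ok for r in results):
        return []
    return [(r.node, upload_command(pattern, remote)) for r in results]
```

With this shape, a single failed node yields an empty plan, so no remote ever receives a partial set of packages.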

The current recommended practice is to upload them to a temporary or "builds" Artifactory repository. You can use properties such as the build number. When all the jobs finish, you can run a "promotion" and move or copy the artifacts labelled with that property to the "production" Artifactory repository. That is, use Artifactory as the place to put packages instead of staging them in Jenkins. This has a few advantages, such as much easier inspection of a build's packages directly from Artifactory. Another one, which we rely on a lot: if a pipeline fails because some build server failed (memory, network...), it is not necessary to rebuild everything, only the failed job, which saves a lot of resources. We use Artifactory as the database of the CI. I'd recommend considering this approach.

Also, from your comments, it seems that you could leverage the

To gather the

So, at the moment, I think your workaround is good enough, but please consider these hints. Cheers!

Thank you for the hints. I will consider them and see if they fit our workflow (e.g. I will need to find a way to delete temporary artefacts from the "builds" repository if some branch fails, since I definitely do not want its storage to grow indefinitely, nor do I want to clean it manually; I will also need a way to automatically promote packages when all build branches are OK). I like the idea of using Artifactory as the database for Jenkins. It is also valuable for non-C++/Conan related stuff. Thank you for that!
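As a rough sketch of the promote-or-clean-up decision discussed above: given artifacts in the "builds" repository tagged with a build-number property, promote them when every job succeeded and otherwise mark them for deletion. Everything here is hypothetical and in-memory: the `build.number` property name, the dictionary layout and `plan_build_artifacts` are assumptions for illustration, and no Artifactory API is called.

```python
def plan_build_artifacts(artifacts, build_number, all_jobs_ok):
    """Decide what to do with the artifacts of one CI build.

    artifacts: list of {"path": str, "properties": dict} (hypothetical layout).
    Returns the paths to promote and the paths to delete.
    """
    tagged = [
        a["path"]
        for a in artifacts
        # Assumption: each upload was labelled with a "build.number" property.
        if a["properties"].get("build.number") == build_number
    ]
    if all_jobs_ok:
        return {"promote": tagged, "delete": []}
    # A failed build leaves nothing behind in the temporary repository.
    return {"promote": [], "delete": tagged}
```

Artifacts from other builds, or without the property, are left untouched in either case.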

Environment Details (include every applicable attribute)

Steps to reproduce (Include if Applicable)

1. Have two Conan remotes (let's call them `conan-first` and `conan-second`)
2. Upload a python_requires package to `conan-first` (let's call it `PyPack`)
3. Create a package that `python_requires` the `PyPack` package (let's call it `MyPack`)
4. Remove `PyPack` from your local `~/.conan/data` cache
5. Upload `MyPack` to `conan-second`

The problem is that `-r conan-second` is interpreted as both the upload target and the filter from which `python_requires` dependencies are to be resolved.

In my use case, I have `conan-first` as the primary Conan repository containing stable packages for production use. Now I want my CI to be able to build testing/staging packages and upload them to `conan-second`, which is a kind of temporary Conan repository for testing the code with unreleased packages. This repository has way more administrators who can delete or change already built packages.
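The reproduction could be scripted roughly like this against the Conan 1.x CLI. It is a sketch under assumptions not stated in the report: the version `0.1`, the `user/testing` user/channel, and the recipe directories `pypack` and `mypack` are all made up, and both remotes are assumed to be configured already.

```python
import subprocess

# Hypothetical references; only the package and remote names come from the report.
PYPACK = "PyPack/0.1@user/testing"
MYPACK = "MyPack/0.1@user/testing"


def reproduction_commands():
    """Return the Conan invocations in the order of the reproduction steps."""
    return [
        # Export PyPack and upload it to the first remote.
        ["conan", "export", "pypack", PYPACK],
        ["conan", "upload", PYPACK, "-r", "conan-first"],
        # Export MyPack, whose recipe python_requires PyPack.
        ["conan", "export", "mypack", MYPACK],
        # Remove PyPack from the local cache.
        ["conan", "remove", PYPACK, "--force"],
        # Upload MyPack to the second remote; this is the step reported to fail.
        ["conan", "upload", MYPACK, "-r", "conan-second"],
    ]


def run_all(commands, runner=subprocess.run):
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        runner(cmd, check=True)
```

Calling `run_all(reproduction_commands())` on a machine with Conan installed would execute the steps; the `runner` parameter exists so the sequence can be inspected without touching a real cache.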