
"no running task found" issue after upgrade to 7.7.0 #8172

Comments

Hi, where is this error happening, e.g. resource check, task step, put step?

@xtremerui resource check.

FWIW, pausing the pipeline then un-pausing it after something longer than

The workaround did help fix the issue I experienced with regular S3 storage checks for new deployment artifacts that run into I got this issue by upgrading from 7.7.0 to 7.7.1 in this order: shutting down the worker node, updating the worker node, starting the worker node, shutting down the web node, upgrading the web node, starting the web node (this was done manually on a development system). On the production system running the same pipelines, I first did the upgrade dance on the web node and afterwards on the worker node, resulting in no issues with

Is there any other information that you can give us? Does this happen occasionally, or does it keep flaking with this error? Or did it only happen at the beginning, when you upgraded, and never again? Was everyone that saw this error using

Same issue. It happened after

I'm seeing this issue on 7.7.1 using

```
sudo service k3s stop
/usr/local/bin/k3s-killall.sh
sudo service k3s start
```

and now I'm in that state for most (all?) of my checks. Hoping some kind of TTL will kick in... Looks like renaming a resource in a pipeline is enough to unstick it, but it's not a terribly satisfying workaround.

I wanted to try this, but I'd cunningly set some of these resources to

Problem cleared itself up overnight with no intervention.

FYI: Just upgraded from 7.7.1 to 7.8.0 without any issues (non-clustered deployment, just plain docker-compose).


We are also seeing these issues being on version As for the circumstances:


Just an update on this one. We updated to Concourse I tried a lot of stuff to analyze/overcome this, but nothing helped in the first place. Facts:

I've extracted the following log entries so far:

```
{"timestamp":"2022-06-23T11:53:49.986269148Z","level":"info","source":"worker","message":"worker.garden.garden-server.create.created","data":{"request":{"Handle":"387c694c-3556-4d43-495c-834610e7f343","GraceTime":0,"RootFSPath":"raw:///worker-state/volumes/live/a4a75891-4d12-43f0-45bd-56c0c3a9fe65/volume","BindMounts":[{"src_path":"/worker-state/volumes/live/09de2f61-be17-45b7-7023-4e572565d203/volume","dst_path":"scratch","mode":1}],"Network":"","Privileged":false,"Limits":{"bandwidth_limits":{},"cpu_limits":{},"disk_limits":{},"memory_limits":{},"pid_limits":{}}},"session":"1.2.24457"}}
{"timestamp":"2022-06-23T11:53:50.019615220Z","level":"info","source":"worker","message":"worker.garden.garden-server.get-properties.got-properties","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.24460"}}
{"timestamp":"2022-06-23T11:53:50.179611267Z","level":"info","source":"worker","message":"worker.garden.garden-server.run.spawned","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","id":"8c64794d-51d5-4bad-52d2-387b4375c6bb","session":"1.2.24461","spec":{"Path":"/opt/resource/check","Dir":"","User":"","Limits":{},"TTY":null}}}
{"timestamp":"2022-06-23T11:53:58.440124752Z","level":"info","source":"worker","message":"worker.garden.garden-server.run.exited","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","id":"8c64794d-51d5-4bad-52d2-387b4375c6bb","session":"1.2.24461","status":0}}
{"timestamp":"2022-06-23T11:57:05.835467090Z","level":"info","source":"worker","message":"worker.garden.garden-server.get-properties.got-properties","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.24979"}}
{"timestamp":"2022-06-23T11:57:06.067347438Z","level":"info","source":"worker","message":"worker.garden.garden-server.run.spawned","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","id":"49be3c07-64e5-4f62-6715-54427d31a925","session":"1.2.24980","spec":{"Path":"/opt/resource/check","Dir":"","User":"","Limits":{},"TTY":null}}}
{"timestamp":"2022-06-23T11:58:54.559256144Z","level":"error","source":"worker","message":"worker.garden.garden-server.attach.failed","data":{"error":"task: no running task found: task 387c694c-3556-4d43-495c-834610e7f343 not found: not found","handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.13"}}
{"timestamp":"2022-06-23T12:05:32.874380615Z","level":"info","source":"worker","message":"worker.garden.garden-server.get-properties.got-properties","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.927"}}
{"timestamp":"2022-06-23T12:05:32.879314608Z","level":"error","source":"worker","message":"worker.garden.garden-server.run.failed","data":{"error":"task retrieval: no running task found: task 387c694c-3556-4d43-495c-834610e7f343 not found: not found","handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.928"}}
```

A colleague of mine stumbled upon this issue and we suspected this to be a possible reason: So we updated to the latest focal kernel and deactivated the hugepages memory settings, with no success. Then I tried to tweak some of the resource check/GC/reaping time settings, as I suspected some kind of race condition, again with no success. As a last resort we had the idea to not use Still, I think this needs investigation. The volumes being just gone with
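The log excerpt above can be triaged mechanically. The sketch below is a hypothetical helper (not part of Concourse or Garden) that groups the `garden-server` events by container handle and flags handles whose container still answers `get-properties` while `run`/`attach` report `no running task found`. It also treats a drop in the trailing session counter (`1.2.24980` followed by `1.2.13`) as a hint that the worker process restarted in between; that reading of the `session` field is our assumption, not documented behavior. The sample lines are trimmed copies of the entries quoted above.

```python
import json
from collections import defaultdict

# Trimmed copies of the worker log entries quoted above (only the fields used below).
LOG_LINES = [
    '{"timestamp":"2022-06-23T11:53:49.986269148Z","message":"worker.garden.garden-server.create.created","data":{"request":{"Handle":"387c694c-3556-4d43-495c-834610e7f343"},"session":"1.2.24457"}}',
    '{"timestamp":"2022-06-23T11:53:58.440124752Z","message":"worker.garden.garden-server.run.exited","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.24461","status":0}}',
    '{"timestamp":"2022-06-23T11:57:06.067347438Z","message":"worker.garden.garden-server.run.spawned","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.24980"}}',
    '{"timestamp":"2022-06-23T11:58:54.559256144Z","message":"worker.garden.garden-server.attach.failed","data":{"error":"task: no running task found: task 387c694c-3556-4d43-495c-834610e7f343 not found: not found","handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.13"}}',
    '{"timestamp":"2022-06-23T12:05:32.874380615Z","message":"worker.garden.garden-server.get-properties.got-properties","data":{"handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.927"}}',
    '{"timestamp":"2022-06-23T12:05:32.879314608Z","message":"worker.garden.garden-server.run.failed","data":{"error":"task retrieval: no running task found: task 387c694c-3556-4d43-495c-834610e7f343 not found: not found","handle":"387c694c-3556-4d43-495c-834610e7f343","session":"1.2.928"}}',
]


def handle_of(data):
    # "create" events nest the handle under request.Handle; the others use data.handle.
    return data.get("handle") or data.get("request", {}).get("Handle")


def session_counter(session):
    # Assumption: the last dotted component is a per-process counter ("1.2.13" -> 13).
    return int(session.rsplit(".", 1)[1])


def analyze(lines):
    """Return (events per handle, timestamps where the session counter went backwards)."""
    timeline = defaultdict(list)
    restarts = []
    previous = None
    for line in lines:
        entry = json.loads(line)
        data = entry.get("data", {})
        counter = session_counter(data["session"])
        if previous is not None and counter < previous:
            restarts.append(entry["timestamp"])  # likely worker restart (assumption)
        previous = counter
        event = entry["message"].rsplit("garden-server.", 1)[-1]
        timeline[handle_of(data)].append((entry["timestamp"], event, data.get("error")))
    return timeline, restarts


def orphaned_handles(timeline):
    """Handles whose container still answers get-properties but whose task is gone."""
    orphans = []
    for handle, events in timeline.items():
        names = {name for _, name, _ in events}
        task_missing = any(err and "no running task found" in err for _, _, err in events)
        if task_missing and "get-properties.got-properties" in names:
            orphans.append(handle)
    return orphans


if __name__ == "__main__":
    timeline, restarts = analyze(LOG_LINES)
    print("possible worker restarts at:", restarts)
    print("containers whose task is gone:", orphaned_handles(timeline))
```

On these lines the only counter drop is at the first `attach.failed` entry, which is consistent with (but does not prove) the task being lost across a worker restart while the container record survived.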

@holgerstolzenberg thx for the updates. I appreciate the time you spent on it. So far there is no good way to reproduce this issue, and in our own CI we haven't noticed such an error. We'd like to see if more similar cases are reported, and we're hoping for the next containerd update.

We tried to run the Concourse Helm Chart within OKD. Here we are forced to use the We migrated a test pipeline and see the exact same problem on the vanilla cluster in OKD. This time this is a real show stopper, as we cannot switch to


Also seeing the following for resource checks and task executions: It seems to only affect deployments with lots of activity and becomes worse under high load. Deployed versions:


This issue still exists on Concourse v7.8.1 and containerd v1.6.6. Is there any ETA for when we could expect a fix for this issue? Thanks.

@xtremerui I would suggest looking into the abandoned PR #7042, as it explains the issues many of us have.

@nekrondev thx for the info. That's helpful. However, I still don't understand why things become worse after upgrading from 7.6. In #7042, the error shows up after a worker restart because a task has gone while the container is persisted (there we know exactly why the task was gone). Combining the comments above (and also containerd/containerd#2202), a possible case is that containerd needs more resources (or worker memory consumption becomes higher) to run a task (a namespaced process) in Concourse > v7.6, while containerd itself is sensitive to memory-heavy tasks, which might get reaped without the ATC's awareness. Note that #7042 attempts either to better GC those persisted containers if the task is dead, or to recreate the task from the persisted container. But neither approach solves the problem, i.e. preventing the task from being reaped. ATM I can only suggest increasing the worker memory and seeing if it helps the situation. Also, after going through the diffs between 7.6 and 7.7, I think #8048 might play a role in this issue: it might spawn many check containers for a resource type, which could overload the worker. So please keep an eye on the resource type a resource is using when you see the error happen in its check. We'd like to know if the check errors happen to resources that share the same resource type (or one of a few very commonly used resource types).

Hit this same issue while using the containerd runtime. Set up a secondary worker and restarted the primary/web nodes to get it working again. From what I saw, if you only have one worker and it gets into this state, it will never recover.

Facing the same error on Concourse 7.5.0 deployed to a GKE K8s cluster using Helm. We run 5 worker nodes.

At the same time, the following log entries can be observed in one of the concourse-worker pods:

Got this issue with v7.8.3 after my laptop blue-screened and rebooted. I'm running with docker-compose locally: a web+worker container and a postgres container.

Seeing this issue in v7.9.0.

Seeing this error as well, on both 7.6.0 and 7.9.0. It happens very quickly on relatively simple pipelines (almost instantly, sometimes). It is just a resource check for a small git repo. I cannot imagine this is a CPU or memory loading issue. It has happened with the same pipelines running on 2 different machines so far. Single worker, containerd, running from a carbon copy of the quickstart docker-compose. It does occasionally seem to clear itself up, but it might sit there broken for a while. That's not really a remedy.

We're also getting this error at 7.9.0.

Also experiencing this in 7.9.0. @xtremerui, can we have another look at this one? It's very prevalent.

Also seeing this issue in v7.9.1

Also seeing this issue in v7.9.1

We hit this with an in-place worker restart. No Concourse upgrade or downgrade. Concourse v7.10.0. The dashboard was full of orange triangles. We run our workers on over-provisioned bare metal. The environment is usually very stable, and is nowhere near capacity. I only restarted the workers today while trying to debug an unrelated problem. We also use the containerd runtime. (I did not land the workers prior to the restart. No worker state was lost between restarts, so I did not think it would be necessary to land them.) Edit: Yes, this is reliably reproducible with an in-place worker restart. Essentially, it seems one must wait for Concourse to GC out the errant containers

Summary

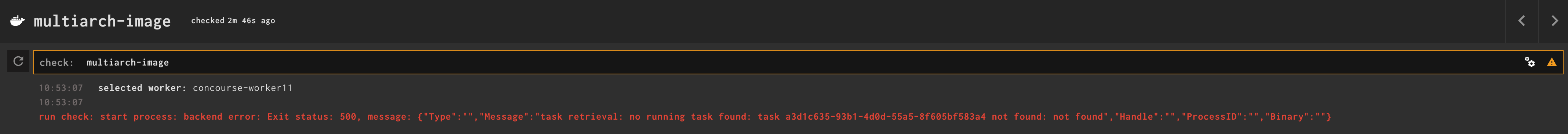

After upgrading to 7.7.0 from 7.6.0, we started facing many errors in resources like the following:

```
run check: start process: backend error: Exit status: 500, message: {"Type":"","Message":"task retrieval: no running task found: task d7139440-721d-4258-79bb-25ad9760bab9 not found: not found","Handle":"","ProcessID":"","Binary":""}
```

We are using the containerd runtime and running Concourse on Kubernetes. After reverting to 7.6.0, all errors disappeared.
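When correlating this error with worker logs, it helps to pull the task ID out of the message; in the worker logs quoted in the comments, the ID in `no running task found: task <id>` is the same string as the container handle. The sketch below is a hypothetical helper that assumes the `Exit status: N, message: {JSON}` layout seen in this single sample; that layout is inferred, not a documented format.

```python
import json
import re

# The error string from this report, split only for line length.
ERROR = (
    'run check: start process: backend error: Exit status: 500, message: '
    '{"Type":"","Message":"task retrieval: no running task found: task '
    'd7139440-721d-4258-79bb-25ad9760bab9 not found: not found",'
    '"Handle":"","ProcessID":"","Binary":""}'
)

# Assumed layout: "... Exit status: <code>, message: <JSON object>" at the end of the line.
STATUS_RE = re.compile(r"Exit status: (\d+), message: (\{.*\})$")
# A 36-character UUID-style task ID, as in the sample.
TASK_RE = re.compile(r"task ([0-9a-f-]{36}) not found")


def parse_backend_error(text):
    """Return status, message and task ID from a backend error, or None if it doesn't match."""
    match = STATUS_RE.search(text)
    if match is None:
        return None
    payload = json.loads(match.group(2))
    message = payload.get("Message", "")
    task = TASK_RE.search(message)
    return {
        "status": int(match.group(1)),
        "message": message,
        "task_id": task.group(1) if task else None,
        "task_missing": "no running task found" in message,
    }


if __name__ == "__main__":
    print(parse_backend_error(ERROR))
```

The extracted `task_id` can then be searched for in the worker logs to see whether the container was created before a worker restart.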

Steps to reproduce

Upgrade 7.6.0 to 7.7.0

Expected results

No errors :)

Actual results

See above.

Triaging info
