clientv3 sdk will send /etcdserverpb.Lease/LeaseKeepAlive twice every second for lease id after over time. #9911

Comments

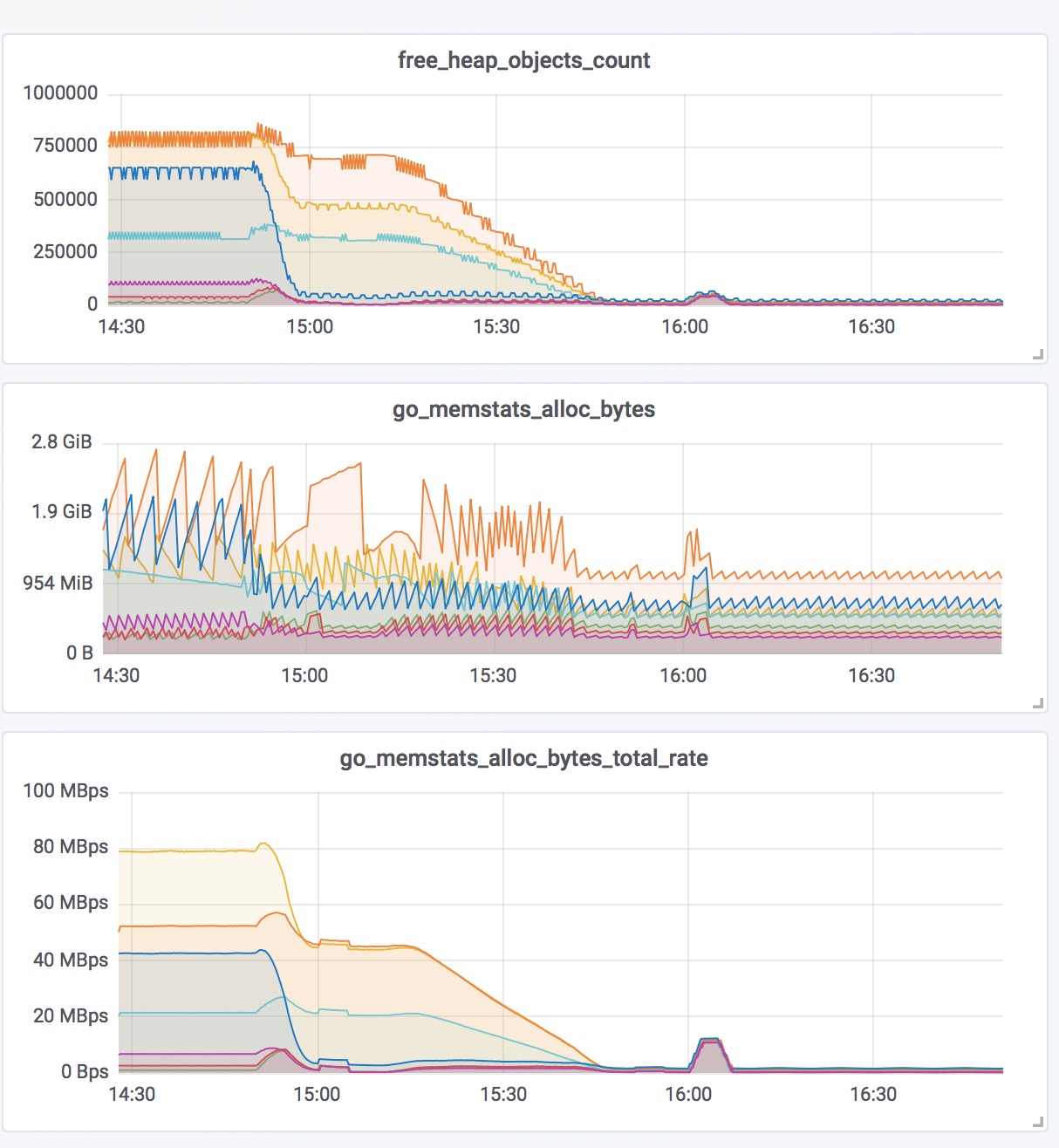

In this case (server memory under heavy load due to too many connections):

So, it happened... Hi @gyuho @xiang90, is that right? I deploy a new version without If that is.

@cfc4n Could you also try the latest 3.3?

OK, I'll try it today.

Hi @gyuho, I tested it on v3.3.8, and in my test case the TTL was always 29 after 160s (

@cfc4n OK, will take a look. I'm currently busy fixing some other issues in the client. Thanks!

Waiting for your good news...

Can you send a PR to fix it? Please also add an integration test. Thanks!

@gyuho This is a bug. We need to backport the fix.

@cfc4n I can reproduce. Let me fix soon.

@cfc4n We have released https://github.com/coreos/etcd/blob/master/CHANGELOG-3.3.md#v339-2018-07-24 with the fix. Please try.

@gyuho It is fixed. Thanks.

Version:

All v3 clients

Result:

reproduce:

code:

run result

`nextKeepAlive := time.Now().Add((time.Duration(karesp.TTL) * time.Second) / 3.0)`

cause:

`leaseResponseChSize = 16`, at clientv3/lease.go line 222: `ch := make(chan *LeaseKeepAliveResponse, leaseResponseChSize)`. Once that buffered channel is full, `ka.nextKeepAlive` is not assigned. Its value is still the last value, which by then is a time in the past, so it matches `if ka.nextKeepAlive.Before(now)` and the lease ID is added to the `tosend` slice on every pass.

fix:

`nextKeepAlive` should always be assigned to `ka.nextKeepAlive`.