Library doesn't see GPU #1212

Comments

|

Hi, @ostreech1997 |

|

Hi, @IgnatovFedor |

|

@ostreech1997, do you have nvidia-docker installed? You can use the GPU in a container only if you run it with the NVIDIA runtime and the image contains CUDA and cuDNN. To check whether nvidia-docker is installed correctly, use … After building the image with … |
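A quick way to see whether a process inside the container can reach the GPU at all is to ask `nvidia-smi`. The following is a minimal Python sketch, not necessarily the check the maintainer had in mind; the helper name `list_gpus` is made up for illustration, and it assumes that `nvidia-smi` is on the `PATH` whenever the NVIDIA runtime is active.

```python
import shutil
import subprocess


def list_gpus():
    """Return the GPU names reported by nvidia-smi, or [] if none are reachable."""
    # Without the NVIDIA runtime the binary is usually absent from the container.
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    # A non-zero exit code typically means the driver is not visible.
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


print(list_gpus())
```

An empty list suggests the container was started without GPU access, so the problem lies in the Docker setup rather than in DeepPavlov.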

|

@IgnatovFedor thanks a lot! I built the image using your Dockerfile, and now I can use the GPU to train models. But it seems that the training process uses only one video card. Is it possible to use all video cards for training? |

|

@ostreech1997, you're welcome. Unfortunately, DeepPavlov does not currently support using more than one GPU. |

|

Okay, I got it. |

|

Hi, I have a new problem with the GPU. I want to train several models one after another, but after the first training run, the second model trains on the CPU. |

|

@ostreech1997, could you show the code you used to train several models one after another? |

|

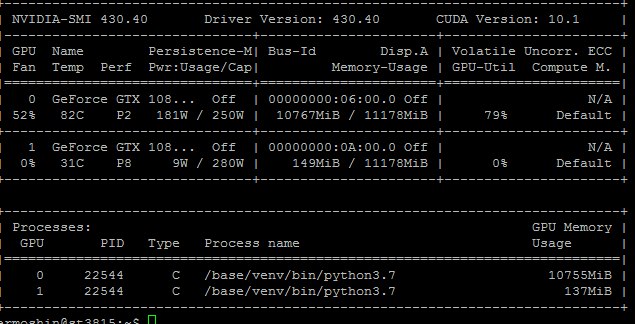

@IgnatovFedor Now I am testing model training in Jupyter. Example for the intent classifier:

    import json
    from deeppavlov import configs, train_model

    with configs.classifiers.rusentiment_bert.open(encoding='utf8') as f:
        config_classifier = json.load(f)  # assumed: the load step is missing from the snippet as posted
    config_classifier['metadata']['variables']['MODEL_PATH'] = '/base/.deeppavlov/models/classification_task/classification_intent/'
    model_clf = train_model(config_classifier, download=False)

When training finished, I checked nvidia-smi and saw this: |

|

I found that restarting the Jupyter kernel fixes this. Maybe there is no problem if I train models from a .py file. |

|

Bad news: when I train models from a .py file, I have the same problem. For some reason, the GPU stays loaded... |
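Since restarting the kernel frees the GPU, one possible workaround is to give every training run its own Python process, so that the memory is released when that process exits. The sketch below only illustrates the idea and is not a DeepPavlov feature; `run_in_fresh_process` and the script names in the comments are hypothetical.

```python
import subprocess
import sys


def run_in_fresh_process(*python_args):
    """Run the current Python interpreter with the given arguments in a new
    process and return its exit code. Resources held by that process,
    GPU memory included, are released by the OS when it exits."""
    completed = subprocess.run([sys.executable, *python_args])
    return completed.returncode


# Harmless stand-in for a real training script.
exit_code = run_in_fresh_process("-c", "print('a training run would go here')")
print(exit_code)

# With real (hypothetical) scripts, each model would be trained in turn:
#   for script in ["train_intent.py", "train_ner.py"]:
#       if run_in_fresh_process(script) != 0:
#           break
```

Each script would load its own config and call `train_model` as in the snippet earlier in the thread, so no state is shared between runs.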

|

I think this problem belongs in a separate issue. Thanks a lot for your help @IgnatovFedor, now I can use the GPU for training. |

Hi everyone, thanks for your library!

I use several BERT models, but I can't train them on the GPU. Here is the whole process:

But when I train a model, it uses the CPU.

I tried to check access to the GPU with tf.test.is_gpu_available(). It returns False.
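For reference, a check along these lines can be written so that it works across TensorFlow versions. This is a minimal sketch: the helper name `gpu_visible_to_tensorflow` is made up for illustration, and it returns `None` when TensorFlow is not installed in the current environment.

```python
def gpu_visible_to_tensorflow():
    """Return True or False depending on whether TensorFlow sees a GPU,
    or None if TensorFlow cannot be imported."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    # Newer releases expose the device list under tf.config.
    config = getattr(tf, "config", None)
    list_devices = getattr(config, "list_physical_devices", None)
    if list_devices is not None:
        return len(list_devices("GPU")) > 0
    # Older releases: fall back to the call used in this post.
    return bool(tf.test.is_gpu_available())


print(gpu_visible_to_tensorflow())
```

A `False` result inside a container usually points at the runtime or the image (missing CUDA or cuDNN) rather than at the training code.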

Maybe there is a mistake in this sequence of actions?
