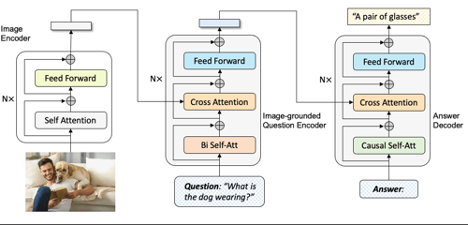

This implementation applies the BLIP pre-trained model to solve the icon domain task.

|

|

|---|---|

| How many dots are there? | 36 |

**Note: The test dataset does not have labels. I evaluated the model via Kaggle competition and got 96% in accuracy manner. Obviously, you can use a partition of the training set as a testing set.

Copy all data following the example form You can download data here

pip install -r requirements.txt

python finetuning.py

python predicting.py

Nguyen Van Tuan (2023). JAIST_Advanced Machine Learning_Visual_Question_Answering