
Higher CPU usage since update from 5.0.13 to 6.0.1+ #66827

Comments

|

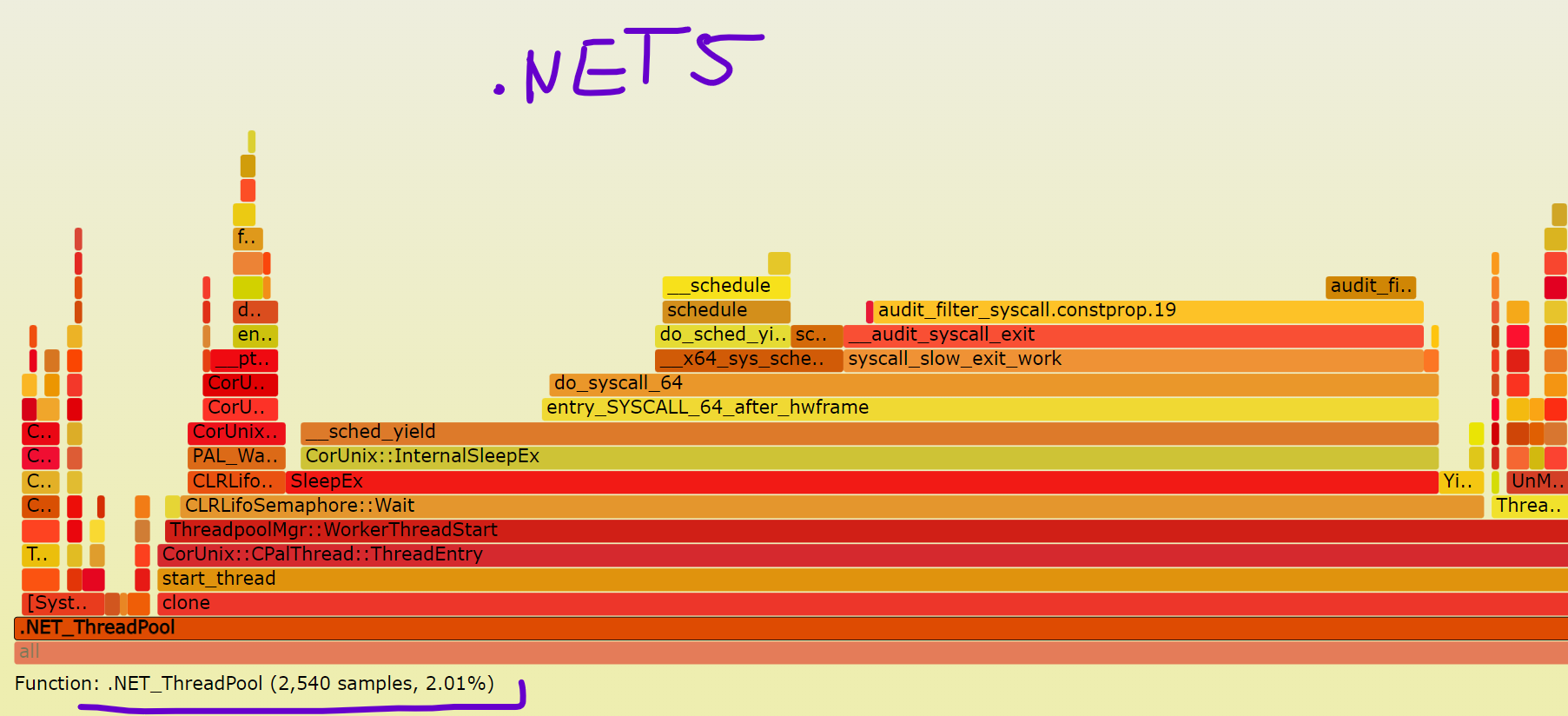

Tagging subscribers to this area: @mangod9


cc: @kouvel as the comment above says:


There's a config setting to reduce/disable the spin-waiting in

A tradeoff of applying the above config would be that if the service gets frequent, moderately sized bursts of work, the perf may end up being lower. If you expect the service to be either heavily loaded or barely loaded, and want to limit CPU usage in those cases, the above config may be appropriate. There may also be other tradeoffs; I'd suggest checking the perf to see how it works out. There has not been any difference in the actual spin-waiting heuristics between .NET 5 and 6, but there may be some natural timing changes that end up contributing to the difference. I can't attempt a guess at what that difference might be.
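To illustrate the tradeoff described above, here is a minimal Python sketch of a spin-then-block wait, under a simplified model: a waiter polls for a signal up to spin_limit times, yielding the CPU between polls, and only then blocks. The class SpinThenBlockSemaphore and its spin_limit parameter are hypothetical; this is not the .NET ThreadPool's semaphore or the actual config setting. With spin_limit=0 an idle waiter goes straight to blocking; a larger value lets short bursts of work be picked up without a sleep/wake round trip, at the cost of burning CPU whenever no work arrives.

```python
import os
import threading
import time
from typing import Optional

# Use the sched_yield syscall where available (Unix); otherwise fall back to sleep(0).
_yield_cpu = getattr(os, "sched_yield", None) or (lambda: time.sleep(0))


class SpinThenBlockSemaphore:
    """Hypothetical semaphore that spin-waits before blocking (illustration only)."""

    def __init__(self, spin_limit: int) -> None:
        self.spin_limit = spin_limit  # hypothetical knob: 0 disables spinning
        self.yields = 0               # unsynchronized counter of spin iterations, for illustration
        self._count = 0
        self._cond = threading.Condition()

    def release(self) -> None:
        with self._cond:
            self._count += 1
            self._cond.notify()

    def _try_acquire(self) -> bool:
        with self._cond:
            if self._count > 0:
                self._count -= 1
                return True
            return False

    def acquire(self, timeout: Optional[float] = None) -> bool:
        # Phase 1: poll for a signal, yielding the CPU between polls.
        # This is the part that costs CPU on an otherwise idle machine.
        for _ in range(self.spin_limit):
            if self._try_acquire():
                return True
            self.yields += 1
            _yield_cpu()
        # Phase 2: block on the condition variable; no CPU is used while waiting.
        with self._cond:
            if not self._cond.wait_for(lambda: self._count > 0, timeout):
                return False
            self._count -= 1
            return True
```

For example, SpinThenBlockSemaphore(spin_limit=1000).acquire(timeout=0.05) on a semaphore that is never released yields 1000 times before it falls back to blocking, which is the kind of idle CPU cost the config trades against burst latency.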


@kouvel Thank you. I will try to experiment with different settings and see if it gets better.


The value specified for the config variable above is interpreted as hexadecimal.
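Because the value is read as hexadecimal, a decimal-looking setting means something larger than it appears. A tiny Python sketch of the conversion, assuming only that the whole value is parsed as a base-16 integer; the helper names are hypothetical, and the config variable itself is the one referenced earlier in the thread.

```python
def config_value_from_hex(raw: str) -> int:
    """Interpret a config string the way a hex-parsed setting would be read."""
    return int(raw.strip(), 16)


def hex_config_for(decimal_value: int) -> str:
    """Return the string to set so the setting ends up equal to decimal_value."""
    if decimal_value < 0:
        raise ValueError("config values are assumed to be non-negative")
    return format(decimal_value, "X")
```

For example, setting the variable to 100 gives a value of 256, and to get a decimal value of 70 you would set 46.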

Description

We manage a number of B1ls instances on Azure, and ever since we updated our application to .NET 6.0.1 (still happening on 6.0.3) they have been running out of CPU credits. Even completely idle instances slowly consume the CPU credit pool; on B1ls this starts when CPU usage exceeds the 5% baseline.

Regression?

Before the update to 6.0.1 our application was running on .NET 5.0.13 without this issue.

Data

Azure instance type in question:

B1ls: 1 vCPU, 0.5 GB RAM, 5% CPU usage baseline (https://azure.microsoft.com/en-us/updates/b-series-update-b1ls-is-now-available/)
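The slow credit drain can be estimated with a simplified model of burstable-VM accounting. This model is an assumption, not something stated in this issue: one credit equals one full vCPU for one minute, credits accrue at the baseline rate, and they are spent at the actual usage rate. The usage percentages and the 72-credit bank in the example below are hypothetical figures.

```python
import math


def net_credits_per_hour(usage_pct: float, baseline_pct: float = 5.0, vcpus: int = 1) -> float:
    """Credits gained (positive) or lost (negative) per hour at a steady CPU usage.

    Assumed model: 1 credit = 1 vCPU fully busy for 1 minute.
    """
    return (baseline_pct - usage_pct) * 60 * vcpus / 100


def hours_until_empty(bank: float, usage_pct: float, baseline_pct: float = 5.0, vcpus: int = 1) -> float:
    """Hours until a credit bank is exhausted; infinity if usage is at or below baseline."""
    net = net_credits_per_hour(usage_pct, baseline_pct, vcpus)
    if net >= 0:
        return math.inf
    return bank / -net
```

Under these assumptions, an idle instance held at a hypothetical steady 6% (1 point above the 5% baseline) loses 0.6 credits per hour, so a 72-credit bank would last about 120 hours. That is a slow decline rather than a sudden drop, consistent with the gradual trend described below.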

Here's a screenshot from our monitoring showing the CPU credits slowly going down on those idle servers:

Analysis

We tried to narrow down the issue by using our tooling that grabs stack traces, which showed LowLevelLIFOSemaphore generating the CPU load:

Moving further, we used perf to check what has been using the CPU. We tried to find differences between versions 5.3.2 (running on .NET 5.0.13) and 5.3.101 (running on .NET 6.0.1) of our application. Attached are the interactive flamegraphs made on those versions.

The application running on .NET 6.0 takes approximately 1% more CPU than on .NET 5.0, which makes a big difference for B1ls burstable machines running 1 vCPU. The difference seems to lie in the .NET ThreadPool threads and their use of sched_yield syscalls.

flamegraphs.zip
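To see why yield-based spinning shows up as CPU usage on an otherwise idle 1-vCPU machine, here is a small Python sketch comparing the CPU time a thread consumes while spinning on sched_yield against blocking for the same wall-clock time. The helper names are hypothetical, and this only illustrates the general cost of yield-spinning; it does not reproduce what the .NET ThreadPool does. When no other thread is ready to run, sched_yield returns right away, so a spinning thread keeps the CPU busy.

```python
import os
import threading
import time

# sched_yield is Unix-only in Python; fall back to sleep(0) elsewhere.
_yield_cpu = getattr(os, "sched_yield", None) or (lambda: time.sleep(0))


def cpu_seconds_while_spinning(duration: float) -> float:
    """CPU time this thread uses while yield-spinning for `duration` wall seconds."""
    start = time.thread_time()
    deadline = time.monotonic() + duration
    while time.monotonic() < deadline:
        _yield_cpu()  # gives up the CPU only if another thread is ready to run
    return time.thread_time() - start


def cpu_seconds_while_blocking(duration: float) -> float:
    """CPU time this thread uses while blocked on an event for `duration` wall seconds."""
    start = time.thread_time()
    threading.Event().wait(duration)  # never set, so this just times out
    return time.thread_time() - start


if __name__ == "__main__":
    spin = cpu_seconds_while_spinning(0.5)
    block = cpu_seconds_while_blocking(0.5)
    print(f"spinning: {spin:.3f}s CPU, blocking: {block:.3f}s CPU")
```

On a single vCPU, any CPU time consumed this way by idle worker threads counts directly against the 5% baseline.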
