- Generating meta features

  - Statistical features: TsFresh, user-defined features

  - Automated feature extraction using deep unsupervised learning: Deep AutoEncoder (MLP, LSTM, GRU, or a custom model)

- Supporting sktime and darts libraries for base-forecasters

- Providing a Meta-Learning pipeline
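As a rough picture of what a meta-learning pipeline built around model selection does, the idea can be sketched without the library: score each base forecaster on every series, label each series with its best forecaster, then train a meta-learner to predict that label from meta-features. The NumPy sketch below is not MetaTS code. The toy data, the two forecasters, the two meta-features, and the 1-nearest-neighbour meta-learner are all hypothetical stand-ins chosen to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 20 random walks and 20 noisy sinusoids, length 30.
n_series, length, fh = 40, 30, 7
walks = rng.normal(size=(n_series // 2, length)).cumsum(axis=1)
t = np.arange(length)
waves = np.sin(2 * np.pi * t / 7) + 0.1 * rng.normal(size=(n_series // 2, length))
series = np.vstack([walks, waves])

# Hold out the last `fh` points of each series as the forecasting target.
history, future = series[:, :-fh], series[:, -fh:]

def last_value(h, fh):
    """Stand-in forecaster 0: repeat the last observed value."""
    return np.repeat(h[:, -1:], fh, axis=1)

def repeat_last_window(h, fh):
    """Stand-in forecaster 1: repeat the last `fh` observed values."""
    return h[:, -fh:].copy()

# Forecasts of every base forecaster: shape (n_forecasters, n_series, fh).
forecasts = np.stack([last_value(history, fh), repeat_last_window(history, fh)])

# Per-series MSE of each forecaster, and the index of the best one.
mse = ((forecasts - future[None]) ** 2).mean(axis=2)
best = mse.argmin(axis=0)

# Two hand-picked meta-features per series (stand-ins for TsFresh or
# autoencoder features): overall spread and mean absolute step size.
meta_features = np.column_stack([
    history.std(axis=1),
    np.abs(np.diff(history, axis=1)).mean(axis=1),
])

def predict_1nn(train_x, train_y, query_x):
    """Stand-in meta-learner: copy the label of the nearest training series."""
    dists = np.linalg.norm(query_x[:, None, :] - train_x[None, :, :], axis=2)
    return train_y[dists.argmin(axis=1)]

# Select a forecaster per series and return only that forecaster's output.
selected = predict_1nn(meta_features, best, meta_features)
final = forecasts[selected, np.arange(n_series)]
```

In practice the meta-learner would be evaluated on series it has not seen; here it is queried on its own training set only to keep the sketch compact.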

pip install metats

from metats.datasets import ETSDataset

ets_generator = ETSDataset({'A,N,N': 512,

'M,M,M': 512}, length=30, freq=4)

data, labels = ets_generator.load(return_family=True)
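The dictionary keys above are ETS family labels in the usual (error, trend, seasonal) order, where `A` is additive, `M` is multiplicative, and `N` is none. To give a feel for what an `A,N,N` series looks like, here is a minimal simulation of that model (simple exponential smoothing with additive errors). It is only an illustration: the function name and the parameter values are assumptions, and the way MetaTS samples its parameters may well differ.

```python
import numpy as np

def simulate_ann(length, alpha=0.3, level0=10.0, sigma=1.0, seed=0):
    """Simulate one ETS(A,N,N) series: additive error, no trend, no seasonality."""
    rng = np.random.default_rng(seed)
    level = level0
    y = np.empty(length)
    for t in range(length):
        error = rng.normal(scale=sigma)
        y[t] = level + error            # observation = previous level + error
        level = level + alpha * error   # the level absorbs a fraction of the error
    return y

series = simulate_ann(length=30)
```

With `sigma=0.0` the series stays flat at `level0`, which is a quick way to sanity-check the recursion.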

colors = list(map(lambda x: (x=='A,N,N')*1, labels))

from sklearn.preprocessing import StandardScaler

scaled_data = StandardScaler().fit_transform(data.T)
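`StandardScaler` standardizes columns, so fitting it on `data.T` scales each series by its own mean and standard deviation rather than scaling each time step across series. The same operation can be written directly in NumPy, shown here on a small hypothetical array with one series per row. The last line mirrors the `[:, :, None]` indexing used in this walkthrough, which adds a trailing axis so the array has shape `(n_series, length, 1)`.

```python
import numpy as np

# Hypothetical toy data: 3 series of length 5, one series per row.
toy = np.array([
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [10.0, 10.0, 20.0, 20.0, 15.0],
    [0.0, -1.0, 0.0, 1.0, 0.0],
])

# Per-series mean and population standard deviation (ddof=0),
# which is the convention StandardScaler uses.
mean = toy.mean(axis=1, keepdims=True)
std = toy.std(axis=1, keepdims=True)
scaled = (toy - mean) / std

# Add a trailing axis of size 1: (n_series, length) -> (n_series, length, 1).
toy_3d = scaled[:, :, None]
```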

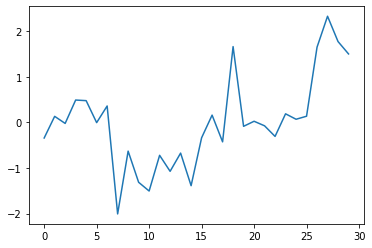

data = scaled_data.T[:, :, None]

import matplotlib.pyplot as plt

_ = plt.plot(data[10, :, 0])

from metats.features.statistical import TsFresh

stat_features = TsFresh().transform(data)

from metats.features.unsupervised import DeepAutoEncoder

from metats.features.deep import AutoEncoder, MLPEncoder, MLPDecoder

enc = MLPEncoder(input_size=1, input_length=30, latent_size=8, hidden_layers=(16,))

dec = MLPDecoder(input_size=1, input_length=30, latent_size=8, hidden_layers=(16,))

ae = AutoEncoder(encoder=enc, decoder=dec)

ae_feature = DeepAutoEncoder(auto_encoder=ae, epochs=150, verbose=True)

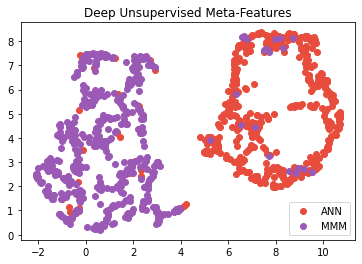

ae_feature.fit(data)

deep_features = ae_feature.transform(data)

Dimensionality reduction using UMAP for visualization:

from umap import UMAP

deep_reduced = UMAP().fit_transform(deep_features)

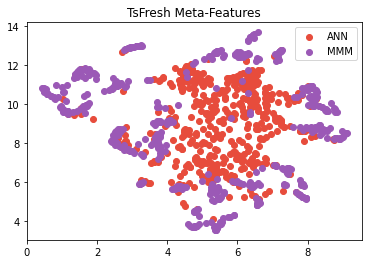

stat_reduced = UMAP().fit_transform(stat_features)

Visualizing the statistical features:

plt.scatter(stat_reduced[:512, 0], stat_reduced[:512, 1], c='#e74c3c', label='ANN')

plt.scatter(stat_reduced[512:, 0], stat_reduced[512:, 1], c='#9b59b6', label='MMM')

plt.legend()

plt.title('TsFresh Meta-Features')

_ = plt.show()

And similarly, the autoencoder's features:

plt.scatter(deep_reduced[:512, 0], deep_reduced[:512, 1], c='#e74c3c', label='ANN')

plt.scatter(deep_reduced[512:, 0], deep_reduced[512:, 1], c='#9b59b6', label='MMM')

plt.legend()

plt.title('Deep Unsupervised Meta-Features')

_ = plt.show()

Creating a meta-learning pipeline with a selection strategy:

from metats.pipeline import MetaLearning

pipeline = MetaLearning(method='selection', loss='mse')

Adding AutoEncoder features:

from metats.features.unsupervised import DeepAutoEncoder

from metats.features.deep import AutoEncoder, MLPEncoder, MLPDecoder

enc = MLPEncoder(input_size=1, input_length=23, latent_size=8, hidden_layers=(16,))

dec = MLPDecoder(input_size=1, input_length=23, latent_size=8, hidden_layers=(16,))

ae = AutoEncoder(encoder=enc, decoder=dec)

ae_features = DeepAutoEncoder(auto_encoder=ae, epochs=200, verbose=True)

pipeline.add_feature(ae_features)

You can add as many features as you like:

from metats.features.statistical import TsFresh

stat_features = TsFresh()

pipeline.add_feature(stat_features)

Adding two sktime forecasters as base forecasters:

from sktime.forecasting.naive import NaiveForecaster

from sktime.forecasting.compose import make_reduction

from sklearn.neighbors import KNeighborsRegressor

regressor = KNeighborsRegressor(n_neighbors=1)

forecaster1 = make_reduction(regressor, window_length=15, strategy="recursive")

forecaster2 = NaiveForecaster()

pipeline.add_forecaster(forecaster1)

pipeline.add_forecaster(forecaster2)

Specifying a meta-learner:

from sklearn.ensemble import RandomForestClassifier

pipeline.add_metalearner(RandomForestClassifier())

Training the pipeline:

pipeline.fit(data, fh=7)

Prediction for another set of data:

pipeline.predict(data, fh=7)

- Sasan Barak

- Amirabbas Asadi

We would love to see your name in the list of contributors, so pull requests are welcome!

Below is a list of projects using MetaTS. If you have used MetaTS in a project, you're welcome to make a PR to add it to this list.