Server becomes unresponsive after sitting idle from a load spike #3550

Comments

|

When it's unresponsive, please take a JVM thread dump and a Jetty server dump. Using TLS? What OS/JDK? |
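For reference, a JVM thread dump is usually taken with `jstack <pid>` (or `kill -3 <pid>` on Unix-like systems). The same information can also be captured from inside the process. The following is a minimal sketch using the standard `java.lang.management` API; the class name `ThreadDumpSketch` is hypothetical, and it does not produce the Jetty server dump, which is a separate Jetty facility.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper, not part of Jetty: captures a thread dump in-process.
final class ThreadDumpSketch {

    /** Returns the name, state and stack trace of every live thread as text. */
    static String dumpAllThreads() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        StringBuilder out = new StringBuilder();
        // Only request lock details if this JVM supports reporting them.
        ThreadInfo[] infos = threads.dumpAllThreads(
                threads.isObjectMonitorUsageSupported(),
                threads.isSynchronizerUsageSupported());
        for (ThreadInfo info : infos) {
            out.append('"').append(info.getThreadName()).append("\" ")
               .append(info.getThreadState()).append('\n');
            for (StackTraceElement frame : info.getStackTrace()) {
                out.append("    at ").append(frame).append('\n');
            }
            out.append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(dumpAllThreads());
    }
}
```

When the server is stuck, the interesting part of such a dump is what the pool threads are doing: whether any exist at all, and whether they are parked waiting for work or blocked elsewhere.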

No TLS. Thanks for the hint about the Jetty server dump, I'll revisit the issue as soon as I can reproduce this. |

|

I strongly recommend that you shy away from any JDK provided by Debian. Please use official versions from Oracle or adoptopenjdk.net, which are known to be A) stable and B) pass the TCK. The latest official JDK 8 release is 8u202. You are using some 8u212 with who knows what code in it and who knows how stable that is. Using standard OpenJDK binaries would allow us to possibly replicate the issue. |

|

@sbordet Thanks for the recommendation & explanation! I stumbled upon AdoptOpenJDK anyway and am planning to migrate to it (from 8 to 11 as well). |

|

I think 8u212 is a release outside of the Oracle Java 8 public support window. |

|

I will migrate to AdoptOpenJDK 11, and try Jetty 9.4.16 (or whatever version is released by then), and see if the issue can be reproduced there. Until then I'll close this issue. Thanks! |

|

Looks like https://adoptopenjdk.net/upstream.html lists 8u212 as Early Access (EA). Is that right? |

|

After upgrading our test system from Jetty 9.4.15 to Jetty 9.4.16 we seem to observe the same issue. |

|

@e-hubert Thank you for reporting this! I'll reopen the issue, so you can add your findings here. We have yet to migrate to AdoptOpenJDK to contribute more to solving this. |

|

We're also seeing this issue, or something like it, on one of our systems, just updated to 9.4.17 (from 9.4.11, I think). In our case we're running illumos (Solaris) with openjdk8u212, but I don't think it's the openjdk that's the problem - we've been running that openjdk for a week with the older jetty without any problem, but jetty 9.4.17 immediately started to fail. |

|

For those having issues, can you please enable the For those running

|

|

I've experienced something similar starting today as well. 9.4.14 -> 9.4.16 upgrade, Java 8, Ubuntu 18.04. I'll try to get a As far as I can tell, the lockup (in my case) is caused when After doing some diving into code changes, I believe it is one of the changes introduced by one of these commits (which is new in 9.4.16): a982422 1097354 5177abb 9cda1ce In particular, in 9.4.14 (and 9.4.15) we only shrink QTP compared to |

Nice work, we'll see if we can replicate on our end!

We are also looking closer at those same commits.

Interesting observation, we'll dig into this with some fervor. |

|

Additional experiments run + results (all on the "broken" instance type - c3.4xlarge)

So my conclusion is that my bug (which is similar to the original poster's and may or may not be the same) is attributable to 9cda1ce and not 1097354. So my theory about maxThreads appears to be wrong. P.S. If my bug proves different from OP's, apologies for thread hijacking. It just seemed so similar that it felt right to include the info here. |

|

One thing I have noticed is that our changes have also broken the QueuedThreadPool dump. So while others are working on a reproduction of the issue, I've created branch jetty-9.4.x-3550-QueuedThreadPool-stalled and I will fix the dump, so we can have a better idea of what is going on. |

…hreads previously if there were no idle threads, QueuedThreadPool.execute() would just queue the job and not start a new thread to run it Signed-off-by: lachan-roberts <lachlan@webtide.com>


|

We believe that we have found the reason for the failure. The QTP, as modified in 9.4.16, allowed the number of idle threads to shrink to 0 if doing so did not violate the minThreads requirement. When executing a new job (or failing an existing thread), we now need to check whether a new thread should be started, as there may not be an idle thread looking for queued tasks. Can you please look at the branch referenced in PR #3586 and test that it fixes your problem? If so, we will get a new release out ASAP! |
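To illustrate the failure mode described above, here is a minimal sketch of a toy pool. It is not Jetty's `QueuedThreadPool` code: the class `ToyPool`, its fields and the helper `runsJobAfterShrinkingToZero()` are all hypothetical, and minThreads is assumed to be 0. It shows the two checks the comment implies: `execute()` starts a thread when none is idle, and an exiting thread re-checks the queue so a job queued during its shutdown is not stranded.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Toy pool for illustration only (NOT Jetty's QueuedThreadPool); minThreads is assumed to be 0. */
final class ToyPool {
    private final BlockingQueue<Runnable> jobs = new LinkedBlockingQueue<>();
    private final AtomicInteger threads = new AtomicInteger(); // threads currently started
    private final AtomicInteger idle = new AtomicInteger();    // threads waiting in poll()
    private final int maxThreads;
    private final long idleTimeoutMs;

    ToyPool(int maxThreads, long idleTimeoutMs) {
        this.maxThreads = maxThreads;
        this.idleTimeoutMs = idleTimeoutMs;
    }

    int threadCount() {
        return threads.get();
    }

    void execute(Runnable job) {
        jobs.add(job);
        // The pool may have shrunk to zero idle threads, so queueing alone is not
        // enough: if nobody is waiting on the queue, try to start a thread.
        if (idle.get() == 0) {
            startThread();
        }
    }

    private boolean startThread() {
        while (true) {
            int n = threads.get();
            if (n >= maxThreads) {
                return false; // at capacity; a busy thread will poll the queue later
            }
            if (threads.compareAndSet(n, n + 1)) {
                Thread t = new Thread(this::runWorker, "toy-pool-" + n);
                t.setDaemon(true);
                t.start();
                return true;
            }
        }
    }

    private void runWorker() {
        try {
            while (true) {
                idle.incrementAndGet();
                Runnable job;
                try {
                    job = jobs.poll(idleTimeoutMs, TimeUnit.MILLISECONDS);
                } finally {
                    idle.decrementAndGet();
                }
                if (job == null) {
                    return; // idle timeout expired: shrink the pool
                }
                try {
                    job.run();
                } catch (RuntimeException e) {
                    // Toy behaviour: ignore the failed job and keep the thread alive.
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            threads.decrementAndGet();
            // A job may have been queued while this thread still counted as idle
            // or as started; re-check so that job is not left without a thread.
            if (!jobs.isEmpty()) {
                startThread();
            }
        }
    }

    /** Usage example: run a job, let the pool shrink to zero threads, then run another. */
    static boolean runsJobAfterShrinkingToZero() {
        ToyPool pool = new ToyPool(4, 50);
        try {
            CountDownLatch first = new CountDownLatch(1);
            pool.execute(first::countDown);
            if (!first.await(2, TimeUnit.SECONDS)) return false;
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(2);
            while (pool.threadCount() > 0 && System.nanoTime() < deadline) {
                Thread.sleep(10);
            }
            if (pool.threadCount() != 0) return false;
            CountDownLatch second = new CountDownLatch(1);
            pool.execute(second::countDown);
            return second.await(2, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

If the `idle.get() == 0` check in `execute()` is removed, the second job in the usage example is queued but never run, which resembles the "unresponsive after sitting idle" symptom reported here. The sketch ignores throughput concerns such as starting more than one thread for a burst of jobs.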

Signed-off-by: Greg Wilkins <gregw@webtide.com>

I have modified the JMH benchmark to look at latency (by measuring throughput) for executing a job with low, medium and high concurrency. It looks like the JRE's thread pool is not so bad in some load ranges, but once things get busy we are still a bit better. No significant difference is seen between the previous QTP impl and the one in this PR.

```
Benchmark                     (size)  (type)   Mode  Cnt       Score        Error  Units
ThreadPoolBenchmark.testFew      200     ETP  thrpt    3  129113.271 ±  10821.235  ops/s
ThreadPoolBenchmark.testFew      200     QTP  thrpt    3  122970.794 ±   8702.327  ops/s
ThreadPoolBenchmark.testFew      200    QTP+  thrpt    3  121408.662 ±  12420.318  ops/s
ThreadPoolBenchmark.testSome     200     ETP  thrpt    3  277400.574 ±  34433.710  ops/s
ThreadPoolBenchmark.testSome     200     QTP  thrpt    3  244056.673 ±  60118.319  ops/s
ThreadPoolBenchmark.testSome     200    QTP+  thrpt    3  240686.913 ±  43104.941  ops/s
ThreadPoolBenchmark.testMany     200     ETP  thrpt    3  679336.308 ± 157389.044  ops/s
ThreadPoolBenchmark.testMany     200     QTP  thrpt    3  704502.083 ±  15624.325  ops/s
ThreadPoolBenchmark.testMany     200    QTP+  thrpt    3  708220.737 ±   3254.264  ops/s
```

Signed-off-by: Greg Wilkins <gregw@webtide.com>

Improved code formatting. Removed unnecessary code in doStop(). Now explicitly checking whether idleTimeout > 0 before shrinking. Signed-off-by: Simone Bordet <simone.bordet@gmail.com>

|

A problem has been found in the 3550 cleanup, where a race in idle thread vs job queue size results in a thread not being started when one is needed. |

|

I believe so. |

The version we are on could cause the server to become unresponsive after sitting idle from a load spike jetty/jetty.project#3550 https://github.com/eclipse/jetty.project/releases/tag/jetty-9.4.18.v20190429


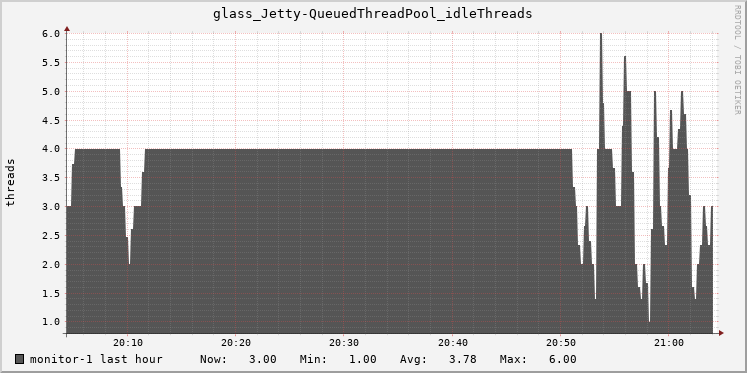

I tested Jetty 9.4.16 over the weekend under considerable load, although it's currently only available on Maven Central and has not been officially announced.

After a few hours, Jetty became unresponsive and I got 502 errors from my reverse proxy before it. I downgraded to Jetty 9.4.15 again and it seems to be running fine.

I really can't pinpoint what might cause this behavior, as it only happened after a few hours, but repeatedly. I use Glowroot to monitor the application and I found nothing obvious in the thread dump either. I'm out of ideas on how to debug such situations.

Can somebody reproduce this? Any ideas how to further debug this?
