New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Feature/replace avl digest with merging digest #35182

Feature/replace avl digest with merging digest #35182

Conversation

|

Pinging @elastic/es-search-aggs |

|

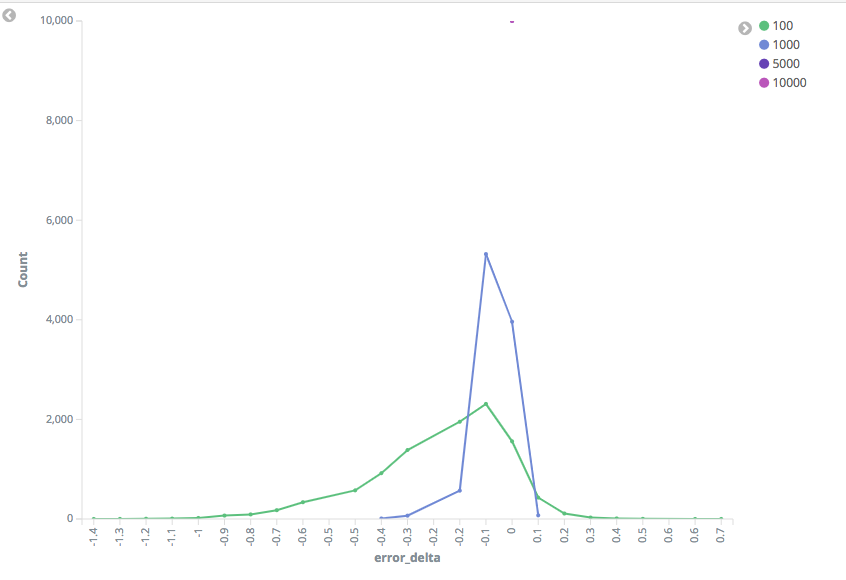

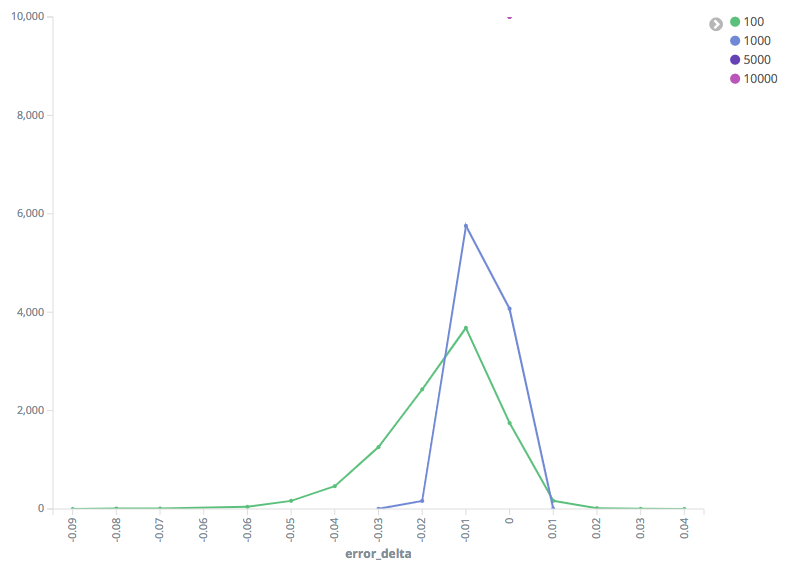

Thanks for running these benchmarks @not-napoleon. Based on the fact that the error introduced in the mixed cluster scenario is pretty low and that we expect mixed clusters to be a short-lived situation I would be happy to proceed with the switchover as is. I'd be interested to hear thoughts from @polyfractal and @jpountz on this though. Also in terms of the "flaky" test. I think we can do one of two things (again I'm interested to see what you, @polyfractal and @jpountz think here):

|

|

@colings86 both options for the test seem reasonable. I was running 10k iterations of the test in less than 120 seconds on my laptop, and a lot of that time is gradle overhead, so 50 shouldn't even be a blip in our test times. |

Gernerally ++ to this. @not-napoleon and I were chatting last night and another point came up: since the error basically goes to zero when compression is cranked up, if a user is unhappy about the potential cross-version error they could temporarily increase I did have a few questions about testing methodology after noodling over it a bit. I'm not sure if any of these are important, they are more half-baked thoughts than anything:

I'm leaning towards option 1), or just cranking the tolerance up a bit and revisiting if it still flakes out on CI. Since this is a short-lived problem/test that will go away after everyone upgrades, it probably doesn't make a ton of sense to invest lots of time trying to keep the test stable. |

|

I'm inclined to not keep the tests around; I don't think they prove anything individually that we need for the long term. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

👍

|

Hi @not-napoleon, I've created a changelog YAML for you. |

|

Pinging @elastic/es-analytics-geo (Team:Analytics) |

|

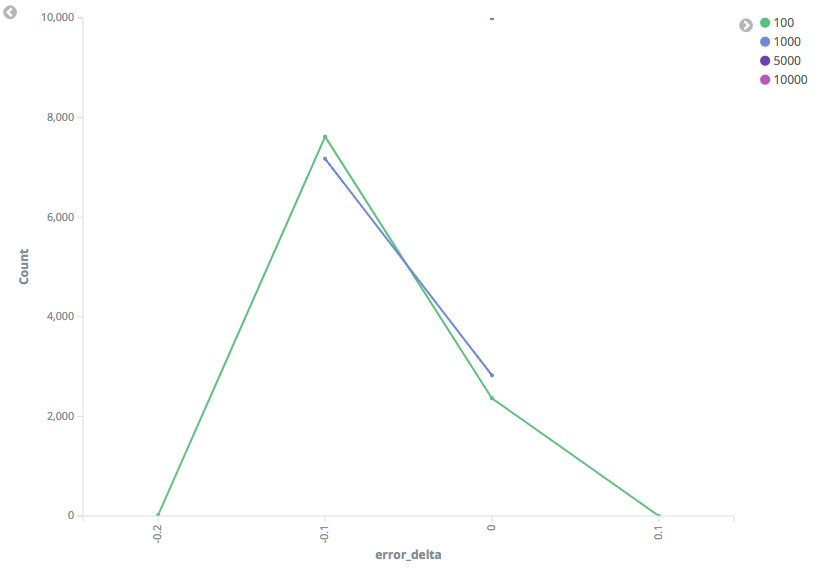

Looks like continuing to adopt merging digest is worth our while from a performance perspective: The two hour and 24 hour variant of this search showed a similar relative improvement. This based on running this search from a new tsdb rally track: The baseline is main branch and contender this PR. |

|

This is no longer relevant |

Relates to #28702

@colings86 had started this conversion in the above referenced PR. This builds on his work with unit tests for deserializing between the two t-digest implementations.

This is a work in progress because the testing methodology is ready for feedback, but specific test parameters still need to be tuned.