This repository provides a Terraform module to set up the necessary Kubernetes resources for an ExaDeploy system in an AWS EKS cluster. This includes deploying:

- Kubernetes secrets for the ExaDeploy system. This includes the Exafunction API key and optionally S3 access credentials and RDS credentials needed for the persistent module repository backend.

- ExaDeploy Helm chart responsible for bringing up ExaDeploy Kubernetes resources including the scheduler and module repository. See more details about the Helm chart here.

- Prometheus Helm chart to create a Prometheus instance for ExaDeploy monitoring and a Grafana instance for visualizing the metrics. See more details about the Helm chart here.

- NVIDIA Device Plugin Helm chart to enable GPU scheduling on the cluster. See more details about the Helm chart here.

- EKS Cluster Autoscaler used to autoscale nodes in the cluster.

Because this module uses the Kubernetes Terraform provider to create Kubernetes resources, it should not be used in the same Terraform root module that creates the EKS cluster these resources are deployed in. In simpler terms, EKS cluster creation and deployment of Kubernetes resources in that cluster should be managed with separate terraform apply operations. See this section of the official Kubernetes provider docs or this article describing the issue for more information.
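As a minimal sketch of what the second root module (the one that only deploys Kubernetes resources) might look like, the Kubernetes and Helm providers can be configured from an already existing cluster through AWS data sources. The `cluster_name` variable here is an illustrative assumption, and the nested `kubernetes { ... }` block follows the Helm provider 2.x syntax; other provider versions may expect a different form.

```hcl
# Hypothetical root module that only deploys Kubernetes resources.
# The EKS cluster itself is created by a separate root module and a
# separate `terraform apply`.

variable "cluster_name" {
  description = "Name of the existing EKS cluster (illustrative variable)."
  type        = string
}

# Look up connection details of the existing cluster.
data "aws_eks_cluster" "cluster" {
  name = var.cluster_name
}

# Fetch a short-lived authentication token for the cluster.
data "aws_eks_cluster_auth" "cluster" {
  name = var.cluster_name
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.cluster.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.cluster.token
}

# Helm provider 2.x style configuration (assumption about provider version).
provider "helm" {
  kubernetes {
    host                   = data.aws_eks_cluster.cluster.endpoint
    cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority[0].data)
    token                  = data.aws_eks_cluster_auth.cluster.token
  }
}
```

Because the data sources read a cluster that already exists, the providers are fully configured at plan time, which is the situation the separate-apply recommendation is meant to guarantee.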

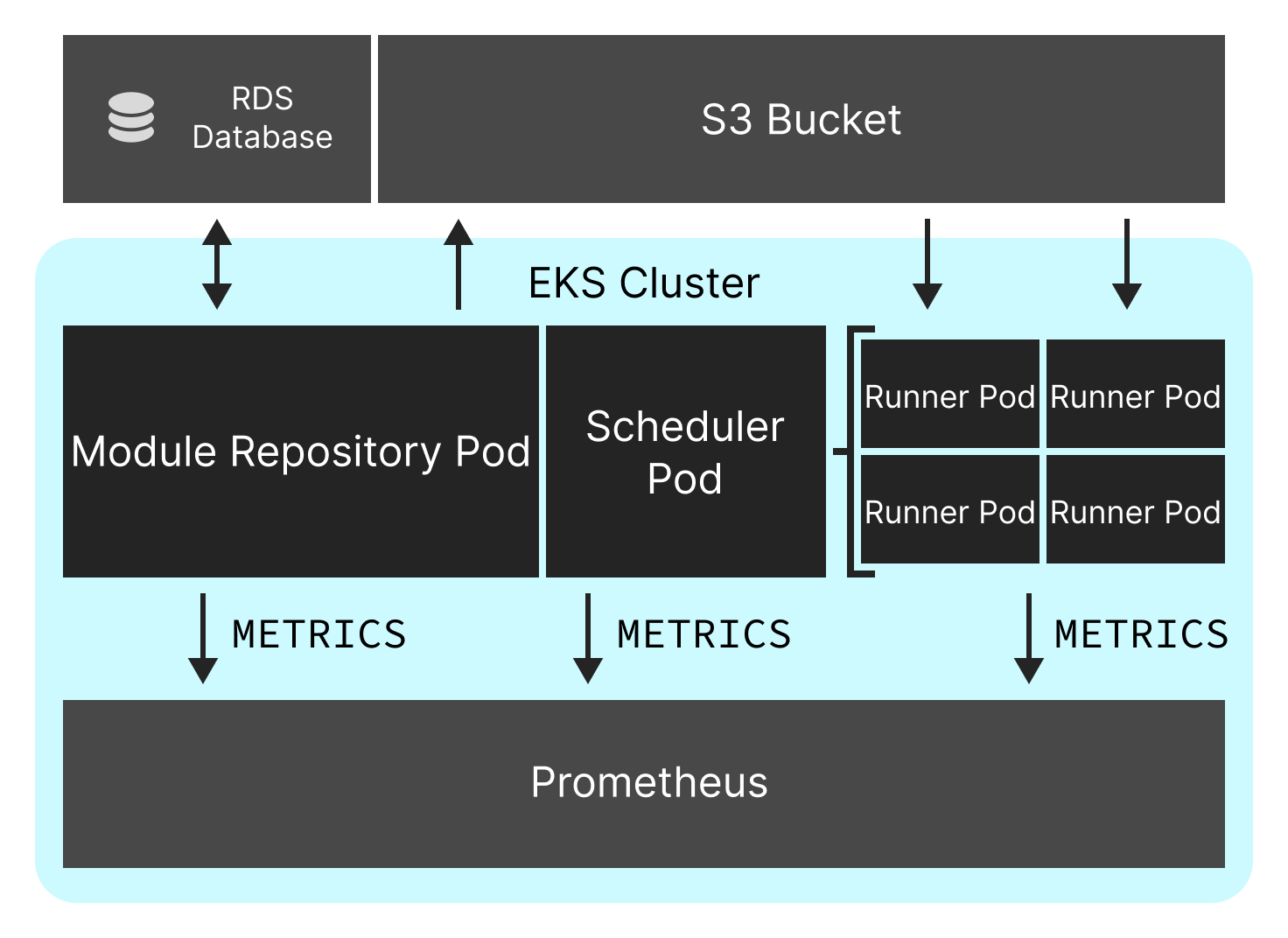

This diagram shows a typical setup of ExaDeploy in EKS (and adjacent AWS resources). It consists of:

- An RDS database and S3 bucket used as a persistent backend for the ExaDeploy module repository. These are not strictly required, as the module repository also supports a local backend (which is not persistent between module repository restarts), but they are recommended in production. This Terraform module is not responsible for creating these resources but must be configured to use them by providing the appropriate access credentials and addressing information (see Configuration - Module Repository Backend). To create these resources, see Exafunction/terraform-aws-exafunction-cloud/modules/module_repo_backend.

- An EKS cluster used to run the ExaDeploy system. This Terraform module is not responsible for creating the cluster. To create it, see Exafunction/terraform-aws-exafunction-cloud/modules/cluster.

- The ExaDeploy Kubernetes resources, specifically the module repository and scheduler (which is responsible for managing runners). This Terraform module is responsible for creating these resources.

- A Prometheus instance for monitoring the ExaDeploy system (along with a Grafana server used to visualize these metrics, not pictured). This Terraform module is responsible for creating these resources.

```hcl
module "exafunction_kube" {
  # Set the module source and version to use this module.
  source  = "Exafunction/exafunction-kube/aws"
  version = "x.y.z"

  # Set the cluster variables.
  cluster_name              = "cluster-abcd1234"
  cluster_oidc_issuer_url   = "https://oidc.eks.us-west-1.amazonaws.com/id/ABCD1234"
  cluster_oidc_provider_arn = "arn:aws:iam::123456781234:oidc-provider/oidc.eks.us-west-1.amazonaws.com/id/ABCD1234"

  # Set the Exafunction API Key.
  exafunction_api_key = "12345678-1234-5678-1234-567812345678"

  # Set the ExaDeploy component images.
  scheduler_image         = "123456781234.dkr.ecr.us-west-1.amazonaws.com/exafunction/scheduler:prod_abcd1234_1234567812"
  module_repository_image = "123456781234.dkr.ecr.us-west-1.amazonaws.com/exafunction/module_repository:prod_abcd1234_1234567812"
  runner_image            = "123456781234.dkr.ecr.us-west-1.amazonaws.com/exafunction/runner@sha256:abcdef0123456789abcdef0123456789abcdef0123456789abcdef0123456789"

  # Set the module repository backend.
  module_repository_backend = "local"

  # ...
}
```

See the configuration sections below as well as the Inputs section and variables.tf file for a full list of configuration options.

See examples/setup_exadeploy for a working example of how to use this module.

While this module is not responsible for creating an EKS cluster, it does require information about an existing cluster to deploy resources to, including the name of the cluster and OpenID Connect (OIDC) information associated with the cluster. For clusters created using Exafunction/terraform-aws-exafunction-cloud/modules/cluster, this information can be fetched using the cluster_name, cluster_oidc_issuer_url, and oidc_provider_arn module outputs.
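Since the cluster is expected to live in a different root module, one way to pass its details along is through remote state. The following is only a sketch under several assumptions: the cluster root module stores its state in S3, and it re-exports the cluster module outputs as root-level outputs under the same names. The bucket, key, and region values are hypothetical placeholders.

```hcl
# Read the outputs of the (separate) root module that created the cluster.
# Bucket, key, and region are hypothetical placeholders.
data "terraform_remote_state" "cluster" {
  backend = "s3"

  config = {
    bucket = "my-terraform-state"
    key    = "eks-cluster/terraform.tfstate"
    region = "us-west-1"
  }
}

module "exafunction_kube" {
  source  = "Exafunction/exafunction-kube/aws"
  version = "x.y.z"

  # Assumes the cluster root module exposes these as root-level outputs.
  cluster_name              = data.terraform_remote_state.cluster.outputs.cluster_name
  cluster_oidc_issuer_url   = data.terraform_remote_state.cluster.outputs.cluster_oidc_issuer_url
  cluster_oidc_provider_arn = data.terraform_remote_state.cluster.outputs.oidc_provider_arn

  # ... remaining required arguments (API key, images, etc.) ...
}
```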

This Terraform module installs the ExaDeploy Helm chart in the EKS cluster (version set by exadeploy_helm_chart_version). Helm is a package manager for Kubernetes that allows for easy installation of applications in a Kubernetes cluster. Helm charts can be configured by passing in values when installing through a values yaml file or command line arguments.

The configuration of the ExaDeploy Helm chart in this Terraform module is split between required values which are managed through dedicated Terraform variables and optional values which can be supplied using the exadeploy_helm_values_path variable (see Optional Configuration). These are all detailed in the sections below.

Note that "required" in this sense means the values must be set in order to install the Helm chart at all. Other "optional" values may still be necessary to support specific infrastructure configurations, toggle features, or maximize performance.

The Exafunction API key is a unique key used to identify the ExaDeploy system to Exafunction. The API key itself should be provided by Exafunction.

To set, see the exafunction_api_key and exafunction_api_key_secret_name variables.
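To avoid committing the key to version control, it can be passed in through a sensitive root-level variable. This is a sketch rather than a required pattern; the root variable name is an illustrative choice, and its value could be supplied through the `TF_VAR_exafunction_api_key` environment variable or a local `.tfvars` file.

```hcl
# Root-level variable holding the key provided by Exafunction.
# Marking it sensitive keeps the value out of plan and apply output.
variable "exafunction_api_key" {
  description = "Exafunction API key (illustrative root variable)."
  type        = string
  sensitive   = true
}

module "exafunction_kube" {
  source  = "Exafunction/exafunction-kube/aws"
  version = "x.y.z"

  exafunction_api_key = var.exafunction_api_key

  # Optional: name of the Kubernetes secret the module creates for the key.
  # The value shown is the module default.
  exafunction_api_key_secret_name = "exafunction-api-key"

  # ... remaining required arguments ...
}
```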

The Exafunction component images are the Docker images used to run the ExaDeploy system. These should be provided by Exafunction.

To set, see the scheduler_image, module_repository_image, and runner_image variables.

The module repository can be configured to use either a local backend on disk or a remote backend backed by an RDS database and S3 bucket. The remote backend allows for persistence between module repository restarts and is recommended in production. For remote backends created using Exafunction/terraform-aws-exafunction-cloud/modules/module_repo_backend, the required addressing information and credentials can be fetched from that module's outputs.

To set, see the module_repository_backend, rds_*, and s3_* variables. If module_repository_backend is local then the other variables do not need to be specified. If module_repository_backend is remote then the other variables must all be non-null.
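A remote backend configuration might look like the following sketch. The variable names come from the Inputs table; every value is a placeholder, and the `var.*` references assume sensitive root-level variables that you would declare yourself.

```hcl
module "exafunction_kube" {
  source  = "Exafunction/exafunction-kube/aws"
  version = "x.y.z"

  # Use the persistent backend. All rds_* and s3_* values below must be non-null.
  module_repository_backend = "remote"

  # RDS connection details (placeholder values).
  rds_address  = "exadeploy-db.abcdefghijkl.us-west-1.rds.amazonaws.com"
  rds_port     = "5432" # placeholder; use your database's port
  rds_username = "exadeploy"
  rds_password = var.rds_password # hypothetical sensitive root variable

  # S3 bucket and IAM user credentials (placeholder values).
  s3_bucket_id           = "exadeploy-module-repo"
  s3_iam_user_access_key = var.s3_iam_user_access_key # hypothetical sensitive root variable
  s3_iam_user_secret_key = var.s3_iam_user_secret_key # hypothetical sensitive root variable

  # ... remaining required arguments ...
}
```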

While the above sections cover all the required values for the ExaDeploy Helm chart configuration, there are many additional values that can be set to customize the deployment. These values are specified in a yaml file (in Helm values file format) and passed to the Helm chart installation through the optional exadeploy_helm_values_path variable.

To see all the available values, see the ExaDeploy Helm chart. Note that Helm chart values corresponding to the required values above should not be set through this method, as they will be overridden (multiple Helm values specifications are automatically merged).
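As a brief sketch, the optional values file can be kept next to the Terraform configuration and referenced by path. The file name below is a hypothetical choice, and the keys inside it must come from the ExaDeploy Helm chart itself.

```hcl
module "exafunction_kube" {
  source  = "Exafunction/exafunction-kube/aws"
  version = "x.y.z"

  # Path to a Helm values file in the same directory as this configuration.
  # The file name is illustrative.
  exadeploy_helm_values_path = "${path.module}/exadeploy_values.yaml"

  # ... remaining required arguments ...
}
```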

This Terraform module also installs the Prometheus Helm chart in the EKS cluster. This chart provides a Prometheus server and Grafana dashboard for monitoring the ExaDeploy system.

By default, the Grafana dashboard is made available on a private address within the VPC. The enable_grafana_public_address variable can be used to instead expose the Grafana dashboard on a public address for ease of access.

This module also by default enables Prometheus remote write functionality in order to send ExaDeploy system metrics to a remote Prometheus server owned by Exafunction. The remote receiving endpoint is secured by TLS and clients are authenticated by using basic auth with a username and password that Exafunction should provide. These metrics will help Exafunction better understand your usage of ExaDeploy, and more rapidly troubleshoot any issues which may occur.

To enable / disable this feature, see enable_prom_remote_write. When enabled (default), the prom_remote_write_* variables must be specified. In particular, prom_remote_write_username and prom_remote_write_password should be the username and password provided by Exafunction.
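The monitoring options above could be combined as in this sketch. The variable names come from the Inputs table; the username is a placeholder and `var.prom_remote_write_password` is a hypothetical sensitive root-level variable.

```hcl
module "exafunction_kube" {
  source  = "Exafunction/exafunction-kube/aws"
  version = "x.y.z"

  # Keep Grafana on a private address within the VPC (module default).
  enable_grafana_public_address = false

  # Send metrics to the Exafunction receiving endpoint (module default).
  enable_prom_remote_write   = true
  prom_remote_write_username = "my-company"                   # placeholder; provided by Exafunction
  prom_remote_write_password = var.prom_remote_write_password # hypothetical sensitive root variable

  # ... remaining required arguments ...
}
```

To opt out of remote write, set `enable_prom_remote_write = false`; the username and password can then be left at their null defaults.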

To learn more about Exafunction, visit the Exafunction website.

For technical support or questions, check out our community Slack.

For additional documentation about Exafunction including system concepts, setup guides, tutorials, API reference, and more, check out the Exafunction documentation.

For an equivalent repository used to set up ExaDeploy in a Kubernetes cluster on Google Cloud Platform (GCP) instead of AWS, visit Exafunction/terraform-gcp-exafunction-kube.

| Name | Type |
|---|---|
| helm_release.exadeploy | resource |
| helm_release.kube_prometheus_stack | resource |
| helm_release.nvidia_device_plugin | resource |
| kubernetes_cluster_role_binding.cluster_admin | resource |
| kubernetes_namespace.prometheus | resource |
| kubernetes_secret.exafunction_api_key | resource |
| kubernetes_secret.prom_remote_write_basic_auth | resource |
| kubernetes_secret.rds_password | resource |
| kubernetes_secret.s3_access | resource |
| random_string.irsa_role_name_prefix | resource |
| aws_region.current | data source |

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| cluster_name | Name of the Exafunction EKS cluster. | string | n/a | yes |
| cluster_oidc_issuer_url | The URL for the OpenID Connect identity provider associated with the EKS cluster. | string | n/a | yes |
| cluster_oidc_provider_arn | The ARN of the OpenID Connect provider for the EKS cluster. | string | n/a | yes |
| enable_grafana_public_address | Whether the Grafana service will be accessible via a public address. If false, the Grafana service will only be accessible via a private address within the VPC. | bool | false | no |
| enable_prom_remote_write | Whether to enable remote writing Prometheus metrics to the Exafunction receiving endpoint. If true, all prom_remote_* variables must be set. | bool | true | no |
| exadeploy_helm_chart_version | The version of the ExaDeploy Helm chart to use. | string | "1.0.0" | no |
| exadeploy_helm_values_path | ExaDeploy Helm chart values yaml file path. | string | null | no |
| exafunction_api_key | Exafunction API key used to identify the ExaDeploy system to Exafunction. | string | n/a | yes |
| exafunction_api_key_secret_name | Exafunction API key Kubernetes secret name. This secret will be created by this module. | string | "exafunction-api-key" | no |
| module_repository_backend | The backend to use for the ExaDeploy module repository. One of [local, remote]. If remote, s3_* and rds_* variables must be set. | string | "local" | no |
| module_repository_image | Path to ExaDeploy module repository image. | string | n/a | yes |
| prom_remote_write_basic_auth_secret_name | Prometheus remote write basic auth Kubernetes secret name. This secret will be created by this module. | string | "prom-remote-write-basic-auth-secret" | no |
| prom_remote_write_password | Prometheus remote write basic auth password. This will be stored in the Prometheus remote write basic auth Kubernetes secret. | string | null | no |
| prom_remote_write_target_url | Prometheus remote write target url. | string | "https://prometheus.exafunction.com/api/v1/write" | no |
| prom_remote_write_username | Username (e.g. company name) for Prometheus remote write which will be used as a Prometheus label and basic auth username. This will be stored in the Prometheus remote write basic auth Kubernetes secret. | string | null | no |
| rds_address | Address of the RDS database. | string | null | no |
| rds_password | Password for RDS instance. This will be stored in the RDS password Kubernetes secret. | string | null | no |
| rds_password_secret_name | RDS password Kubernetes secret name. This secret will be created by this module. | string | "rds-password" | no |
| rds_port | Port of the RDS database. | string | null | no |
| rds_username | Username for the RDS database. | string | null | no |
| runner_image | Path to ExaDeploy runner image. | string | n/a | yes |
| s3_access_key_secret_name | S3 access key Kubernetes secret name. This secret will be created by this module. | string | "s3-access-key" | no |
| s3_bucket_id | ID for S3 bucket. | string | null | no |
| s3_iam_user_access_key | Access key ID for the S3 IAM user. This will be stored in the S3 access key Kubernetes secret. | string | null | no |
| s3_iam_user_secret_key | Secret access key for the S3 IAM user. This will be stored in the S3 access key Kubernetes secret. | string | null | no |
| scheduler_image | Path to ExaDeploy scheduler image. | string | n/a | yes |

| Name | Description |
|---|---|
| cluster_autoscaler_helm_release_metadata | Cluster autoscaler Helm release attributes. |
| exadeploy_helm_release_metadata | ExaDeploy Helm release attributes. |
| nvidia_device_plugin_helm_release_metadata | NVIDIA device plugin Helm release attributes. |
| prometheus_helm_release_metadata | Prometheus Helm release attributes. |
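These outputs can be re-exported from the calling root module, for example to inspect the installed releases after an apply. This sketch assumes the module block is named `exafunction_kube` as in the usage example; the root-level output names are illustrative.

```hcl
# Re-export selected module outputs from the root module.
output "exadeploy_release" {
  description = "ExaDeploy Helm release attributes."
  value       = module.exafunction_kube.exadeploy_helm_release_metadata
}

output "prometheus_release" {
  description = "Prometheus Helm release attributes."
  value       = module.exafunction_kube.prometheus_helm_release_metadata
}
```

After `terraform apply`, running `terraform output exadeploy_release` would print the corresponding attributes.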