Making built wheels available for install #533

Comments

Apparently it's now 100MB. I've requested an increase to 300MB, which should give us some margin.

That's the reason why we are currently using conda, which handles this sort of dependency. In any case, a follow-up task would be to check at run-time that the pytorch version has not changed. What do you think? |
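A minimal sketch of what such a run-time check could look like, assuming the wheel records the torch version it was built against. The function names and the recorded build version are hypothetical, not part of the xFormers API:

```python
def split_version(version: str) -> tuple:
    """Split '1.13.0+cu117' into ('1.13.0', 'cu117'); the local part may be empty."""
    public, _, local = version.partition("+")
    return public, local


def check_torch_version(built_against: str, running: str) -> None:
    """Raise if the running torch differs from the one the binary was built for.

    Assumption: binary compatibility requires the same public version, and the
    same CUDA tag whenever both version strings carry one.
    """
    built_public, built_local = split_version(built_against)
    run_public, run_local = split_version(running)
    if built_public != run_public:
        raise RuntimeError(
            f"Built for torch {built_against}, but torch {running} is installed."
        )
    if built_local and run_local and built_local != run_local:
        raise RuntimeError(
            f"Built for {built_local}, but torch reports {run_local}."
        )
```

At import time this would be called with the recorded build version and `torch.__version__`, which also gives a place for a clearer error message than a failing operator load.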

|

Overall I think it's a good plan, some comments:

Could this in any way lead to endless loops? I don't know how, but my spider sense is tingling. Also, the order of installs here matters, right? It won't be possible to pip install torch xformers --extra-index-url https://download.pytorch.org/whl/cu116

Same experience. Perhaps a bit more concretely on the plan forward:

|

I think we can make pytorch an install dependency or something like that.

This does not solve the issue with multiple versions of pytorch/cuda (unless we ignore cuda mismatch errors, and add a pinned version of pytorch - latest? - as a dependency) |

Oh I meant just download the built binary files (not the entire package). Like |

|

@danthe3rd now that we have pypi storage, what is the next step? is there something I can help with? do we still need to worry about the pip index? it is just an html page after all but still. |

|

I'm trying to figure out internally how we can store that on s3 - it's thanksgiving in the US so hopefully we will have more news to share next week. |

|

@danthe3rd happy thanksgiving! ping me when you have any updates. |

|

It looks a bit more complicated than I expected - for now I think we could upload to pypi directly. What do you think? |

|

For 1), we are already building on manylinux (see xformers/.github/workflows/wheels.yml, line 65 in 1515f77), but the generated wheel has the suffix linux_x86_64. Maybe we can cheese it and sed it to manylinux. I used the 2014 version for Python version support: https://github.com/pypa/manylinux#manylinux
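A rough sketch of the "rename the suffix" idea, working on the file name only. The helper name is hypothetical. Note that renaming alone does not verify that the binaries actually meet the manylinux policy, and the `WHEEL` file inside the archive also records the tag; `auditwheel` is the tool usually used for a proper retag.

```python
def retag_wheel_name(filename: str, platform_tag: str) -> str:
    """Return the wheel file name with its platform tag replaced.

    Wheel names end in '-{python tag}-{abi tag}-{platform tag}.whl', so the
    platform tag is everything after the last dash.
    """
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file name: {filename}")
    stem = filename[: -len(".whl")]
    head, _old_platform = stem.rsplit("-", 1)
    return f"{head}-{platform_tag}.whl"


new_name = retag_wheel_name(
    "xformers-0.0.15-cp310-cp310-linux_x86_64.whl", "manylinux2014_x86_64"
)
```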

for 2) I always thought this was handled by And for 3) an additional source build should be easy, since we don't need cuda / torch / compilation, unless I am missing something. I have never uploaded to pip before, so I will have to play around first to get a feeling for it.... |

|

I've just added a secret "PYPI_TOKEN" (starting with (Maybe it's easier if you create your own pypi account/repo to test ...) |

|

@danthe3rd yeah, I will start with a dummy repo and https://test.pypi.org/, also check the versioning thing, hopefully over the weekend. |

|

Sorry for the lack of updates recently, with the pytorch conference it took some time to get the information I needed. So apparently there is this way of building libraries that pytorch provides called NOVA (see the doc). Once we have our setup in this format, we can just add the secrets and it should work. Would you be interested in getting us started with NOVA? It should take care of all the specificities of each platform for us in theory |

|

@danthe3rd I can give it a look, it seems like it simplifies (or maybe just unifies) the build workflows across all pytorch related repos. From a first glance, it seems that it still does not handle the case of uploading to pypi, but only to pytorch's s3. Would that be enough? Do we still need a separate step with regarding your point of getting it to stable, maybe it is just an env variable? |

|

That is right. @fmassa how is pypi upload handled in torchvision? Otherwise, we can also upload manually with twine if you want to give it a shot with a test repo. |

|

@danthe3rd I managed to get NOVA running: https://github.com/AbdBarho/xformers-wheels/actions/runs/3655705207 I will clean up the code and create a PR. pypi upload could be added after that from the generated artefacts |

|

Oh wow that was fast :o |

It might be the way forward for the time being, as NOVA does not seem to work for us, and we already have the builds on the CI. What do you think? Do you have time to work on that? I have already set up the |

|

@danthe3rd already working on it! https://github.com/AbdBarho/xformers-wheels/actions/runs/3684497349/jobs/6234302879 with a successful upload: https://test.pypi.org/project/formers/0.0.15.dev383/ I used the name I will clean up the code and create a PR. I have some questions that we can clear up then. |

|

Oh nice! Let's only upload for the latest pytorch stable (1.13 at the moment), with cuda 11.7 for instance. |

|

Thanks for working on this 🙏 I've downloaded the whl file |

|

@danthe3rd That is one question answered, which is the same as what I had in mind: The second question is regarding the version, do we want to upload I saw the conda example, the generated version string is not accepted by pypi, what I have now is just |

|

@FrancescoSaverioZuppichini oh oh! can you give me some details about your os? the wheel should (in theory) work on any linux machine, if it doesn't, we have a problem. |

|

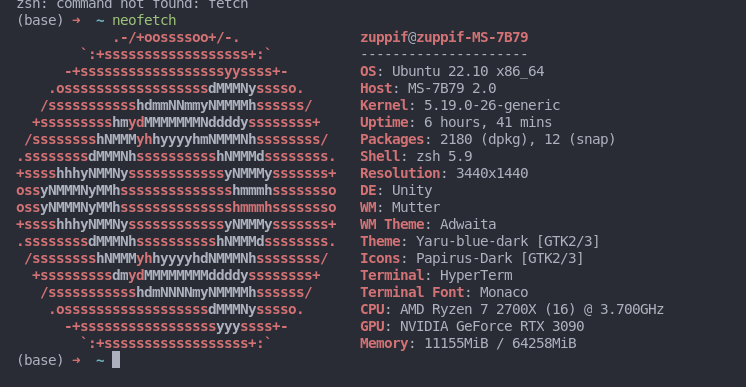

@AbdBarho Sure, from |

The optimal solution I see would be:

Yes, pypi won't accept local suffixes. Let's remove them from the version number. |

|

@FrancescoSaverioZuppichini I managed to install the wheel on a debian and centos system, the error you have is unexpected. This wheel is for python 3.10, can you try again with ? |

|

And we just have our first binary wheel uploaded! You can test it with |

|

Thanks Dan! I really appreciate your hard work! After installing xformers with

and starting up the stable diffusion 2.0 model (run through the stable-diffusion-ui), I get the following error:

Full paste here: https://pastebin.com/HZFrgE3M |

|

@JacobAsmuth are you running pytorch 1.12.x? |

|

I am not, I'm running pytorch 1.11.0 . |

|

Unfortunately, the wheels are built against the latest pytorch 1.13 :/ Maybe @danthe3rd has some ideas? |

|

I'm using 1.11 only because that's what https://github.com/Stability-AI/stablediffusion installs with their default requirements.txt - let me see if it's compatible with later versions. |

|

Did we miss something @AbdBarho? Pip installing xFormers should have updated PyTorch, as it's a requirement to have version==1.13... We definitely need a better error message btw. |

|

Not sure, checking the METADATA of the wheel shows that it looks okay: and the same applies to the source distribution. Edit: I tested both on a fresh install and on an env with torch 1.12, in both cases torch 1.13 is downloaded. Maybe because it is a pre-release? Or maybe it's because torch was installed with conda and xformers with pip? |
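For anyone who wants to repeat the METADATA check, here is a small sketch using only the standard library. The function name is hypothetical; it relies on a wheel being a zip archive whose `*.dist-info/METADATA` file uses email-style headers, with one `Requires-Dist` line per dependency:

```python
import zipfile
from email.parser import Parser


def wheel_requirements(wheel) -> list:
    """Return the Requires-Dist entries of a wheel (path or file-like object)."""
    with zipfile.ZipFile(wheel) as archive:
        # Each wheel carries exactly one '<name>-<version>.dist-info/METADATA'.
        metadata_name = next(
            name for name in archive.namelist()
            if name.endswith(".dist-info/METADATA")
        )
        text = archive.read(metadata_name).decode("utf-8")
    metadata = Parser().parsestr(text)
    return metadata.get_all("Requires-Dist") or []
```

Calling it on a downloaded `.whl` file should list the pinned torch requirement if the pin made it into the build.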

|

@danthe3rd @AbdBarho Does this work for torch 1.14 (aka 2.0) please? |

Would it be possible to relax the |

xFormers works with any version of pytorch if you build yourself, but for the pre-built binary wheels, they are only compatible with the pytorch version they were built for. For now we chose to stick to the latest stable |

|

@danthe3rd Sorry I thought that |

|

Hum it also looks like we have issues with the RC releases now: https://github.com/facebookresearch/xformers/actions/runs/3703118568/jobs/6274182582#step:10:21 |

|

@AbdBarho it works! 🚀 Thanks a lot ❤️ Tested on |

|

@AbdBarho wait ... you are the hero who made https://github.com/AbdBarho/stable-diffusion-webui-docker sending all the love of this world man! 🤗 |

|

This is awesome! We (Hugging Face diffusers team) really want to recommend users to install xFormers, and it'll be much easier when pip wheels are available. My understanding from #591 is that wheels will be automatically made available via |

|

Yes, that is correct! We will also pin the associated PyTorch version, as only a single pytorch version can be supported per pypi version for binary wheels.

Excellent, we'll update our documentation when |

|

Can we close this now? it seems that everything is working fine. |

|

Yes indeed, good point. |

🚀 Feature

Motivation

After #523 and #534, the wheels can be built, but are not available for install anywhere. Users want this, though (see #532 and #473).

Pitch & Alternatives

There are a couple of ways that I know of to achieve this:

Upload to pypi

Probably the most obvious solution

How would pip find the correct wheel for a given torch & cuda version?

External hosting

Upload the built wheel to an external storage / hosting service (s3 & co.)

How would pip find the correct wheel for a given torch & cuda version?

Upload to the release page on github

Similar to the above, but using github

maybe it would be easiest to dump all wheels to github releases and then the users would have to find the version they want and install it.

`pip install https://github.com/..../xformers-1.14.0+torch1.12.1cu116-cp39-cp39-linux_x86_64.whl`

Although this would make it really annoying for libraries that use xformers and want to build for different python / cuda versions: you cannot just add `xformers` to your `requirements.txt`.

Additional info & Resources

https://peps.python.org/pep-0503/

https://packaging.python.org/en/latest/guides/hosting-your-own-index/
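For the external-hosting option, the index that pip reads is just a small HTML page, as PEP 503 describes. A minimal sketch of generating one is below. The function names and the example URL are hypothetical; only the name normalization rule and the one-anchor-per-file layout come from PEP 503.

```python
import html
import re


def normalize_name(name: str) -> str:
    """PEP 503 name normalization: runs of '-', '_', '.' become a single '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def simple_index_page(project: str, files: dict) -> str:
    """Build the HTML page for one project; `files` maps file name -> download URL."""
    links = "\n".join(
        f'    <a href="{html.escape(url, quote=True)}">{html.escape(filename)}</a><br/>'
        for filename, url in sorted(files.items())
    )
    title = html.escape(normalize_name(project))
    return (
        "<!DOCTYPE html>\n"
        "<html>\n"
        f"  <head><title>Links for {title}</title></head>\n"
        "  <body>\n"
        f"{links}\n"
        "  </body>\n"
        "</html>\n"
    )


page = simple_index_page(
    "xformers",
    {"demo-1.0-py3-none-any.whl": "https://example.com/demo-1.0-py3-none-any.whl"},
)
```

The page would be served at `<index root>/<normalized project name>/`, and users would point pip at the index root with `--extra-index-url`. This still leaves the question of how to expose one wheel per torch & cuda combination, for example with one index per combination.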

I am willing to contribute if I know how.
