
Since both Flashlight and JAX aim for high performance and make low-level ops easy to implement, it seemed worth comparing them. I tried the compiled MLP example (`flashlight/fl/examples/Perceptron.cpp`) and reproduced it as a JAX + Flax + `jit` Python MLP. The results show that Flashlight is much slower than JAX.
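For anyone reproducing this comparison, the JAX side of such a benchmark might look roughly like the sketch below. It is not the attached `test_jax_mlp.py`. It uses plain JAX instead of Flax, and the layer sizes, batch size, learning rate, and helper names (`init_params`, `mlp`, `train_step`, `benchmark`) are hypothetical. The two details that matter most for a fair timing are excluding the first call, which triggers tracing and XLA compilation, and calling `block_until_ready()`, because JAX dispatches work asynchronously.

```python
import time

import jax
import jax.numpy as jnp

# Hypothetical hyperparameter; not taken from the original script.
LEARNING_RATE = 1e-3


def init_params(key, sizes):
    """Initialize (weight, bias) pairs for a fully connected MLP with the given layer sizes."""
    params = []
    keys = jax.random.split(key, len(sizes) - 1)
    for k, (n_in, n_out) in zip(keys, zip(sizes[:-1], sizes[1:])):
        w = jax.random.normal(k, (n_in, n_out)) * jnp.sqrt(2.0 / n_in)
        b = jnp.zeros((n_out,))
        params.append((w, b))
    return params


def mlp(params, x):
    """Forward pass: ReLU on hidden layers, linear output layer."""
    for w, b in params[:-1]:
        x = jax.nn.relu(x @ w + b)
    w, b = params[-1]
    return x @ w + b


def loss_fn(params, x, y):
    """Mean squared error between predictions and targets."""
    return jnp.mean((mlp(params, x) - y) ** 2)


@jax.jit
def train_step(params, x, y):
    """One SGD step; jit compiles forward and backward passes into a single XLA computation."""
    loss, grads = jax.value_and_grad(loss_fn)(params, x, y)
    new_params = jax.tree_util.tree_map(lambda p, g: p - LEARNING_RATE * g, params, grads)
    return new_params, loss


def benchmark(n_steps=100, batch=64, sizes=(784, 256, 256, 10)):
    """Return (seconds per training step, final loss) on random data."""
    key = jax.random.PRNGKey(0)
    k_params, k_x, k_y = jax.random.split(key, 3)
    params = init_params(k_params, list(sizes))
    x = jax.random.normal(k_x, (batch, sizes[0]))
    y = jax.random.normal(k_y, (batch, sizes[-1]))

    # Warm-up: the first call traces and compiles train_step, so keep it out of the timing.
    params, loss = train_step(params, x, y)
    loss.block_until_ready()

    start = time.perf_counter()
    for _ in range(n_steps):
        params, loss = train_step(params, x, y)
    # Dispatch is asynchronous: wait for the last step to finish before stopping the clock.
    loss.block_until_ready()
    elapsed = time.perf_counter() - start
    return elapsed / n_steps, float(loss)


if __name__ == "__main__":
    per_step, final_loss = benchmark()
    print(f"{per_step * 1e3:.3f} ms/step, final loss {final_loss:.4f}")
```

Timings on the Flashlight side should likewise exclude warm-up iterations and wait for pending ArrayFire work (for example with `af::sync()`) before stopping the clock, since ArrayFire also evaluates lazily.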

This isn't surprising. The ArrayFire CPU backend is single-threaded and not optimized for performance: it barely JIT-compiles computations and exists primarily as a debugging tool. The ArrayFire OpenCL backend is better (it uses a JIT and some asynchronous/parallel computation), but its CPU performance may still be underwhelming, and Flashlight does not properly support it at the moment.

We're currently developing a CPU backend based on Intel's oneDNN library, which should significantly improve performance (and will also enable proper interoperability with the ArrayFire OpenCL backend). Stay tuned for updates; commits for that backend will land directly in `main`.

Additional Context

All tests are conducted on a CPU-only machine.

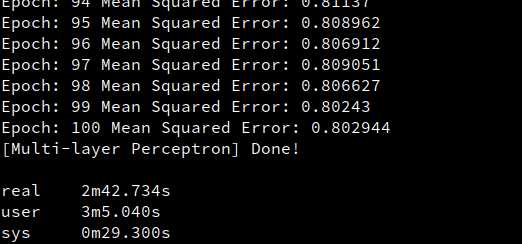

Flashlight:

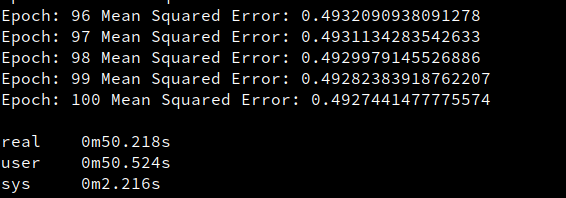

Jax:

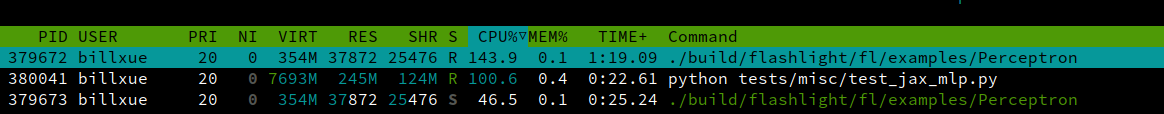

Htop monitor:

JAX benchmark script: `test_jax_mlp.py`
