New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Blocker] Thread exhaustion #6319

Comments

|

I am haunted by a similar issue. Since the 'contained in branches' request is very expensive in my repo (> 1 min), a lot of git processes are spawned. It would be nice if CommitInfo was able to cancel its tasks when another commit is loaded. My workaround is to disable most 'extra' features for CommitInfo.... Not a real solution and I have to do this for most colleagues, since they work on the same repo. |

Combine all async calls that load commit information into a single background call that reduces the number of used threads. Add additional breaks for the background execution whenever cancellations are requested. Fixes gitextensions#6319

Combine all async calls that load commit information into a single background call that reduces the number of used threads. Add additional breaks for the background execution whenever cancellations are requested. Fixes gitextensions#6319

|

I'll take a look |

|

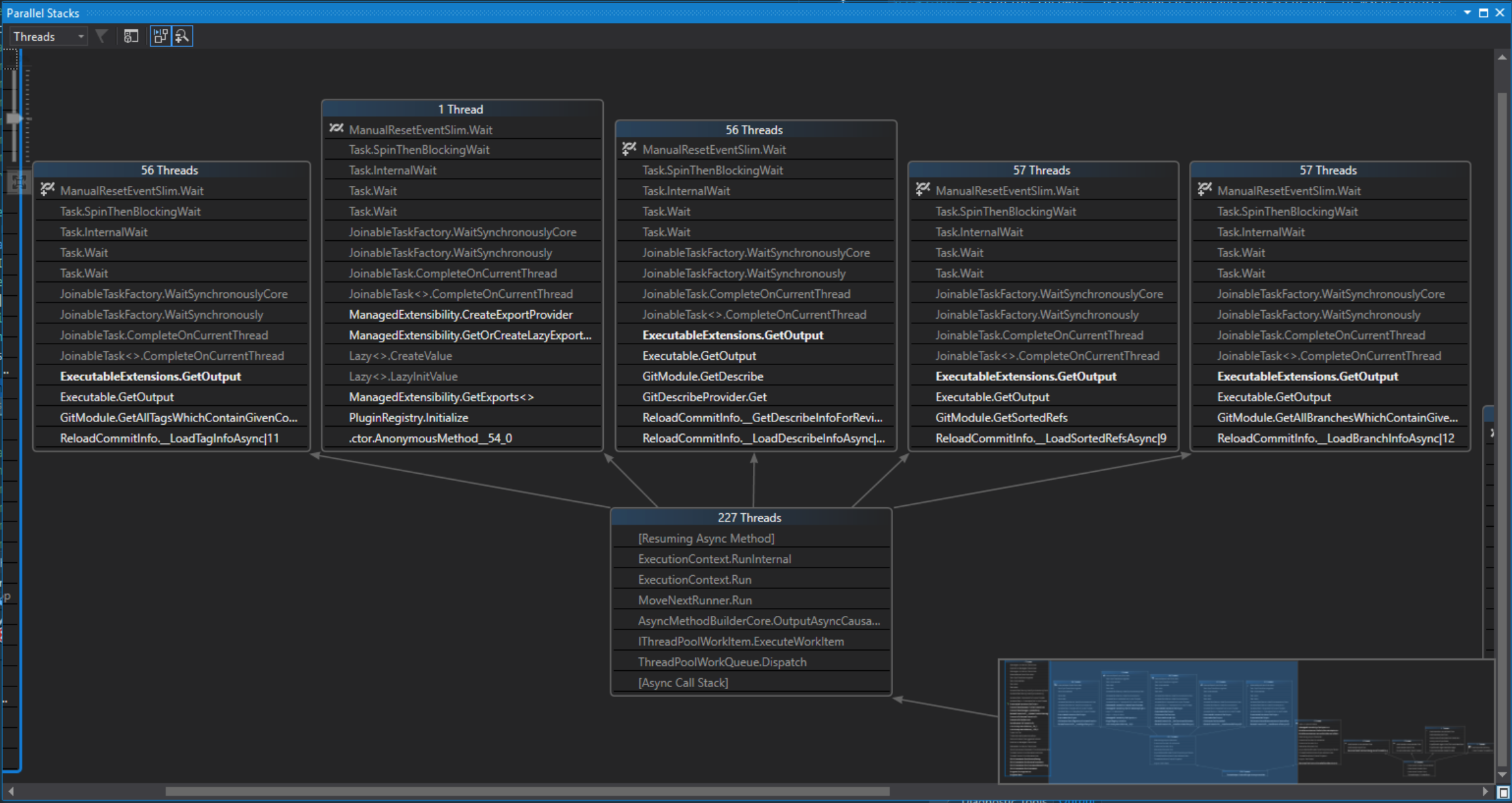

In review today, I found that

The first is easily solved, so I'll focus on the second. It turns out that

In profiling the scenario described by this issue for a couple of repositories, the operations that used the most time on the thread pool for |

|

Ideally we shouldn't block UI, if we can help it.

…On Mon, Mar 18, 2019, 7:03 AM Sam Harwell ***@***.*** wrote:

In review today, I found that CancellationException really isn't a

substantial part of the problem. There are two primary areas that showed up:

1. We are using the workstation GC instead of the server GC

2. ExecutableExtensions.GetOutput getting called on thread pool threads

The first is easily solved, so I'll focus on the second. It turns out that

GetOutput will not lead to thread pool starvation as long as one of the

following is true:

1. It is called from the UI thread (it might lead to UI delays here,

but not thread pool starvation)

2. It is called from a dedicated background thread, which is not part

of the thread pool (it would use unnecessary resources in this case, but

the blocked thread was created specifically for this block and would not

interfere with other operations)

In profiling the scenario described by this issue for a couple of

repositories, the operations that used the most time on the thread pool for

GetOutput were these:

[image: image]

<https://user-images.githubusercontent.com/1408396/54497211-c7d6a180-48c5-11e9-8ace-2339583bba89.png>

—

You are receiving this because you were assigned.

Reply to this email directly, view it on GitHub

<#6319 (comment)>,

or mute the thread

<https://github.com/notifications/unsubscribe-auth/AEMyXsakzhqGqBlSNvbhNtlookcyKbG1ks5vXp-GgaJpZM4bWfd4>

.

|

|

@RussKie agreed, but the UI thread isn't part of the thread pool so I was making sure to point out explicitly that UI thread operations don't directly cause thread pool starvation 😄 |

|

@sharwell See my coment #6357 (comment) I did every call async what made the UI clickable but I wouldn't call it responsive. I could click on a file in the FileList but had to wait a few seconds until the file content was loaded while the UI was clickable all the time when I was waiting. We will see this behavior every time we use too many default threadpool threads. I agree that we should make all the calls async, but it is not enough to keep the app responsive. We also have to control how much background job is being done at the time. |

|

@jbialobr Yes, that's a separate issue as well - the number of git.exe operations running at once. However, that concern is unrelated to the number of threads in use since the git operation does not necessarily mean a thread is blocked. |

|

No, I am not talking about git.exe processes. The problem is with overloading the threadpool. |

|

@jbialobr do you know of an example GitHub repository that has something like that? Also note that in the case you describe, the 100 tasks still isn't the problem. The problem only occurs if a thread on the threadpool runs a blocking operation where it should have yielded execution with an |

|

Sure I can provide you such a repo when I am back home. |

See #6357 (updated the instructions there to create 100 submodules). You probably do not 100 GE submodules to reproduce to problem though... |

|

Here is the repo Open it and then open It seems you are right. I tried to reproduce the scenario in isolation by creating 100 Tasks that call an async method on GitModule but could not reproduce the lag. I am looking forward to the results of your investigation. |

|

@jbialobr I'm still having a bit of trouble reproducing performance problems. I have tried the repository you posted and also tried 17 recursive submodules of Git Extensions itself using the script from @gerhardol. I'm guessing we just aren't at a scale that stresses the system I use for performance measurements (2990WX + 905P). The profiling tools are still able to aggregate time spent on background threads when the user impact is not observable (e.g. #6319 (comment)), but there are two challenges:

I'm going to try to scale this case up by another factor of 10 and see if things are clearer, but otherwise I'm going to have to do my testing based on a precise sequence of steps you provide. |

|

Perhaps it is a matter of some differences in the GE settings. Could you post here the Tools->Git commands log content, that I could compare with mine to see if you are hitting the same path? |

Combine all async calls that load commit information into a single background call that reduces the number of used threads. Fixes gitextensions#6319

Combine all async calls that load commit information into a single background call that reduces the number of used threads. Fixes gitextensions#6319

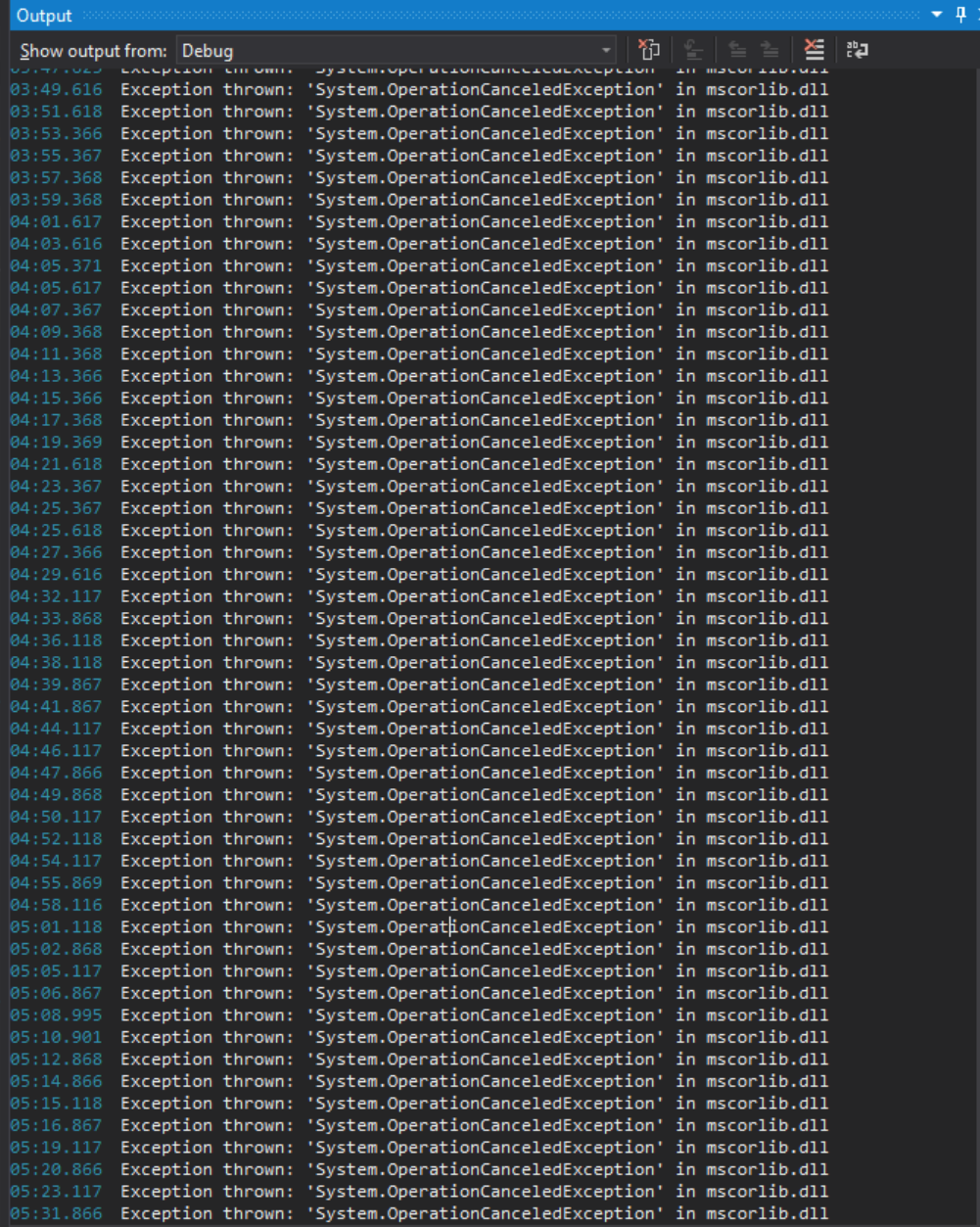

Current behaviour

Scrolling the revision graph (with attached debugger) is very slow and jerky.

It looks like we are firing too many background threads and this leads to thread exhaustion

Obviously our threading flows are far from efficient or performant.

The main problem seems to be

StartAsyncDataLoad:gitextensions/GitUI/CommitInfo/CommitInfo.cs

Lines 228 to 259 in 1d88978

Expected behaviour

The scroll is fast and smooth. No excessive use of blocked thread.

Steps to reproduce

Did this work in previous version of GitExtensions

Yes, v2 didn't exhibit as bad perf.

Environment

Diagnostics

The text was updated successfully, but these errors were encountered: