Automatic retry mechanism if task fails to fetch material #6252

Comments

|

@ariefrahmansyah If it's an auto polled material, it should retry in around a minute. If not, there's currently no mechanism to retry (a forced trigger or timer, for instance). |

|

@arvindsv Sorry, this isn't about auto-polled materials. It happens when a running task fails to fetch the material: the task then fails, and we need to retrigger it manually. |

|

This issue has been automatically marked as stale because it has not had activity in the last 90 days. |

|

I would really love to have this feature! |

|

We run into this issue every now and then. It would be great to have a way to set a retry count and/or a wait between retries. |

|

@mattgauntseo-sentry Have you determined the root cause of the issue in your case? Is it a git material, some other material type, or a plugin material? Is it a temporary connectivity problem or a rate limit on the target material source? |

|

We use GitHub and it presents itself as an SSH connection issue. It's always intermittent. I don't believe this is a rate limit, but wouldn't rule it out. I'll share an example log when it happens again. |

|

Hmm. GitHub definitely has some sort of rate limit on HTTPS (although the available information is mixed, as they say they don't limit?), and GoCD's polling nature can be a problem for that, but I'm not 100% sure what it looks like for SSH, or whether it's different on enterprise plans. Regardless, it's useful to know, as there are probably multiple layers at which this could be addressed (a specific material type, something more abstract for all materials, that type of thing). Is this a standard OOTB GoCD git material or some extra plugin (e.g. for PRs)? |

|

This is a standard OOTB git material with an SSH URL (git@github.com/...). I believe these are repos in an enterprise plan. |

|

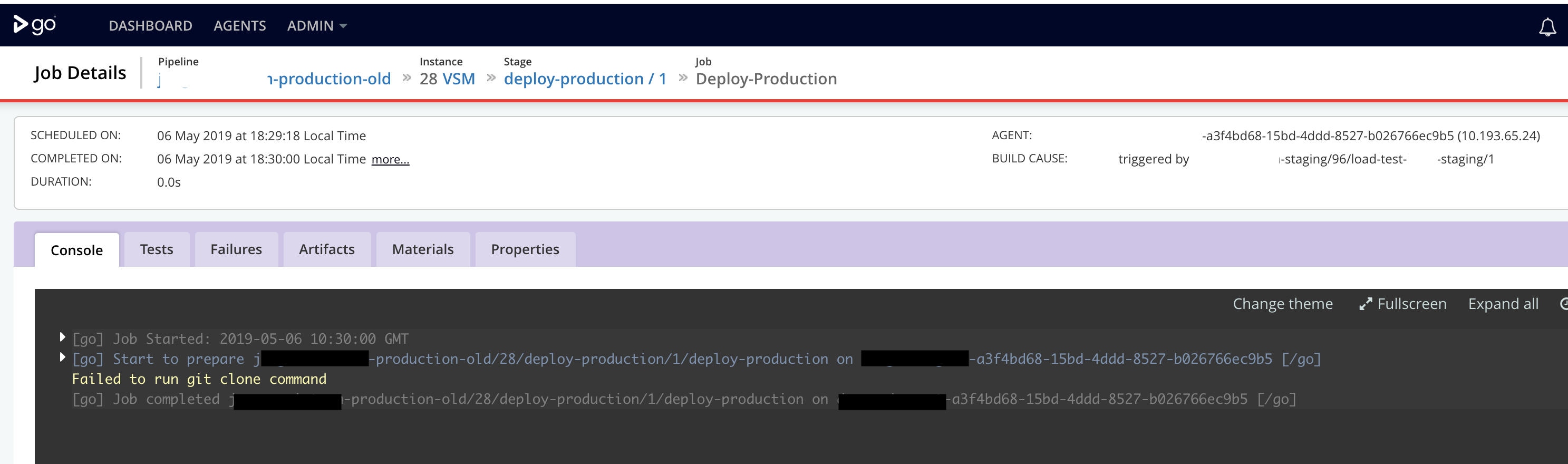

Just ran into this during a test: |

|

Yeah, that looks like a general networking issue, where anything done at the GoCD level will be a bit of a fudge at best. But perhaps a necessary one in some setups. If you see these types of errors exclusively on agents rather than in the server logs, you might want to explore anything different about the agent environment or specific to elastic agents (although the server can typically recover due to its polling nature, assuming you're not using webhooks). |

|

I've seen this on the server too with config repos hitting this error, but in those cases the polling gets us to an eventual good state. |

Issue Type

Summary

I'm using GitLab as my main Git repository, and sometimes connectivity issues cause the git fetch to fail. Can GoCD have an automatic retry mechanism to re-trigger the material fetching task?

Any other info

I'm thinking of something like Concourse's `attempts` step modifier: https://concourse-ci.org/attempts-step-modifier.html
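To make the request concrete, here is a rough sketch of the behavior being asked for: run a fetch command, and if it fails, wait and try again up to a fixed number of attempts. This is not GoCD code or a GoCD API. The function name `run_with_retries` and its parameters (`attempts`, `wait_seconds`, `runner`, `sleep`) are all hypothetical, chosen only to illustrate a retry count plus a wait between retries.

```python
import subprocess
import time
from typing import Callable, Sequence


def run_with_retries(
    command: Sequence[str],
    attempts: int = 3,
    wait_seconds: float = 5.0,
    runner: Callable[..., subprocess.CompletedProcess] = subprocess.run,
    sleep: Callable[[float], None] = time.sleep,
) -> subprocess.CompletedProcess:
    """Run `command`, retrying on a non-zero exit code.

    Hypothetical helper for illustration only: `attempts` is the total
    number of tries and `wait_seconds` is the pause between tries.
    `runner` and `sleep` are injectable so the logic can be tested
    without touching the network.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    for attempt in range(1, attempts + 1):
        result = runner(list(command), capture_output=True, text=True)
        if result.returncode == 0:
            return result  # success, stop retrying
        if attempt < attempts:
            sleep(wait_seconds)  # pause before the next attempt

    # Every attempt failed: hand back the last result so the caller
    # can inspect its exit code and stderr.
    return result
```

A caller could wrap a fetch as `run_with_retries(["git", "fetch", "origin"], attempts=3, wait_seconds=10)` and fail the task only when the returned `returncode` is still non-zero. A fixed wait keeps the sketch simple; a backoff that grows between attempts may suit rate-limited sources better.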
