C++ gRPC server implementation spawns uncontrolled number of threads #25145

Comments

|

Esun and/or Vijay, can you please take a look at this? What I find particularly interesting is that they continued to have this problem even when switching to the C++ async API, which I didn't think used the ThreadManager at all, so I'm not sure what's going on here. |

|

Maybe this will help a bit. From what I understood while browsing the source code: in src/cpp/server/server_builder.cc:220, in the method BuildAndStart, one initial completion queue is always created and passed to the Server class. In the Server constructor the check sync_server_cqs_ != nullptr then passes, and sync_req_mgrs_ gets initialized with a single SyncRequestThreadManager instance. Later, Start(grpc::ServerCompletionQueue** cqs, size_t num_cqs) calls SyncRequestThreadManager's Start method, which in turn calls Initialize of the ThreadManager class. |

|

Can you mention your platform? I believe that I've seen a similar concern about this before which was on the Mac but want to know your platform for the most detailed information. |

|

It is CentOS 7. |

|

I had the same problem |

|

Is there any news on this? We are also facing this issue :( |

|

@lieroz the number of arenas is limited, so what is the problem with memory? Here is the relevant passage from the article you referred to: Number of arena’s: In above example, we saw main thread contains main arena and thread 1 contains its own thread arena. So can there be a one to one mapping between threads and arena, irrespective of number of threads? Certainly not. An insane application can contain more number of threads (than number of cores), in such a case, having one arena per thread becomes bit expensive and useless. Hence for this reason, application’s arena limit is based on number of cores present in the system. For 32 bit systems: |

|

The number is limited, but a single thread can use quite a bit of its arena, and usage will be roughly equal across threads here, because each request does almost the same work. For example, if each thread consumes 20 MB, the total number of arenas is 56 CPUs (in this case) * 8 = 448, and 448 * 20 MB comes to about 9 GB of RAM that the allocator marks as used. So memory is reused, but the footprint is large. That is not the case with tcmalloc, because it has a thread-local cache for small objects and an intermediate list for reusable memory. And in most cases the allocator doesn't give memory back until the process exits.
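The estimate above can be reproduced with a few lines of arithmetic. This is only a sketch of the worst case described in the comment: the 8-arenas-per-core cap is glibc's default on 64-bit systems, while the 20 MB per arena and the 56 cores are the figures assumed in the comment, not measurements. The helper names are made up for illustration.

```cpp
#include <cstdint>

// glibc's default arena cap on 64-bit systems is 8 arenas per core.
constexpr std::uint64_t kArenasPerCore64Bit = 8;

// Upper bound on the number of malloc arenas for a given core count.
constexpr std::uint64_t MaxArenas(std::uint64_t cores) {
  return cores * kArenasPerCore64Bit;
}

// Worst-case footprint in MB, assuming every arena grows to per_arena_mb.
// The per-arena size is an assumption taken from the comment above.
constexpr std::uint64_t WorstCaseFootprintMb(std::uint64_t cores,
                                             std::uint64_t per_arena_mb) {
  return MaxArenas(cores) * per_arena_mb;
}

// 56 cores * 8 = 448 arenas; 448 * 20 MB = 8960 MB, i.e. roughly 9 GB.
static_assert(MaxArenas(56) == 448, "arena cap for 56 cores");
static_assert(WorstCaseFootprintMb(56, 20) == 8960, "worst-case MB");
```

The bound is reached only if enough threads exist to populate every arena, which is what heavy thread creation makes likely.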

Is your feature request related to a problem? Please describe.

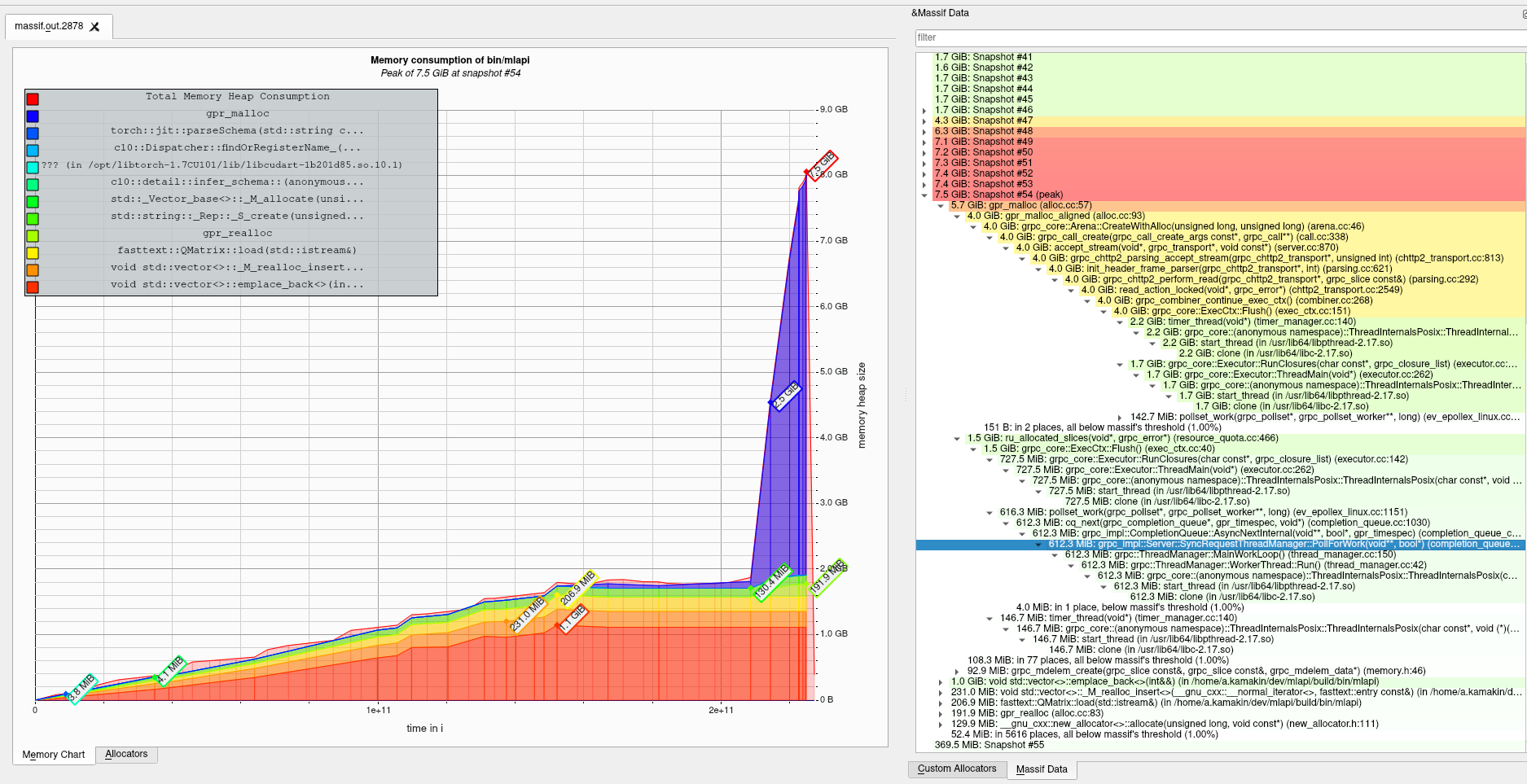

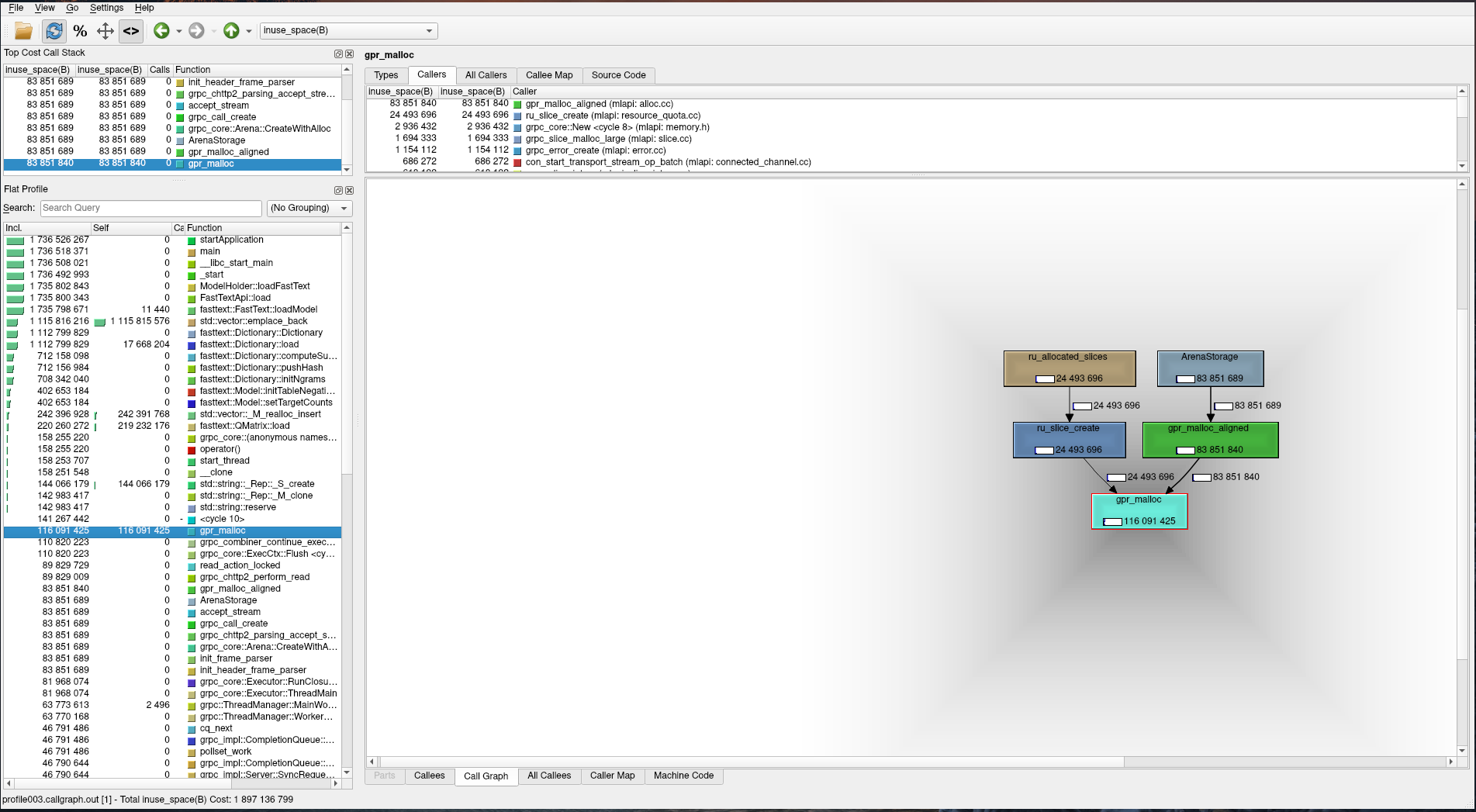

We tried using gRPC in one of our projects, a simple microservice that computes math models and returns the result to the user. We found that while running, the service can spawn a lot of threads, most of which are waiting on gpr_cv_wait. After further investigation, we traced this to the current ThreadManager implementation, specifically the function MainWorkLoop, which contains a comment about this behavior. Uncontrolled thread creation causes huge memory consumption when using the standard glibc allocator:

We decided to profile the app with gperftools, which requires tcmalloc to make memory measurements, and accidentally found that the memory footprint is several times lower than with the standard Linux allocator:

Here is a post that addresses this problem: https://habr.com/en/company/mailru/blog/534414/.

Describe the solution you'd like

As of now, SetResourceQuota doesn't limit thread spawning, only the number of threads active at a given moment. How about having ThreadManager manage an underlying thread pool, with the user passing an integer to cap the number of threads? This way some problems will be resolved:

Describe alternatives you've considered

Heavy thread creation can't be avoided with the current implementation; tcmalloc can be used to work around the memory consumption problem.
