https://s3-us-west-1.amazonaws.com/udacity-drlnd/P2/Crawler/Crawler_Windows_x86_64.zip

This repository contains an implementation of reinforcement learning based on:

* Proximal Policy Optimization (PPO) with a critic network as a baseline and Generalized Advantage Estimation (GAE)
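As a rough illustration of how Generalized Advantage Estimation combines rewards with the critic's value estimates, here is a minimal pure-Python sketch. The function name `compute_gae`, the default values of `gamma` and `lam`, and the assumption of a single trajectory with no terminal state in the middle are illustrative choices, not taken from this repository's code.

```python
def compute_gae(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Return (advantages, returns) for one trajectory.

    rewards[t] and values[t] are the reward and critic estimate at step t;
    last_value is the critic estimate for the state after the final step.
    Assumes no episode termination inside the trajectory (illustrative only).
    """
    advantages = [0.0] * len(rewards)
    gae = 0.0
    next_value = last_value
    # Walk backwards so each step can reuse the accumulated advantage.
    for t in reversed(range(len(rewards))):
        # One-step temporal-difference error using the critic as baseline.
        delta = rewards[t] + gamma * next_value - values[t]
        # Exponentially weighted sum of TD errors.
        gae = delta + gamma * lam * gae
        advantages[t] = gae
        next_value = values[t]
    # Value targets for the critic: advantage plus baseline.
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns
```

With `lam=0` this reduces to the one-step TD error, and with `lam=1` it becomes the discounted return minus the baseline.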

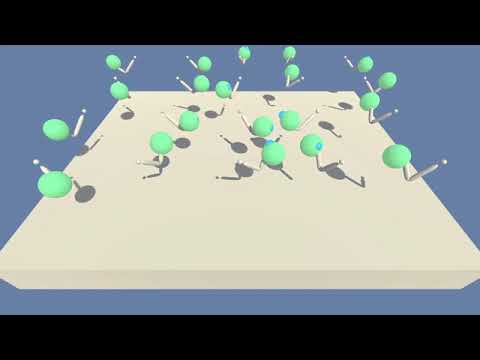

The agent being trained is a creature with 4 arms and 4 forearms. The agent's rewards are:

* +0.03 times body velocity in the goal direction.

* +0.01 times body direction alignment with goal direction.
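The two reward terms above can be sketched as follows. The environment computes the reward internally, so this is only an illustration: the function names, the use of 3D vectors, and the reading of "alignment" as a dot product between unit vectors are all assumptions.

```python
import math


def _normalize(vec):
    """Scale a vector to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def crawler_reward(velocity, facing, goal_direction):
    """Hypothetical per-step reward built from the two terms in the list above."""
    goal = _normalize(goal_direction)
    # Component of the body velocity along the goal direction.
    speed_toward_goal = _dot(velocity, goal)
    # Alignment in [-1, 1] between where the body faces and the goal direction.
    alignment = _dot(_normalize(facing), goal)
    return 0.03 * speed_toward_goal + 0.01 * alignment
```

For example, a body moving at 2 units per step straight toward the goal while facing it would receive 0.03 * 2 + 0.01 * 1 under these assumptions.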

This environment is similar to the Unity Crawler environment.

The action space is continuous: each action is a vector of 20 values in [-1.0, +1.0], corresponding to target rotations for joints.
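A Gaussian policy can produce values outside this range, so one common approach is to clip each sampled component before sending it to the environment. The sketch below assumes simple clipping and a single shared standard deviation; the names `ACTION_SIZE` and `sample_action` are hypothetical, and the repository's network may bound its outputs differently.

```python
import random

ACTION_SIZE = 20  # one target rotation per action component


def sample_action(means, std, rng=random):
    """Sample each component from N(mean, std) and clip it to [-1.0, +1.0]."""
    return [max(-1.0, min(1.0, rng.gauss(m, std))) for m in means]


# Usage: a zero-mean policy with a deliberately wide standard deviation.
action = sample_action([0.0] * ACTION_SIZE, std=5.0, rng=random.Random(0))
```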

The environment is considered solved when the average score of the 20 agents reaches +30 over 100 consecutive episodes.
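One way to track this criterion during training is a fixed-size window of episode scores. The sketch below assumes the criterion means "the per-episode score is the mean over all agents, and the mean of the last 100 such scores is at least +30"; the class name `SolvedTracker` is illustrative and not part of the repository.

```python
from collections import deque


class SolvedTracker:
    """Keep the most recent episode scores and test the solving criterion."""

    def __init__(self, target=30.0, window=100):
        self.target = target
        self.scores = deque(maxlen=window)  # old scores drop out automatically

    def add_episode(self, agent_scores):
        """Record one episode (one score per agent) and report whether solved."""
        self.scores.append(sum(agent_scores) / len(agent_scores))
        return self.is_solved()

    def is_solved(self):
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and sum(self.scores) / len(self.scores) >= self.target
```

The window must be full before the check can succeed, so a few lucky early episodes do not count as solving the environment.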

A video of a trained agent can be viewed by clicking on the image below.

- folder agents: contains the implementation of
  - a Gaussian Actor Critic network for the PPO
  - an implementation of a Proximal Policy Optimization

- folder weights:
  - weights of the Gaussian Actor Critic Network that solved this environment with PPO

- Notebooks
  - jupyter notebook Continuous_Control-PPO-LeakyReLU.ipynb: run this notebook to train the agents using PPO
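The PPO code in the agents folder is not reproduced here. As a rough illustration of the clipped surrogate objective that PPO maximizes, the following pure-Python sketch works on lists of log-probabilities and advantages; the function name and the `epsilon=0.2` default are assumptions, not values read from this repository.

```python
import math


def clipped_surrogate(new_log_probs, old_log_probs, advantages, epsilon=0.2):
    """Mean PPO clipped objective over a batch of (state, action) samples."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio between the updated policy and the data-collecting policy.
        ratio = math.exp(new_lp - old_lp)
        # Restrict the ratio to [1 - epsilon, 1 + epsilon].
        clipped = max(1.0 - epsilon, min(1.0 + epsilon, ratio))
        # Taking the minimum gives a pessimistic bound on the improvement.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

In training, the negative of this quantity would typically be minimized together with a critic loss, using an automatic differentiation library rather than plain floats.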

To run the code, follow these steps:

- Create a new environment:

  - Linux or Mac:

        conda create --name drlnd python=3.6
        source activate drlnd

  - Windows:

        conda create --name drlnd python=3.6
        activate drlnd

- Perform a minimal install of OpenAI gym

  - If using Windows,

    - download swig for Windows and add it to the Windows PATH
    - install Microsoft Visual C++ Build Tools

  - then run these commands

        pip install gym
        pip install gym[classic_control]
        pip install gym[box2d]

- Install the dependencies under the folder python/

      cd python
      pip install .

- Fix an issue in PyTorch 0.4.1 so that gradients can backpropagate through `torch.distributions.Normal` to its standard deviation parameter

  - change line 69 of Anaconda3\envs\drlnd\Lib\site-packages\torch\distributions\utils.py

        # old line
        # tensor_idxs = [i for i in range(len(values)) if values[i].__class__.__name__ == 'Tensor']
        # new line
        tensor_idxs = [i for i in range(len(values)) if isinstance(values[i], torch.Tensor)]

- Create an IPython kernel for the `drlnd` environment

      python -m ipykernel install --user --name drlnd --display-name "drlnd"

- Download the Unity Environment (thanks to Udacity) which matches your operating system

- Start jupyter notebook from the root of this repository

      jupyter notebook

- Once started, change the kernel through the menu Kernel>Change kernel>drlnd

- If necessary, inside the ipynb files, change the path to the Unity environment appropriately
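The `utils.py` change in the steps above replaces a class-name comparison with an `isinstance` check. A plausible reason this matters is that a name comparison skips subclasses (in PyTorch, `torch.nn.Parameter` is a subclass of `torch.Tensor`), so a learnable standard deviation would not be recognized as a tensor. The sketch below shows the difference with stand-in classes so it runs without PyTorch; the classes are hypothetical placeholders, not the real library types.

```python
class Tensor:
    """Stand-in for torch.Tensor (illustrative only)."""


class Parameter(Tensor):
    """Stand-in for a learnable parameter, which subclasses the tensor type."""


values = [Tensor(), 0.5, Parameter()]

# Old check: compares the exact class name, so the subclass instance is missed.
by_name = [i for i in range(len(values))
           if values[i].__class__.__name__ == 'Tensor']

# New check: isinstance also accepts subclasses.
by_type = [i for i in range(len(values)) if isinstance(values[i], Tensor)]
```

Here `by_name` only contains index 0, while `by_type` contains indices 0 and 2.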