'bert-serving-start' is not recognized as an internal or external command #194

Comments

Did you try adding

@sailormoon2016 Please refer to step 1 of #99 (comment) for the latest

FYI, this is fixed in #212; the new feature has been available since 1.7.7. Please run pip install -U bert-serving-server bert-serving-client to update.

@A6Matrix Your version is too old, please do

Create a start-bert-as-service.py with the following code, then run it with the following command:

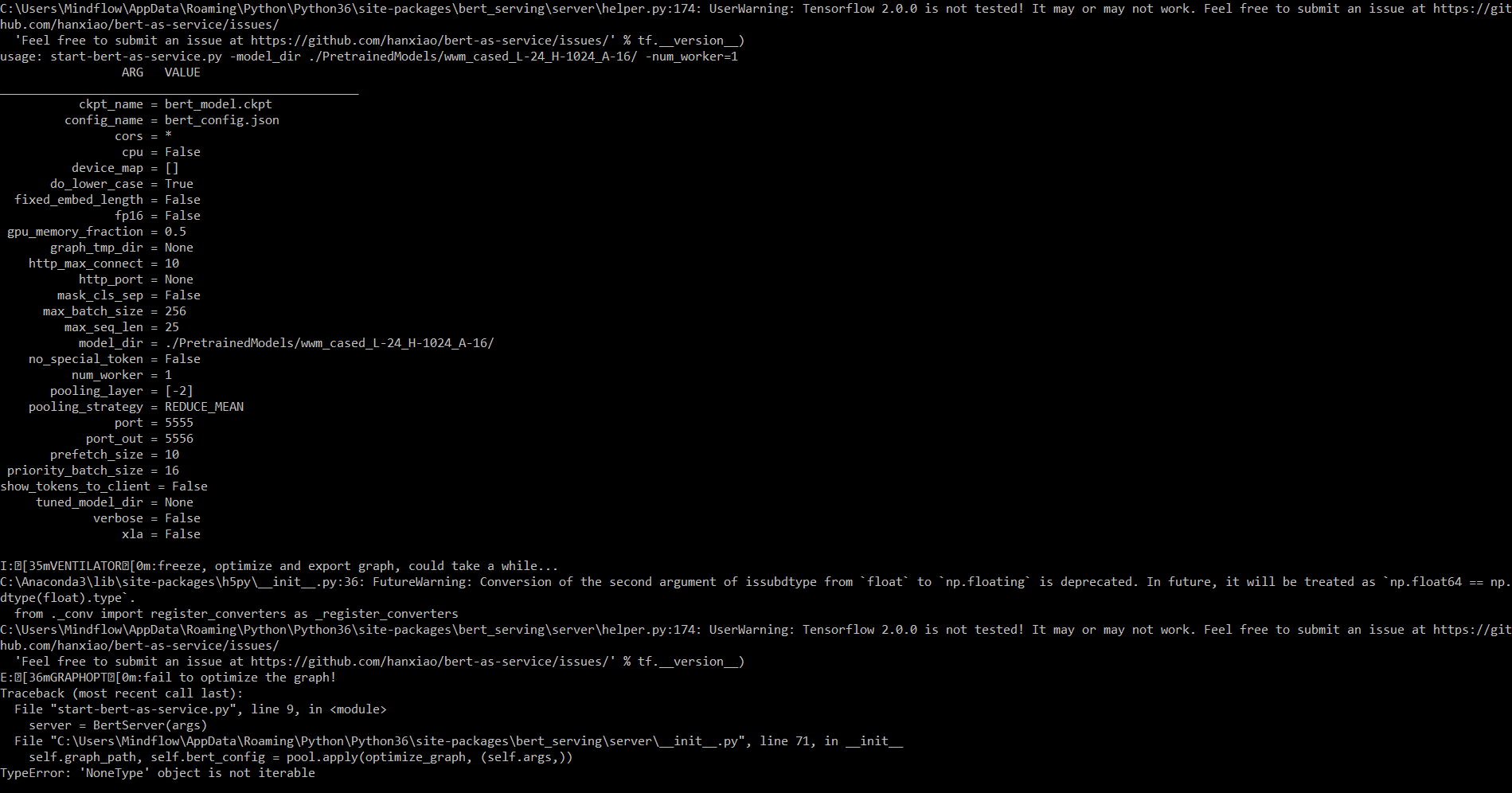
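The code in the screenshot is not reproduced in the text of this thread. As a rough sketch only, and not necessarily what was posted: assuming the installed bert-serving-server exposes get_args_parser in bert_serving.server.helper and BertServer in bert_serving.server (the project README describes these for starting the server from Python, but older releases may differ), a start-bert-as-service.py could look something like this. The helper build_server_args is a made-up name for illustration; the two flags are the ones from the original command.

```python
import sys


def build_server_args(model_dir, num_worker=4):
    """Hypothetical helper: build the same argument list bert-serving-start would get."""
    return ["-model_dir", model_dir, "-num_worker", str(num_worker)]


def main(argv):
    # Import lazily so a missing package produces a readable hint, not a traceback.
    try:
        from bert_serving.server import BertServer
        from bert_serving.server.helper import get_args_parser
    except ImportError:
        print("bert-serving-server is not importable from: " + sys.executable)
        return 1

    # Assumption: get_args_parser() returns an argparse parser for the server flags.
    args = get_args_parser().parse_args(argv)
    server = BertServer(args)
    server.start()  # start the server thread
    server.join()   # block until the server stops
    return 0


if __name__ == "__main__":
    main(sys.argv[1:])
```

Under these assumptions it would be run as python start-bert-as-service.py -model_dir /tmp/english_L-12_H-768_A-12/ -num_worker=4, which avoids the bert-serving-start launcher entirely. From another Python program, main(build_server_args("/tmp/english_L-12_H-768_A-12/")) would pass the same flags.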

@monk1337 I am getting this error when using this solution on Windows 10 with Python 3.6 and TensorFlow 2.0.0.

Hi,

@askaydevs You should be really ashamed of yourself!

@hanxiao My sincere apologies for that earlier comment. I was just starting with BERT, there were not enough resources to learn about it at the time, and I was an amateur. After numerous unsuccessful tries I got frustrated and wrote that; I didn't mean any of it. I totally understand that you are doing an exceptional job of keeping things clean and bug-free. Again, I am sorry @hanxiao.

@askaydevs You may delete your comment and hope the internet forgets what you did. I hope you learn to respect other people's open source work and take responsibility for what you did.

Did you find a solution? I am facing the same issue.

I have the same error.

Hi,

This is a very silly question...

I have Python 3.6.6 and TensorFlow 1.12.0, and I am doing everything in a conda environment on Windows 10.

I pip installed bert-serving-server/client and it shows

Successfully installed GPUtil-1.4.0 bert-serving-client-1.7.2 bert-serving-server-1.7.2 pyzmq-17.1.2

but when I run the following as CLI

bert-serving-start -model_dir /tmp/english_L-12_H-768_A-12/ -num_worker=4

it says

'bert-serving-start' is not recognized as an internal or external command

I found that the bert-serving library is located under C:\Users\Name\Anaconda\Lib\site-packages. So I tried to run bert-serving-start again under these three folders:

However, the result is the same: not recognized. Can anyone help me?
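One way to narrow this down is to check where pip puts console scripts for the active interpreter and whether that folder is on PATH. On Windows the launcher normally lands in the environment's Scripts folder rather than in Lib\site-packages. Here is a small diagnostic sketch using only the standard library; the function name locate_console_script is made up for illustration.

```python
import os
import shutil
import sysconfig


def _norm(path):
    """Normalize a path so PATH entries can be compared reliably."""
    return os.path.normcase(os.path.normpath(path))


def locate_console_script(name):
    """Report where console scripts are installed and whether PATH resolves `name`."""
    scripts_dir = sysconfig.get_path("scripts")
    path_entries = [_norm(p) for p in os.environ.get("PATH", "").split(os.pathsep) if p]
    return {
        "scripts_dir": scripts_dir,                           # where pip places launchers
        "scripts_dir_on_path": _norm(scripts_dir) in path_entries,
        "found_on_path": shutil.which(name),                  # None if the shell cannot find it
    }


if __name__ == "__main__":
    for key, value in locate_console_script("bert-serving-start").items():
        print(f"{key}: {value}")
```

If found_on_path is None but the launcher exists in scripts_dir, then running it by its full path, or adding that folder to PATH, should get past the "not recognized" message. If the launcher is missing from scripts_dir as well, the installed version may not ship it at all.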
