-

Notifications

You must be signed in to change notification settings - Fork 1

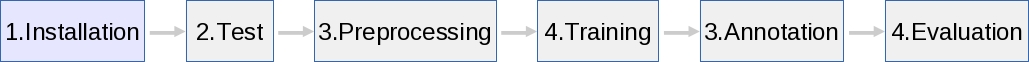

Step by Step Tutorial

This guide explains how to set up and use EOP. It offers step-by-step instructions to download, install, configure, and run the EOP code and its related support resources and tools. It is intended for users who want to use the software platform as it is; developers who want to contribute to the code should follow the instructions in the Contribution Guide: https://github.com/hltfbk/Excitement-Open-Platform/wiki/Contribution-Guide

The EOP library contains several components for pre-processing data (Linguistic Analysis Pipeline, LAP) and for annotating textual entailment relations (Entailment Decision Algorithm, EDA). Components for pre-processing annotate data with useful information (e.g. lemma, part-of-speech), whereas textual entailment components allow for training new models and then using them to annotate entailment relations in new data. Each of these facilities is accessible via the EOP Application Program Interface (API). In addition, a Command Line Interface (CLI) is provided for convenience of experiments and training.

In the rest of this guide we give examples for both of these options. The Java code examples follow the material used in the Fall School class for Textual Entailment in Heidelberg; see their web site for further information and code updates: http://fallschool2013.cl.uni-heidelberg.de/

We assume some familiarity with Unix-like command-line shells such as bash on Linux, and we take Ubuntu 12.04 LTS as the reference operating system (http://www.ubuntu-it.org/download/).

Questions about EOP should be directed to the EOP mailing lists.

- [Downloading and Installing EOP](#Downloading and Installing EOP)

- [Hello World!](#Hello World!)

- [Pre-processing data sets](#Pre-processing data sets)

- [Creating new models](#Creating new models)

- [Annotating text/hypothesis pairs](#Annotating text/hypothesis pairs)

- [Evaluating the results](#Evaluating the results)

Appendix A: [Hello World! code and additional examples](Hello World! code and additional examples)

Appendix B: [TreeTagger Installation](Step-by-Step, TreeTagger-Installation)

Appendix C: [Utility Code](Step-by-Step, Utility-Code)

Appendix D: BIUTEE Installation

Installation, main steps:

- [Installing the needed tools and environment](#Installing needed tools and environments)

- [Obtaining the EOP code and installing it](#Obtaining the EOP code and installing it)

EOP is written for Java 1.7+. If your JVM is older than 1.7, you should upgrade. The same applies to Apache Maven: EOP requires version 3.0.x, and older versions might not work. Whether you need to install tools like Maven itself or environments like Eclipse depends on the modality used to run EOP: API vs CLI. Below we report the list of the tools required to run EOP; a note after the name of a tool (i.e. API or CLI) lets users know if that tool is necessary for the chosen EOP modality.

This is the list of the tools and environments needed by EOP:

- Java 1.7

- Eclipse + m2e Maven plugin (Juno or later)

- Maven tool (3.x or later)
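To check the Java requirement on a given machine, a small program can read the JVM's specification version. The sketch below is not part of EOP; the class name `JavaVersionCheck` and its helper methods are hypothetical, and it only checks the lower bound (1.7) stated above, not whether EOP 1.2.0 runs on much newer JVMs.

```java
// Sketch (not part of EOP): report whether the running JVM meets the Java 1.7+ requirement.
final class JavaVersionCheck {

    // Converts a "java.specification.version" value such as "1.7", "1.8", "9" or "17"
    // into its major number (7, 8, 9, 17).
    static int majorVersion(String specVersion) {
        String[] parts = specVersion.trim().split("\\.");
        int first = Integer.parseInt(parts[0]);
        if (first == 1 && parts.length > 1) {
            // Old naming scheme: "1.7" means Java 7.
            return Integer.parseInt(parts[1]);
        }
        return first;
    }

    // True when the given specification version is at least Java 1.7.
    static boolean meetsRequirement(String specVersion) {
        return majorVersion(specVersion) >= 7;
    }

    public static void main(String[] args) {
        String spec = System.getProperty("java.specification.version");
        System.out.println("Java specification version: " + spec);
        System.out.println(meetsRequirement(spec)
                ? "Java version is 1.7 or later."
                : "Java version is older than 1.7; please upgrade.");
    }
}
```

Running `java -version` and `mvn -version` in a shell gives the same information for Java and Maven without writing any code.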

Installing Java 1.7: Java is a programming language and computing platform first released by Sun Microsystems in 1995. Many applications, EOP included, will not work unless you have Java installed. Regardless of your operating system, you will need to install a Java virtual machine (JVM). Since we want to use Eclipse for Java development, we need to install the Java Development Kit (JDK), which includes, among other useful things, the source code for the standard Java libraries. There are several sources for the JDK. Here are some of the more common/popular ones (listed alphabetically):

There are two ways of installing Java in Ubuntu:

- Using Ubuntu Software Center

- Download one of the following Java distributions and then install manually:

Installing Eclipse: Installing Eclipse is relatively easy, but does involve a few steps and software from at least two different sources. Eclipse is a Java-based application and, as such, requires a Java runtime environment (JRE) in order to run.

There are two ways of installing Eclipse IDE in Ubuntu:

- Using Ubuntu Software Center

- Download Eclipse IDE package and then install manually (http://www.eclipse.org/juno/)

In addition to Eclipse we need to install the m2e Maven plugin. Apache Maven is a software project management and comprehension tool based on the concept of a project object model (POM); we use Maven to manage the EOP project's build and test. The goal of the m2e project is to provide first-class Apache Maven support in the Eclipse IDE, making it easier to edit Maven's pom.xml, run a build from the IDE and much more. Any “Eclipse for Java” release later than “Juno” already has this plug-in pre-installed, whereas with Eclipse Juno you need to install m2e separately: http://www.eclipse.org/m2e/

Installing Maven tool: The Maven tool (3.x or later) is required to use EOP, which relies on it for building and testing the project. You can download and install it from its web site: http://maven.apache.org/

Using EOP via the API involves using the EOP Maven artifacts, whereas the CLI can be used by downloading the Java distribution. Follow the instructions in the Installation page to install one of the two distributions: the Java distribution or the Maven artifacts distribution.

The Hello World example shows how to pre-process a data set and how to create a new model to be used for annotating a text/hypothesis (T/H) pair; running it is also a good way to check that the EOP installation works correctly.

The main steps to annotate a text/hypothesis(T/H) pair are:

- Pre-processing a data set by calling a LAP.

- Creating a new model for the selected EDA

- Using the model to annotate a T/H pair.

We present this example via both the API and the CLI.

This section shows, with minimal code, how to annotate entailment relations via the API. We will use the Eclipse IDE to write and run the code.

1. Open Eclipse IDE.

2. In Eclipse, navigate to File > New > Other… in order to start the project creation wizard.

3. Scroll to the Maven folder, open it, and choose Maven Project. Then choose Next.

4. Choose to Create a simple project. For the purposes of this tutorial, we will choose the simple project. This will create a basic, Maven-enabled Java project. Leave other options as is, and click Next.

5. Now, you will need to enter information regarding the Maven Project you are about to create. Visit the Maven documentation for a more in-depth look at the Maven Coordinates (http://maven.apache.org/pom.html#Maven_Coordinates). Basically, the Group Id should correspond to your organization name, and the Artifact Id should correspond to the project’s name. The version is up to your discretion as is the packing and other fields. Fill out the appropriate information, and click Finish.

6. At this point your project should have been created. We will place our Java code in /src/main/java.

7. Open the pom.xml file to view the structure Maven has set up. In this file, you can see the information entered in Step 5. Now we have to specify in the pom.xml file the EOP dependencies and the repository where they are hosted.

8. Still in Eclipse, navigate to src/main/java > New > Class in order to start writing your code. You can name the class HelloWorld.

9. Add the following code to the newly created Java class; to keep the example simple we will not create a package for our Java class but will use the default one.

import java.io.File;

import org.apache.uima.jcas.JCas;

import eu.excitementproject.eop.common.DecisionLabel;

import eu.excitementproject.eop.common.EDABasic;

import eu.excitementproject.eop.common.EDAException;

import eu.excitementproject.eop.common.TEDecision;

import eu.excitementproject.eop.common.configuration.CommonConfig;

import eu.excitementproject.eop.common.exception.ComponentException;

import eu.excitementproject.eop.common.exception.ConfigurationException;

import eu.excitementproject.eop.core.ClassificationTEDecision;

import eu.excitementproject.eop.common.utilities.configuration.ImplCommonConfig;

import eu.excitementproject.eop.core.MaxEntClassificationEDA;

import eu.excitementproject.eop.lap.LAPAccess;

import eu.excitementproject.eop.lap.LAPException;

import eu.excitementproject.eop.lap.PlatformCASProber;

import eu.excitementproject.eop.lap.dkpro.OpenNLPTaggerEN;

//

//

// Hello World!

//

// A simple, minimal code that runs one LAP & EDA to check if the EOP installation is Okay.

//

//

public class HelloWorld {

//

// Data Set pre-processing by using a LAP (i.e. OpenNLP tagger)

// @param dataSet the data set to be pre-processed (e.g. RTE-3)

// @param dirOut the directory containing the .xmi files produced by pre-processing the data set

//

private void preprocessingDataSet(String dataSet, String dirOut) {

LAPAccess ttLap = null;

try {

//ttLap = new TreeTaggerEN(); //this requires to have TreeTagger already installed

ttLap = new OpenNLPTaggerEN(); //the OpenNLP tagger

} catch (LAPException e) {

System.err.println("Unable to initialize LAP: " + e.getMessage());

System.exit(1);

}

//the English RTE data set

File f = new File(dataSet);

//the output directory; the directory has to exist prior to starting.

File outputDir = new File(dirOut);

try {

ttLap.processRawInputFormat(f, outputDir);

} catch (LAPException e) {

System.err.println("Failed to process EOP RTE data format: " + e.getMessage());

System.exit(1);

}

}

//

// Train the EDA (i.e. MaxEntClassificationEDA) on the pre-processed Data Set

// @param configurationFile the EDA configuration file

//

public void creatingNewModels(String configurationFile) {

CommonConfig config = null;

try {

//This is the configuration file to be used with the selected EDA (i.e. MaxEntClassification EDA).

File configFile = new File(configurationFile);

config = new ImplCommonConfig(configFile);

}

catch (ConfigurationException e) {

System.err.println("Failed to read configuration file: "+ e.getMessage());

System.exit(1);

}

try {

@SuppressWarnings("rawtypes")

EDABasic eda = null;

//creating an instance of MaxEntClassification EDA

eda = new MaxEntClassificationEDA();

//EDA initialization and start training

eda.startTraining(config); // This *MAY* take some time.

eda.shutdown(); //shutdown

} catch (EDAException e) {

System.err.println("Failed to do the training: "+ e.getMessage());

System.exit(1);

} catch (ConfigurationException e) {

System.err.println("Failed to do the training: "+ e.getMessage());

System.exit(1);

} catch (ComponentException e) {

System.err.println("Failed to do the training: "+ e.getMessage());

System.exit(1);

}

}

//

// Annotating a single T/H pair by using a pre-trained model

// @param configurationFile the EDA configuration file

// @param T the text

// @param H the hypothesis

//

public void annotatingSingle_T_H_Pair(String configurationFile, String T, String H) {

//1) Pre-processing T/H pair by using the LAP (i.e. OpenNLP)

JCas annotated_THpair = null;

try {

LAPAccess lap = new OpenNLPTaggerEN(); // make a new OpenNLP based LAP

annotated_THpair = lap.generateSingleTHPairCAS(T, H); // ask it to process this T-H.

} catch (LAPException e) {

System.err.print(e.getMessage());

System.exit(1);

}

//

//2) Initialize an EDA with a configuration (& corresponding model). You have to check that the

// model path in the configuration file points to the directory where the model is, e.g.:

// home/user_name/eop-resources-1.2.0/model/MaxEntClassificationEDAModel_Base+OpenNLP_EN

//

System.out.println("Initializing the EDA.");

EDABasic<ClassificationTEDecision> eda = null;

try {

// TIE (i.e. MaxEntClassificationEDA): a simple configuration with no knowledge resource.

File configFile = new File(configurationFile);

CommonConfig config = new ImplCommonConfig(configFile);

eda = new MaxEntClassificationEDA();

eda.initialize(config);

} catch (Exception e) {

System.err.print(e.getMessage());

System.exit(1);

}

//

//

// 3) Now, one input data is ready, and the EDA is also ready.

// Call the EDA.

//

System.out.println("Calling the EDA for decision.");

TEDecision decision = null; // the generic type that holds Entailment decision result

try {

decision = eda.process(annotated_THpair);

} catch (Exception e) {

System.err.print(e.getMessage());

System.exit(1);

}

//

System.out.println("Run complete: EDA returned decision: " + decision.getDecision().toString());

//

}

//

// This method shows how to annotate a data set of multiple T/H pairs by using an EDA with

// one existing (already trained) model. The data set has to be pre-processed as

// described in the Preprocessing class.

// @param configurationFile the EDA configuration file

// @param preprocessedDataSet the directory containing the .xmi files

//

public void annotatingDataSet(String configurationFile, String preprocessedDataSet) {

//EDA configuration

@SuppressWarnings("rawtypes")

EDABasic eda = null;

try {

//creating an instance of MaxEntClassification EDA

eda = new MaxEntClassificationEDA();

//this is the configuration file we want to use with MaxEntClassification EDA

File configFile = new File(configurationFile);

//Loading the configuration file

CommonConfig config = new ImplCommonConfig(configFile);

//EDA initialization

eda.initialize(config);

}

catch (EDAException e)

{

System.err.println("Failed to init the EDA: "+ e.getMessage());

System.exit(1);

}

catch (ConfigurationException e)

{

System.err.println("Failed to init the EDA: "+ e.getMessage());

System.exit(1);

}

catch (ComponentException e)

{

System.err.println("Failed to init the EDA: "+ e.getMessage());

System.exit(1);

}

//The directory where the pre-processed data set (i.e. the serialized annotated files) is

File outputDir = new File(preprocessedDataSet);

//Annotating the data set

try {

//loading the pre-processed files

for (File xmi : (outputDir.listFiles())) {

if (!xmi.getName().endsWith(".xmi")) {

continue;

}

// The annotated pair has been added into the UIMA CAS.

JCas cas = PlatformCASProber.probeXmi(xmi, null);

// Call process() method to get Entailment decision.

TEDecision decision = eda.process(cas);

// Entailment decisions are represented with "TEDecision" class.

DecisionLabel r = decision.getDecision();

System.out.println("The result is: " + r.toString());

}

} catch (EDAException e) {

System.err.print("EDA reported exception" + e.getMessage());

System.exit(1);

} catch (ComponentException e) {

System.err.print("EDA reported exception" + e.getMessage());

System.exit(1);

}

//shutdown

eda.shutdown();

}

public static void main( String[] args ) {

System.out.println("Hello World!");

HelloWorld helloWorld = new HelloWorld();

//Pre-processing the Data Set to be used for training the EDA

//The pre-processed data set will be put in /tmp/EN/dev/; make sure that this directory exists

helloWorld.preprocessingDataSet("/home/user_name/eop-resources-1.2.0/data-set/English_dev.xml", "/tmp/EN/dev/");

//Training the EDA on the pre-processed Data Set

String configurationFile = "/home/user_name/eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml";

helloWorld.creatingNewModels(configurationFile);

//Annotating a T/H pair by using the created model

//The text

String T = "The students had 15 hours of lectures and practice sessions on the topic of Textual Entailment.";

//The hypothesis

String H = "The students must have learned quite a lot about Textual Entailment.";

helloWorld.annotatingSingle_T_H_Pair(configurationFile, T, H);

//Annotating a data set of multiple T/H pairs by using the created model

//The pre-processed data set will be put in /tmp/EN/test/; make sure that this directory exists

//helloWorld.preprocessingDataSet("/home/user_name/eop-resources-1.2.0/data-set/English_test.xml", "/tmp/EN/test/");

//helloWorld.annotatingDataSet(configurationFile, "/tmp/EN/test/");

}

}

Before going on, make sure that the configurationFile variable in the code above contains the right path to the configuration file on your file system, i.e. /home/user_name/eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml

and that the model file name written in that configuration file points to the right model to be used, i.e. /home/user_name/eop-resources-1.2.0/model/MaxEntClassificationEDAModel_Base+OpenNLP_EN.

- Navigate to HelloWorld.java > Run As > Java Application to run the code.
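The path requirements mentioned above (a readable configuration file, an existing output directory such as /tmp/EN/dev/) can be verified before launching HelloWorld. The following is a minimal sketch using only the standard library; `PreflightCheck` and `check` are hypothetical names and not part of EOP, and the paths are the example paths used in this guide.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Sketch (not part of EOP): verify the paths HelloWorld relies on before running it.
final class PreflightCheck {

    // Returns a list of human-readable problems; an empty list means the paths look usable.
    static List<String> check(String configurationFile, String outputDir) {
        List<String> problems = new ArrayList<String>();

        File config = new File(configurationFile);
        if (!config.isFile() || !config.canRead()) {
            problems.add("Configuration file not readable: " + config.getPath());
        }

        File dir = new File(outputDir);
        if (!dir.isDirectory()) {
            problems.add("Output directory does not exist: " + dir.getPath());
        } else if (!dir.canWrite()) {
            problems.add("Output directory is not writable: " + dir.getPath());
        }
        return problems;
    }

    public static void main(String[] args) {
        // Example paths from this guide; replace user_name with your own account name.
        List<String> problems = check(
                "/home/user_name/eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml",
                "/tmp/EN/dev/");
        for (String problem : problems) {
            System.err.println(problem);
        }
        System.out.println(problems.isEmpty()
                ? "Paths look fine."
                : "Fix the problems above before running HelloWorld.");
    }
}
```

This sketch does not inspect the model path written inside the configuration file; that still has to be checked by opening the file.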

Another way to run the previous example is to use a standalone command-line Java class that serves as a single entry point to the main EOP functionalities. This class, located in the util package, can call both the linguistic analysis pipeline to pre-process the data to be annotated and the selected entailment algorithm (EDA); it is the simplest way to use EOP.

Go into the EOP-1.2.0 directory, i.e.

> cd ~/Excitement-Open-Platform-1.2.0/target/EOP-1.2.0/

and call the EOPRunner class with the needed parameters as shown in the example below. (For the example, make sure /tmp/EN/dev directory is available for writing pre-processed training data, if you have not done so already.)

A complete list of the EOPRunner parameters can be seen here.

> java -Djava.ext.dirs=../EOP-1.2.0/ eu.excitementproject.eop.util.runner.EOPRunner

-config ./eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml

-train

-trainFile ./eop-resources-1.2.0/data-set/English_dev.xml

-trainDir /tmp/EN/dev

-test

-text "The students had 15 hours of lectures and practice sessions on the topic of Textual Entailment."

-hypothesis "The students must have learned quite a lot about Textual Entailment."

-output ./eop-resources-1.2.0/results/

where:

- config the configuration containing the linguistic analysis pipeline and the EDA.

- train indicates that the system will train first, and produce a model that will be used to annotate the test pair(s).

- trainFile the RTE-formatted file containing training T-H pairs. If the training data was already preprocessed, then this parameter is not necessary, but the trainDir must be provided either in the configuration file or as a parameter.

- trainDir the directory to output the preprocessed pairs. If specified in the configuration file, this parameter is not necessary.

- test the selected EDA has to make its annotation by using a pre-trained model.

- text the text.

- hypothesis the hypothesis.

- output the directory where the result file (_results.xml) containing the prediction has to be stored.
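When the command is assembled by a script or another program, it can help to build the argument list explicitly, so that values containing spaces (the text and the hypothesis) stay single arguments. The sketch below only builds and prints the argument list shown above; `EopRunnerCommand` and its method are hypothetical helper names, not part of EOP, and the flags are exactly those listed in this section.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch (not part of EOP): assemble the EOPRunner command line from this section.
final class EopRunnerCommand {

    // Builds the "train, then annotate one T/H pair" invocation as a list of arguments.
    static List<String> trainAndTestSinglePair(String config, String trainFile, String trainDir,
                                               String text, String hypothesis, String output) {
        List<String> cmd = new ArrayList<String>(Arrays.asList(
                "java", "-Djava.ext.dirs=../EOP-1.2.0/",
                "eu.excitementproject.eop.util.runner.EOPRunner"));
        cmd.addAll(Arrays.asList("-config", config));
        cmd.add("-train");
        cmd.addAll(Arrays.asList("-trainFile", trainFile));
        cmd.addAll(Arrays.asList("-trainDir", trainDir));
        cmd.add("-test");
        cmd.addAll(Arrays.asList("-text", text));             // one argument, even with spaces
        cmd.addAll(Arrays.asList("-hypothesis", hypothesis)); // one argument, even with spaces
        cmd.addAll(Arrays.asList("-output", output));
        return cmd;
    }

    public static void main(String[] args) {
        List<String> cmd = trainAndTestSinglePair(
                "./eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml",
                "./eop-resources-1.2.0/data-set/English_dev.xml",
                "/tmp/EN/dev",
                "The students had 15 hours of lectures and practice sessions on the topic of Textual Entailment.",
                "The students must have learned quite a lot about Textual Entailment.",
                "./eop-resources-1.2.0/results/");
        // Print one argument per line so that argument boundaries are visible.
        for (String arg : cmd) {
            System.out.println(arg);
        }
    }
}
```

The resulting list could be passed to `java.lang.ProcessBuilder` from the EOP-1.2.0 directory; that step is left out here because it depends on the local installation.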

All textual entailment algorithms require some level of pre-processing, mostly linguistic annotation such as tokenization, sentence splitting, Part-of-Speech tagging, and dependency parsing.

EOP standardizes linguistic analysis modules in two ways:

- it has one common output data format

- it defines a common interface that all pipelines need to implement.

- Common data format: EOP has borrowed a powerful abstraction called CAS (Common Analysis Structure). CAS is a data structure used in Apache UIMA. Its type system is strong enough to represent any annotation and metadata. CAS is the output data format of the EOP linguistic analysis pipelines (LAPs): LAPs output their results in a CAS, which can be seen as a big container with a type system that defines many annotations.

- Common access interfaces: In EOP, all pipelines are provided with the same set of “access methods”. Thus, regardless of your choice of pipeline (e.g. a tagger-only pipeline, or a tagging, parsing, and NER pipeline), they all respond to the same set of common methods.
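The two ideas above, one container format and one access interface shared by all pipelines, can be illustrated with a toy example. This is deliberately not the EOP or UIMA API: `ToyCas`, `ToyPipeline` and the two pipeline classes are hypothetical stand-ins, meant only to show why calling code does not change when the pipeline does.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy stand-in for a CAS: the text plus named annotation layers.
final class ToyCas {
    final String text;
    final Map<String, List<String>> layers = new LinkedHashMap<String, List<String>>();

    ToyCas(String text) {
        this.text = text;
    }
}

// Toy stand-in for the common access interface: every pipeline answers the same call.
interface ToyPipeline {
    ToyCas annotate(String text);
}

// A pipeline that only adds a "token" layer (whitespace tokenization).
final class TokenOnlyPipeline implements ToyPipeline {
    public ToyCas annotate(String text) {
        ToyCas cas = new ToyCas(text);
        List<String> tokens = new ArrayList<String>();
        for (String token : text.trim().split("\\s+")) {
            tokens.add(token);
        }
        cas.layers.put("token", tokens);
        return cas;
    }
}

// A richer pipeline: same output container, one more layer ("length" of each token).
final class TokenAndLengthPipeline implements ToyPipeline {
    public ToyCas annotate(String text) {
        ToyCas cas = new TokenOnlyPipeline().annotate(text);
        List<String> lengths = new ArrayList<String>();
        for (String token : cas.layers.get("token")) {
            lengths.add(String.valueOf(token.length()));
        }
        cas.layers.put("length", lengths);
        return cas;
    }
}

final class ToyPipelineDemo {
    public static void main(String[] args) {
        // The calling code is identical for both pipelines.
        ToyPipeline[] pipelines = { new TokenOnlyPipeline(), new TokenAndLengthPipeline() };
        for (ToyPipeline pipeline : pipelines) {
            ToyCas cas = pipeline.annotate("EOP annotates text");
            System.out.println(pipeline.getClass().getSimpleName() + " -> " + cas.layers.keySet());
        }
    }
}
```

In EOP the corresponding roles are played by the UIMA CAS and by the LAPAccess interface used in the examples of this guide.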

In this example we will use OpenNLP tagger to pre-process all the T/H pairs of the RTE-3 English training data set. The annotation will include tokenization, sentence splitting and Part-Of-Speech tagging.

LAPs implement the interface LAPAccess and the first step to pre-process data consists in initializing a LAP; in the current example we will see how to instantiate and use a LAP for the English language based on OpenNLP tagger:

LAPAccess ttLap = null;

try {

//ttLap = new TreeTaggerEN(); //this requires to have TreeTagger already installed

ttLap = new OpenNLPTaggerEN(); //the OpenNLP tagger

} catch (LAPException e) {

System.err.println("Unable to initialize LAP: " + e.getMessage());

System.exit(1);

}

Then we need to prepare the input file containing the T/H pairs and output directory. In the example the input file is the English RTE-3 data set that is distributed as part of the eop-resources file while the output directory is the tmp directory:

//the English RTE data set

File f = new File("/home/user_name/eop-resources-1.2.0/data-set/English_dev.xml");

//the output directory

File outputDir = new File("/tmp/EN/dev/");

After that we call the method for file processing. This could take some time given that the RTE data set contains 800 T/H pairs. Each pair is first annotated as a UIMA CAS and then serialized into one XMI file.

try {

ttLap.processRawInputFormat(f, outputDir);

} catch (LAPException e) {

System.err.println("Failed to process EOP RTE data format: " + e.getMessage());

System.exit(1);

}
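A quick way to confirm that pre-processing produced output is to count the .xmi files in the output directory. The sketch below uses only the standard library; `XmiCounter` is a hypothetical helper name, not part of EOP. Assuming one XMI file per pair, as described above, the 800-pair data set should yield 800 files.

```java
import java.io.File;
import java.io.FilenameFilter;

// Sketch (not part of EOP): count the serialized .xmi files produced by a LAP run.
final class XmiCounter {

    // Returns the number of *.xmi files in dir, or -1 if dir is not a readable directory.
    static int countXmi(File dir) {
        File[] files = dir.listFiles(new FilenameFilter() {
            public boolean accept(File parent, String name) {
                return name.endsWith(".xmi");
            }
        });
        return files == null ? -1 : files.length;
    }

    public static void main(String[] args) {
        // Output directory used in this guide's example.
        File outputDir = new File("/tmp/EN/dev/");
        int count = countXmi(outputDir);
        if (count < 0) {
            System.err.println("Not a readable directory: " + outputDir.getPath());
        } else {
            System.out.println("Found " + count + " .xmi file(s) in " + outputDir.getPath());
        }
    }
}
```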

Go into the EOP-1.2.0 directory, i.e.

> cd ~/Excitement-Open-Platform-1.2.0/target/EOP-1.2.0/

and call EOPRunner:

> java -Djava.ext.dirs=../EOP-1.2.0/ eu.excitementproject.eop.util.runner.EOPRunner

-config ./eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml

-trainFile ./eop-resources-1.2.0/data-set/English_dev.xml

where:

- config is the configuration file specifying the linguistic analysis pipeline, the EDA and the pre-trained model to be used.

- trainFile is the RTE-formatted data to be pre-processed

The pre-processed files are stored in /tmp/EN/dev/ as set in the configuration file.

This step shows how to train an EDA on a new data set and build a new model. The MaxEntClassification EDA will be used for this purpose.

Training also requires the configuration file. We will load a configuration file first:

CommonConfig config = null;

try {

//This is the configuration file to be used with the selected EDA (i.e. MaxEntClassification EDA).

File configFile = new File("/home/user_name/eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml");

config = new ImplCommonConfig(configFile);

}

catch (ConfigurationException e) {

System.err.println("Failed to read configuration file: "+ e.getMessage());

System.exit(1);

}

Then we can start training:

try {

EDABasic eda = null;

//creating an instance of MaxEntClassification EDA

eda = new MaxEntClassificationEDA();

//EDA initialization and start training

eda.startTraining(config); // This *MAY* take some time.

} catch (Exception e) {

System.err.println("Failed to do the training: "+ e.getMessage());

System.exit(1);

}

Go into the EOP-1.2.0 directory, i.e.

> cd ~/Excitement-Open-Platform-1.2.0/target/EOP-1.2.0/

and run the following command:

> java -Djava.ext.dirs=../EOP-1.2.0/ eu.excitementproject.eop.util.runner.EOPRunner

-config ./eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml

-train

-trainFile ./eop-resources-1.2.0/data-set/English_dev.xml

-trainDir /tmp/EN/dev/

where:

- config is the configuration containing the linguistic analysis pipeline and the EDA.

- train indicates that the system will perform training

- trainFile is the file with RTE-formatted data for training. If the data was already preprocessed, this parameter is not necessary, but make sure the directory with the preprocessed data is provided either in the configuration file, or through the trainDir parameter.

- trainDir the directory for LAP's output. If this is already specified in the configuration file, no need to pass it as an argument here.

This section covers two cases:

- annotating a single T/H pair.

- annotating the English RTE-3 data set containing multiple T/H pairs.

This step involves annotating a single T/H pair with an entailment relation by using a pre-trained model.

First we need to create an instance of EDA (i.e. MaxEntClassification EDA) and then we need to initialize it:

EDABasic eda = null;

try {

//creating an instance of MaxEntClassification EDA

eda = new MaxEntClassificationEDA();

//this is the configuration file we want to use with MaxEntClassification EDA

File configFile = new File("/home/user_name/eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml");

//Loading the configuration file

CommonConfig config = new ImplCommonConfig(configFile);

//EDA initialization

eda.initialize(config);

} catch (Exception e) {

System.err.println("Failed to init the EDA: "+ e.getMessage());

System.exit(1);

}

The EDA is ready now. Let's prepare one T-H pair and use it. Note that (as written in the configuration file), the current configuration uses OpenNLP tagger for preprocessing the data set.

//T

String text = "The sale was made to pay Yukos' US$ 27.5 billion tax bill, Yuganskneftegaz was originally sold for US$ 9.4 billion to a little known company Baikalfinansgroup which was later bought by the Russian state-owned oil company Rosneft.";

//H

String hypothesis = "Baikalfinansgroup was sold to Rosneft.";

JCas thPair = null;

try {

//this requires to have TreeTagger already installed

//LAPAccess lap = new TreeTaggerEN();

//OpenNLP tagger

LAPAccess lap = new OpenNLPTaggerEN();

// T/H pair preprocessed by LAP

thPair = lap.generateSingleTHPairCAS(text, hypothesis);

} catch (LAPException e) {

System.err.print("LAP annotation failed:" + e.getMessage());

System.exit(1);

}

The annotated pair has been added into the UIMA CAS. Call process() method to get Entailment decision. Entailment decisions are represented with TEDecision class.

TEDecision decision = null;

try {

decision = eda.process(thPair);

} catch (Exception e) {

System.err.print("EDA reported exception" + e.getMessage());

System.exit(1);

}

And let's look at the result.

DecisionLabel r = decision.getDecision();

System.out.println("The result is: " + r.toString());

We can call process() as many times as we like. Once all is done, we should call the shutdown method.

//shutdown

eda.shutdown();

Go into the EOP-1.2.0 directory, i.e.

> cd ~/Excitement-Open-Platform-1.2.0/target/EOP-1.2.0/

EOPRunner calls the specified LAP to pre-process the data and puts the produced files into the directory specified in the EDA's configuration file (e.g. by the testDir parameter). Before running EOPRunner, check that this directory (e.g. /tmp/EN/test/) exists and, if it does not, create it (e.g. mkdir -p /tmp/EN/test/). After that you can call the EOPRunner class with the following parameters:

> java -Djava.ext.dirs=../EOP-1.2.0/ eu.excitementproject.eop.util.runner.EOPRunner

-config ./eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml

-test

-text "The sale was made to pay Yukos' US$ 27.5 billion tax bill, Yuganskneftegaz was originally sold for US$ 9.4 billion to a little known company Baikalfinansgroup which was later bought by the Russian state-owned oil company Rosneft."

-hypothesis "Baikalfinansgroup was sold to Rosneft."

-output ./eop-resources-1.2.0/results/

where:

- config is the configuration file specifying the linguistic analysis pipeline, the EDA and the pre-trained model to be used to annotate the data.

- test means that the selected EDA has to make its annotation by using a pre-trained model.

- text the text portion of the text/hypothesis entailment pair.

- hypothesis the hypothesis portion of the text/hypothesis entailment pair.

- output is the directory where the result file (_results.xml) containing the prediction has to be stored.

Unlike the previous step, where a single T/H pair was annotated, this step involves using a pre-trained model to annotate multiple T/H pairs that are stored in a file. In this case too we will work with the MaxEntClassification EDA.

As usual we first need to create an instance of an EDA (i.e. MaxEntClassification EDA) and then we have to initialize it:

EDABasic eda = null;

try {

eda = new MaxEntClassificationEDA();

//the configuration file to be used with the selected EDA

File configFile = new File("/home/user_name/eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml");

//Loading the configuration file

CommonConfig config = new ImplCommonConfig(configFile);

//EDA initialization

eda.initialize(config);

} catch (Exception e) {

System.err.println("Failed to init the EDA: "+ e.getMessage());

System.exit(1);

}

The EDA is ready now. Let's pre-process the T/H pairs as described in the previous section:

//the output directory where to store the serialized annotated files;

//it is declared outside the try block because it is used again when annotating

File outputDir = new File("/tmp/EN/test/");

try {

//this requires to have TreeTagger already installed

//LAPAccess ttLap = new TreeTaggerEN();

//the OpenNLP tagger

LAPAccess ttLap = new OpenNLPTaggerEN();

//the input file containing the T/H pairs (i.e. RTE-3 English test data set)

File f = new File("/home/user_name/eop-resources-1.2.0/data-set/English_test.xml");

//create the output directory if it does not exist yet

if (!outputDir.exists()) {

outputDir.mkdirs();

}

//file pre-processing

ttLap.processRawInputFormat(f, outputDir);

} catch (LAPException e) {

System.err.println("Test data annotation failed: " + e.getMessage());

System.exit(1);

}

Now we can annotate the pre-processed test data set:

try {

//loading the pre-processed files

for (File xmi : (outputDir.listFiles())) {

if (!xmi.getName().endsWith(".xmi")) {

continue;

}

// The annotated pair has been added into the UIMA CAS.

JCas cas = PlatformCASProber.probeXmi(xmi, null);

// Call process() method to get Entailment decision.

TEDecision decision = eda.process(cas);

// Entailment decisions are represented with "TEDecision" class.

DecisionLabel r = decision.getDecision();

System.out.println("The result is: " + r.toString());

}

} catch (Exception e) {

System.err.print("EDA reported exception" + e.getMessage());

System.exit(1);

}

The last thing to do is to call the shutdown() method:

//shutdown

eda.shutdown();

Go into the EOP-1.2.0 directory, i.e.

> cd ~/Excitement-Open-Platform-1.2.0/target/EOP-1.2.0/

Pre-process the test data set file as you did for the training one and then run the following command:

> java -Djava.ext.dirs=../EOP-1.2.0/ eu.excitementproject.eop.util.runner.EOPRunner

-config ./eop-resources-1.2.0/configuration-files/MaxEntClassificationEDA_Base+OpenNLP_EN.xml

-test

-testDir /tmp/EN/test/

-output ./eop-resources-1.2.0/results/

where:

- config provides the configuration file containing the linguistic analysis pipeline, the EDA and the pre-trained model that have to be used to annotate the data.

- test means that the selected EDA has to perform its annotation by using a pre-trained model.

- testDir is the directory for writing the analysed (LAP processed) T/H pairs (optional if it is provided in the configuration file).

- output is the directory where the result file (_results.xml) containing the prediction has to be stored.

The annotations produced by EDAs can be evaluated in terms of accuracy, precision, recall, and F1 measure. EOP provides a scorer for producing those measures.

EDAScorer is the class implementing the scorer for evaluating annotation results. The annotated data set needs to be in a particular format to be evaluated by EDAScorer. Here is an example of such a file:

747 NONENTAILMENT NonEntailment 0.2258241758241779

795 ENTAILMENT Entailment 0.5741758241758221

60 ENTAILMENT Entailment 0.24084249084248882

546 NONENTAILMENT NonEntailment 0.15309690309690516

.....................

.....................

509 ENTAILMENT Entailment 0.07417582417582214

The first column is the T/H pair id, the second is the gold-standard annotation, the third is the annotation produced by the EDA, and the last is the confidence of that annotation. To produce this file we need to slightly revise the code in Section 5. In the rest of this section we assume that the data set has already been processed by the LAP.
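To make the column layout concrete, here is a small sketch that recomputes the four measures from lines in this format. It is not the EDAScorer implementation: the class name ResultsFileMetrics is hypothetical, ENTAILMENT is assumed to be the positive class, and lines whose gold label is neither ENTAILMENT nor NONENTAILMENT are simply skipped. The sample lines in main are made up for illustration.

```java
import java.util.Arrays;
import java.util.List;

/** Illustrative only: scores lines of the form "id gold prediction confidence". */
final class ResultsFileMetrics {

    /** Returns {accuracy, precision, recall, f1}, treating ENTAILMENT as the positive class. */
    static double[] score(List<String> lines) {
        int tp = 0, fp = 0, fn = 0, correct = 0, total = 0;
        for (String line : lines) {
            String[] cols = line.trim().split("\\s+");
            if (cols.length < 4) {
                continue; // blank or malformed line
            }
            boolean goldPositive = cols[1].equalsIgnoreCase("ENTAILMENT");
            if (!goldPositive && !cols[1].equalsIgnoreCase("NONENTAILMENT")) {
                continue; // assumption: pairs without a usable gold label are not scored
            }
            boolean predictedPositive = cols[2].equalsIgnoreCase("ENTAILMENT");
            total++;
            if (goldPositive == predictedPositive) correct++;
            if (goldPositive && predictedPositive) tp++;
            if (!goldPositive && predictedPositive) fp++;
            if (goldPositive && !predictedPositive) fn++;
        }
        double accuracy = total == 0 ? 0.0 : (double) correct / total;
        double precision = (tp + fp) == 0 ? 0.0 : (double) tp / (tp + fp);
        double recall = (tp + fn) == 0 ? 0.0 : (double) tp / (tp + fn);
        double f1 = (precision + recall) == 0.0 ? 0.0 : 2 * precision * recall / (precision + recall);
        return new double[] {accuracy, precision, recall, f1};
    }

    public static void main(String[] args) {
        // Made-up sample lines, tab-separated as in the results file.
        List<String> sample = Arrays.asList(
                "1\tENTAILMENT\tEntailment\t0.9",
                "2\tENTAILMENT\tNonEntailment\t0.6",
                "3\tNONENTAILMENT\tEntailment\t0.7",
                "4\tNONENTAILMENT\tNonEntailment\t0.8");
        double[] m = score(sample);
        System.out.println("accuracy=" + m[0] + " precision=" + m[1]
                + " recall=" + m[2] + " f1=" + m[3]);
    }
}
```

A check of this kind can be handy for verifying that a results file is well formed before passing it to EDAScorer, whose own output remains the reference.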

The first thing to do when one wants to use EDAScorer is to add an additional EOP dependency into your project. Open the pom.xml file of your project and add this dependency:

<dependency>

<groupId>eu.excitementproject</groupId>

<artifactId>util</artifactId>

<version>1.2.0</version>

</dependency>

Then you need to import these classes into your Java code too:

import java.io.BufferedWriter;

import java.io.FileOutputStream;

import java.io.OutputStreamWriter;

import java.io.Writer;

import org.apache.uima.cas.FSIterator;

import org.apache.uima.jcas.cas.TOP;

import eu.excitement.type.entailment.Pair;

import eu.excitementproject.eop.util.eval.EDAScorer;

Next we process the data set to be annotated and save the resulting annotations to a file, because EDAScorer evaluates annotated T/H pairs from files. To do that we reuse the code from Section 5 with one change: the annotations are written to a file instead of the standard output.

Writer writer = null;

try {

//this is the file needed by EDAScorer containing the EDA prediction

//as well as the gold standard annotation.

writer = new BufferedWriter(new OutputStreamWriter(

new FileOutputStream("/home/user_name/eop-resources-1.2.0/results/myResults.txt"),"utf-8"));

//loading the pre-processed files

for (File xmi : (outputDir.listFiles())) {

if (!xmi.getName().endsWith(".xmi")) {

continue;

}

// The annotated pair has been added into the CAS.

JCas cas = PlatformCASProber.probeXmi(xmi, null);

// Call process() method to get Entailment decision.

// Entailment decisions are represented with "TEDecision" class.

TEDecision decision = eda.process(cas);

// the file containing the annotated T/H pairs needed by EDAScorer is produced.

// getGoldLabel (i.e. returns the gold standard annotation) and

// getPairID (i.e. returns the pairID of the T-H pair) are methods whose

// implementation is given below.

writer.write(getPairID(cas) + "\t" + getGoldLabel(cas) + "\t" + decision.getDecision().toString() + "\t" + decision.getConfidence() + "\n");

System.out.println("The result is: " + decision.getDecision().toString());

}

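} catch (Exception e) {

System.err.println("Error: " + e.getMessage());

} finally {

//close the writer even if an exception was thrown above

if (writer != null) {

try { writer.close(); } catch (Exception ignored) { }

}

}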
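As a more compact alternative for the writing step, here is a minimal sketch assuming Java 7 or later. The class ResultLineWriter and its two methods are hypothetical and not part of EOP; the values passed to formatLine stand in for what getPairID, getGoldLabel, and the TEDecision returned by eda.process provide in the code above.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;

/** Hypothetical helper, not part of EOP: builds and writes result lines. */
final class ResultLineWriter {

    /** Builds one tab-separated line: pair id, gold label, EDA decision, confidence. */
    static String formatLine(String pairId, String gold, String decision, double confidence) {
        return pairId + "\t" + gold + "\t" + decision + "\t" + confidence;
    }

    /** Writes the lines as UTF-8; try-with-resources closes the writer even if a write fails. */
    static void writeLines(Path target, List<String> lines) throws IOException {
        try (BufferedWriter writer = Files.newBufferedWriter(target, StandardCharsets.UTF_8)) {
            for (String line : lines) {
                writer.write(line);
                writer.write("\n");
            }
        }
    }
}
```

Collecting the lines first and writing them in one call keeps the file handling separate from the EDA loop, at the cost of holding all lines in memory, which should be acceptable for data sets of the size used in this tutorial.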

Here is the implementation of the getPairID and getGoldLabel methods used in the code above; you have to add them to your Java code:

/**

* @param aCas

* @return returns the pairID of the T-H pair

*/

String getPairID(JCas aCas) {

FSIterator<TOP> pairIter = aCas.getJFSIndexRepository().getAllIndexedFS(Pair.type);

Pair p = (Pair) pairIter.next();

return p.getPairID();

}

/**

* @param aCas

* @return if the T-H pair contains the gold answer, returns it; otherwise, returns null

*/

String getGoldLabel(JCas aCas) {

FSIterator<TOP> pairIter = aCas.getJFSIndexRepository().getAllIndexedFS(Pair.type);

Pair p = (Pair) pairIter.next();

if (null == p.getGoldAnswer() || p.getGoldAnswer().equals("") || p.getGoldAnswer().equals("ABSTAIN")) {

return null;

} else {

return p.getGoldAnswer();

}

}

Finally EDAScorer can be called.

EDAScorer.score(new File("/home/user_name/eop-resources-1.2.0/results/myResults.txt"), "/home/user_name/eop-resources-1.2.0/results/myResults.eval");

where myResults.eval will contain the scorer output consisting of the accuracy and F1 measure for the annotated data set while myResults.txt is the file containing the annotated T/H pairs produced with the code above.

Go into the EOP-1.2.0 directory, i.e.

> cd ~/Excitement-Open-Platform-1.2.0/target/EOP-1.2.0/

and call the EOPRunner class with the needed parameters as reported below, i.e.

> java -Djava.ext.dirs=../EOP-1.2.0/ eu.excitementproject.eop.util.runner.EOPRunner

-score

-results <ResultsFile>

where:

- score signals to the system to perform scoring

- ResultsFile is the file containing the annotated entailment relations.

The results are saved in a file with the same name as ResultsFile but with the extension .xml.

The Step by Step tutorial is finished. You can now go through the steps again with a different EDA, such as the EditDistance EDA, or move to Appendix B to install TreeTagger, which allows you to use additional EDA configurations (the EOP Results Archive contains a number of configurations that you can download and use). Finally, to exploit the full potential of EOP, Appendix D will teach you how to use the BIUTEE EDA.
