GDFS is a method for dynamically selecting features based on conditional mutual information. It was developed in the paper "Learning to Maximize Mutual Information for Dynamic Feature Selection."

In the dynamic feature selection (DFS) problem, you handle examples at test time as follows: you begin with no features, progressively select features until a pre-specified budget is reached, and then make predictions given the available information. The problem can be addressed in many different ways; GDFS greedily selects features according to their conditional mutual information (CMI) with the response variable. CMI is difficult to calculate, so GDFS approximates these selections using a custom training approach.
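The test-time procedure described above can be sketched as a simple loop. This is a minimal illustration in plain Python, not the package's API: `dynamic_selection`, `toy_policy`, and `toy_predictor` are hypothetical names, and the toy functions stand in for the learned networks.

```python
def dynamic_selection(x, policy, predictor, budget):
    """Select up to `budget` features one at a time, then predict from them."""
    mask = [0] * len(x)  # 1 marks a feature that has already been selected
    order = []
    for _ in range(min(budget, len(x))):
        # Unselected features are hidden from the policy (zeroed out here).
        observed = [xi * m for xi, m in zip(x, mask)]
        scores = policy(observed, mask)
        # Greedy step: take the best-scoring feature not yet selected.
        candidates = [i for i, m in enumerate(mask) if m == 0]
        best = max(candidates, key=lambda i: scores[i])
        mask[best] = 1
        order.append(best)
    observed = [xi * m for xi, m in zip(x, mask)]
    return order, predictor(observed, mask)


# Hypothetical stand-ins for the learned policy and predictor.
def toy_policy(observed, mask):
    return [0.1, 0.9, 0.5, 0.3]  # fixed scores; a real policy depends on its inputs


def toy_predictor(observed, mask):
    return sum(observed)


order, prediction = dynamic_selection(
    [1.0, 2.0, 3.0, 4.0], toy_policy, toy_predictor, budget=2
)
print(order, prediction)
```

In GDFS the policy's scores are trained to approximate the ranking induced by CMI, so the fixed scores above are only a placeholder for that learned behavior.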

You can get started by cloning the repository, and then pip installing the package in your Python environment as follows:

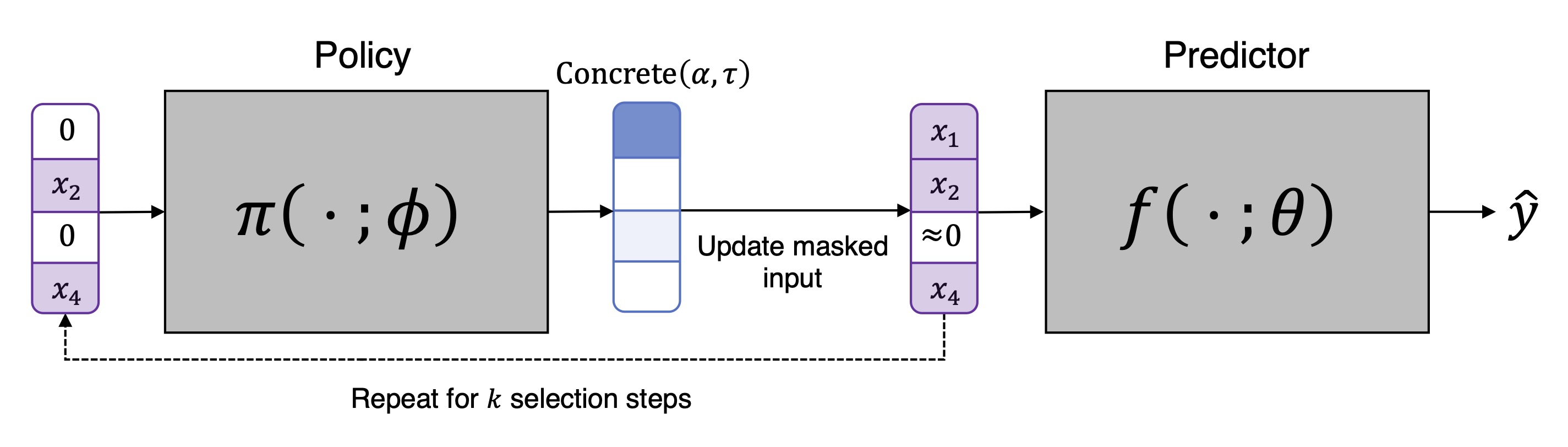

pip install .GDFS involves learning two separate networks: one responsible for making predictions (the predictor) and one responsible for making selections (the policy). Both networks receive a subset of features as their input, and the policy outputs probabilities for selecting each feature. During training, we sample a random feature using the Concrete distribution, but at test time we simply use the argmax.

The diagram below illustrates the training approach:
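The difference between the training-time sample and the test-time argmax can be sketched in a few lines. This is an illustration of the Concrete (Gumbel-softmax) relaxation in general, not the package's implementation; the function names and the temperature value are assumptions chosen for the example.

```python
import math
import random


def concrete_sample(probs, temperature, rng):
    """Draw a relaxed one-hot sample from the Concrete distribution."""
    logits = []
    for p in probs:
        # Clamp to avoid log(0) for both the probability and the uniform draw.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))  # Gumbel(0, 1) noise
        logits.append((math.log(max(p, 1e-12)) + gumbel) / temperature)
    # Numerically stable softmax over the perturbed, temperature-scaled logits.
    top = max(logits)
    exps = [math.exp(value - top) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hard_selection(probs):
    """Test-time selection: a one-hot vector at the most probable feature."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return [1.0 if i == best else 0.0 for i in range(len(probs))]


rng = random.Random(0)
probs = [0.2, 0.5, 0.3]  # example policy output over three features
soft = concrete_sample(probs, temperature=0.5, rng=rng)  # differentiable surrogate
hard = hard_selection(probs)  # deterministic choice
```

The relaxed sample is a point on the probability simplex rather than a strict one-hot vector, which is what lets gradients flow through the selection step; lower temperatures push it closer to one-hot.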

For usage examples, see the following:

- Spam: a notebook showing an example with the UCI spam detection dataset.

- MNIST: a notebook example with MNIST (digit recognition).

- MNIST-Grouped: shows how to use feature grouping, which is necessary for some datasets (e.g., when using one-hot encoded categorical features).

- The experiments directory contains code to reproduce experiments from the original paper.
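To see why feature grouping matters for one-hot encoded data, consider the sketch below. It is a hypothetical illustration and not the package's API (the MNIST-Grouped notebook shows the real usage): the policy selects among groups, and each selected group reveals all of its member columns at once.

```python
def expand_group_mask(group_mask, groups, num_features):
    """Turn a mask over groups into a mask over individual feature columns."""
    feature_mask = [0] * num_features
    for selected, members in zip(group_mask, groups):
        if selected:
            for j in members:
                feature_mask[j] = 1
    return feature_mask


# Hypothetical layout: column 0 is numeric, columns 1-3 one-hot encode a
# three-level categorical variable, and column 4 is numeric.
groups = [[0], [1, 2, 3], [4]]

# Selecting the categorical variable reveals all three of its columns together.
feature_mask = expand_group_mask([0, 1, 0], groups, num_features=5)
print(feature_mask)
```

Without grouping, selecting a single one-hot column would spend one unit of budget while revealing only part of the underlying categorical variable.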

Authors:

- Ian Covert (icovert@cs.washington.edu)

- Wei Qiu

- Mingyu Lu

- Nayoon Kim

- Nathan White

- Su-In Lee

Ian Covert, Wei Qiu, Mingyu Lu, Nayoon Kim, Nathan White, Su-In Lee. "Learning to Maximize Mutual Information for Dynamic Feature Selection." ICML, 2023.