Add performance instrumentation to ongoing metrics #84

Comments

Thank you for this @jessicaschilling 🙏

I’m not the best one to advise on the best providers in this space, but just wanted to call out that Countly does offer performance metrics as part of our existing enterprise edition contract: https://support.count.ly/hc/en-us/articles/900000819806-Performance-Monitoring

That is indeed a good point.

Waiting to see results only after users have already been impacted isn't ideal.

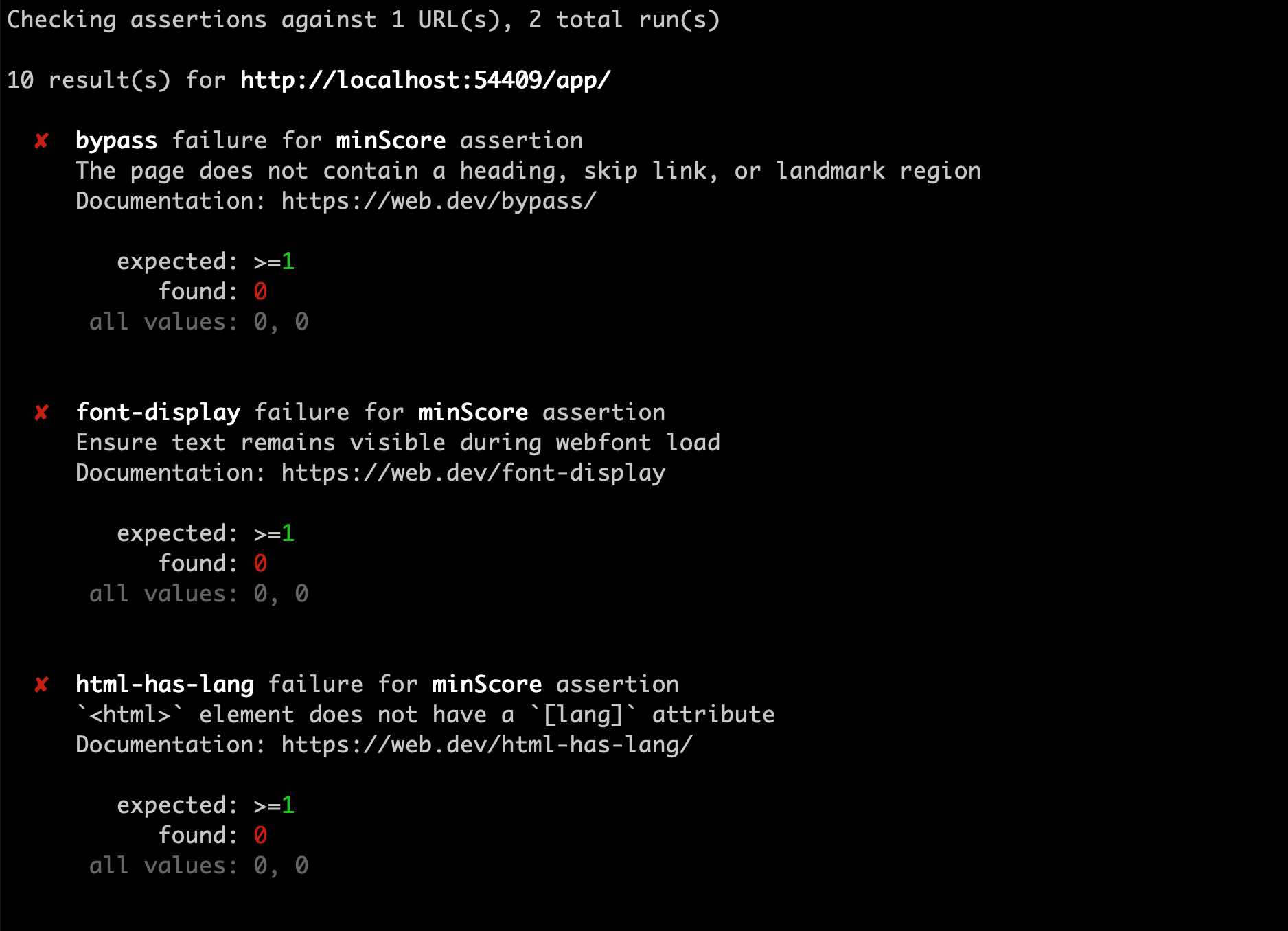

After searching for a bit I realized most services (including SpeedCurve) actually use Google's Lighthouse under the hood, so I propose using Lighthouse directly via a GitHub Action: https://github.com/GoogleChrome/lighthouse-ci

It will not provide historical data (unless we set up a server to store the data), but it flags when specific metrics go over a threshold, and we can access the full Lighthouse report, which is what we need. An example of how it would work can be seen here: https://github.com/GoogleChrome/lighthouse-ci#features

I will address another important performance issue next: images.

UPDATE: running Lighthouse in GitHub Actions rather than directly on Fleek avoids increasing the preview deployment time, even though we will be building the app twice (once on Fleek and once on GitHub Actions).
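To make the "flags when specific metrics go over a threshold" idea concrete, here is a minimal sketch in Python that checks a Lighthouse JSON report against a few budgets. It is only an illustration and not how Lighthouse CI itself is configured (that has its own assertion settings). The budget values and the names `BUDGETS`, `over_budget` and `check_report_file` are made up for this example; the audit ids and the `numericValue` field follow the shape of Lighthouse's JSON output.

```python
import json
from typing import Any

# Hypothetical budgets: Lighthouse audit id -> maximum allowed numericValue.
# Timings are in milliseconds, byte weight is in bytes.
BUDGETS: dict[str, float] = {
    "largest-contentful-paint": 2500,
    "total-blocking-time": 300,
    "total-byte-weight": 1_500_000,
}


def over_budget(report: dict[str, Any], budgets: dict[str, float] = BUDGETS) -> list[str]:
    """Return one message per audit whose numericValue exceeds its budget."""
    failures = []
    audits = report.get("audits", {})
    for audit_id, limit in budgets.items():
        value = audits.get(audit_id, {}).get("numericValue")
        # Audits missing from the report are skipped rather than treated as failures.
        if value is not None and value > limit:
            failures.append(f"{audit_id}: {value:.0f} > {limit:.0f}")
    return failures


def check_report_file(path: str) -> list[str]:
    """Load a Lighthouse JSON report from disk and check it against BUDGETS."""
    with open(path, encoding="utf-8") as fh:
        return over_budget(json.load(fh))
```

A CI step could call `check_report_file` on the report produced for a PR and fail the job when the returned list is non-empty.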

@zebateira @terichadbourne This sounds like a great starting point to help us evaluate how we want to use this info in the longer term - even if we don't have access to the historical data, we have an idea of how an individual PR might negatively impact performance. That said, we need to defer to @atopal and @cwaring to make a decision on whether this is an adequate next step. Can you please weigh in?

The method suggested above for tracking the website's performance is a good starting point, but we can already predict one issue that will likely keep occurring frequently: image sizes. Thank you @cwaring for the input 🙏

Compressing images

In order to reduce image payloads, we should have an automatic process in place to compress and convert all images when they are added to a blog post. We do have the image cropper, which we could also use to compress the image, but since using it is not mandatory when creating a post in Forestry, it can't tackle this issue completely.

A common solution is to add this compression to the build process, but this is unnecessary processing, since there is no need to keep the original versions of the images in the repo. A better solution might be to hook into the commit and compress the images automatically before they are committed, but I couldn't find any information in the docs on how to do this with Forestry.

I don't see an easy, straightforward solution, but here are some other ideas:

@zebateira I'm afraid requiring content authors to expand their workflow isn't really a viable option. A GitHub action also has the benefit of being able to be reused on other PL sites independent of Forestry, which is appealing. Any estimate of how long creating that might take?

There are some already built so we can probably find a good one and use it (example: Image Actions).
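Before settling on an action, it may help to see how many images in the repo are actually over budget. Below is a rough sketch using only the Python standard library; it only reports oversized files and does not compress anything. The 200 kB default, the extension list and the function name `oversized_images` are assumptions for illustration, not project decisions.

```python
import os

# Assumed set of image extensions worth checking.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif", ".webp"}


def oversized_images(root: str, max_bytes: int = 200_000) -> list[tuple[str, int]]:
    """Return (path, size_in_bytes) for images under root larger than max_bytes, largest first."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Compare extensions case-insensitively so "photo.PNG" is included.
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS:
                path = os.path.join(dirpath, name)
                size = os.path.getsize(path)
                if size > max_bytes:
                    found.append((path, size))
    return sorted(found, key=lambda item: item[1], reverse=True)
```

Running this against the directory that holds blog post images would give a quick list of the files a compression action would most need to touch.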

I'm fine with the GitHub Actions solution, but what's the problem with keeping the original image version in the repo?

@zebateira and I had a quick chat about all this the other day. A few notes to expand my thoughts:

With this in mind, please ensure any client-side metric collection is non-blocking and passive, so that if a user has an ad blocker enabled (e.g. Brave) or is viewing the site offline, everything still works smoothly.

🖼️ On images:

Here are the Lighthouse metrics for the currently deployed blog.ipfs.io

This will serve as a very rudimentary basis of comparison to the new version.

@zebateira - I see you've already got an issue to add this to IPFS docs, and I added ipfs/ipfs-website#9 for the replatformed website. Are you OK closing this issue?

@jessicaschilling yes 👍

Per @atopal's request, we need to add some form of site performance instrumentation to (at minimum) the primary IPFS websites going forward:

Kicking this work off in this repo, since we're already doing a lot of pre-launch work on the blog.

@zebateira: Can you please research potential approaches? Some notes:

@atopal: Please edit this comment or add additional comments for any other specs or notes. I'm tentatively labeling this as P2, on the assumption that it's acceptable to work on this post-blog-launch as long as payload problems in #41 are resolved for launch — but please revise if you feel this is needed sooner.

cc @terichadbourne @johnnymatthews @yusefnapora @johndmulhausen for context and/or discussion.
