
Nice! Would you release your implementation of the loss function? #2

Comments

|

Hi! |

|

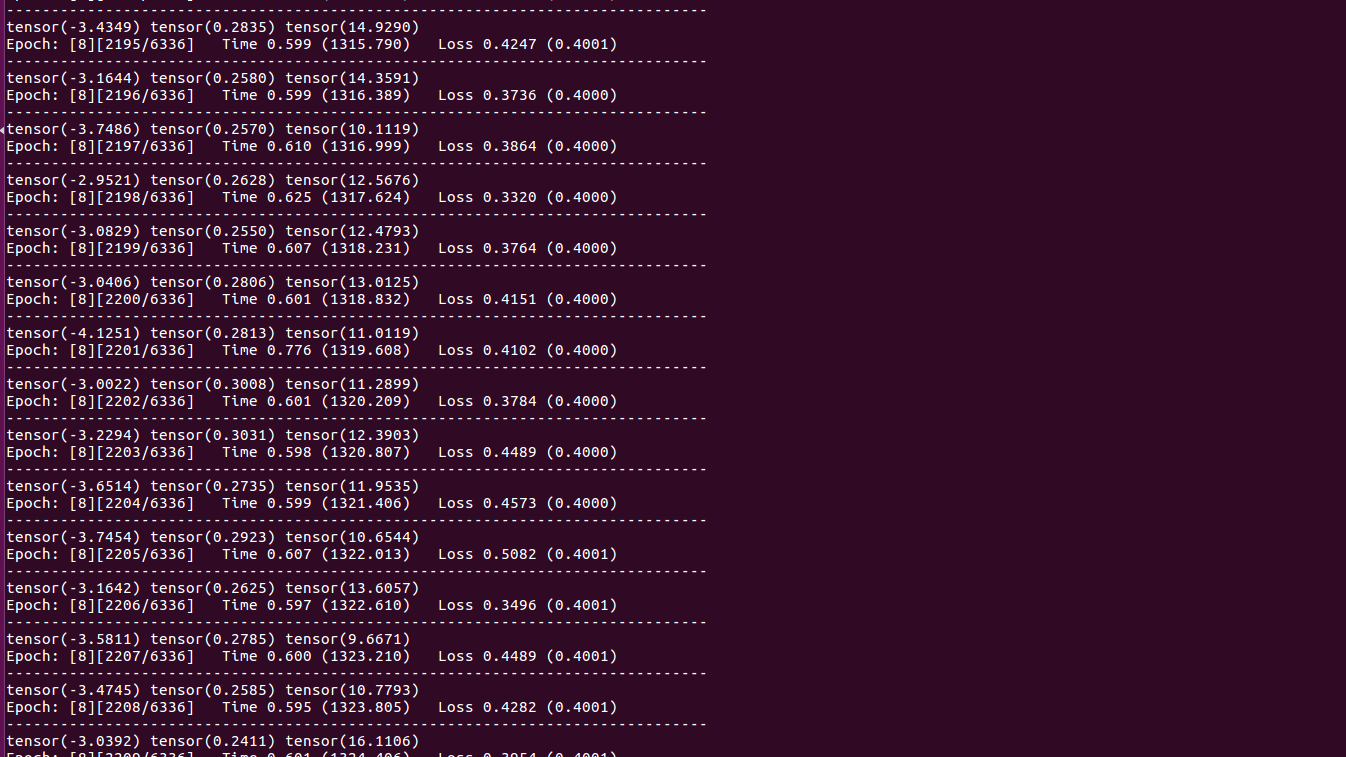

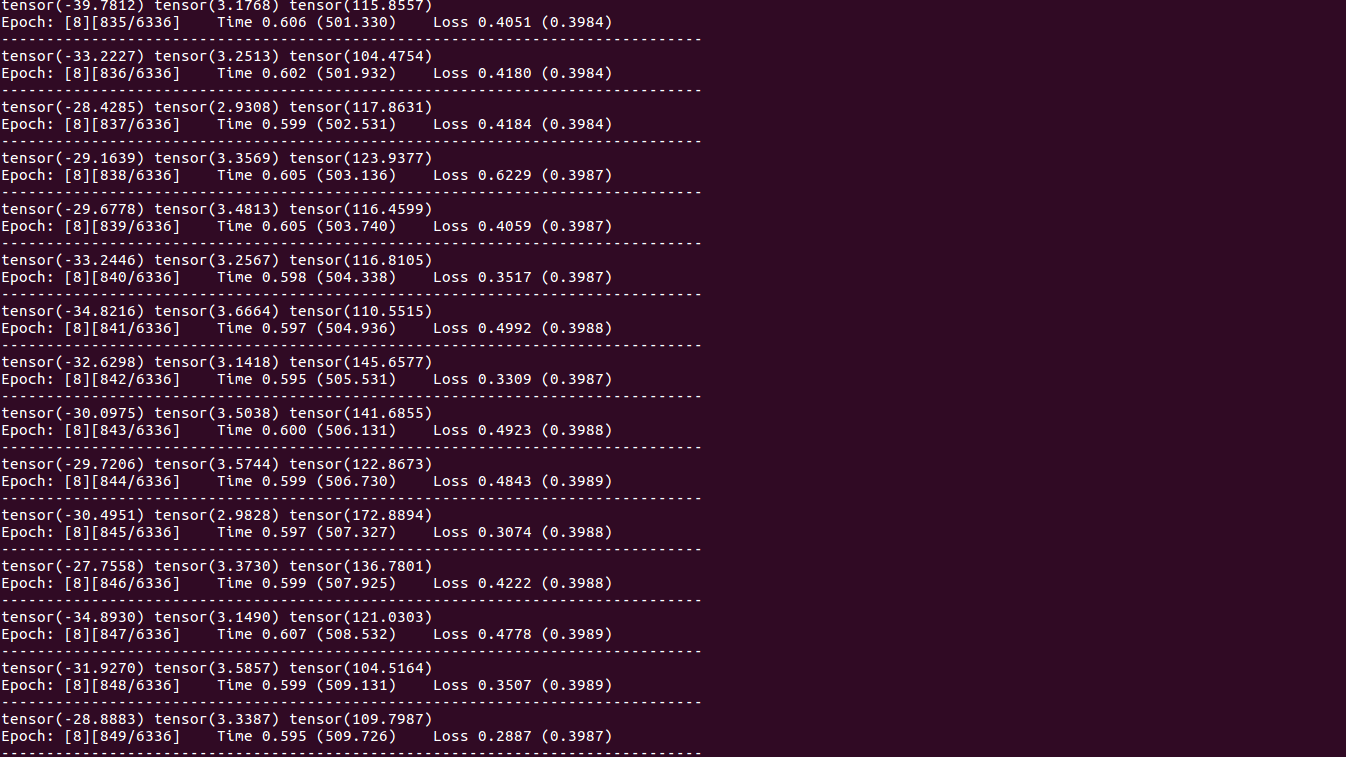

@dvdhfnr I implemented the loss by myself. I found that the range of prediction is unstable. It can be -3 -- 8, -10 -- 30, -200 -- 1000, in differernt training attempts. I wonder if your met the problem when you trained the network. Thank you very much. |

No, I did not run into this problem. Did you scale/shift the target depths to [0, 10], as described in the paper?

@dvdhfnr I train my model on NYUDepthV2 and scale/shift the depth values by … How did you scale/shift the depth maps?

To be more precise: the predictions resemble the target inverse depths up to a scale and shift.
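Since the predictions only match the target inverse depths up to a scale and shift, comparing them directly (for example, for evaluation or visualization) first requires estimating that scale s and shift t. Below is a minimal NumPy sketch, assuming `pred`, `target`, and a boolean `mask` of valid pixels share one shape. `align_scale_shift` is a hypothetical name, and the closed-form solve of the 2x2 least-squares normal equations is a standard technique, not code taken from this project.

```python
import numpy as np


def align_scale_shift(pred, target, mask):
    """Return (s, t) minimising sum over mask of (s * pred + t - target)^2."""
    p = pred[mask].astype(np.float64)
    g = target[mask].astype(np.float64)
    # Normal equations: [[sum p^2, sum p], [sum p, n]] @ [s, t] = [sum p*g, sum g]
    a00 = np.sum(p * p)
    a01 = np.sum(p)
    a11 = float(p.size)
    b0 = np.sum(p * g)
    b1 = np.sum(g)
    det = a00 * a11 - a01 * a01
    if det <= 1e-12 * a00 * a11:
        # Degenerate case (e.g. constant prediction): best fit is s = 0, t = mean.
        return 0.0, float(np.mean(g))
    s = (a11 * b0 - a01 * b1) / det
    t = (a00 * b1 - a01 * b0) / det
    return float(s), float(t)
```

Applying `s * pred + t` then puts the prediction on the same scale as the normalized target inverse depth, whatever range the raw output happens to have.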

As @dvdhfnr mentioned, this is by construction: the value of the loss is independent of the output scale, so the output range depends on the initialization. If you need a fixed output range, you could try adding a (scaled) sigmoid to the output activations. However, this might change training behavior, and you will likely need a sensible initialization.
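The invariance described above can be checked numerically. The sketch below assumes a loss that fits s and t per image by least squares and then takes half the mean squared residual; it is one scale-and-shift-invariant loss of the kind discussed here, not the project's training code. `ssi_mse_loss` and `scaled_sigmoid` are hypothetical names, and NumPy is used only for illustration: an actual training loss would be written in a framework with autograd, such as PyTorch.

```python
import numpy as np


def ssi_mse_loss(pred, target, mask):
    """Fit s, t by least squares, then return 0.5 * mean((s*pred + t - target)^2)."""
    p = pred[mask].astype(np.float64)
    g = target[mask].astype(np.float64)
    # Design matrix [p, 1] so that A @ [s, t] = s * p + t.
    A = np.stack([p, np.ones_like(p)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, g, rcond=None)
    residual = s * p + t - g
    return 0.5 * np.mean(residual ** 2)


def scaled_sigmoid(x, max_val=1.0):
    """Squash raw network outputs into the interval (0, max_val)."""
    return max_val / (1.0 + np.exp(-x))
```

Because s and t are re-fit for every image, evaluating `ssi_mse_loss(3.0 * pred - 7.0, target, mask)` gives the same value as `ssi_mse_loss(pred, target, mask)`, so nothing in the loss pins down the raw output range. Wrapping the output in `scaled_sigmoid` bounds it, with the training-behavior caveat mentioned above.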

Hi, this link is broken. Could you share a new one?

@dvdhfnr Hi, the paper gives the normalization range as [0, 1], so which range is correct? Thanks.

For the latest version of the paper we used the range [0, 1] (https://arxiv.org/pdf/1907.01341v3.pdf, Page 7). #2 (comment) was referring to an earlier version (https://arxiv.org/pdf/1907.01341v1.pdf, Page 6).

@dvdhfnr Hi, did you use this loss function for MiDaS v3.0 as well?

@dvdhfnr @ranftlr Hi,

Is my understanding right? If not, could you please tell us how you implement "For all datasets, we shift and scale the ground-truth inverse depth to the range [0, 1]."? Thanks.
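One possible reading of the quoted sentence, as a sketch: convert metric depth to inverse depth on valid pixels, then min-max scale it per image to [lo, hi]. Whether the normalization is per image or per dataset, and whether it uses the raw minimum and maximum or something more robust, is an assumption here and is not stated in this thread. Treating depth values of 0 as missing is also an assumption, and `normalize_inverse_depth` is a hypothetical helper. Passing `hi=10.0` gives the [0, 10] range from the earlier paper version.

```python
import numpy as np


def normalize_inverse_depth(depth, lo=0.0, hi=1.0, eps=1e-8):
    """Map valid depths (> 0) to inverse depth, min-max scaled to [lo, hi].

    Returns the scaled map (0 at invalid pixels) and the validity mask.
    """
    depth = np.asarray(depth, dtype=np.float64)
    valid = depth > 0  # assumption: missing depth is stored as 0
    scaled = np.zeros_like(depth)
    if not valid.any():
        return scaled, valid
    inv = 1.0 / depth[valid]
    vmin, vmax = inv.min(), inv.max()
    # Guard against division by zero for a constant inverse-depth map.
    scaled[valid] = lo + (hi - lo) * (inv - vmin) / max(vmax - vmin, eps)
    return scaled, valid
```

The validity mask should be passed on to the loss so that invalid pixels do not take part in the scale and shift fit.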

@Twilight89

I'm working on a related article that uses your great work and would like to use your loss function.

Thanks in advance!
